All benchmarks are platform-independent: they run on any computing device with appropriate hardware. CuPy tests require an NVIDIA GPU with the CUDA toolkit installed.

Install the Python prerequisites:

pip install -r requirements.txt

C and Fortran benchmarks require building first using CMake.

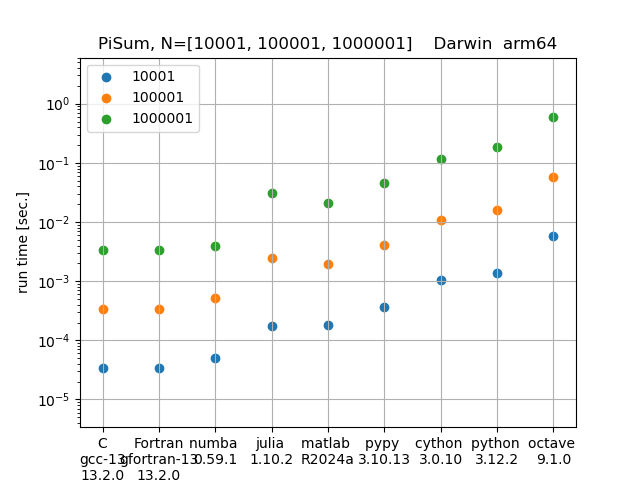

cmake --workflow --preset default

Iterative benchmarks, here using the pisum algorithm:

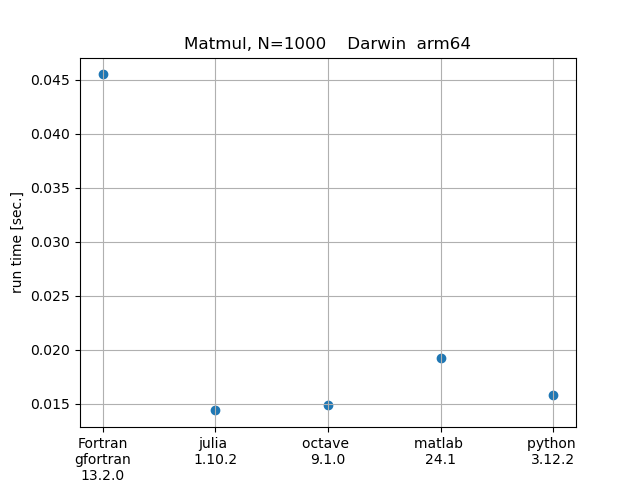

python Pisum.py

Matrix Multiplication benchmarks:

python Matmul.pyFor Python,

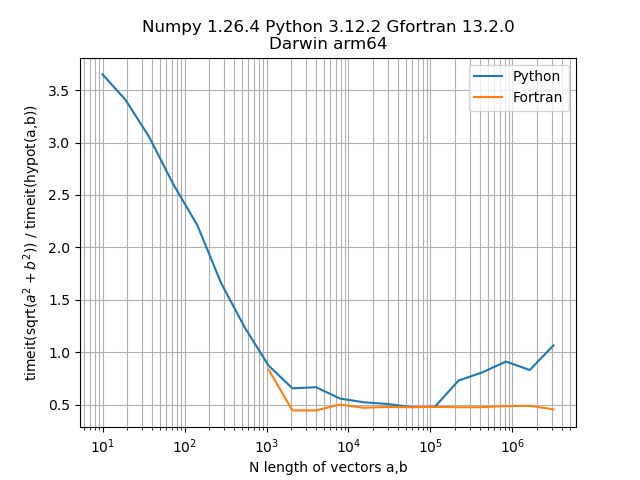

numpy.hypot()

is faster up to about a hundred elements, then

numpy.sqrt(x2 + y2)

becomes slightly faster.
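A rough way to check this crossover on your own machine is sketched below. It is not the repository's Hypot.py; the function name `time_hypot_variants`, the array sizes, and the repeat counts are illustrative assumptions, and the timings will vary by hardware and NumPy build.

```python
import timeit

import numpy as np


def time_hypot_variants(n, repeat=5, number=100):
    """Return best-of-`repeat` seconds per call for (hypot, sqrt) at array length n."""
    rng = np.random.default_rng(0)  # fixed seed so both variants see the same data
    x = rng.random(n)
    y = rng.random(n)
    t_hypot = min(timeit.repeat(lambda: np.hypot(x, y), repeat=repeat, number=number)) / number
    t_sqrt = min(timeit.repeat(lambda: np.sqrt(x**2 + y**2), repeat=repeat, number=number)) / number
    return t_hypot, t_sqrt


if __name__ == "__main__":
    # Array lengths chosen to straddle the "about a hundred elements" region.
    for n in (10, 100, 1000, 100_000):
        t_hypot, t_sqrt = time_hypot_variants(n)
        print(f"n={n:>7}: hypot {t_hypot:.2e} s, sqrt(x**2 + y**2) {t_sqrt:.2e} s")
```

Taking the minimum over several repeats reduces noise from other processes, which matters most at the small array sizes where the two variants are close.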

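The benefit of hypot() is that it does not overflow for large arguments: the intermediate sum of squares in sqrt(x**2 + y**2) exceeds REALMAX once the arguments approach sqrt(REALMAX), about 1.3e154 for 64-bit floats.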
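To illustrate how overflow can be avoided, here is a minimal sketch of the textbook scaling approach. This is an assumption for illustration only: it is not necessarily what math.hypot or numpy.hypot do internally, the name `scaled_hypot` is hypothetical, and the sketch does not handle infinities or NaN the way a library routine would.

```python
import math


def scaled_hypot(x, y):
    """Compute sqrt(x**2 + y**2) without squaring large values directly."""
    x, y = abs(x), abs(y)
    big, small = max(x, y), min(x, y)
    if big == 0.0:
        return 0.0  # both inputs are zero; avoid dividing by zero below
    ratio = small / big  # always in [0, 1], so ratio * ratio cannot overflow
    return big * math.sqrt(1.0 + ratio * ratio)
```

Because the larger magnitude is factored out before squaring, the result only overflows when the true hypotenuse itself is not representable.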

For example, in Python:

from math import sqrt, hypot

a = 1e154; hypot(a, a); sqrt(a**2 + a**2)

1.414213562373095e+154

inf

For Fortran, with the Gfortran compiler, sqrt(x**2 + y**2) is slightly faster than hypot(x,y) across the tested array sizes.

Execute the Hypot speed test by:

python Hypot.py

Julia binaries are often downloaded to a particular directory.

Python doesn't pick up .bash_aliases, which is commonly used to point to Julia, so an alias alone does not make Julia visible to programs launched from Python.
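One way to work around this from Python is to look up the executable explicitly rather than relying on a shell alias. The sketch below is an illustration, not code from this repository; the function name `find_julia` and the `extra_dirs` parameter are hypothetical.

```python
import os
import shutil


def find_julia(extra_dirs=()):
    """Return the path to a julia executable, or None if it cannot be found."""
    exe = shutil.which("julia")  # searches PATH; shell aliases are not consulted
    if exe:
        return exe
    for d in extra_dirs:
        # shutil.which accepts a custom search path; here a single directory
        exe = shutil.which("julia", path=os.path.expanduser(str(d)))
        if exe:
            return exe
    return None
```

For example, `find_julia(["~/julia/bin"])` would also check a hypothetical download directory when Julia is not on PATH.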

https://software.intel.com/en-us/articles/intel-mkl-link-line-advisor

Give CMake a hint about where your MKL libraries are located. For example:

MKLROOT=/opt/intel/mkl cmake ..

This option can be combined with FC.
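If you drive the build from a Python script, the same variables can be passed through the process environment. This is a minimal sketch under assumptions: the helper name `cmake_env` is hypothetical, and the compiler name in the usage comment is only an example value for FC.

```python
import os


def cmake_env(mklroot="/opt/intel/mkl", fc=None, base_env=None):
    """Return a copy of the environment with MKLROOT (and optionally FC) set."""
    env = dict(os.environ if base_env is None else base_env)
    env["MKLROOT"] = mklroot
    if fc is not None:
        env["FC"] = fc  # Fortran compiler hint read by CMake
    return env


# Usage from a build directory (not executed here):
#   import subprocess
#   subprocess.run(["cmake", ".."], env=cmake_env(fc="ifort"), check=True)
```

Copying the environment instead of modifying os.environ keeps the setting local to the one CMake invocation.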

You can set this environment variable permanently for your convenience (normally you always want to use MKL) by adding this line to your ~/.bashrc:

export MKLROOT=/opt/intel/mkl