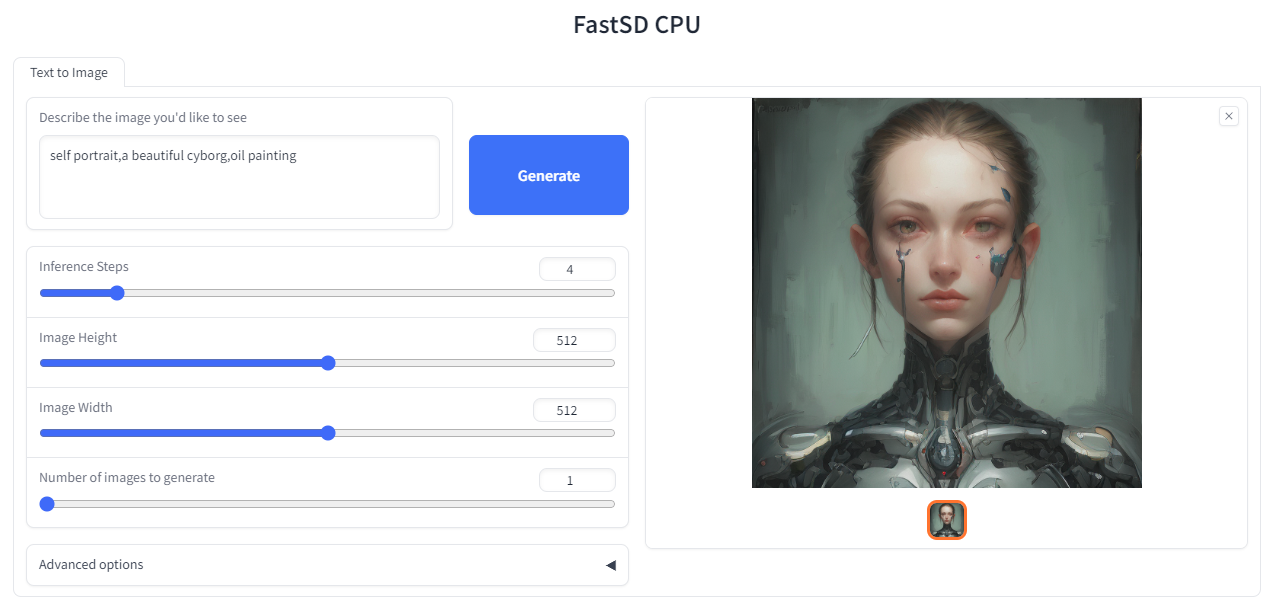

FastSD CPU is a faster version of Stable Diffusion on CPU, based on Latent Consistency Models. The following interfaces are available:

- Desktop GUI (Qt)

- WebUI

- CLI (command-line interface)

Using OpenVINO, it took 10 seconds to create a single 512x512 image on a Core i7-12700.

- Windows

- Linux

- Mac

- Raspberry Pi 4

- Supports 256, 512, and 768 image sizes

- Supports Windows and Linux

- Saves images and the diffusion settings used to generate them

- Settings to control steps, guidance, and seed

- Added safety checker setting

- Maximum inference steps increased to 25

- Added OpenVINO support

- Added web UI

- Added command-line interface (CLI)

- Fixed OpenVINO image reproducibility issue

- Fixed OpenVINO high RAM usage, thanks deinferno

- Added multiple image generation support

- Application settings

- Added Tiny Auto Encoder for SD (TAESD) support, 1.4x speed boost (fast, moderate quality)

- Safety checker disabled by default

- Added SDXL, SSD-1B (1B) LCM models

- Added LCM-LoRA support, works well for fine-tuned Stable Diffusion 1.5 or SDXL models

- Added negative prompt support in LCM-LoRA mode

- LCM-LoRA models can be configured using a text configuration file

- Added support for custom models for OpenVINO (LCM-LoRA baked)

- OpenVINO models now support negative prompts (set guidance > 1.0)

- Real-time inference support, generates images while you type (experimental)

Thanks deinferno for the OpenVINO model contribution. We can get a 2x speed improvement when using OpenVINO. Thanks Disty0 for the conversion script.

We first create an LCM-LoRA baked-in model, replace the scheduler with LCM, and then convert it into an OpenVINO model. For more details, check the LCM OpenVINO Converter; you can use this tool to convert any fine-tuned Stable Diffusion 1.5 model to OpenVINO.
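The three steps described above (bake in the LCM-LoRA, swap the scheduler, convert to OpenVINO) can be sketched with the Hugging Face `diffusers` and `optimum-intel` libraries. This is only an illustration under stated assumptions and not the project's actual converter script: the function name `bake_lcm_lora_to_openvino` and the output folder names are hypothetical, and the default model IDs are simply the ones listed elsewhere in this README.

```python
from pathlib import Path


def bake_lcm_lora_to_openvino(
    base_model_id: str = "runwayml/stable-diffusion-v1-5",
    lcm_lora_id: str = "latent-consistency/lcm-lora-sdv1-5",
    output_dir: str = "lcm-baked-openvino",  # hypothetical output folder
) -> Path:
    """Hypothetical helper: fuse LCM-LoRA, swap the scheduler, export to OpenVINO."""
    # Heavy dependencies are imported lazily so this module loads without them.
    from diffusers import LCMScheduler, StableDiffusionPipeline
    from optimum.intel import OVStableDiffusionPipeline

    baked_dir = Path(output_dir) / "baked"
    openvino_dir = Path(output_dir) / "openvino"

    # Step 1: load the base model and bake (fuse) the LCM-LoRA weights into it.
    pipe = StableDiffusionPipeline.from_pretrained(base_model_id)
    pipe.load_lora_weights(lcm_lora_id)
    pipe.fuse_lora()
    pipe.unload_lora_weights()

    # Step 2: replace the scheduler with the LCM scheduler.
    pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)
    pipe.save_pretrained(baked_dir)

    # Step 3: convert the baked model to OpenVINO format and save it.
    ov_pipe = OVStableDiffusionPipeline.from_pretrained(baked_dir, export=True)
    ov_pipe.save_pretrained(openvino_dir)
    return openvino_dir
```

Calling the function downloads the models and needs both libraries installed, so it is best run once, offline from the app.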

Now we can generate images from text in near real time using FastSD CPU.

CPU (OpenVINO)

Near real-time inference on CPU using OpenVINO: run the start-realtime.bat batch file and open the link in a browser (resolution: 256x256, latency: 2.3s on Intel Core i7).

Colab (GPU)

You can use the Colab to generate real-time images (resolution: 512x512, latency: 500ms on Tesla T4).

Watch YouTube video :

Fast SD supports LCM models and LCM-LoRA models.

The following LCM models are supported:

- LCM_Dreamshaper_v7 - https://huggingface.co/SimianLuo/LCM_Dreamshaper_v7 by Simian Luo

- SSD-1B - LCM distilled version of segmind/SSD-1B

- StableDiffusion XL - LCM distilled version of stable-diffusion-xl-base-1.0

These are LCM-LoRA baked in models.

- LCM-dreamshaper-v7-openvino by Rupesh

- LCM_SoteMix by Disty0

- lcm-lora-sdv1-5 - Distilled consistency adapter for runwayml/stable-diffusion-v1-5

- lcm-lora-sdxl - Distilled consistency adapter for stable-diffusion-xl-base-1.0

- lcm-lora-ssd-1b - Distilled consistency adapter for segmind/SSD-1B

❗ Currently no support for OpenVINO LCM-LoRA models.

To add a new model, follow these steps:

For example, we will add wavymulder/collage-diffusion; you can use fine-tuned Stable Diffusion 1.5, SDXL, or SSD-1B models.

- Open the configs/stable-diffusion-models.txt file in a text editor.

- Add the model ID wavymulder/collage-diffusion or a locally cloned path.

The updated file is shown below:

Fictiverse/Stable_Diffusion_PaperCut_Model

stabilityai/stable-diffusion-xl-base-1.0

runwayml/stable-diffusion-v1-5

segmind/SSD-1B

stablediffusionapi/anything-v5

wavymulder/collage-diffusion

Similarly, we can update the configs/lcm-lora-models.txt file with an LCM-LoRA ID.
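The manual edit described above can also be scripted. The following is a minimal sketch, assuming the config files hold one model ID or path per line as in the listing above; the helper name `add_model_id` is hypothetical and not part of FastSD CPU.

```python
from pathlib import Path


def add_model_id(config_path: Path, model_id: str) -> bool:
    """Append model_id to the config file unless it is already listed.

    Assumes one entry per line. Blank lines are dropped when the file
    is rewritten. Returns True if the file was changed.
    """
    entries = []
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8")
        entries = [line.strip() for line in text.splitlines() if line.strip()]
    if model_id in entries:
        return False
    entries.append(model_id)
    config_path.write_text("\n".join(entries) + "\n", encoding="utf-8")
    return True


if __name__ == "__main__":
    changed = add_model_id(
        Path("configs/stable-diffusion-models.txt"),
        "wavymulder/collage-diffusion",
    )
    print("added" if changed else "already present")
```

The same helper works for configs/lcm-lora-models.txt by passing that path and an LCM-LoRA ID instead.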

Please follow these steps to run LCM-LoRA models offline:

- In the settings, ensure that the "Use locally cached model" setting is ticked.

- Download the model, for example latent-consistency/lcm-lora-sdv1-5. Run the following commands:

git lfs install

git clone https://huggingface.co/latent-consistency/lcm-lora-sdv1-5

Copy the cloned model folder path, for example "D:\demo\lcm-lora-sdv1-5", and update the configs/lcm-lora-models.txt file as shown below:

D:\demo\lcm-lora-sdv1-5

latent-consistency/lcm-lora-sdxl

latent-consistency/lcm-lora-ssd-1b

- Open the app and select the newly added local folder in the combo box menu.

- That's all!
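Before going offline, it can help to confirm that the local entries in the config file really point to cloned folders. The sketch below assumes that any entry which is not an existing directory is a Hugging Face model ID; that rule and the helper name `split_local_and_hub` are assumptions made for this example, not the app's own logic.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def split_local_and_hub(entries: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Separate entries that exist as local folders from the remaining IDs."""
    local: List[str] = []
    hub: List[str] = []
    for entry in entries:
        entry = entry.strip()
        if not entry:
            continue  # skip blank lines
        if Path(entry).is_dir():
            local.append(entry)
        else:
            hub.append(entry)
    return local, hub


if __name__ == "__main__":
    config = Path("configs/lcm-lora-models.txt")
    if config.exists():
        local, hub = split_local_and_hub(config.read_text(encoding="utf-8").splitlines())
        print("Local folders:", local)
        print("Not found locally (treated as model IDs):", hub)
```

An entry that was meant to be a local path but shows up in the second list usually indicates a typo in the path.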

❗ You must have a working Python installation (recommended: Python 3.10 or 3.11).

Clone/download this repo or download a release.

- Double-click install.bat (it will take some time to install, depending on your internet speed).

You can run in desktop GUI mode or web UI mode.

- To start the desktop GUI, double-click start.bat

- To start the web UI, double-click start-webui.bat

Ensure that you have Python 3.8 or higher version installed.
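One way to check the interpreter version before installing is a short Python script. This is a minimal sketch; the helper name `meets_minimum` is hypothetical and the install scripts may perform their own checks.

```python
import sys


def meets_minimum(version=None, minimum=(3, 8)):
    """Return True if the (major, minor) version is at least `minimum`."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= tuple(minimum)


if __name__ == "__main__":
    current = sys.version.split()[0]
    status = "OK" if meets_minimum() else "too old, 3.8 or higher is required"
    print("Python", current, status)
```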

- Clone/download this repo

- In the terminal, enter the fastsdcpu directory

- Run the following commands

chmod +x install.sh

./install.sh

To start the desktop GUI, run:

./start.sh

To start the web UI, run:

./start-webui.sh

Ensure that you have Python 3.8 or higher version installed.

- Clone/download this repo

- In the terminal, enter the fastsdcpu directory

- Run the following commands

chmod +x install-mac.sh

./install-mac.sh

To start the desktop GUI, run:

./start.sh

To start the web UI, run:

./start-webui.sh

Thanks Autantpourmoi for Mac testing.

❗We don't support OpenVINO on Mac.

If you want to increase image generation speed on Mac (M1/M2 chip), try this:

Run export DEVICE=mps and then start the app with start.sh
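The DEVICE variable is an ordinary environment variable, so a Python program can read it at startup. The sketch below shows one plausible way to do that, assuming the variable is read from the environment with CPU as the fallback; the helper name `resolve_device` and the fallback value are assumptions, and the app's actual handling may differ.

```python
import os


def resolve_device(environ=None, default="cpu"):
    """Return the device named by DEVICE, or `default` if it is unset or empty."""
    env = os.environ if environ is None else environ
    value = env.get("DEVICE", "").strip().lower()
    return value or default


if __name__ == "__main__":
    print("Selected device:", resolve_device())
```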

Due to the limitations of using CPU/OpenVINO inside Colab, we use a GPU with Colab.

Open the terminal and enter the fastsdcpu folder. Activate the virtual environment using the command:

On Windows (suppose FastSD CPU is available in the directory "D:\fastsdcpu"):

D:\fastsdcpu\env\Scripts\activate.bat

On Linux or Mac:

source env/bin/activate

Start the CLI with src/app.py -h to list the available options.
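The activation command differs only in the path layout of the virtual environment. The following sketch builds the command string for either platform from the install directory; the helper name `activation_command` is hypothetical and is meant only to make the two layouts explicit.

```python
from pathlib import PurePosixPath, PureWindowsPath


def activation_command(install_dir: str, windows: bool) -> str:
    """Build the virtual environment activation command for the given platform."""
    if windows:
        # Windows layout: <install_dir>\env\Scripts\activate.bat
        return str(PureWindowsPath(install_dir) / "env" / "Scripts" / "activate.bat")
    # POSIX layout: source <install_dir>/env/bin/activate
    return "source " + str(PurePosixPath(install_dir) / "env" / "bin" / "activate")


if __name__ == "__main__":
    print(activation_command("D:\\fastsdcpu", windows=True))
    print(activation_command(".", windows=False))
```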

Thanks WGNW_MGM for Raspberry Pi 4 testing. FastSD CPU worked without problems. System configuration: Raspberry Pi 4 with 4GB RAM and 8GB of swap memory.

The fastsdcpu project is available as open source under the terms of the MIT license.