This repository presents the results of the research described in the paper *Studying the Role of Named Entities for Content Preservation in Text Style Transfer*.

SGDD-TST (Schema-Guided Dialogue Dataset for Text Style Transfer) is a dataset for evaluating the quality of content similarity measures for text style transfer in the domain of personal plans. The original texts were taken from the Schema-Guided Dialogue Dataset and paraphrased by a T5-based model trained on the GYAFC formality dataset. The resulting sentence pairs were then annotated for content similarity by crowd workers on Yandex.Toloka.

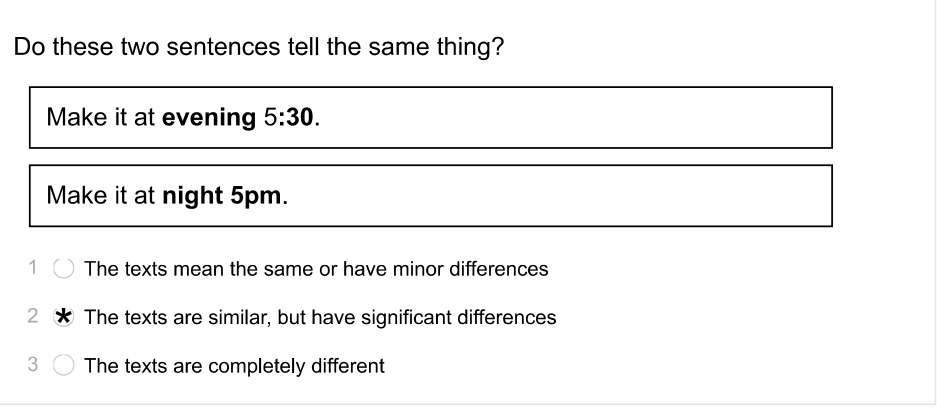

Fig.1 An example of the crowdsourcing task

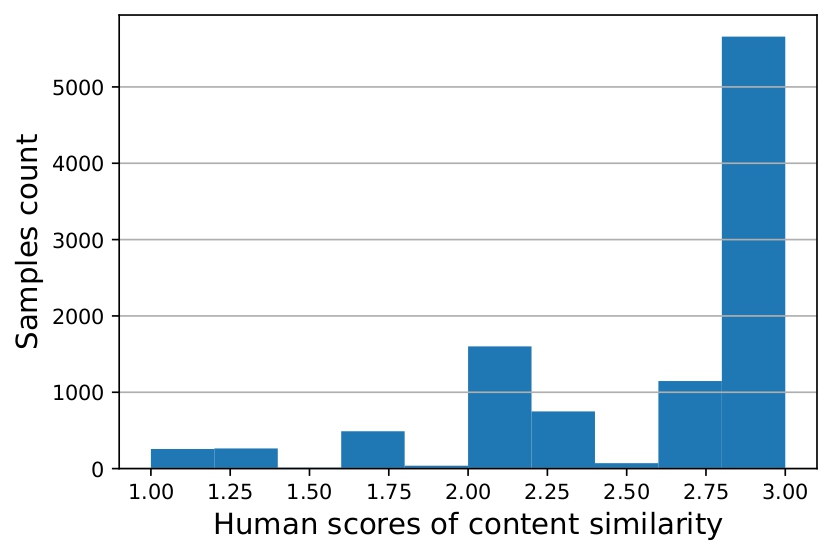

The dataset consists of 10,287 samples. The inter-annotator agreement (Krippendorff's alpha) is 0.64.
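For reference, Krippendorff's alpha is defined as alpha = 1 - D_o / D_e, i.e. one minus the ratio of observed to expected disagreement. The sketch below computes it in plain Python under our own assumptions: each item's annotations are given as a list (with `None` for annotators who skipped the item), and the distance is interval (squared difference) by default, with a nominal alternative. The README does not state which variant produced the 0.64 score, so the function names and the choice of distance are illustrative, not the authors' code.

```python
from collections import Counter
from itertools import permutations
from typing import Callable, Hashable, Optional, Sequence


def interval_delta(a: float, b: float) -> float:
    """Squared difference: the distance used for interval-scale labels."""
    return float((a - b) ** 2)


def nominal_delta(a: Hashable, b: Hashable) -> float:
    """0 for identical labels, 1 otherwise: the distance for nominal labels."""
    return 0.0 if a == b else 1.0


def krippendorff_alpha(
    units: Sequence[Sequence[Optional[float]]],
    delta: Callable[[float, float], float] = interval_delta,
) -> float:
    """Krippendorff's alpha = 1 - D_o / D_e.

    units[u] lists the labels that different annotators gave to item u;
    None marks a missing annotation. delta must return 0 for equal labels.
    """
    # Only "pairable" values count: items with at least two annotations.
    pairable = [[v for v in unit if v is not None] for unit in units]
    pairable = [vals for vals in pairable if len(vals) >= 2]
    n = sum(len(vals) for vals in pairable)
    if n < 2:
        raise ValueError("need at least one item with two or more annotations")

    # Observed disagreement: ordered within-item pairs, each item weighted by 1 / (m_u - 1).
    d_o = sum(
        sum(delta(a, b) for a, b in permutations(vals, 2)) / (len(vals) - 1)
        for vals in pairable
    ) / n

    # Expected disagreement: ordered pairs of all pairable values, computed from
    # value counts (pairs of equal values contribute delta = 0 anyway).
    counts = Counter(v for vals in pairable for v in vals)
    d_e = sum(
        n_c * n_k * delta(c, k)
        for c, n_c in counts.items()
        for k, n_k in counts.items()
    ) / (n * (n - 1))

    if d_e == 0:
        return 1.0  # convention: all pairable values identical -> perfect agreement
    return 1.0 - d_o / d_e
```

Each inner list holds one item's labels from several annotators; for binary or categorical labels, pass `nominal_delta` as the second argument. Computing the expected disagreement from value counts keeps the cost proportional to the number of distinct labels rather than to the square of the dataset size.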

Fig.2 The distribution of the similarity scores in the collected dataset

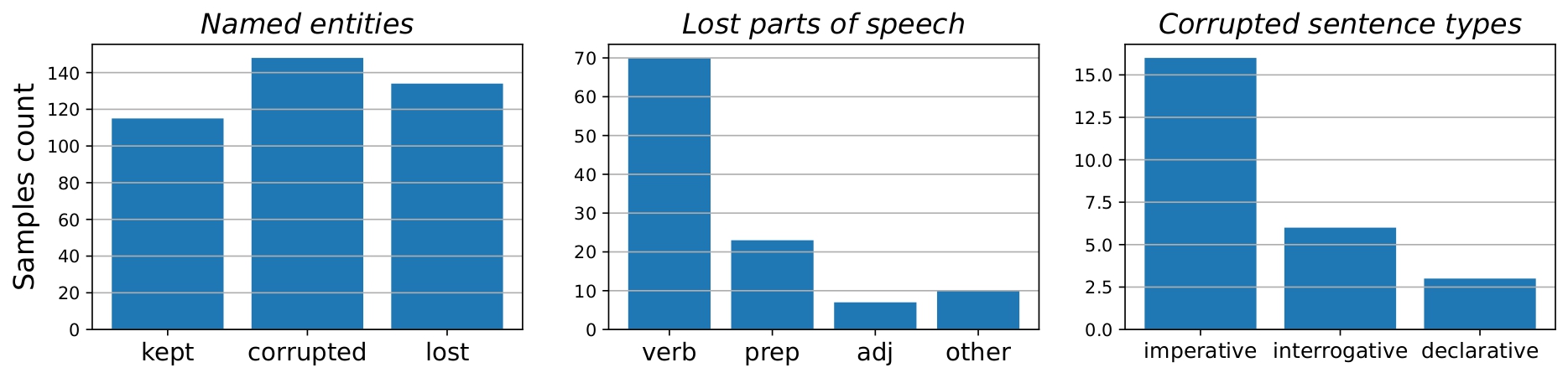

SGDD_self_annotated_subset is a subset of SGDD-TST that we annotated manually to perform an error analysis of the pre-trained formality transfer model. The error analysis shows that the loss or corruption of named entities and of some essential parts of speech (verbs, prepositions, adjectives, etc.) plays a significant role in content loss during formality transfer.

Fig.3 Statistics of the different reasons for content loss in TST

Fig.4 Frequency of the reasons for the change of content between original and generated sentences: named entities (NE), parts of speech (POS), named entities with parts of speech (NE+POS), and other reasons (Other).

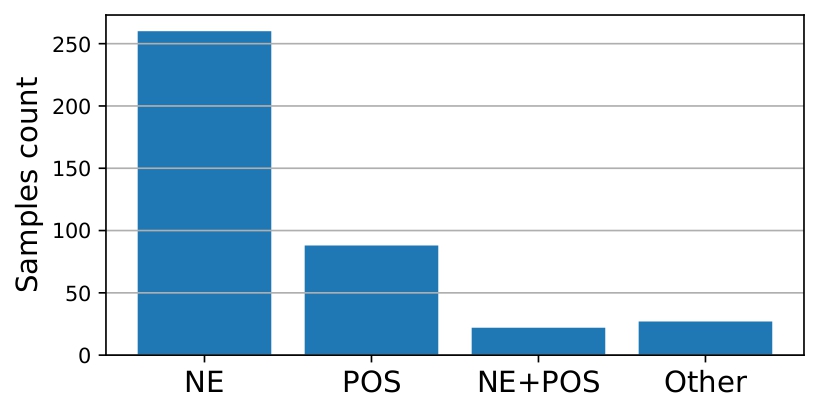

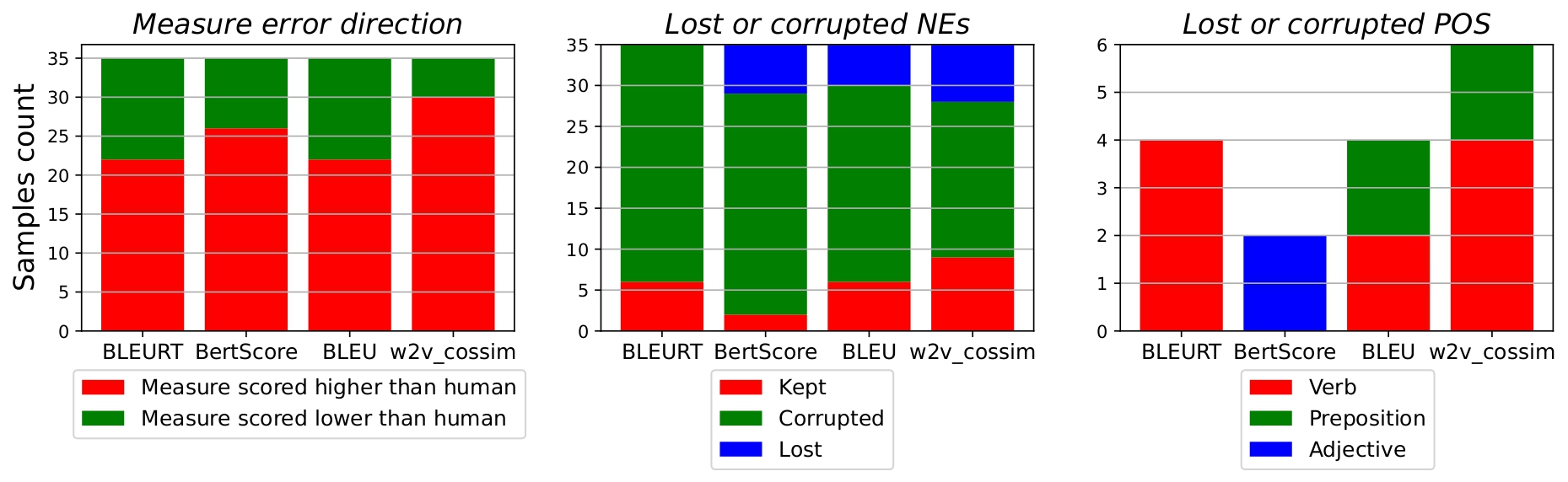
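The categories in Fig.4 were assigned manually. As a rough illustration of what each category means, the hypothetical heuristic below labels a sentence pair as NE, POS, NE+POS, or Other depending on whether entities or lemmas with "essential" POS tags from the original sentence are missing in the generated one. The token format, the tag set (`VERB`, `ADP`, `ADJ` in Universal Dependencies notation), and all function names are our assumptions; entities and tags would come from any tagger, and the function is meant only for pairs already known to have lost content.

```python
from typing import Iterable, Set, Tuple

# Hypothetical token format: (lemma, Universal Dependencies POS tag).
Token = Tuple[str, str]

# Assumed "essential" parts of speech: verbs, adpositions (prepositions), adjectives.
ESSENTIAL_POS = frozenset({"VERB", "ADP", "ADJ"})


def lost_entities(src_ents: Set[str], gen_ents: Set[str]) -> Set[str]:
    """Entities of the original sentence that are absent from the generated one."""
    return src_ents - gen_ents


def lost_pos_lemmas(
    src_tokens: Iterable[Token],
    gen_tokens: Iterable[Token],
    pos_tags: frozenset = ESSENTIAL_POS,
) -> Set[str]:
    """Lemmas with an essential POS tag that occur in the original but not in the output."""
    gen_lemmas = {lemma.lower() for lemma, _ in gen_tokens}
    return {
        lemma.lower()
        for lemma, tag in src_tokens
        if tag in pos_tags and lemma.lower() not in gen_lemmas
    }


def content_loss_category(src_ents, gen_ents, src_tokens, gen_tokens) -> str:
    """Map a pair to one of the Fig.4 labels: NE, POS, NE+POS or Other."""
    ne_lost = bool(lost_entities(src_ents, gen_ents))
    pos_lost = bool(lost_pos_lemmas(src_tokens, gen_tokens))
    if ne_lost and pos_lost:
        return "NE+POS"
    if ne_lost:
        return "NE"
    if pos_lost:
        return "POS"
    return "Other"


# Toy pair: "Cancel my reservation at Nando's for Friday" -> "I would like my reservation for Friday"
src_tokens = [("cancel", "VERB"), ("my", "PRON"), ("reservation", "NOUN"), ("at", "ADP"),
              ("Nando's", "PROPN"), ("for", "ADP"), ("Friday", "PROPN")]
gen_tokens = [("I", "PRON"), ("would", "AUX"), ("like", "VERB"), ("my", "PRON"),
              ("reservation", "NOUN"), ("for", "ADP"), ("Friday", "PROPN")]
print(content_loss_category({"nando's", "friday"}, {"friday"}, src_tokens, gen_tokens))  # → NE+POS
```

A purely lexical check like this also flags harmless synonym substitutions as POS losses, which is one reason manual annotation was used for the actual error analysis.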

We also perform an error analysis of several content preservation metrics. We produce two rankings of the sentences, one based on their automatic scores and one based on their manual scores, and then sort the sentences by the absolute difference between their automatic and manual ranks, so that the sentences the automatic metric misranks most relative to human judgments are at the top of the list. For each of several metrics based on different calculation logic, we manually annotate the top 35 samples.

Fig.5 Error statistics of the analyzed metrics. BertScore/DeBERTa is referred to as BertScore here.
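The rank-difference procedure described above can be sketched in plain Python. This is a minimal illustration, not the code used in the study: ranks are assigned in ascending order with ties averaged (our assumption), and the function names and toy scores are hypothetical.

```python
from typing import List, Sequence


def average_ranks(scores: Sequence[float]) -> List[float]:
    """1-based ascending ranks (lowest score = rank 1); ties share their mean rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next item has the same score.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def most_misranked(
    auto_scores: Sequence[float], human_scores: Sequence[float], top_k: int = 35
) -> List[int]:
    """Indices of the sentences whose automatic and manual ranks differ the most."""
    if len(auto_scores) != len(human_scores):
        raise ValueError("score lists must have the same length")
    auto_ranks = average_ranks(auto_scores)
    human_ranks = average_ranks(human_scores)
    gaps = [abs(a - h) for a, h in zip(auto_ranks, human_ranks)]
    # Largest rank gap first; sorted() is stable, so ties keep their input order.
    order = sorted(range(len(gaps)), key=lambda i: gaps[i], reverse=True)
    return order[:top_k]


auto_scores = [0.9, 0.1, 0.5, 0.7]   # toy metric scores
human_scores = [0.1, 0.9, 0.5, 0.7]  # toy human similarity scores
print(most_misranked(auto_scores, human_scores, top_k=2))  # → [0, 1]
```

Working with ranks rather than raw scores makes metrics with very different score ranges (e.g. BLEU vs. cosine similarity) directly comparable with the human judgments.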

Our findings show that named entities play a significant role in content loss, so we try to improve existing metrics with NE-based signals. To make the results of this analysis more generalizable, we use the simple open-source spaCy NER tagger to extract entities from the collected dataset. The entities are lemmatized and then used to compute the Jaccard index (intersection over union) between the entity sets of the original and generated sentences. This score serves as a baseline named entity-based content similarity metric. The signal is merged with the main metrics according to the following formula:

| Metric | Correlation with pure metric | Correlation with merged metric | Is increase significant? |
|---|---|---|---|
| Elron/bleurt-large-512 | 0.56 | 0.56 | False |
| bertscore/microsoft/deberta-xlarge-mnli | 0.47 | 0.45 | False |
| bertscore/roberta-large | 0.4 | 0.37 | False |
| bleu | 0.35 | 0.38 | True |
| rouge1 | 0.29 | 0.36 | True |
| bertscore/bert-base-multilingual-cased | 0.28 | 0.36 | True |
| rougeL | 0.27 | 0.35 | True |
| chrf | 0.27 | 0.3 | True |
| w2v_cossim | 0.22 | 0.33 | True |
| fasttext_cossim | 0.22 | 0.32 | True |
| rouge2 | 0.15 | 0.22 | True |
| rouge3 | 0.09 | 0.14 | True |

Fig.6 Spearman correlation of automatic content similarity metrics with human content similarity scores, with and without the auxiliary named entity-based metric, on the collected SGDD-TST dataset.
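Fig.6 reports Spearman's rank correlation. As a sketch, Spearman's rho is the Pearson correlation of the two rank vectors, with tied values receiving average ranks. The helpers below are hypothetical and deliberately omit the significance test, whose exact form is not specified in this README.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ascending ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)
```

Each row of the table then corresponds to comparing `spearman(metric_scores, human_scores)` with `spearman(merged_scores, human_scores)`. In practice, `scipy.stats.spearmanr` returns the same coefficient.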

Refer to `reproduce_experiments.ipynb` for the implementation of this approach. The notebook shows that it yields a significant improvement in correlation with human judgments for most of the commonly used content similarity metrics.
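The core of the named-entity signal can be sketched as follows. This approximates the description of the method and is not the code from the notebook: it assumes a loaded spaCy pipeline with an NER component is passed in as `nlp`, lemmatizes each entity token by token, and, as our own convention, returns 1.0 when neither sentence contains entities. The merging with the main metrics is not reproduced here, and all function names are hypothetical.

```python
from typing import Set


def entity_set(text: str, nlp) -> Set[str]:
    """Lowercased, lemmatized named entities found by a spaCy pipeline `nlp`."""
    doc = nlp(text)
    return {" ".join(token.lemma_.lower() for token in ent) for ent in doc.ents}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard index |A & B| / |A | B| of two entity sets."""
    if not a and not b:
        return 1.0  # assumption: no entities on either side means nothing was lost
    return len(a & b) / len(a | b)


def ne_similarity(original: str, generated: str, nlp) -> float:
    """Hypothetical NE-based content similarity between two sentences."""
    return jaccard(entity_set(original, nlp), entity_set(generated, nlp))
```

With spaCy and an English model installed, `ne_similarity(original, generated, spacy.load("en_core_web_sm"))` returns a score in [0, 1]; the exact value depends on which entities the model detects in each sentence.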

If you have any questions, feel free to drop a line to Nikolay.

If you find this repository helpful, feel free to cite our publication:

@InProceedings{10.1007/978-3-031-08473-7_40,
author="Babakov, Nikolay
and Dale, David
and Logacheva, Varvara
and Krotova, Irina
and Panchenko, Alexander",
editor="Rosso, Paolo
and Basile, Valerio
and Mart{\'i}nez, Raquel
and M{\'e}tais, Elisabeth
and Meziane, Farid",
title="Studying the Role of Named Entities for Content Preservation in Text Style Transfer",
booktitle="Natural Language Processing and Information Systems",
year="2022",
publisher="Springer International Publishing",
address="Cham",
pages="437--448",
abstract="Text style transfer techniques are gaining popularity in Natural Language Processing, finding various applications such as text detoxification, sentiment, or formality transfer. However, the majority of the existing approaches were tested on such domains as online communications on public platforms, music, or entertainment yet none of them were applied to the domains which are typical for task-oriented production systems, such as personal plans arrangements (e.g. booking of flights or reserving a table in a restaurant). We fill this gap by studying formality transfer in this domain.",
isbn="978-3-031-08473-7"
}