This is the code accompanying the paper KEMTLS vs. PQTLS: Performance on Embedded Systems.

The experiments were designed to be fully reproducible.

This repository glues together all the pieces needed to run the code.

For this purpose it makes heavy use of submodules.

To clone it, you therefore have to use --recurse-submodules:

git clone --recurse-submodules https://github.com/rugo/wolfssl-kemtls-experiments

This repository contains:

- Scripts for running the experiments and analyzing them
- Submodule OQS/oqs-demos: used to generate PQTLS certificates using liboqs' command line tool
- Submodule kemtls-server-reproducible: wrapper repository around the KEMTLS reference implementation. Used to generate KEMTLS certificates and build the KEMTLS server.
- Submodule zephyr-docker: dockerized build container for Zephyr RTOS, which is the OS used on the board. Includes scripts for building the actual Zephyr projects with KEMTLS and PQTLS.
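If the repository was already cloned without --recurse-submodules, the submodules listed above can still be fetched afterwards with git's own submodule command. The following is a minimal sketch; init_submodules is a hypothetical helper, not one of the repository's scripts.

```sh
#!/bin/sh
# Hypothetical helper (not part of this repository): fetch all submodules
# of a checkout that was cloned without --recurse-submodules.
init_submodules() {
    local repo_dir="${1:-.}"
    # --init registers the submodules listed in .gitmodules,
    # --recursive also initializes submodules nested inside them.
    git -C "$repo_dir" submodule update --init --recursive
}
```

For example, run init_submodules wolfssl-kemtls-experiments after a plain git clone. This is equivalent to running the git command directly from inside the checkout.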

Other components of the project that are only included indirectly are:

- Zephyr project including KEMTLS, pulled by Zephyr's west build tool
- WolfSSL with KEMTLS including the PQM4 finalists, pulled by the Zephyr project
- Zephyr project including PQTLS, pulled by Zephyr's west build tool
- WolfSSL with PQTLS including the PQM4 finalists, pulled by the Zephyr project
- Namespaced PQM4, a PQM4 fork that adds namespacing for the finalists. It fixes some alignment issues and has verify functions for the finalists. This is a submodule of the WolfSSL libraries.

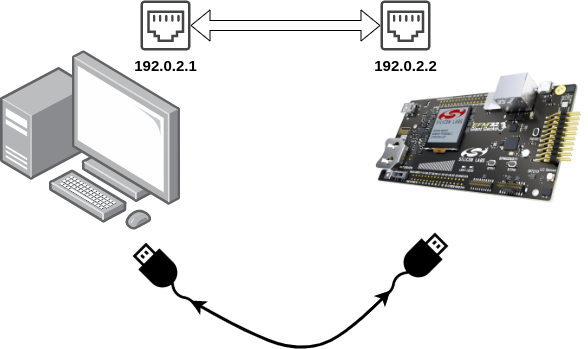

Our experiments were conducted on an STK3701a Giant Gecko board. This board needs to be connected to a host computer running the benchmarks/experiments, both via Ethernet (for networking) and via its JLink USB connector (for flashing and resetting). Everything else, like the embedded toolchain, should be included in the Docker container.

The software setup was tested on Arch Linux.

The scripts make heavy use of Docker and docker-compose to achieve reproducible builds.

To flash the binaries onto the board, the JLink tools are required.

Specifically, the JLinkExe binary has to be in the PATH.

For the PQTLS benchmarks, CMake and an up-to-date GCC also have to be available.
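A quick way to check the host prerequisites named above is to look each tool up on the PATH. This is a sketch only; check_prereqs is a hypothetical helper, and it checks presence, not versions, so whether gcc is up to date still has to be verified by hand.

```sh
#!/bin/sh
# Hypothetical helper (not part of this repository): report which host tools
# from this README are missing from PATH. Returns non-zero if any is missing.
check_prereqs() {
    # Default to the tools named in this README when no arguments are given.
    if [ "$#" -eq 0 ]; then
        set -- docker docker-compose JLinkExe cmake gcc
    fi
    local missing=0 tool
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Calling check_prereqs without arguments checks all five tools; check_prereqs cmake gcc checks only the PQTLS extras. Note that newer Docker installations may ship Compose as the "docker compose" plugin instead of a docker-compose binary, in which case this check reports docker-compose as missing.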

To run the experiments, the Ethernet adapter connected to the Giant Gecko needs to be set to the static IP address 192.0.2.1.

Then, the IFACE_NAME variable in the run_experiments.sh scripts (see below) has to be set to the network interface connected to the Giant Gecko (e.g. IFACE_NAME=eth0).
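The two network steps above can be scripted roughly as follows. This is a sketch, not part of the repository: configure_iface and set_iface_name are hypothetical helpers, the /24 prefix length is an assumption (only the address 192.0.2.1 is given), and the sed call assumes IFACE_NAME is assigned at the start of a line in each run_experiments.sh script and that GNU sed is used.

```sh
#!/bin/sh
# Hypothetical helpers (not part of this repository).

# Assign 192.0.2.1 to the interface connected to the Giant Gecko and bring
# the link up. Needs root and iproute2. The /24 prefix is an assumption.
configure_iface() {
    local iface="$1"
    sudo ip addr add 192.0.2.1/24 dev "$iface"
    sudo ip link set dev "$iface" up
}

# Rewrite the IFACE_NAME=... line of a run_experiments.sh script in place
# (GNU sed -i). Other lines of the script are left untouched.
set_iface_name() {
    local iface="$1" script="$2"
    sed -i "s/^IFACE_NAME=.*/IFACE_NAME=${iface}/" "$script"
}
```

For example: configure_iface eth0, then set_iface_name eth0 scripts/run_experiments.sh and set_iface_name eth0 scripts/pqtls/run_experiments.sh. An address added with ip addr add does not survive a reboot, and a network manager running on the host may override it.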

Four main scripts are needed to run the experiments:

- scripts/generate_certificates.sh generates 1000 KEMTLS certificates per available PQC algorithm. This will run for a couple of hours.
- scripts/pqtls/generate_certificates.sh generates 1000 PQTLS certificates per available PQC algorithm. This is slightly faster but will still run for about an hour.
- scripts/run_experiments.sh runs the KEMTLS experiments. This will run for about a week.
- scripts/pqtls/run_experiments.sh runs the PQTLS experiments. This will run for multiple days.
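If you prefer to run the four scripts individually, for example to resume after an interruption, they can be chained so that a failing step stops the run. This is a minimal sketch: run_steps is a hypothetical helper, the order (certificates first, then experiments) is inferred from the descriptions above, and the scripts are assumed to be executable and run from the repository root.

```sh
#!/bin/sh
# Hypothetical helper (not part of this repository): run the four main
# scripts in order from the repository root, stopping at the first failure.
run_steps() {
    local repo_dir="${1:-.}" step
    for step in \
        scripts/generate_certificates.sh \
        scripts/pqtls/generate_certificates.sh \
        scripts/run_experiments.sh \
        scripts/pqtls/run_experiments.sh
    do
        echo "==> $step"
        # Subshell keeps the caller's working directory unchanged.
        (cd "$repo_dir" && "./$step") || { echo "failed: $step" >&2; return 1; }
    done
}
```

To resume after a failed step, run the remaining scripts by hand in the same order.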

To run the full experiments, i.e. to generate all certificates and then run KEMTLS and PQTLS in all possible PQC combinations with 1000 different certificates, you can simply call:

./scripts/prepare_and_run_experiments.sh

The resulting benchmarks are stored in the benchmarks folder.

If you don't have the time to wait for so many results, you can reduce the number of certificates and iterations.

To do so for KEMTLS:

- Change the NUM_CERTS variable in kemtls-server-reproducible/scripts/gen_key.sh to the number of certificates desired per PQC algorithm combination.
- Change the NUM_ITERS variable in scripts/run_experiments.sh to the number of iterations per PQC algorithm combination.

To do so for PQTLS:

- Change the NUM_CERTS variable in OQS/scripts/gen_certs.sh to the number of certificates desired per PQC algorithm combination.
- Change the NUM_ITERS variable in scripts/pqtls/run_experiments.sh to the number of iterations per PQC algorithm combination.
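The four edits above can also be applied in one go. This is a sketch under stated assumptions: set_var and reduce_runs are hypothetical helpers, each variable is assumed to be assigned at the start of a line (NAME=value), and GNU sed is assumed for in-place editing. Since gen_key.sh lives in a submodule, git will afterwards show that submodule as modified.

```sh
#!/bin/sh
# Hypothetical helpers (not part of this repository).

# Replace the NAME=... line in FILE with NAME=VALUE; fail if there is none.
set_var() {
    local file="$1" name="$2" value="$3"
    if ! grep -q "^${name}=" "$file"; then
        echo "no ${name}= line in $file" >&2
        return 1
    fi
    sed -i "s/^${name}=.*/${name}=${value}/" "$file"
}

# Set certificates and iterations per PQC combination for KEMTLS and PQTLS.
reduce_runs() {
    local repo_dir="$1" certs="$2" iters="$3"
    set_var "$repo_dir/kemtls-server-reproducible/scripts/gen_key.sh" NUM_CERTS "$certs" &&
    set_var "$repo_dir/OQS/scripts/gen_certs.sh" NUM_CERTS "$certs" &&
    set_var "$repo_dir/scripts/run_experiments.sh" NUM_ITERS "$iters" &&
    set_var "$repo_dir/scripts/pqtls/run_experiments.sh" NUM_ITERS "$iters"
}
```

For example, reduce_runs . 10 10 (run from the repository root) sets 10 certificates and 10 iterations per PQC algorithm combination.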

To collect the benchmark results and save them in one directory, do:

./scripts/merge_benchmarks.py benchmarks/pqtls /tmp/pqtls

./scripts/merge_benchmarks.py benchmarks/kemtls /tmp/kemtls

You can now print the result tables of the paper with:

./scripts/print_tables.py /tmp/kemtls --paper

./scripts/print_tables.py /tmp/pqtls --paper --pqtls

If you want to print all collected benchmarks, you can do that with:

./scripts/print_tables.py /tmp/kemtls --all

./scripts/print_tables.py /tmp/pqtls --pqtls --all

If you want to export the results to a CSV file, run:

./scripts/print_tables.py /tmp/kemtls --all --csv

./scripts/print_tables.py /tmp/pqtls --pqtls --all --csv