by Minyuan Ye, Dong Lyu and Gengsheng Chen

(a) Result of Nah et al. (b) Result of Tao et al. (c) Result of Zhang et al. (d) Our result.

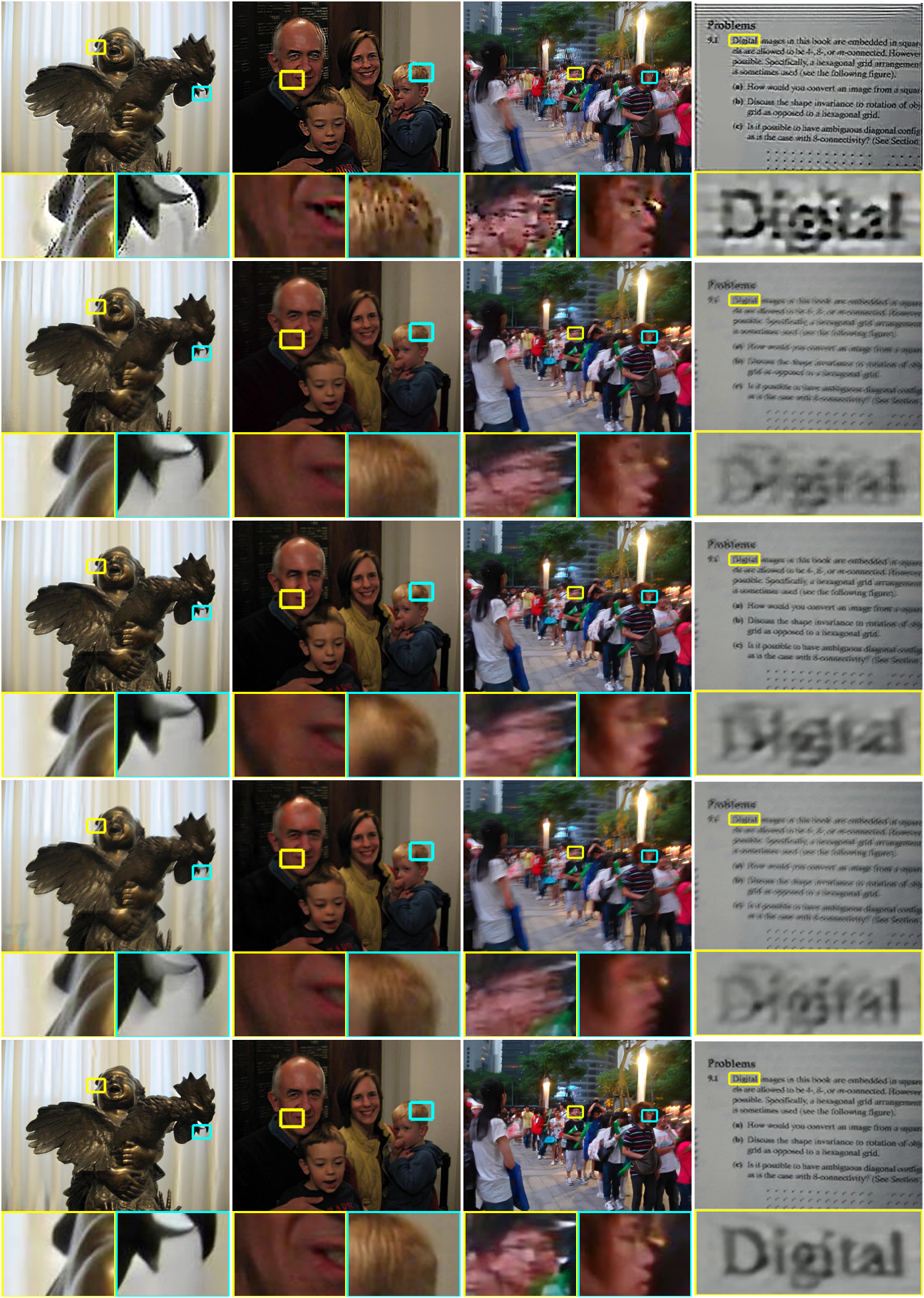

From top to bottom: the blurry input, followed by the deblurring results of Nah et al., Tao et al., Zhang et al. and ours.

From top to bottom: images restored by Pan et al., Nah et al., Tao et al., Zhang et al. and ours. Because of space limits, the original blurry images are omitted here.

They can be found in the Lai dataset under the following names, from left to right: boy_statue, pietro, street4 and text1.

Please refer to "/code/requirements.txt".

git clone https://github.com/minyuanye/SIUN.git

cd SIUN/code

You can always add '--gpu=<gpu_id>' to specify the GPU ID; the default ID is 0.

For deblurring an image:

python deblur.py --apply --file-path='</testpath/test.png>'

For deblurring all images in a folder:

python deblur.py --apply --dir-path='</testpath/testDir>'

Add '--result-dir=</output_path>' to specify the output path. If it is not specified, the default path is './output'.
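The two '--apply' commands above differ only in whether the input is a single image or a folder. As a minimal sketch, the helper below assembles the argument list from the flags documented here ('--apply', '--file-path', '--dir-path', '--result-dir', '--gpu'). The function name `build_apply_command` and the example paths are hypothetical and not part of the SIUN code.

```python
import sys
from pathlib import Path


def build_apply_command(input_path, result_dir=None, gpu_id=None):
    """Build the argument list for 'deblur.py --apply' without running it."""
    path = Path(input_path)
    # An existing folder uses --dir-path; anything else is treated as one image.
    flag = "--dir-path" if path.is_dir() else "--file-path"
    command = [sys.executable, "deblur.py", "--apply", f"{flag}={path}"]
    if result_dir is not None:
        command.append(f"--result-dir={result_dir}")
    if gpu_id is not None:
        command.append(f"--gpu={gpu_id}")
    return command


if __name__ == "__main__":
    # Hypothetical paths; replace them with your own.
    cmd = build_apply_command("/testpath/testDir", result_dir="./output", gpu_id=0)
    print(" ".join(cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from inside the 'code' directory. Leaving `result_dir` and `gpu_id` unset keeps the defaults described above.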

For testing the model:

python deblur.py --test

Note that this command can only be used to test the GOPRO dataset, and it loads all images into memory first. We recommend using '--apply' on a folder instead (see the folder command above).

Please set the value of 'test_directory_path' in the file 'config.py' to the GOPRO dataset path.
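To give a feel for the note about loading all images into memory, the sketch below estimates the size of the uncompressed GOPRO test split (1111 blurry/sharp pairs at 1280x720). How SIUN actually stores the images (data type, whether both images of a pair are held) is an assumption here, so the numbers are rough bounds, not measurements. The function name `dataset_bytes` is hypothetical.

```python
def dataset_bytes(num_images, width, height, channels=3, bytes_per_value=1):
    """Bytes needed to hold num_images uncompressed images in memory."""
    return num_images * width * height * channels * bytes_per_value


if __name__ == "__main__":
    pairs = 1111  # GOPRO test split
    for label, size in (("uint8", 1), ("float32", 4)):
        # Factor 2: assume both the blurry and the sharp image are kept.
        total = 2 * dataset_bytes(pairs, 1280, 720, bytes_per_value=size)
        print(f"{label}: {total / 2**30:.1f} GiB")
```

Under these assumptions the test split needs roughly 5.7 GiB as 8-bit data and about 22.9 GiB as 32-bit floats, which is why '--apply' may be the more practical choice on machines with limited RAM.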

For training a new model:

python deblur.py --train

Before training, please remove the model file in 'model' and set the value of 'train_directory_path' in the file 'config.py' to the GOPRO dataset path.

When it finishes, run:

python deblur.py --verify
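The train-then-verify sequence above can be chained so that '--verify' only runs after '--train' has finished. The following is a minimal sketch that assumes 'deblur.py' exits with a non-zero status when training fails, which the source does not confirm. The function `train_then_verify` and its `runner` parameter are hypothetical.

```python
import subprocess
import sys


def train_then_verify(gpu_id=0, runner=subprocess.run):
    """Run 'deblur.py --train' and then 'deblur.py --verify' in order."""
    for mode in ("--train", "--verify"):
        command = [sys.executable, "deblur.py", mode, f"--gpu={gpu_id}"]
        # check=True raises CalledProcessError on a non-zero exit code,
        # so '--verify' is not reached after a failed training run.
        runner(command, check=True)


if __name__ == "__main__":
    # Run from inside the 'code' directory, after editing 'config.py'.
    train_then_verify(gpu_id=0)
```

The `runner` argument exists only so the sequence can be dry-run or tested without starting a real training job.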

Please refer to the source code. Most configuration parameters are listed in '/code/src/config.py'.

If you use any part of our code, or if SIUN is useful for your research, please consider citing:

@ARTICLE{8963625,
  author={M. {Ye} and D. {Lyu} and G. {Chen}},
  journal={IEEE Access},
  title={Scale-Iterative Upscaling Network for Image Deblurring},
  year={2020},
  volume={8},
  number={},
  pages={18316-18325},
  keywords={Blind deblurring;curriculum learning;scale-iterative;upscaling network},
  doi={10.1109/ACCESS.2020.2967823},
  ISSN={2169-3536},
  month={},
}