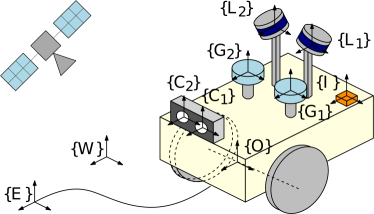

An efficient, robust, and tightly-coupled Multisensor-aided Inertial Navigation System (MINS) which is capable of flexibly fusing all five sensing modalities (IMU, wheel encoders, camera, GNSS, and LiDAR) in a filtering fashion by overcoming the hurdles of computational complexity, sensor asynchronicity, and intra-sensor calibration.

Exemplary use case of MINS:

- VINS (mono, stereo, multi-cam)

- GPS-IMU (single, multiple)

- LiDAR-IMU (single, multiple)

- wheel-IMU

- Camera-GPS-LiDAR-wheel-IMU or more combinations.

- Publication reference - https://arxiv.org/pdf/2309.15390.pdf

- Inertial(IMU)-based multi-sensor fusion including wheel odometry and arbitrary numbers of cameras, LiDARs, and GNSSs (+ VICON or loop-closure) for localization.

- Online calibration of all onboard sensors (check exemplary results).

- Consistent high-order state on manifold interpolation improved from our prior work (MIMC-VINS) and dynamic cloning strategy for light-weight estimation performance.

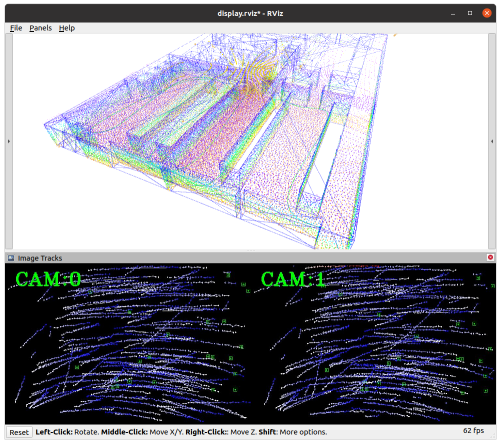

- Multi-sensor simulation toolbox for IMU, camera, LiDAR, GNSS, and wheel enhanced from our prior work (OpenVINS)

- Evaluation toolbox for consistency, accuracy, and timing analysis.

- Very detailed options for each sensor enabling general multi-sensor application.

MINS is tested on Ubuntu 18 and 20 and only requires corresponding ROS (Melodic and Noetic).

- Default Eigen version will be 3.3.7 (Noetic) or lower, but if one has a higher version the compilation can be failed due to thirdparty library (libpointmatcher) for LiDAR.

mkdir -p $MINS_WORKSPACE/catkin_ws/src/ && cd $MINS_WORKSPACE/catkin_ws/src/

git clone https://github.com/rpng/MINS

cd .. && catkin build

source devel/setup.bash

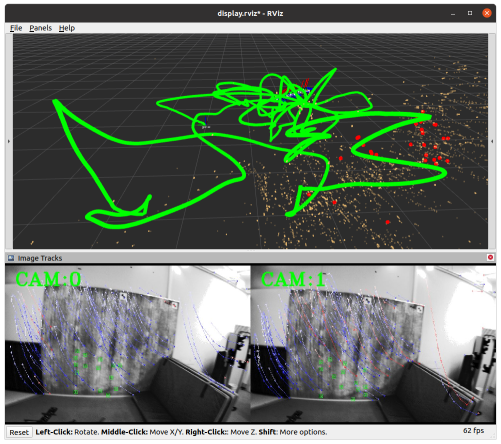

roslaunch mins simulation.launch cam_enabled:=true lidar_enabled:=true

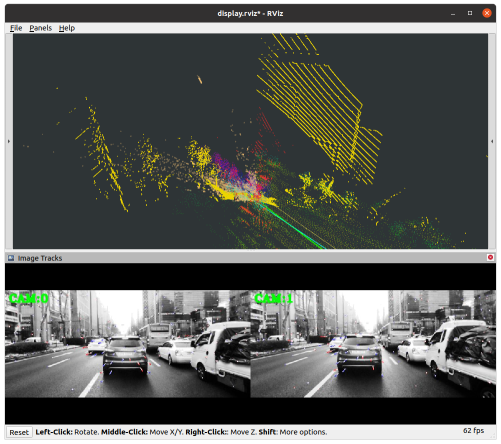

roslaunch mins rosbag.launch config:=kaist/kaist_LC path_gt:=urban30.txt path_bag:=urban30.bag

Here are the rosbag files and ground truths we used in the evaluation. To be specific, we used kaist2bag to convert all sensor readings to rosbag files. All rights reserved to KAIST urban dataset.

roslaunch mins subscribe.launch config:=euroc_mav rosbag:=V1_03_difficult.bag bag_start_time:=0

rviz -d mins/launch/display.rviz

This project was built on top of the following libraries which are in the thirdparty folder.

- OpenVINS: Open-source filter-based visual-inertial estimator.

- ikd-tree: Incremental k-d tree.

- libpointmatcher: Modular Iterative Closest Point library based on libnabo

This code was written by the Robot Perception and Navigation Group (RPNG) at the University of Delaware. If you have any issues with the code please open an issue on our GitHub page with relevant implementation details and references. For researchers that have leveraged or compared to this work, please cite the following:

The publication reference will be updated soon.

@article{Lee2023arxiv,

title = {MINS: Efficient and Robust Multisensor-aided Inertial Navigation System},

author = {Woosik Lee and Patrick Geneva and Chuchu Chen and Guoquan Huang},

year = 2023,

journal = {arXiv preprint arXiv:2309.15390},

url = {https://github.com/rpng/MINS},

}The codebase and documentation is licensed under the GNU General Public License v3 (GPL-3). You must preserve the copyright and license notices in your derivative work and make available the complete source code with modifications under the same license (see this; this is not legal advice).