OHMG is a web application that facilitates public participation in the process of georeferencing and mosaicking historical maps. This is a standalone project that requires an external instance of TiTiler to serve the mosaicked layers. See the dependencies listed below for more about the tech stack.

At present, the system is structured around the Sanborn Map Collection at the Library of Congress (loc.gov/collections/sanborn-maps). More generic ingestion methods are in the works.

- Implementation: oldinsurancemaps.net

- Documentation: ohmg.dev

Please don't hesitate to open a ticket if you have trouble with the site, find a bug, or have suggestions otherwise.

You can browse content on the platform by map, by place name, or by map name.

Each volume's summary page has an interactive Map Overview showing all of the sheets that have been georeferenced so far.

Each volume's summary page also lists the progress and georeferencing stage of each sheet.

Finally, each resource has its own page, showing a complete lineage of the work that various users have performed on it.

The georeferencing process generally consists of three operations, each with its own browser interface.

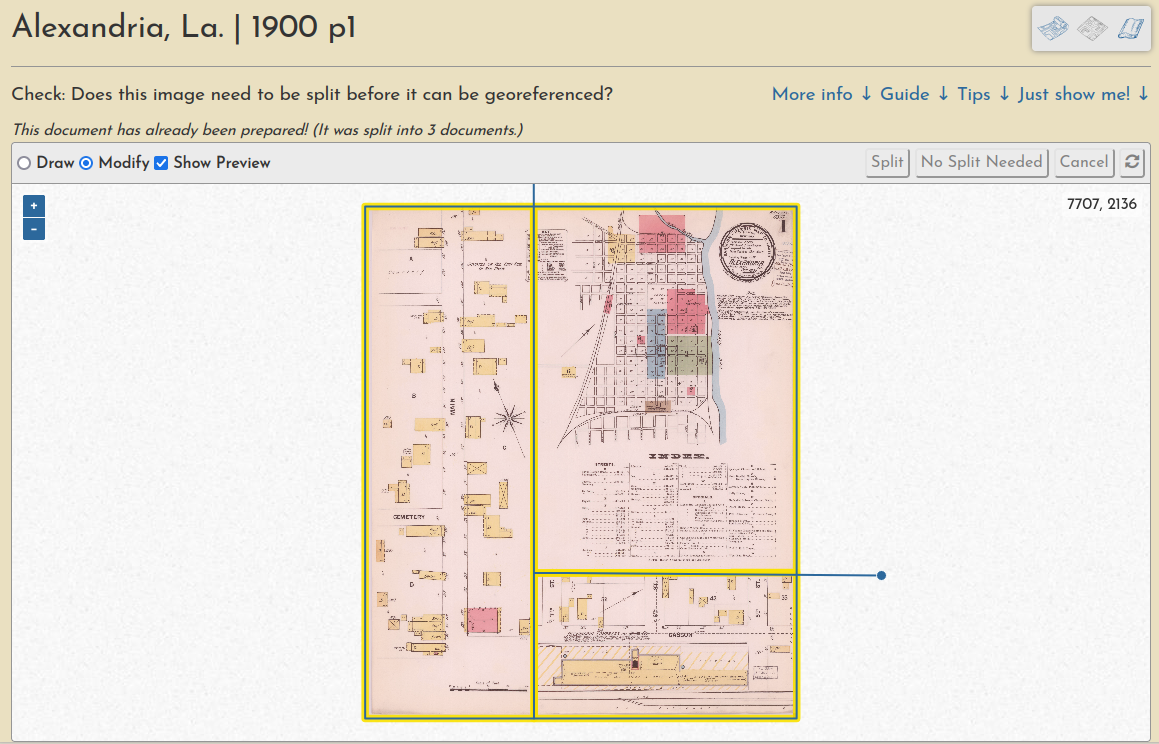

- Document preparation (sometimes a document must be split into multiple pieces).

- Ground control point creation (these points are used to warp the document into a GeoTIFF).

- A "multimask" that allows a volume's sheets to be trimmed en masse, a quick way to create a seamless mosaic from overlapping sheets.
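Ground control points map pixel positions on a scanned sheet to real-world coordinates. The warping itself is presumably handled by GDAL (listed under the dependencies below), and OHMG's exact transformation settings are not described here, but the simplest case, a first-order (affine) transform, can be sketched as a least-squares fit in plain Python. The function names and the `(pixel_col, pixel_row, map_x, map_y)` tuple layout are illustrative assumptions, not OHMG's API.

```python
from __future__ import annotations

from typing import Sequence

# One ground control point: a pixel on the scanned sheet and its map coordinate,
# as (pixel_col, pixel_row, map_x, map_y). This layout is illustrative only.
GCP = tuple[float, float, float, float]


def _solve3(m: list[list[float]], v: list[float]) -> list[float]:
    """Solve the 3x3 system m @ p = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [val] for row, val in zip(m, v)]  # augmented matrix [m | v]
    for i in range(3):
        pivot = max(range(i, 3), key=lambda r: abs(a[r][i]))
        if abs(a[pivot][i]) < 1e-12:
            raise ValueError("GCPs are degenerate (e.g. all on one line)")
        a[i], a[pivot] = a[pivot], a[i]
        for r in range(i + 1, 3):
            factor = a[r][i] / a[i][i]
            for c in range(i, 4):
                a[r][c] -= factor * a[i][c]
    p = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):  # back substitution
        p[i] = (a[i][3] - sum(a[i][c] * p[c] for c in range(i + 1, 3))) / a[i][i]
    return p


def fit_affine(gcps: Sequence[GCP]) -> tuple[float, ...]:
    """Return (a, b, c, d, e, f) with x = a*col + b*row + c and y = d*col + e*row + f."""
    if len(gcps) < 3:
        raise ValueError("an affine fit needs at least 3 GCPs")
    # Accumulate the normal equations (A^T A) p = A^T t, where each row of A is [col, row, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for col, row, x, y in gcps:
        basis = (col, row, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
            atx[i] += basis[i] * x
            aty[i] += basis[i] * y
    a, b, c = _solve3(ata, atx)
    d, e, f = _solve3(ata, aty)
    return a, b, c, d, e, f


def apply_affine(params: Sequence[float], col: float, row: float) -> tuple[float, float]:
    """Transform a pixel position into map coordinates with fitted parameters."""
    a, b, c, d, e, f = params
    return a * col + b * row + c, d * col + e * row + f
```

GDAL's own `gdalwarp` exposes the same idea through `-order 1`, with higher polynomial orders and thin plate splines (`-tps`) available when a single affine fit cannot absorb a sheet's distortions.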

Learn much more about each step in the docs.

All user input is tracked through registered accounts, which allows for a comprehensive understanding of user engagement and participation, as well as a complete database of all georeferencing input, such as ground control points and masks.

This is a Django project, with a frontend built (mostly) with Svelte, using OpenLayers for all map interfaces. OpenStreetMap and Mapbox are the basemap sources.

- Django Ninja - API

- Django Newsletter - Newsletter (optional feature)

- Postgres/PostGIS

- Celery + RabbitMQ

- GDAL >= 3.5

- TiTiler
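Because GDAL >= 3.5 is required, it can help to confirm the installed version before going further. A minimal sketch, assuming the `gdalinfo` command-line tool is on your PATH and prints its usual `GDAL x.y.z, released ...` banner for `--version`:

```python
from __future__ import annotations

import re
import shutil
import subprocess

MIN_GDAL = (3, 5)  # minimum (major, minor) from the dependency list


def parse_gdal_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from output such as 'GDAL 3.6.2, released 2023/01/02'."""
    match = re.search(r"GDAL (\d+)\.(\d+)(?:\.(\d+))?", text)
    if not match:
        raise ValueError(f"unrecognised GDAL version string: {text!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def installed_gdal_version() -> tuple[int, int, int] | None:
    """Run `gdalinfo --version` if it is on PATH; return None when it is not found."""
    exe = shutil.which("gdalinfo")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=True)
    return parse_gdal_version(result.stdout)


if __name__ == "__main__":
    version = installed_gdal_version()
    if version is None:
        print("gdalinfo not found on PATH")
    else:
        ok = version[:2] >= MIN_GDAL
        print(f"GDAL {'.'.join(map(str, version))}: {'OK' if ok else 'too old, need >= 3.5'}")
```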

Running the application requires a number of components to be installed and configured properly. This aspect of the application is not optimized, but getting it documented is the first step.

Install Postgres/PostGIS as you like. Once it is running, create the user, database, and PostGIS extension like this (with DB_PASSWORD exported in your shell):

```bash
psql -U postgres -c "CREATE USER ohmg WITH ENCRYPTED PASSWORD '$DB_PASSWORD';"
psql -U postgres -c "CREATE DATABASE oldinsurancemaps WITH OWNER ohmg;"
psql -U postgres -d oldinsurancemaps -c "CREATE EXTENSION PostGIS;"
```

See also ./scripts/create_database.sh.
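On the Django side, the database created above is reached through GeoDjango's PostGIS backend. The sketch below shows one way a settings module could assemble DATABASES from the environment. Only DB_PASSWORD comes from the commands above; DB_NAME, DB_USER, DB_HOST, and DB_PORT are hypothetical variable names, so check .env.original and the project's settings for what OHMG actually reads.

```python
import os
from typing import Mapping


def database_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Build a Django DATABASES setting for the PostGIS database created above.

    Variable names other than DB_PASSWORD are hypothetical; defaults mirror
    the psql commands (database 'oldinsurancemaps', owner 'ohmg').
    """
    return {
        "default": {
            # GeoDjango's PostGIS backend is needed for spatial model fields.
            "ENGINE": "django.contrib.gis.db.backends.postgis",
            "NAME": env.get("DB_NAME", "oldinsurancemaps"),
            "USER": env.get("DB_USER", "ohmg"),
            "PASSWORD": env.get("DB_PASSWORD", ""),
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": env.get("DB_PORT", "5432"),
        }
    }
```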

Make a virtual environment:

```bash
python3 -m venv env
source env/bin/activate
```

Install the Python dependencies:

```bash
git clone https://github.com/mradamcox/ohmg && cd ohmg
pip install -r requirements.txt
```

Set environment variables by copying the template, then edit .env as needed:

```bash
cp .env.original .env
```

Initialize the database and create an admin user:

```bash
python manage.py migrate
python manage.py createsuperuser
```

Load all the place objects to create the geography scaffolding:

```bash
python manage.py place import-all
```

The frontend uses a suite of independently built Svelte components:

```bash
cd ohmg/frontend/svelte
pnpm install
pnpm run dev
```

From the repository root (in a separate terminal if the Svelte dev build is still running), you can now run

```bash
python manage.py runserver
```

and view the site at http://localhost:8000.

However, a few more components need to be set up independently before the app is fully functional. Complete the following sections, then rerun the dev server so that any new .env values are properly acquired.
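Since .env is read when the server starts, a quick way to see which of the values mentioned in the following sections are still empty is a small check like this. It is a hypothetical helper with a deliberately simple parser (plain KEY=VALUE lines, optional quotes and `export`), not the loader OHMG itself uses.

```python
from __future__ import annotations

from pathlib import Path

# Keys that the remaining setup steps in this README ask you to fill in.
REQUIRED_KEYS = (
    "RABBITMQ_DEFAULT_USER",
    "RABBITMQ_DEFAULT_PASS",
    "TITILER_HOST",
    "MEDIA_HOST",
    "MEDIA_ROOT",
)


def read_env_file(path: str | Path) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, _, value = line.partition("=")
        # Strip surrounding whitespace and one layer of quote characters.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(path: str | Path, required: tuple[str, ...] = REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty, in REQUIRED_KEYS order."""
    values = read_env_file(path)
    return [key for key in required if not values.get(key)]
```

For example, `missing_keys(".env")` run from the repository root returns the names that still need values. Real dotenv loaders support more syntax (multiline values, interpolation) than this sketch does.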

In development, RabbitMQ can be run via Docker like so:

```bash
docker run --name rabbitmq --hostname my-rabbit \
  -p 5672:5672 \
  -p 15672:15672 \
  -e RABBITMQ_DEFAULT_USER=username \
  -e RABBITMQ_DEFAULT_PASS=password \
  --rm \
  rabbitmq:3-alpine
```

For convenience, this command is in the following script:

```bash
source ./scripts/rabbit_dev.sh
```

Once RabbitMQ is running, update .env with the RABBITMQ_DEFAULT_USER and RABBITMQ_DEFAULT_PASS credentials you used above when creating the container.
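Celery connects to RabbitMQ through an AMQP broker URL built from those same credentials. How OHMG's settings assemble that URL is not shown here; the following is a sketch, assuming the container's default port 5672 and default vhost `/`, of the URL format that Celery's `broker_url` setting accepts. The helper name is hypothetical.

```python
from urllib.parse import quote


def celery_broker_url(user: str, password: str, host: str = "localhost",
                      port: int = 5672, vhost: str = "/") -> str:
    """Build an AMQP broker URL of the form amqp://user:password@host:port/vhost."""
    # Percent-encode the credentials so characters such as '@' or ':' cannot
    # be mistaken for URL delimiters.
    safe_user = quote(user, safe="")
    safe_password = quote(password, safe="")
    # The vhost is inserted as-is; the default "/" yields the familiar trailing "//".
    return f"amqp://{safe_user}:{safe_password}@{host}:{port}/{vhost}"
```

With the credentials from the docker command above, `celery_broker_url("username", "password")` gives `amqp://username:password@localhost:5672//`.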

Now you are ready to run Celery in development with:

```bash
source ./scripts/celery_dev.sh
```

TiTiler can also be run via Docker, using a slightly modified version of the official container (modified only to include the WMS endpoint extension):

```bash
docker run --name titiler \
  -p 8008:8000 \
  -e PORT=8000 \
  -e MOSAIC_SCRIPT_ZOOM=False \
  -e WORKERS_PER_CORE=1 \
  --rm \
  -it \
  ghcr.io/mradamcox/titiler:0.11.6-ohmg
```

Or use the same command wrapped in:

```bash
source ./scripts/titiler_dev.sh
```

This will start a container running TiTiler and expose it at localhost:8008.

Make sure you have TITILER_HOST=http://localhost:8008 in .env.
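It can help to know what a TiTiler tile request looks like when checking that Django hands TiTiler usable URLs. This sketch assumes the stock `/cog/tiles/{z}/{x}/{y}` route of the TiTiler 0.11 series for a single Cloud Optimized GeoTIFF; OHMG's mosaicked layers may well go through TiTiler's mosaic or WMS endpoints instead, and the helper name is hypothetical.

```python
from urllib.parse import urlencode


def cog_tile_url(titiler_host: str, cog_url: str, z: int, x: int, y: int,
                 fmt: str = "png") -> str:
    """Build an XYZ tile URL for one COG, assuming TiTiler's /cog/tiles/{z}/{x}/{y} route."""
    # urlencode percent-encodes the file URL so it survives as a single query value.
    query = urlencode({"url": cog_url})
    return f"{titiler_host.rstrip('/')}/cog/tiles/{z}/{x}/{y}.{fmt}?{query}"
```

Note that the `url` parameter must point at a file that TiTiler itself can fetch over HTTP.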

One hitch during development is that the Django dev server does not serve range requests, which TiTiler relies on to read GeoTIFFs over HTTP. This means that TiTiler must be given URLs to local files served by Apache or Nginx, not by the Django dev server.
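A server that ignores the `Range` header will not work with TiTiler. Here is a minimal sketch for checking a given file URL with Python's standard library: a server that supports ranges answers `206 Partial Content`, while one that ignores the header returns `200` with the whole file. The function name is hypothetical.

```python
import urllib.request


def supports_range_requests(url: str, timeout: float = 10.0) -> bool:
    """Return True if the server answers a byte-range GET with 206 Partial Content."""
    # Ask for only the first 16 bytes of the file.
    request = urllib.request.Request(url, headers={"Range": "bytes=0-15"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # A server that ignores Range replies 200 with the whole file instead.
        return response.status == 206
```

Once the nginx alias is in place, point it at an uploaded file, e.g. `supports_range_requests("http://localhost/uploaded/<path-to-a-file>.tif")`, where the path is a placeholder.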

Install nginx (on Debian/Ubuntu):

```bash
sudo apt install nginx
```

Once nginx is running, make sure the default server config includes an alias that points directly to the same directory that your Django app will use for MEDIA_ROOT.

/etc/nginx/sites-enabled/default

```nginx
server {
    ...
    location /uploaded {
        alias /home/ohmg/uploaded;
    }
    ...
}
```

Finally, make sure that the following environment variables are set, with MEDIA_HOST being a prefix that is prepended to any uploaded media paths that are passed to TiTiler.

.env

```
MEDIA_HOST=http://localhost
MEDIA_ROOT=/home/ohmg/uploaded
```

In production, you will already be using Nginx, so these steps would be redundant. If there is no MEDIA_HOST environment variable set locally, it will default to SITEURL.
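Taken together, MEDIA_ROOT, the nginx `location /uploaded` alias, and MEDIA_HOST determine the URL that TiTiler receives for each uploaded file. The helper below is a hypothetical sketch of that mapping, assuming the alias shown above; it is not OHMG's own code.

```python
from pathlib import PurePosixPath


def media_url(file_path: str, media_root: str, media_host: str,
              location: str = "/uploaded") -> str:
    """Map a file under MEDIA_ROOT to the URL nginx serves it from.

    `location` mirrors the nginx `location /uploaded` block; it is a parameter
    because that name is a deployment choice (an assumption of this sketch).
    """
    # Raises ValueError if the file is not inside MEDIA_ROOT.
    relative = PurePosixPath(file_path).relative_to(media_root)
    return f"{media_host.rstrip('/')}{location.rstrip('/')}/{relative.as_posix()}"
```

For example, `media_url("/home/ohmg/uploaded/documents/sheet.tif", "/home/ohmg/uploaded", "http://localhost")` returns `http://localhost/uploaded/documents/sheet.tif`.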

![Alexandria, La, 1900, p1 [2]](https://github.com/roger120981/ohmg/raw/main/ohmg/frontend/static/img/example-resource-alex-1900.jpg)