Main project page.

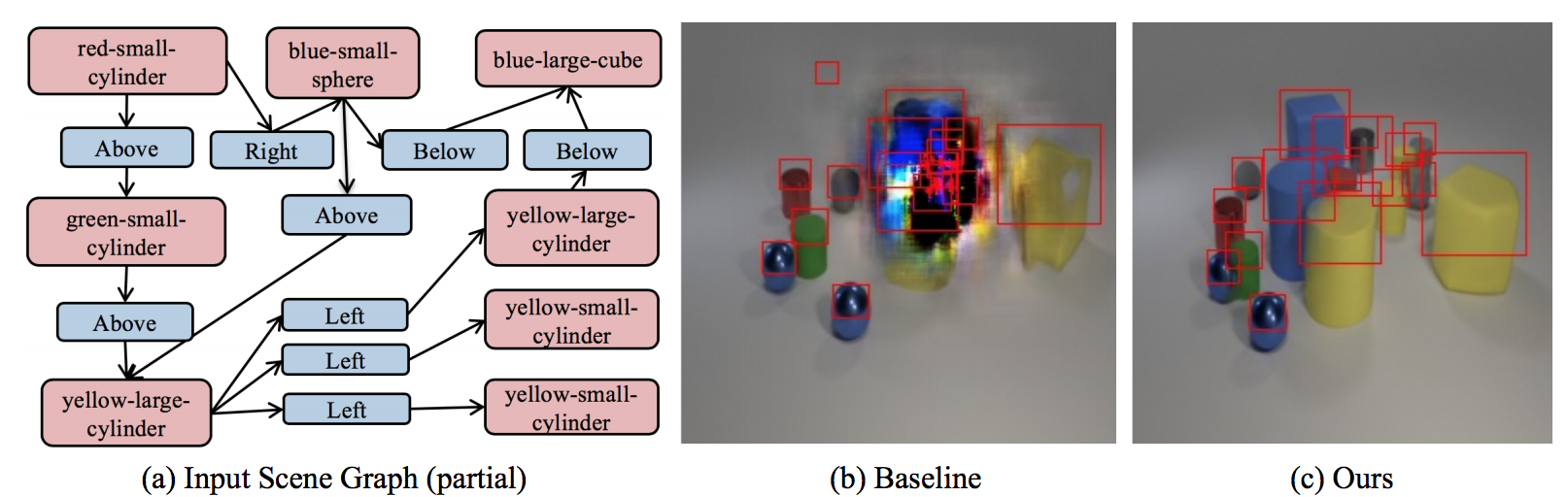

Generation of scenes with many objects. Our method achieves better performance on such scenes than previous methods. Left: A partial input scene graph. Middle: Generation using [1]. Right: Generation using our proposed method.

- We propose a model that uses canonical representations of SGs, thus obtaining stronger invariance properties. This in turn leads to generalization on semantically equivalent graphs and improved robustness to graph size and noise in comparison to existing methods.

- We show how to learn the canonicalization process from data.

- We use our canonical representations within an SG-to-image model and demonstrate our approach results in an improved generation on Visual Genome, COCO, and CLEVR, compared to the state-of-the-art baselines.

To get started with the framework, install the following dependencies:

- Python 3.7

pip install -r requirments.txt

Follow the commands below to build the data.

./scripts/download_coco.sh

./scripts/download_vg.sh

Please download the CLEVR-Dialog Dataset from here.

python -m scripts.train --dataset={packed_coco, packed_vg, packed_clevr}

Optional arguments:

--output_dir=output_path_dir/%s (s is the run_name param) --run_name=folder_name --checkpoint_every=N (default=5000) --dataroot=datasets_path --debug (a flag for debug)

Train on COCO (with boxes):

python -m scripts.train --dataset=coco --batch_size=16 --loader_num_workers=0 --skip_graph_model=0 --skip_generation=0 --image_size=256,256 --min_objects=1 --max_objects=1000 --gpu_ids=0 --use_cuda

Train on VG:

python -m scripts.train --dataset=vg --batch_size=16 --loader_num_workers=0 --skip_graph_model=0 --skip_generation=0 --image_size=256,256 --min_objects=3 --max_objects=30 --gpu_ids=0 --use_cuda

Train on CLEVR:

python -m scripts.train --dataset=packed_clevr --batch_size=6 --loader_num_workers=0 --skip_graph_model=0 --skip_generation=0 --image_size=256,256 --use_img_disc=1 --gpu_ids=0 --use_cuda

To produce layout outputs and IOU results, run:

python -m scripts.layout_generation --checkpoint=<trained_model_folder> --gpu_ids=<0/1/2>

A new folder with the results will be created in: <trained_model_folder>

Packed COCO: link

Packed Visual Genome: link

Please use LostGANs implementation

To produce the image from a dataframe, run:

python -m scripts.generation_dataframe --checkpoint=<trained_model_folder>

A new folder with the results will be created in: <trained_model_folder>

python -m scripts.generation_dataframe --gpu_ids=<0/1/2> --checkpoint=<model_path> --output_dir=<output_path> --data_frame=<dataframe_path> --mode=<gt/pred>

mode=gt defines use gt_boxes while mode=pred use predicted box by our WSGC model from the paper (see the dataframe for more details).

dataframe: link; 128x128 resolution: link; 256x256 resolution: link

dataframe: link; 128x128 resolution: link; 256x256 resolution: link

python -m scripts.generation_attspade --gpu_ids=<0/1/2> --checkpoint=<model/path> --output_dir=<output_path>

This script generates CLEVR images on large scene graphs from scene_graphs.pkl. It generates the CLEVR results for both WSGC + AttSPADE and Sg2Im + AttSPADE. For more information, please refer to the paper.

python -m scripts.generate_clevr --gpu_ids=<0/1/2> --layout_not_learned_checkpoint=<model_path> --layout_learned_checkpoint=<model_path> --output_dir=<output_path>

Baseline (Sg2Im): link; WSGC: link

- This implementation is built on top of [1]: https://github.com/google/sg2im.

[1] Justin Johnson, Agrim Gupta, Li Fei-Fei, Image Generation from Scene Graphs, 2018.

@inproceedings{herzig2019canonical,

author = {Herzig, Roei and Bar, Amir and Xu, Huijuan and Chechik, Gal and Darrell, Trevor and Globerson, Amir},

title = {Learning Canonical Representations for Scene Graph to Image Generation},

booktitle = {Proc. of the European Conf. on Computer Vision (ECCV)},

year = {2020}

}