
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Merge branch 'roboflow:main' into main


Showing 11 changed files with 82 additions and 20 deletions.



| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,23 @@ | ||

| [YOLOv10](https://github.com/THU-MIG/yolov10), released on May 23, 2024, is a real-time object detection model developed by researchers from Tsinghua University. YOLOv10 follows in the long-running series of YOLO models, which have been developed by a wide variety of researchers and organizations. | ||

|

|

||

| ## Supported Model Types | ||

|

|

||

| You can deploy the following YOLOv10 model types with Inference: | ||

|

|

||

| - Object Detection | ||

|

|

||

| ## Supported Inputs | ||

|

|

||

| Click a link below to see instructions on how to run a YOLOv10 model on different inputs: | ||

|

|

||

| - [Image](/quickstart/run_model_on_image/) | ||

| - [Video, Webcam, or RTSP Stream](/quickstart/run_model_on_rtsp_webcam/) | ||

|

|

||

| ## License | ||

|

|

||

| See our [Licensing Guide](/quickstart/licensing/) for more information about how your use of YOLOv10 is licensed when using Inference to deploy your model. | ||

|

|

||

| ## See Also | ||

|

|

||

| - [How to Train a YOLOv10 Model](https://blog.roboflow.com/yolov10-how-to-train/) | ||

| - [Deploy a YOLOv10 Model with Roboflow](https://blog.roboflow.com/deploy-yolov10-model/) |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,24 +1,24 @@ | ||

| # Inference Workflows | ||

|

|

||

| !!! note | ||

| ## What is a Workflow? | ||

|

|

||

| Workflows is an alpha product undergoing active development. Stay tuned for updates as we continue to | ||

| refine and enhance this feature. | ||

| Workflows allow you to define multi-step processes that run one or more models to return results based on model outputs and custom logic. | ||

|

|

||

| Inference Workflows allow you to define multi-step processes that run one or more models and return a result based on the output of the models. | ||

|

|

||

| With Inference workflows, you can: | ||

| With Workflows, you can: | ||

|

|

||

| - Detect, classify, and segment objects in images. | ||

| - Apply filters (e.g., process detections in a specific region, filter detections by confidence). | ||

| - Apply logic filters, such as establishing detection consensus or filtering detections by confidence. | ||

| - Use Large Multimodal Models (LMMs) to make determinations at any stage in a workflow. | ||

|

|

||

| You can build simple workflows in the Roboflow web interface that you can then deploy to your own device or the cloud using Inference. | ||

| <div class="button-holder"> | ||

| <a href="https://inference.roboflow.com/workflows/blocks/" class="button half-button">Explore all Workflows blocks</a> | ||

| <a href="https://app.roboflow.com/workflows" class="button half-button">Begin building with Workflows</a> | ||

| </div> | ||

|

|

||

| You can build more advanced workflows for use on your own devices by writing a workflow configuration directly in JSON. | ||

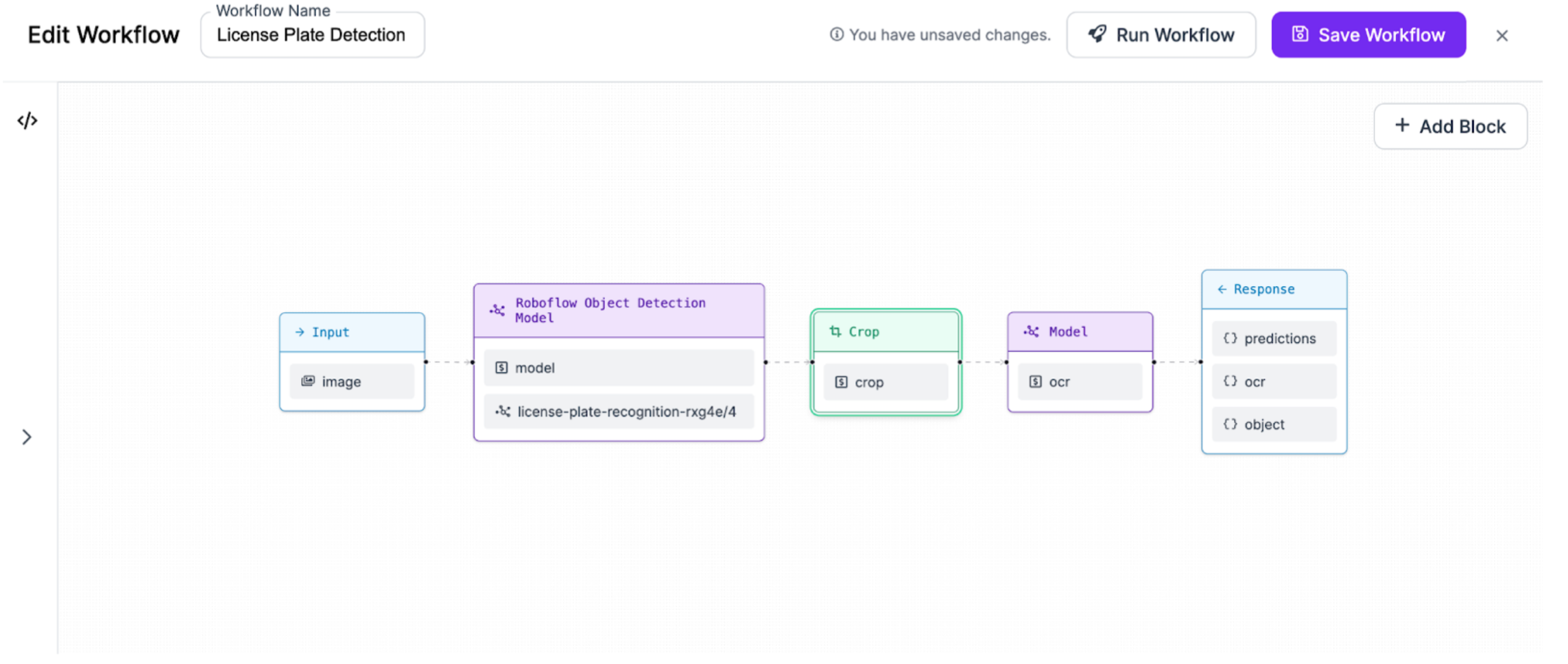

|  | ||

|

|

||

| In this section of documentation, we describe what you need to know to create workflows. | ||

| You can build and configure Workflows in the Roboflow web interface, then deploy them using the Roboflow Hosted API, self-host them locally or in the cloud using Inference, or run them offline on your own hardware devices. You can also build more advanced Workflows by writing a Workflow configuration directly in the JSON editor. | ||

|

|

||

| Here is an example structure for a workflow you can build with Inference Workflows: | ||

| In this section of documentation, we walk through what you need to know to create and run workflows. Let’s get started! | ||

|

|

||

|  | ||

| [Create and run a workflow.](/workflows/create_and_run/) |
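
The Workflows documentation above lists filtering detections by confidence as one of the logic steps a workflow can apply. As a rough illustration of that idea only, here is a plain-Python sketch. The `Detection` shape, the `filter_by_confidence` name, and the `0.5` default threshold are assumptions made for this example; none of them are part of the Inference or Workflows API.

```python
from typing import List, TypedDict


class Detection(TypedDict):
    """Hypothetical detection record; not the Inference response schema."""
    class_name: str
    confidence: float


def filter_by_confidence(
    detections: List[Detection], threshold: float = 0.5
) -> List[Detection]:
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


# Example input: two detections, one below the threshold.
detections: List[Detection] = [
    {"class_name": "car", "confidence": 0.91},
    {"class_name": "dog", "confidence": 0.32},
]
kept = filter_by_confidence(detections, threshold=0.5)
```

In an actual workflow this step would be declared in the Workflow configuration rather than written by hand; the sketch only shows the logic such a filter performs.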


This file was deleted.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,4 +1,4 @@ | ||

| __version__ = "0.12.0" | ||

| __version__ = "0.12.1" | ||

|

|

||

|

|

||

| if __name__ == "__main__": | ||

|

|

||


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -3,4 +3,4 @@ requests | |

| pytest | ||

| pillow | ||

| requests_toolbelt | ||

| numpy | ||

| numpy<=1.26.4 | ||
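
The last change replaces the unpinned `numpy` requirement with `numpy<=1.26.4`, which excludes NumPy 2.x releases. A minimal sketch of what that upper bound accepts and rejects is below. The helper names `parse_version` and `satisfies_pin` are hypothetical, and the parser only handles plain dotted numeric versions, not pre-release or development tags; pip itself applies the full PEP 440 rules.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a plain dotted release string such as '1.26.4' into (1, 26, 4)."""
    return tuple(int(part) for part in text.split("."))


def satisfies_pin(installed: str, upper_bound: str = "1.26.4") -> bool:
    """Return True if `installed` is at or below the pinned upper bound."""
    return parse_version(installed) <= parse_version(upper_bound)


# Check a few candidate versions against the pin from the requirements file.
for candidate in ("1.24.0", "1.26.4", "2.0.0"):
    print(candidate, satisfies_pin(candidate))
```

Tuple comparison is used so that `1.26.4` compares above `1.9.0`, which a plain string comparison would get wrong.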