In the next-frame prediction problem, we aim to generate the subsequent frame of a given video. Video inherently carries two kinds of information: spatial (the content of each image) and temporal (how that content changes over time). The Convolutional LSTM model can extract and process both kinds of information, drawing on the inductive biases of convolution for the spatial structure and of recurrence for the temporal structure. In a Convolutional LSTM, the fully connected transformations inside the LSTM cell are replaced with convolution operations. To evaluate the model, the Moving MNIST dataset is used.
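To make the "convolutions instead of fully connected layers" idea concrete, here is a minimal NumPy sketch of a single ConvLSTM cell. It is not the project's implementation. The names `conv2d_same` and `ConvLSTMCell` are hypothetical, the four gates come from one convolution over the concatenated input and previous hidden state, and the peephole terms of the original ConvLSTM formulation are omitted, as many implementations do.

```python
import numpy as np


def conv2d_same(x, w, b):
    """Zero-padded 'same' 2-D convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k; b: (C_out,). Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    _, height, width = x.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c_out, height, width))
    for i in range(k):
        for j in range(k):
            # Shifted view of the input for kernel offset (i, j), mixed across input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + height, j:j + width])
    return out + b[:, None, None]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class ConvLSTMCell:
    """Hypothetical ConvLSTM cell without peephole connections.

    i, f, o = sigmoid(W conv [x_t, h_{t-1}] + b);  g = tanh(W_g conv [x_t, h_{t-1}] + b_g)
    c_t = f * c_{t-1} + i * g;  h_t = o * tanh(c_t)   (products on states are element-wise)
    """

    def __init__(self, in_channels, hidden_channels, kernel_size=3, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden_channels = hidden_channels
        # A single convolution over [x_t, h_{t-1}] yields all four gate pre-activations.
        self.w = rng.normal(0.0, 0.1, size=(4 * hidden_channels, in_channels + hidden_channels,
                                            kernel_size, kernel_size))
        self.b = np.zeros(4 * hidden_channels)

    def init_state(self, height, width):
        # Hidden and cell states keep the spatial layout of the frame: (C_hidden, H, W).
        return (np.zeros((self.hidden_channels, height, width)),
                np.zeros((self.hidden_channels, height, width)))

    def step(self, x, state):
        h_prev, c_prev = state
        z = conv2d_same(np.concatenate([x, h_prev], axis=0), self.w, self.b)
        z_i, z_f, z_o, z_g = np.split(z, 4, axis=0)
        i, f, o, g = sigmoid(z_i), sigmoid(z_f), sigmoid(z_o), np.tanh(z_g)
        c = f * c_prev + i * g          # element-wise, so every pixel keeps its own memory
        h = o * np.tanh(c)
        return h, (h, c)


# Usage: run the cell over a random 20-frame, 1-channel, 64x64 sequence.
cell = ConvLSTMCell(in_channels=1, hidden_channels=8)
state = cell.init_state(64, 64)
frames = np.random.default_rng(1).random((20, 1, 64, 64))
for frame in frames:
    h, state = cell.step(frame, state)
print(h.shape)  # (8, 64, 64)
```

Because the gates are convolutions, each hidden-state pixel depends only on a local neighbourhood of the input and previous state, which is the spatial inductive bias the paragraph refers to. To actually predict a frame, the 8-channel hidden state would still need a readout layer back to one image channel (for example, a 1x1 convolution); that choice is an assumption here.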

Dive into this link to explore the next-frame prediction implementation.

The table below reports the model's performance on the test set.

| Test Metric | Score |
|---|---|
| Loss | 0.006 |
| MAE | 0.021 |
| PSNR (dB) | 22.120 |
| SSIM | 0.881 |
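The image metrics in the table can be computed per frame roughly as in the NumPy sketch below. It rests on assumptions not stated in this section: pixel values lie in [0, 1] (so the PSNR peak value is 1), each metric is averaged over frames, and SSIM uses a 7x7 uniform window with the usual constants K1 = 0.01 and K2 = 0.03 instead of the 11x11 Gaussian window (sigma 1.5) of the original SSIM paper, so its values may differ slightly from library implementations. The section does not say what the "Loss" row measures, so it is not reproduced. All function names are hypothetical.

```python
import numpy as np


def mae(pred, target):
    """Mean absolute error over all pixels."""
    return float(np.mean(np.abs(pred - target)))


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


def _box_filter(img, win):
    """Mean over every fully contained win x win window, via an integral image."""
    s = np.pad(img, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    total = s[win:, win:] - s[:-win, win:] - s[win:, :-win] + s[:-win, :-win]
    return total / (win * win)


def ssim(pred, target, max_val=1.0, win=7):
    """Mean SSIM of two 2-D images using a uniform (box) window."""
    c1 = (0.01 * max_val) ** 2
    c2 = (0.03 * max_val) ** 2
    x = pred.astype(np.float64)
    y = target.astype(np.float64)
    mu_x, mu_y = _box_filter(x, win), _box_filter(y, win)
    var_x = _box_filter(x * x, win) - mu_x ** 2
    var_y = _box_filter(y * y, win) - mu_y ** 2
    cov = _box_filter(x * y, win) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(np.mean(num / den))


def evaluate(preds, targets):
    """Average each metric over frames; preds and targets have shape (N, H, W)."""
    pairs = list(zip(preds, targets))
    return {
        "MAE": float(np.mean([mae(p, t) for p, t in pairs])),
        "PSNR": float(np.mean([psnr(p, t) for p, t in pairs])),
        "SSIM": float(np.mean([ssim(p, t) for p, t in pairs])),
    }


# Usage: score slightly noisy copies of 19 random 64x64 "frames".
rng = np.random.default_rng(0)
targets = rng.random((19, 64, 64))
preds = np.clip(targets + rng.normal(0.0, 0.05, targets.shape), 0.0, 1.0)
print(evaluate(preds, targets))
```

Averaging PSNR frame by frame generally gives a different number than computing PSNR once from the average MSE, so the aggregation order matters when comparing against reported scores.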

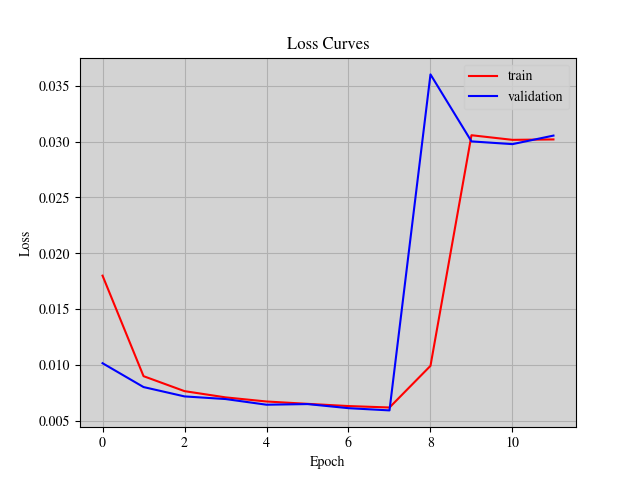

The loss curve on the training and validation sets of the Convolutional LSTM model.

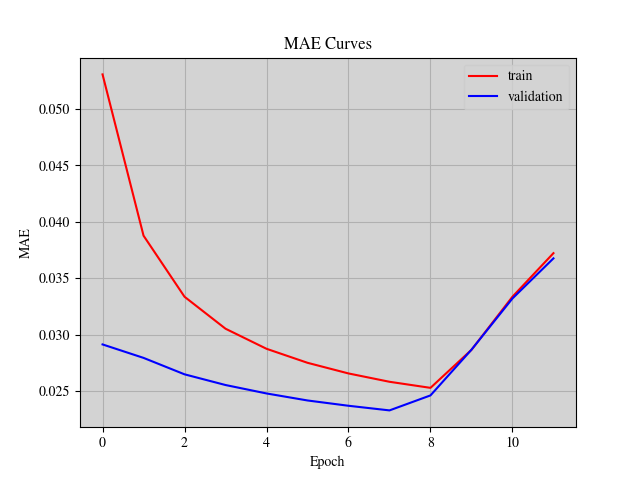

The MAE curve on the training and validation sets of the Convolutional LSTM model.

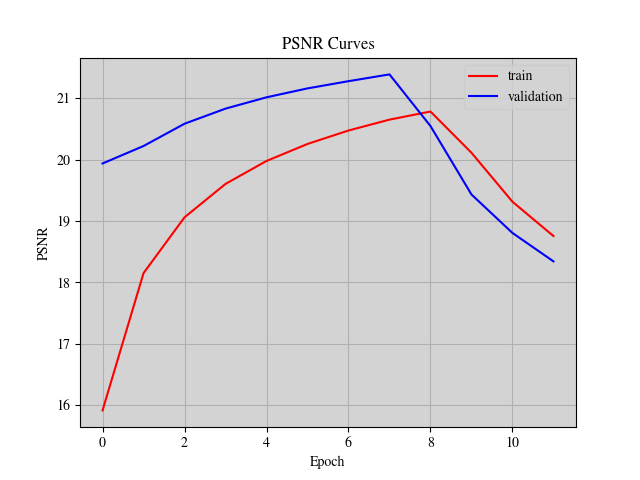

The PSNR curve on the training and validation sets of the Convolutional LSTM model.

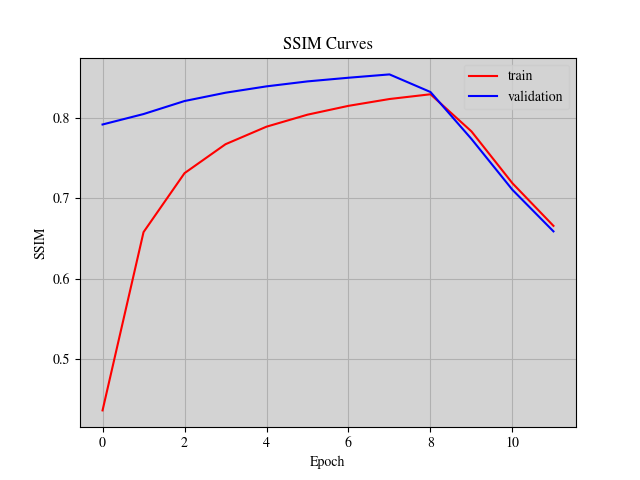

The SSIM curve on the training and validation sets of the Convolutional LSTM model.

This GIF displays the qualitative result of the frame-by-frame prediction of the Convolutional LSTM model.

The Convolutional LSTM model predicts each ensuing frame, one frame at a time, from t = 1 to t = 19.
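Assuming 0-indexed 20-frame Moving MNIST sequences, predicting t = 1 through t = 19 means that each step consumes frame t - 1 and emits a guess for frame t. The section does not say whether the model receives the ground-truth previous frame (teacher forcing) or its own previous prediction, so the hypothetical `rollout` helper below supports both. The `step` interface and the copy-last-frame baseline are illustrative stand-ins, not the project's model.

```python
import numpy as np


def rollout(step, init_state, frames, teacher_forcing=True):
    """Frame-by-frame prediction over a sequence of shape (T, C, H, W).

    step: hypothetical callable (frame, state) -> (predicted_next_frame, new_state).
    Returns predictions of shape (T - 1, C, H, W), aligned with frames[1:].
    """
    state = init_state
    preds = []
    x = frames[0]
    for t in range(frames.shape[0] - 1):
        pred, state = step(x, state)  # guess for frame t + 1
        preds.append(pred)
        # Next input: the true frame t + 1 (teacher forcing) or the model's own guess.
        x = frames[t + 1] if teacher_forcing else pred
    return np.stack(preds)


def copy_last_frame(frame, state):
    """Hypothetical baseline 'model': predicts that the next frame equals the current one."""
    return frame, state


seq = np.random.default_rng(0).random((20, 1, 64, 64))
preds_tf = rollout(copy_last_frame, None, seq, teacher_forcing=True)
preds_free = rollout(copy_last_frame, None, seq, teacher_forcing=False)
print(preds_tf.shape)  # (19, 1, 64, 64)
```

Comparing `preds_tf` or `preds_free` against `seq[1:]` yields per-frame scores for t = 1 to t = 19. A copy-last-frame baseline like this one is also a useful sanity check: a trained model should beat it on every metric.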

- Next-Frame Video Prediction with Convolutional LSTMs

- Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting

- On the difficulty of training Recurrent Neural Networks

- Statistical Language Models Based on Neural Networks

- Unsupervised Learning of Video Representations using LSTMs

- Moving MNIST

- Long Short-Term Memory

- Long Short-Term Memory Based Recurrent Neural Network Architectures for Large Vocabulary Speech Recognition

- Forked Torchvision by Henry Xia

- PyTorch Lightning