Back in 2015, I attended PyCon in Montreal, Canada. It was awesome. There were lots of stalls from big names, and some offered interesting programming challenges. Thumbtack was among them.

To quote Thumbtack Engineering,

When I was little, my family went to our town’s district math night. We came back with a game that we still play as a family. The game is called Inspiration. It’s played with a normal deck of cards, with the picture cards taken out. Everyone gets four cards and one card is turned face up for everyone to see. You then have to mathematically combine your four cards with addition, subtraction, multiplication, and division to get the center card. The person who does it the fastest wins.

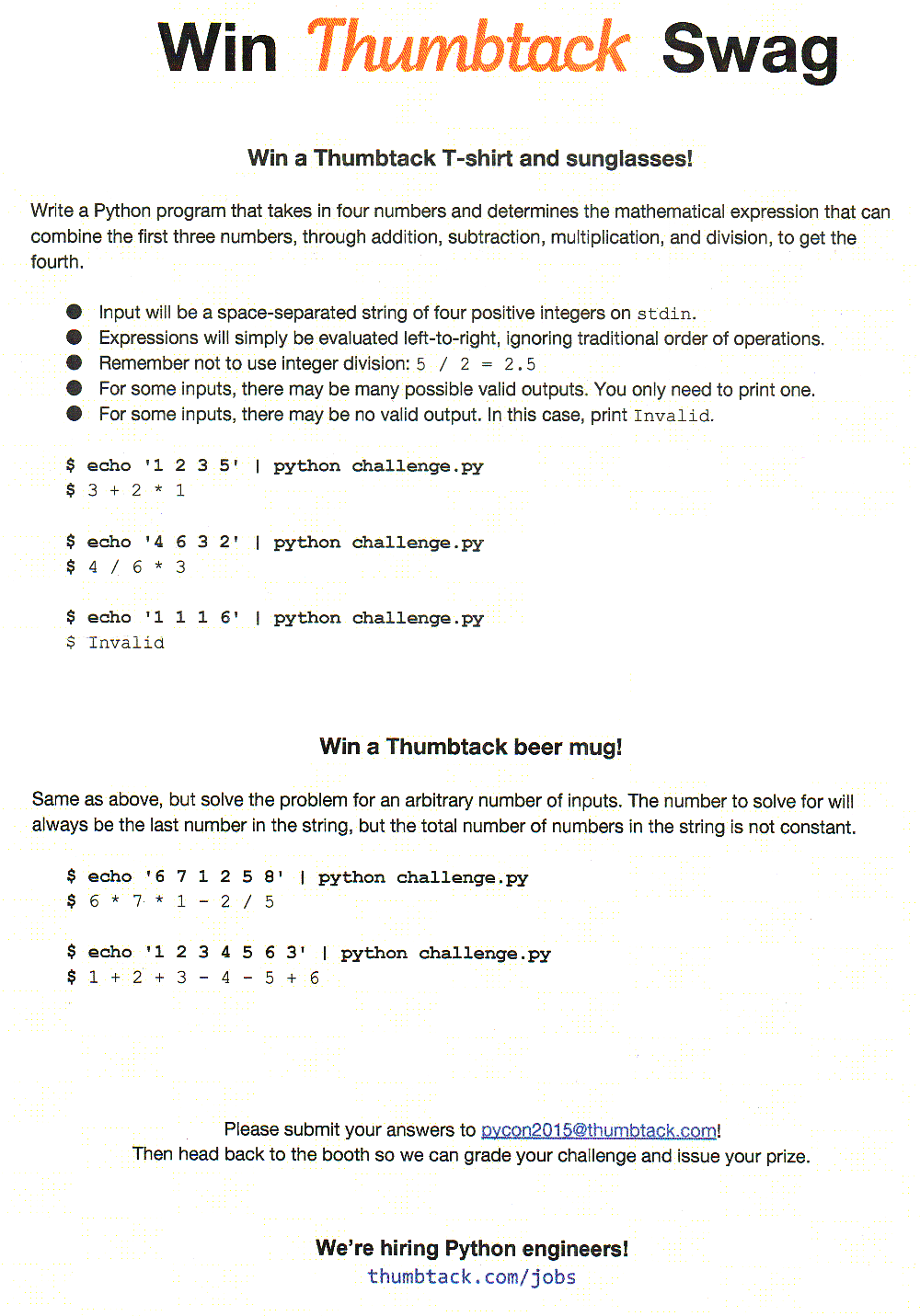

This year, our challenge was inspired by Inspiration, no pun intended. The first part asked people to write a Python program that takes in four numbers and determines the mathematical expression that can combine the first three numbers to get the fourth. If they could solve this, they were awarded a t-shirt and sunglasses. The harder challenge was to solve the same problem, but with an arbitrary number of inputs. The number to solve for was always the last number in the string, but the total number of operands was not constant. These solvers won the coveted Thumbtack beer glass.

Other than on Thumbtack's own blog, discussion and solutions (other than a dead link here and there) seem to be unfortunately non-existent. This kind of problem is dangerous for me because it's an ideal nerd snipe: simple on its face, but with lots of potential for complexity given any cursory thought. And snipe it did: I've been occasionally thinking on and off about this for two years and counting.

Stated more formally, let there be n integers xi on the left-hand side of an equation, and an integer y on the right-hand side of that equation, where

n ≥ 2

0 ≤ i < n

1 ≤ xi ≤ 9

1 ≤ y ≤ 9

A strict interpretation would enforce a maximum count of four cards (suits) per card value, but that criterion is disregarded for the purposes of this discussion.

A strict interpretation would also impose an upper bound on n. Since there are 4 suits, 9 cards per suit, a minimum of 2 players, and n cards per player plus one shared y card, the upper bound for n is:

2 ≤ n ≤ (4 * 9 - 1)/2

2 ≤ n ≤ 17

There are n - 1 operators ƒi between the elements of xi and xi + 1 . Each ƒ is a binary arithmetic operator with conventional associativity and commutativity but non-conventional order of operations. The equation is assumed to be evaluated in a flat, left-to-right manner, that is,

x0 [ƒ0] x1 [ƒ1] x2 [ƒ2] ... [ƒn - 2] xn - 1= y

ƒn - 2( ... ƒ1(ƒ0(x0, x1), x2) ... xn - 1) = y

Each ƒ may be any of:

ƒ(a, b) ∈ { a + b, a - b, a * b, a / b }

The goal is to permute x and choose each ƒ to satisfy the equation. Where there are multiple solutions, the first one found is taken and the rest are discarded.

The cardinality of the ƒ function set is:

|ƒ| = 4

Assuming that the entries of x are (in the worst case) unique, the number of permutations of x is n! .

The size N of the (naïve) search space is then

N = 4n - 1 ( n ! )

For given x and y the solution is not necessarily unique, nor is there necessarily a solution. By example, there is no solution for

x = (1, 1), y = 9

By contrast, given these inputs:

x = (1, 2, 3), y = 4

There are 5 solutions out of a search space for which N = 96 :

2 - 1 + 3 = 4

2 + 3 - 1 = 4

3 - 1 + 2 = 4

3 - 1 * 2 = 4

3 + 2 - 1 = 4

Some inputs are thus more difficult than others, difficulty being measured by both the number of solutions in the problem space as well as (more importantly) the number of candidates assessed before the first solution is found.

For example, evaluating all of the possible inputs where n = 4, N = 1536; and using the naïve algorithm described later, the problem difficulty is heavily dependent on which inputs are chosen. For the following inputs, a solution is found after only 7 iterations. There are 480 solutions (without discarding non-unique solutions):

x = (1, 1, 1, 1), y = 1

1 + 1 - 1 * 1 = 1

...

However, the following inputs only produce a solution after 1309 iterations, exhausting 85% of the search space; and there are only two solutions:

x = (2, 1, 5, 9), y = 9

9 - 1 / 2 + 5 = 9

9 - 5 * 2 + 1 = 9

The naïve (or brute-force) algorithm is the easiest to implement but certainly not the most efficient. It was the most popular (perhaps only?) algorithm seen in use at PyCon. In pseudocode it looks like:

for each of n! permutations of x:

for each of the 4^(n-1) combinations of ƒ:

evaluate LHS

if LHS == y:

print solution

exit

A brief implementation of this algorithm (indeed, briefer than what I submitted in 2015) is:

#!/usr/bin/env python3

import functools, itertools, operator as opr, re, sys

operators = tuple(zip((opr.add, opr.sub, opr.mul, opr.truediv), '+-*/'))

inputs = [int(i) for i in re.findall('\S+', sys.stdin.readline())]

for perm in itertools.permutations(inputs[:-1]):

for ops in itertools.product(operators, repeat=len(perm)-1):

funcs = tuple(zip(ops, perm[1:]))

if inputs[-1] == functools.reduce(lambda n, fun: fun[0][0](n, fun[1]), funcs, perm[0]):

print(perm[0], ' '.join('%s %d' % (o[1], n) for o, n in funcs))

sys.exit()

print('Invalid')There are surely ways to code-golf this down even further, but this implementation is non-magical - it simply uses:

- itertools.permutations to search through all x;

- itertools.product (as in, the Cartesian product) with repeat set to n - 1 to search through all ƒ; and

- functools.reduce to evaluate the result.

This brute-force method can be very slow: since the search through x is O(n!), the search through ƒ is O(4n - 1), and the evaluation of the LHS is O(n), the overall worst-case runtime is O(n! 4n - 1 n) .

Whenever a new ƒ is attempted in the naïve algorithm, it takes O(n) time to re-evaluate the LHS in order to compare it to y on the RHS - but this O(n) factor can be reduced.

A recursive function can be used that, either from left to right or right to left, iterates through the possibilities for ƒi at four times the speed of ƒi - 1. Even though it is more complicated than calling itertools.product, it offers speedup by reducing evaluation redundancy. This is achieved on every recursion at depth i, reusing the prior value computed at upper depths 0 through i - 1.

As with the recursive-ƒ algorithm, the O(n) factor can be reduced by eliminating some redundant re-evaluation of sections of the LHS. In this case, we attempt this by ensuring that only one ƒi changes at a time, reducing this factor to O(1).

Gray code is a method of incrementing numbers such that only one digit changes at a time, but all possible values are still visited. For instance, for a 3-bit binary integer:

0 0 0

0 0 1

0 1 1

0 1 0

1 1 0

1 1 1

1 0 1

1 0 0

ƒ can be modelled as a radix-4 integer with n - 1 digits, where each digit represents one operator, and the integer increments from 0 through 4n - 1 - 1. There is benefit to having it increment by (4,n)-Gray code instead of linearly. Whenever an ƒi changes, the computed value from ƒ0 through ƒi - 1 can stay the same; and the computed composite function equivalent to ƒi + 1 through ƒn - 2 can also stay the same.

For n = 4 and a single permutation of x, the search through all ƒ would look like:

+ + +

+ + -

+ + *

+ + /

+ - /

+ - +

+ - -

+ - *

+ * *

+ * /

+ * +

+ * -

...

This optimization will have the greatest effect when the player has a large hand of up to 17 cards.

Similar in spirit to the Gray code approach, there is benefit to reducing the number of changed elements between permutations of x. Heap's permutation algorithm is designed specifically for this purpose. It guarantees that iteration through all permutations only does a single swap of two elements at a time. The canonical implementation of the algorithm swaps most frequently at the beginning of the set, but a simple index reversal allows swaps to occur most frequently at the end, allowing more of the computed portion of the LHS to be saved from iteration to iteration.

For n = 4, disregarding ƒ, the search through x would look like:

(0, 1, 2, 3)

(0, 1, 3, 2)

(0, 2, 3, 1)

(0, 2, 1, 3)

(0, 3, 1, 2)

(0, 3, 2, 1)

(1, 3, 2, 0)

(1, 3, 0, 2)

(1, 2, 0, 3)

(1, 2, 3, 0)

(1, 0, 3, 2)

(1, 0, 2, 3)

...

It would be interesting to try combined Gray-Heap iteration using the above methods. This would produce a series of LHS candidates looking like:

1 + 2 + 3 + 4

1 + 2 + 3 - 4

1 + 2 + 3 * 4

1 + 2 + 3 / 4

1 + 2 - 3 / 4

...

4 - 3 + 2 - 1

4 - 3 + 2 * 1

4 - 3 + 2 / 1

4 - 3 + 2 + 1

4 - 3 + 1 + 2

4 - 3 + 1 - 2

4 - 3 + 1 * 2

4 - 3 + 1 / 2

...

4 + 1 / 2 - 3

4 + 1 + 2 - 3

4 + 1 + 2 * 3

4 + 1 + 2 / 3

4 + 1 + 2 + 3

todo.

The equation with the maximal intermediate value is where n takes its maximum of 17, x and y take their maxima of 9, and only multiplication and division are used:

9^8 / 9^8 * 9 = 9

The maximal intermediate value then requires an integer width of:

ceil( 8*ln(9)/ln(2) ) = 26 bits

If using integer math with a fraction, 32-bit signed integers will suffice.

Single-precision (32-bit) floating-point has a 24-bit significand. As such, it would suffice for n ≤ 15, since 97 < 224. Above that, it is insufficient for the worst edge cases, and double (64-bit) floating-point would be required instead.

The naïve algorithm is easily parallelized (and is, perhaps, even one of Moler's embarassingly parallel problems). The simplest method is to perform the permutation in a parent thread and assign subdivided ƒ search space to m children, such that all children have the same set of inputs in the same order but attempt different combinations for ƒ. Each child will have a search space size of 4n - 1/m . Whichever child finds the first solution returns it to the parent, the parent cancels all children and completes execution. In pseudocode,

for each of n! permutations of x:

fork m children each searching 4^(n-1) / m combinations of ƒ

join on any child completion

if a child found a solution:

cancel other children

print solution

exit

join on all remaining children

A more carefully optimized solution would be to use SIMD on an appropriate processor architecture - either on a CPU or a GPU.

Contemporary Intel CPUs offer up to 28 cores, 2 semi-parallel "hyper-threads" per core, and 512-bit-wide vectorized math. In 512-bit single-precision vectorized mode, 16 values can be operated upon at once. This all allows a theoretical parallel speedup factor of up to 896.

Using GPGPU can offer even greater parallel speedup. For example, nVidia offers GPUs with core counts well in excess of 4,000 before taking other parallelism into account. There are various Python bindings for OpenCL able to target this hardware.

Using a SIMD scheme, there would be two levels of parallelism: core/thread children, and vectorized elements within each of those cores. Within a single core, each element of the vectorized operation would have to have a different set of x inputs but the same ƒ operations. Division of the x search space would then need to occur across SIMD elements, with all elements in the core using the same ƒ set. The top-level parent would divide the ƒ search space across cores.