The aim of this repository is to make it easy to deploy open-source LLMs in OpenShift or Kubernetes clusters using GitOps:

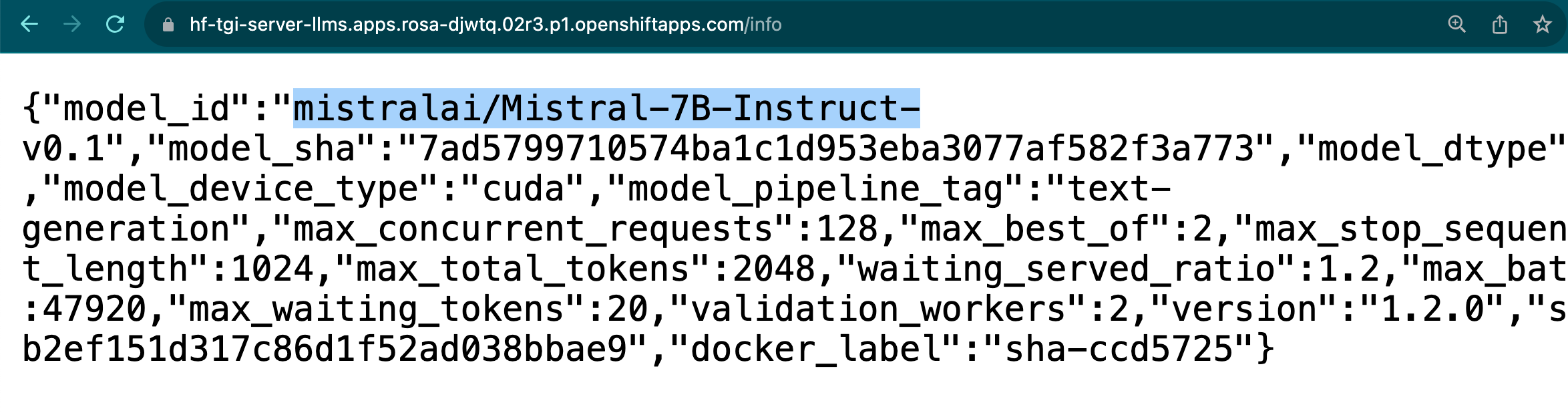

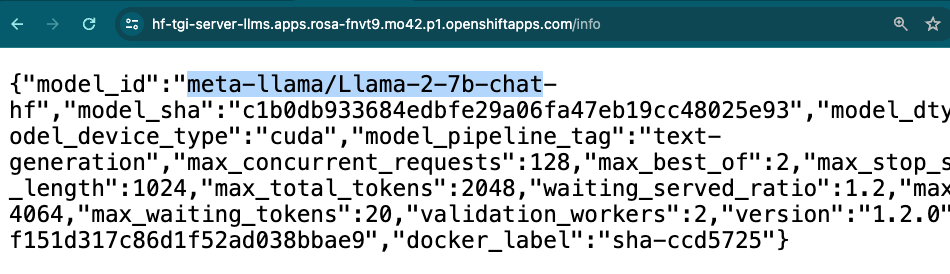

Text Generation Inference (TGI) is a toolkit for deploying and serving Large Language Models (LLMs). TGI enables high-performance text generation for the most popular open-source LLMs, including Llama, Falcon, StarCoder, BLOOM, GPT-NeoX, and T5.
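TGI serves models over a plain HTTP API. The functions below are a minimal sketch, not part of this repo, of how a client can send a prompt to TGI's `/generate` endpoint with `curl`. `TGI_URL`, `tgi_payload` and `tgi_generate` are hypothetical names. The sketch assumes the prompt contains no characters that need JSON escaping (double quotes, backslashes, newlines).

```shell
#!/usr/bin/env bash
# Sketch: query a TGI server's /generate endpoint with curl.
# TGI_URL is a hypothetical variable pointing at your TGI Route or Service.

# Build the JSON body TGI expects: the prompt goes in "inputs",
# generation settings go in "parameters".
# Assumption: the prompt needs no JSON escaping (no quotes, backslashes, newlines).
tgi_payload() {
  local prompt=$1 max_new_tokens=${2:-20}
  printf '{"inputs":"%s","parameters":{"max_new_tokens":%d}}' \
    "$prompt" "$max_new_tokens"
}

# POST the payload; TGI replies with JSON such as {"generated_text": "..."}.
tgi_generate() {
  if [ -z "${TGI_URL:-}" ]; then
    echo "set TGI_URL to your TGI endpoint" >&2
    return 1
  fi
  curl -sS "${TGI_URL%/}/generate" \
    -X POST \
    -H 'Content-Type: application/json' \
    -d "$(tgi_payload "$@")"
}
```

For example, with TGI reachable at an assumed address of `http://localhost:8080`, run `TGI_URL=http://localhost:8080 tgi_generate "What is Deep Learning?" 50`.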

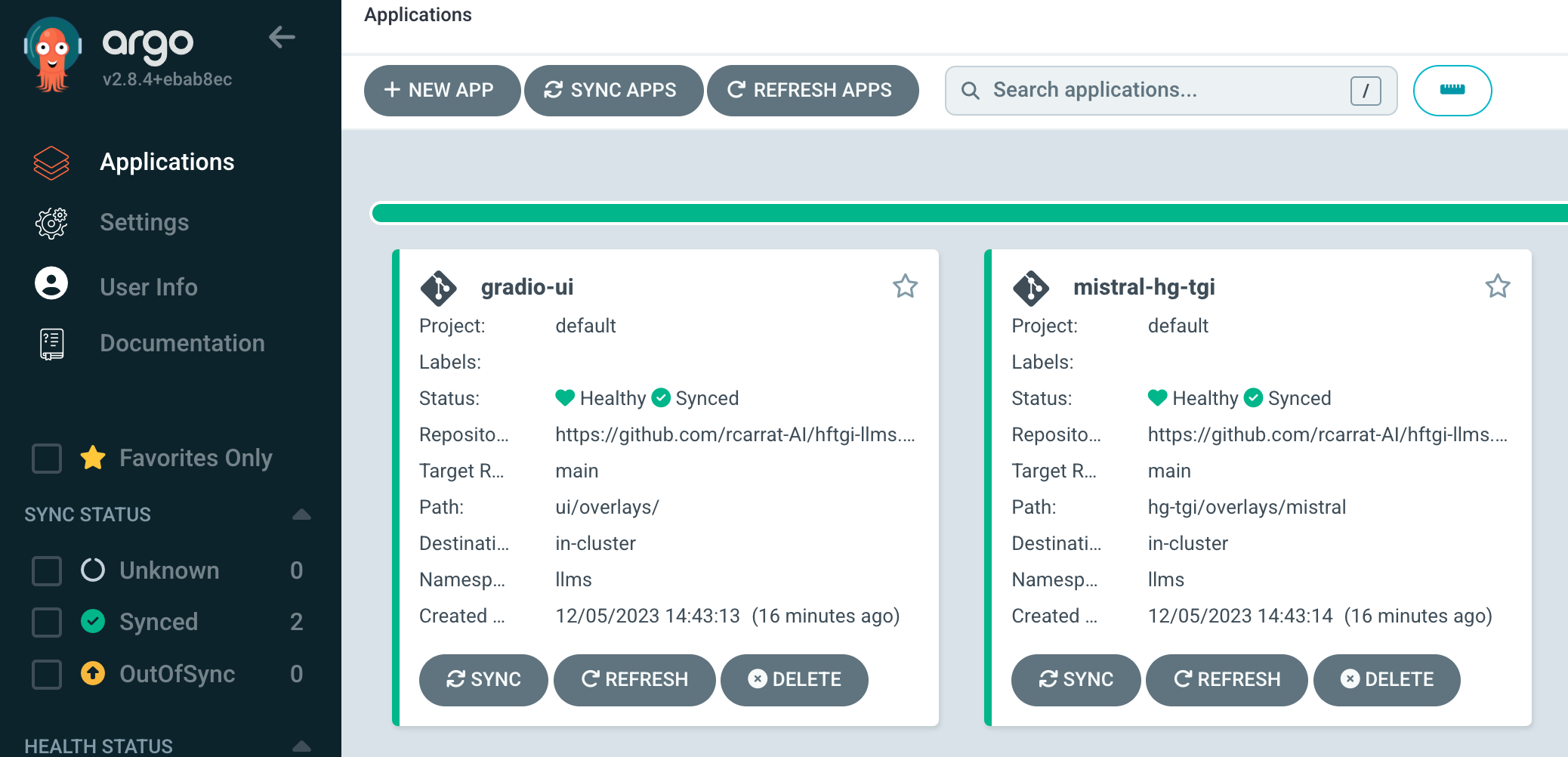

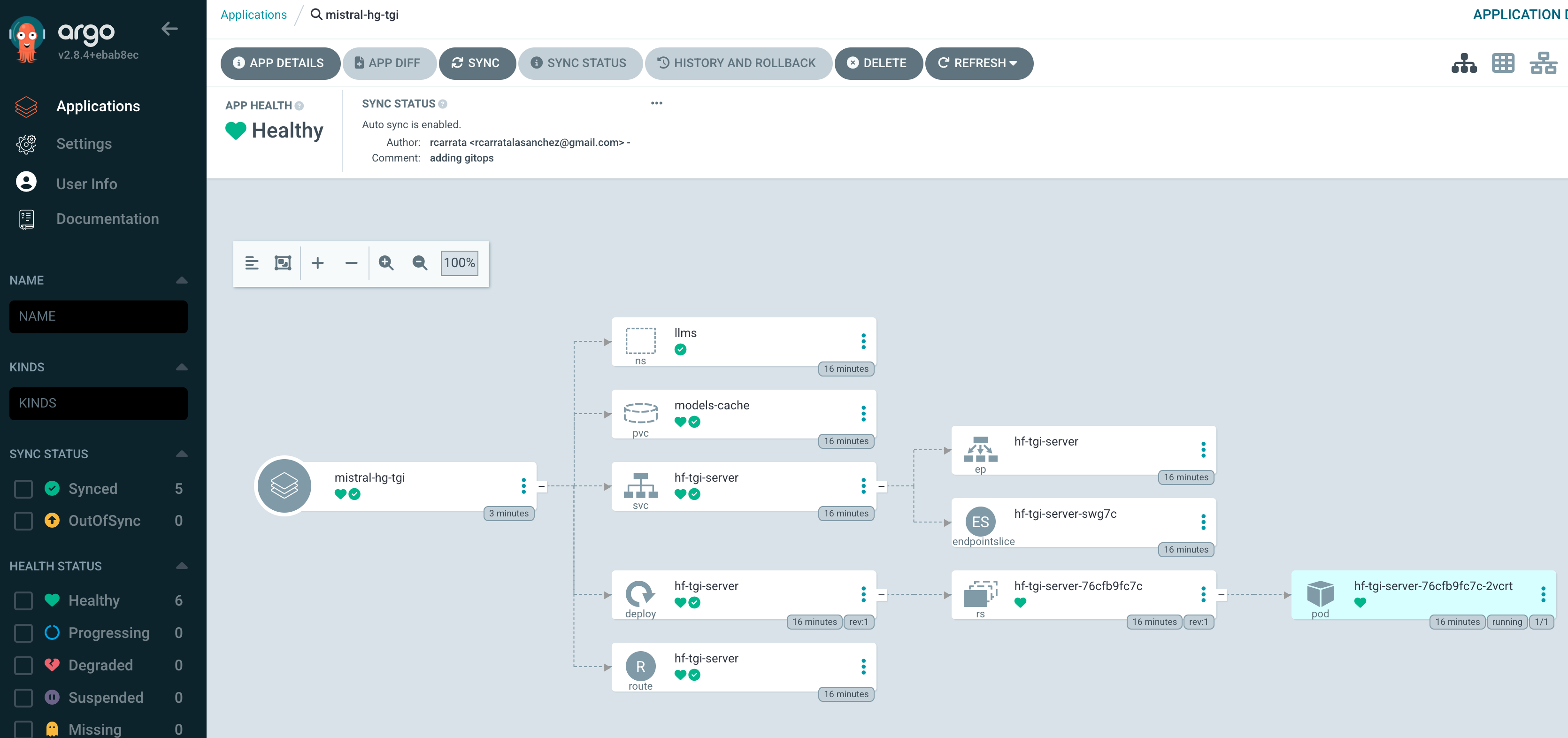

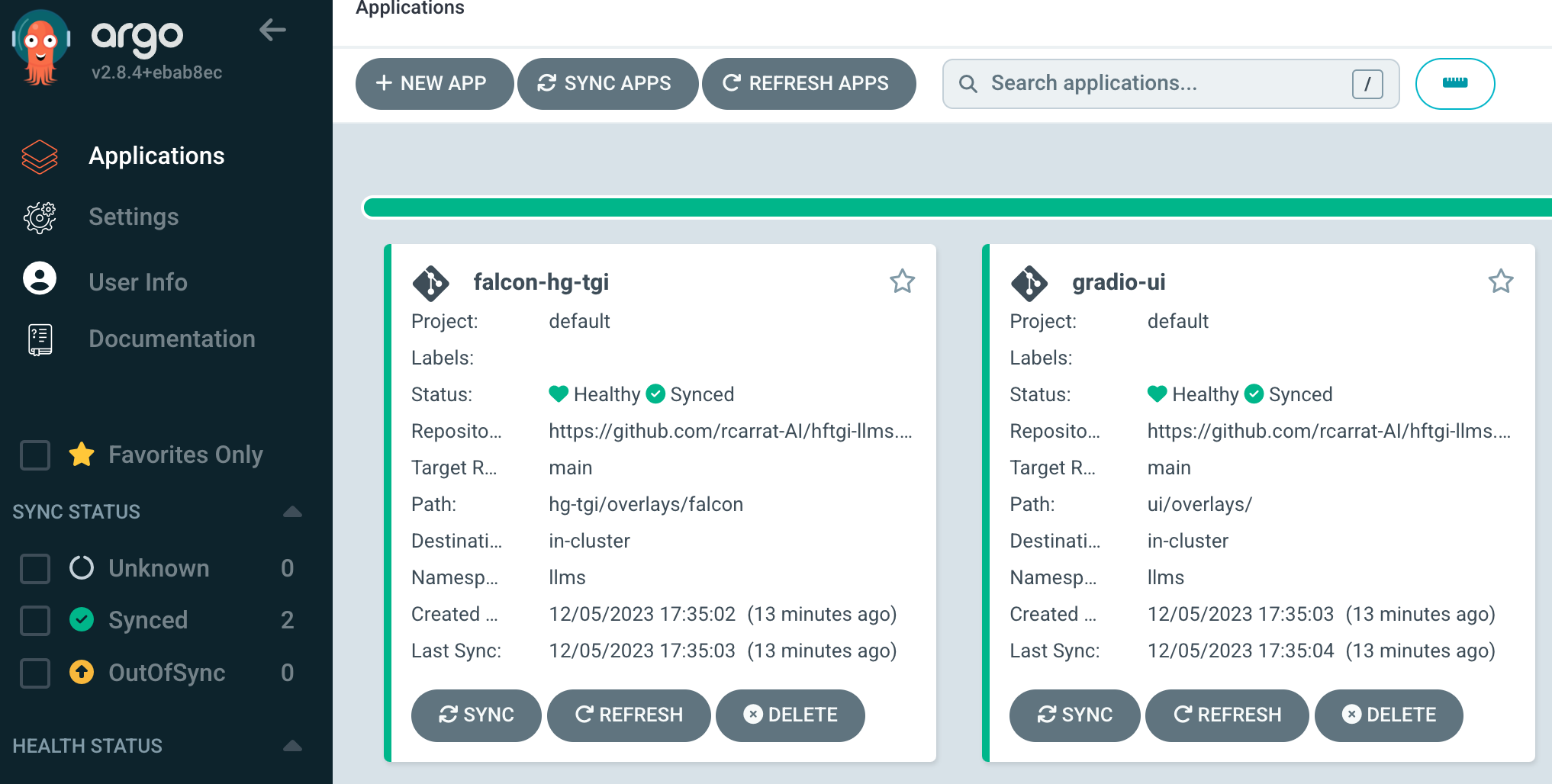

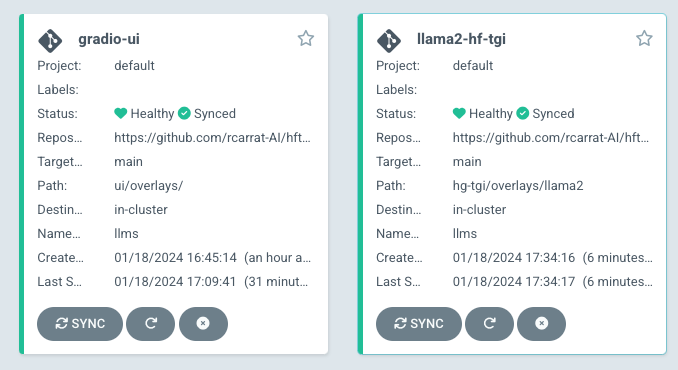

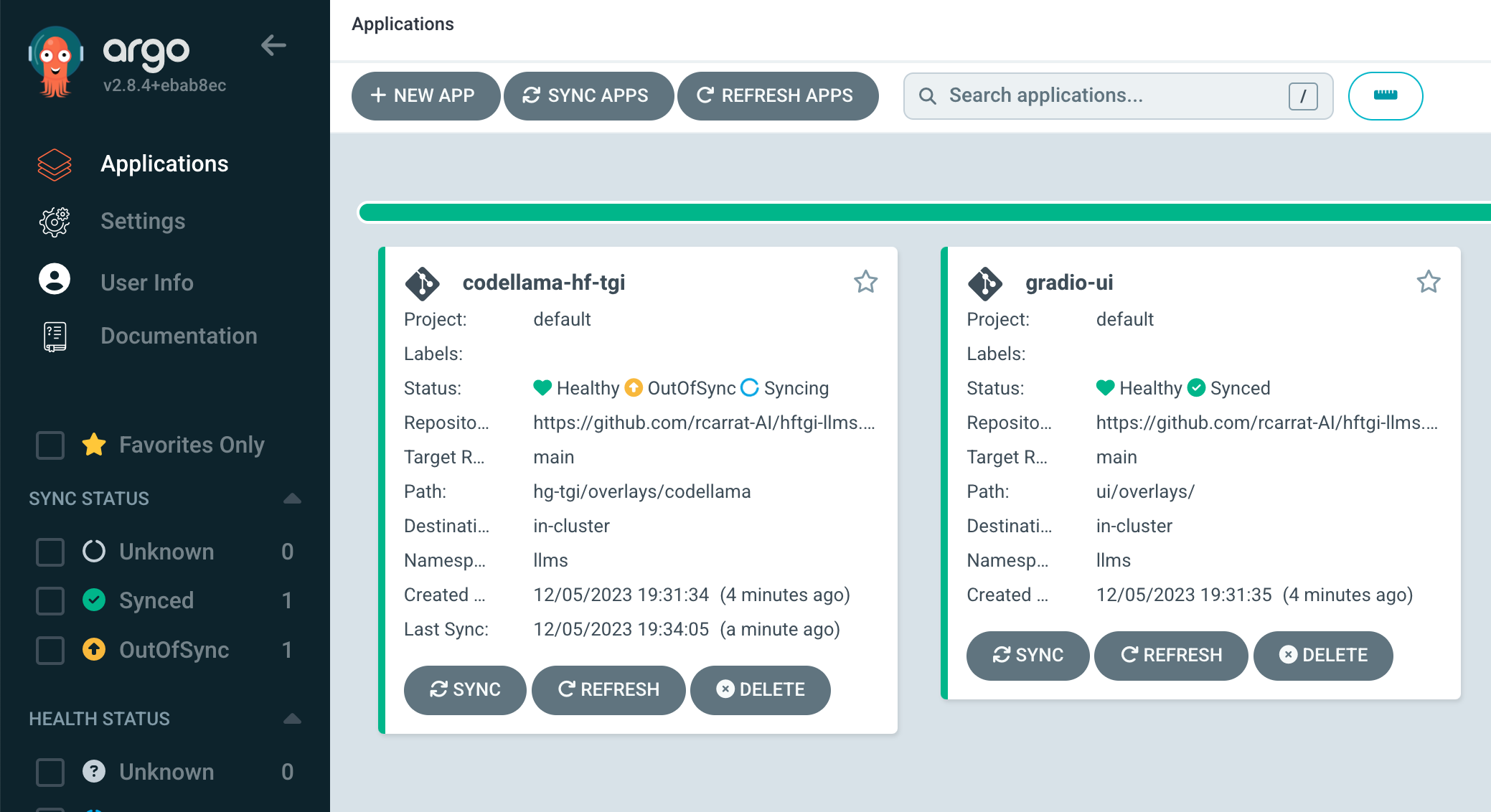

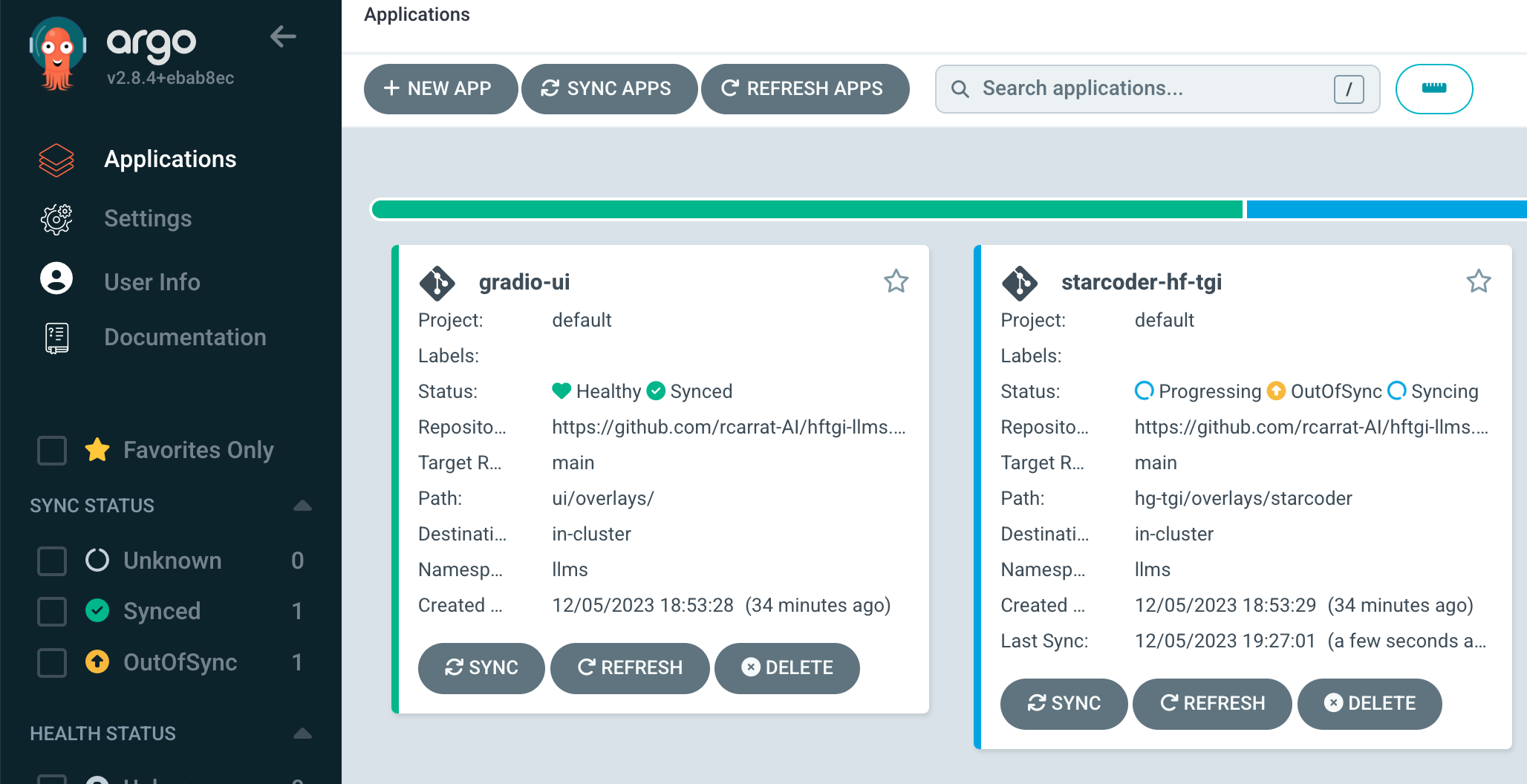

This repo deploys Hugging Face Text Generation Inference (TGI) servers in Kubernetes/OpenShift with GitOps:

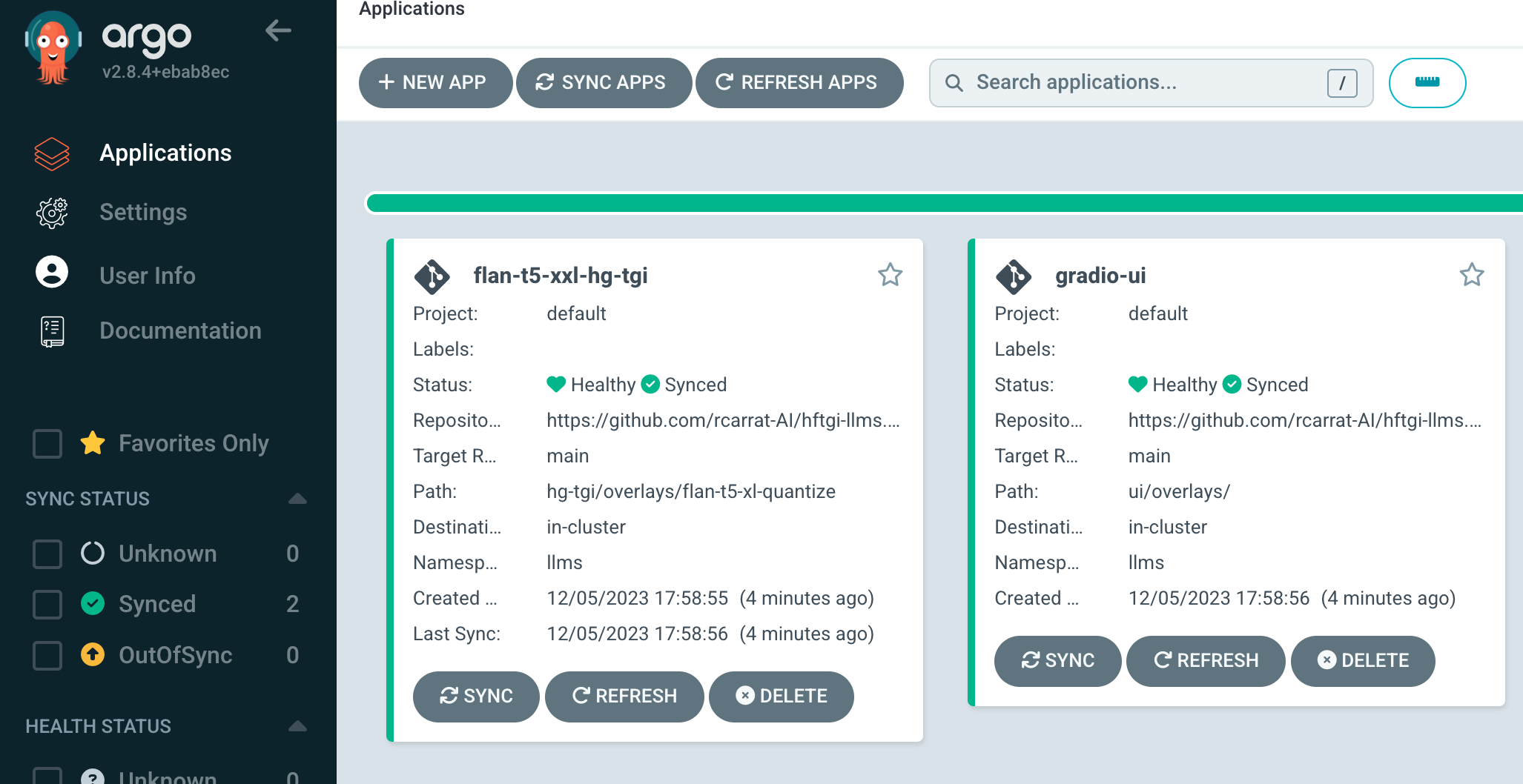

With this, we can easily deploy different open-source LLMs, such as Llama2, Falcon, Mistral or FlanT5-XL, in our OpenShift / Kubernetes clusters and consume them like any other application.

Requirements:

- ROSA or OpenShift clusters (can also be deployed in Kubernetes with some tweaks)

- A GPU (24 GB of vRAM recommended)

- Node Feature Discovery Operator

- NVIDIA GPU Operator

- ArgoCD / OpenShift GitOps
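Some of the requirements listed above can be checked from the command line before deploying anything. The following is a minimal pre-flight sketch, not part of this repo. It assumes `kubectl` is logged in to the target cluster and that the NVIDIA GPU Operator's GPU Feature Discovery labels GPU nodes with `nvidia.com/gpu.present=true`; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Pre-flight sketch for the requirements above.
# Assumptions: kubectl is configured for the target cluster, and GPU nodes
# carry the nvidia.com/gpu.present=true label set by GPU Feature Discovery.

# Return non-zero (with a message) if a command is missing from PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing required command: $1" >&2
    return 1
  fi
}

# Print the nodes that advertise an NVIDIA GPU; fail if there are none.
check_gpu_nodes() {
  require_cmd kubectl || return 1
  local nodes
  nodes=$(kubectl get nodes -l nvidia.com/gpu.present=true -o name) || return 1
  if [ -z "$nodes" ]; then
    echo "no GPU nodes found (is the NVIDIA GPU Operator installed?)" >&2
    return 1
  fi
  printf '%s\n' "$nodes"
}

# Check that the Argo CD Application CRD exists (ArgoCD / OpenShift GitOps).
check_argocd() {
  require_cmd kubectl || return 1
  if ! kubectl get crd applications.argoproj.io >/dev/null 2>&1; then
    echo "Argo CD CRDs not found (is OpenShift GitOps installed?)" >&2
    return 1
  fi
}
```

Running `check_gpu_nodes && check_argocd` before the deployment steps surfaces a missing operator early, instead of as a pending pod later.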

Tested with an A10G GPU (g5.2xlarge) on Spot Instances, using a ROSA 4.13 cluster and RHODS 2.14.0.

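Deploy the model of your choice:

Mistral:

```shell
kubectl apply -k gitops/mistral
```

FlanT5-XXL:

```shell
kubectl apply -k gitops/flant5xxl
```

Falcon:

```shell
kubectl apply -k gitops/falcon
```

Llama2:

```shell
kubectl apply -k gitops/llama2
```

NOTE: to download this model, you need to set HUGGING_FACE_HUB_TOKEN_BASE64 in a Secret: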

```shell
export HUGGING_FACE_HUB_TOKEN_BASE64=$(echo -n 'your-token-value' | base64)
envsubst < hg-tgi/overlays/llama2-7b/hf-token-secret-template.yaml > /tmp/hf-token-secret.yaml
kubectl apply -f /tmp/hf-token-secret.yaml -n llms
```

CodeLlama:

```shell
kubectl apply -k gitops/codellama
```

StarCoder:

```shell
kubectl apply -k gitops/starcoder
```

NOTE: to download this model, you need to set the HF_TOKEN in a Secret:

```shell
export HUGGING_FACE_HUB_TOKEN_BASE64=$(echo -n 'your-token-value' | base64)
envsubst < hg-tgi/overlays/llama2-7b/hf-token-secret-template.yaml > /tmp/hf-token-secret.yaml
kubectl apply -f /tmp/hf-token-secret.yaml -n llms
```

- Check the Inference Guide to test your LLM deployed with Hugging Face Text Generation Inference.
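The token steps above base64-encode the Hugging Face token before `envsubst` writes it into the Secret template, so a stray newline or a wrapped line in that value would end up in the Secret. The following is a minimal sketch for sanity-checking the encoded value first. It assumes bash and a `base64` that supports `-d` (GNU coreutils or BusyBox); `encode_token` and `check_token` are hypothetical helpers, and `'your-token-value'` is the same placeholder used above.

```shell
#!/usr/bin/env bash
# Sketch: encode a Hugging Face token as in the export step above and
# confirm it decodes back unchanged before it goes into a Secret.

encode_token() {
  # printf '%s' never appends a trailing newline (same effect as echo -n).
  # tr removes the line breaks GNU base64 inserts every 76 characters.
  printf '%s' "$1" | base64 | tr -d '\n'
}

check_token() {
  local token=$1 encoded decoded
  encoded=$(encode_token "$token")
  decoded=$(printf '%s' "$encoded" | base64 -d)
  if [ "$decoded" != "$token" ]; then
    echo "token does not round-trip through base64" >&2
    return 1
  fi
  # Print the encoded value so callers can capture it.
  printf '%s\n' "$encoded"
}
```

To use it in place of the plain `export` line, run `HUGGING_FACE_HUB_TOKEN_BASE64=$(check_token 'your-token-value') && export HUGGING_FACE_HUB_TOKEN_BASE64`. Splitting assignment and `export` this way keeps the exit status of `check_token`, which `export VAR=$(...)` would hide.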

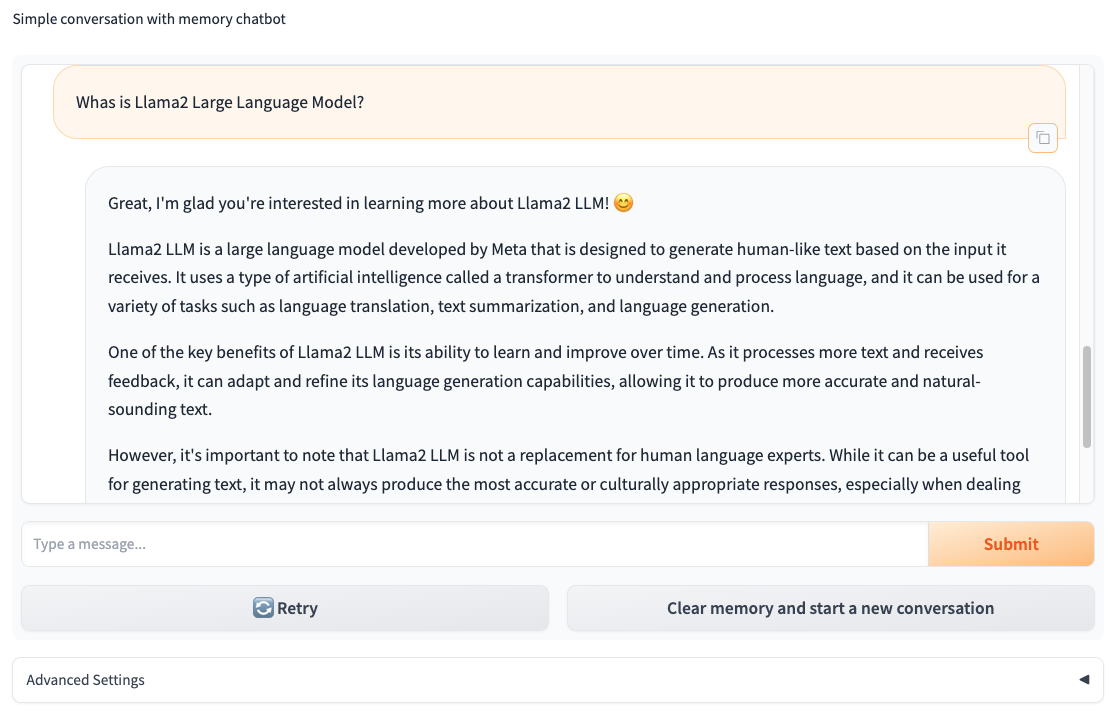

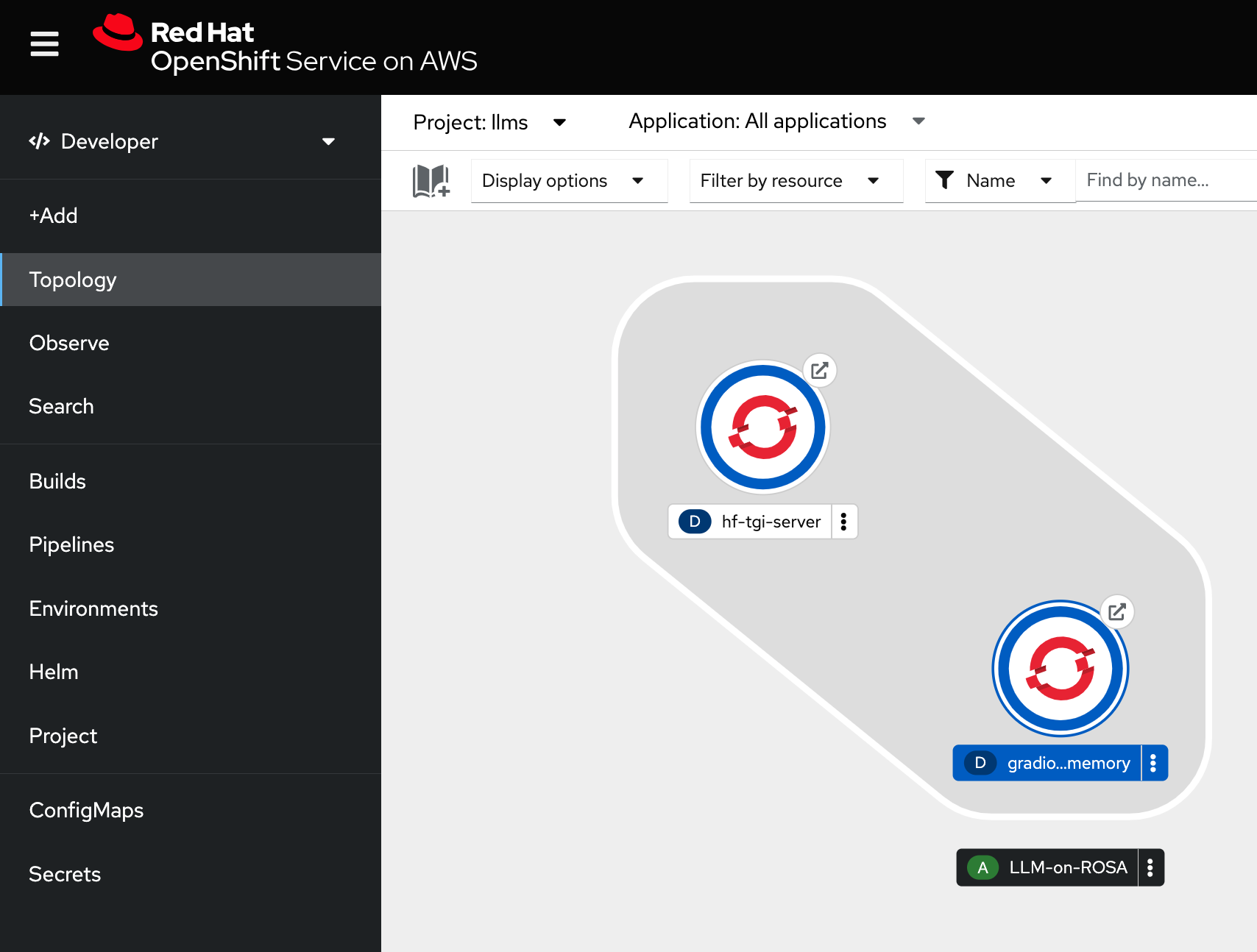

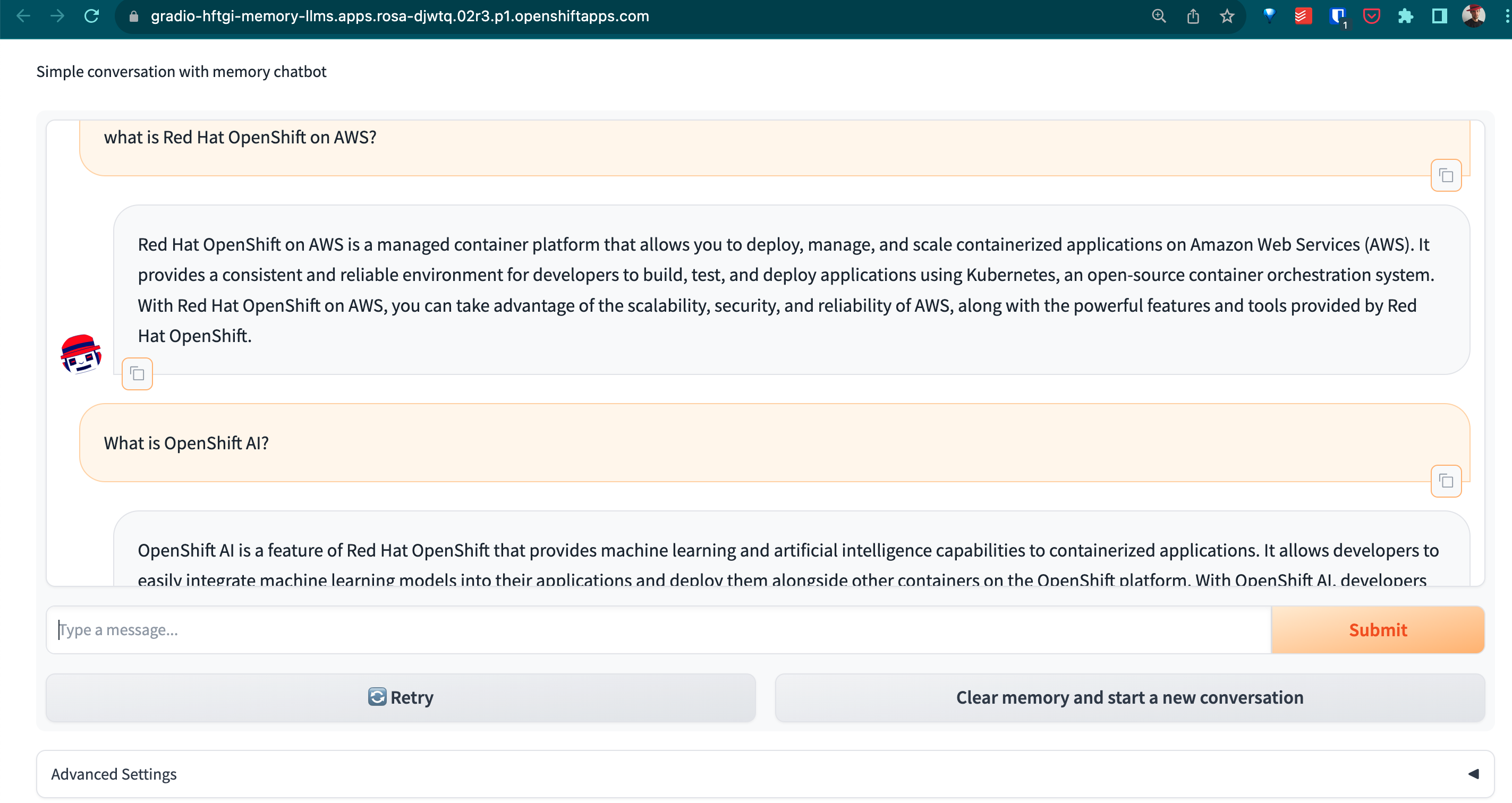

Alongside HF-TGI, we will deploy a Gradio chatbot application with memory powered by LangChain.

This frontend uses the deployed HF-TGI as its backend, which powers the AI NLP chat capabilities of the chatbot app.

Once deployed, the Gradio chatbot accesses the HF-TGI server that serves the LLM of your choice (see section below) directly and answers your questions.
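Because the chatbot calls HF-TGI directly, it can only answer once TGI has finished downloading and loading the model. The function below is a minimal readiness-check sketch, not part of this repo. It polls TGI's `GET /health` endpoint and assumes that endpoint is reachable at a URL you provide, for example through a Route or a port-forward. `tgi_wait_ready` and its default attempt count and interval are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll TGI's /health endpoint until it answers with a success status.
# Usage: tgi_wait_ready URL [attempts] [interval_seconds]
tgi_wait_ready() {
  local url=$1 attempts=${2:-30} interval=${3:-10} i
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl exit non-zero on HTTP error responses; connection
    # failures (server not listening yet) also exit non-zero.
    if curl -fsS -o /dev/null "${url%/}/health"; then
      echo "TGI is ready at $url"
      return 0
    fi
    # Skip the sleep after the final attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$interval"
    fi
  done
  echo "TGI not ready after $attempts attempts" >&2
  return 1
}
```

Polling with a bounded number of attempts fails loudly if the model never loads (for example, because of a missing token Secret) rather than leaving the chatbot silently waiting.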

NOTE: To learn more, check the original source in the rh-aiservices-bu repository.

- This repo is heavily based on the llm-on-openshift repo. Kudos to the team!