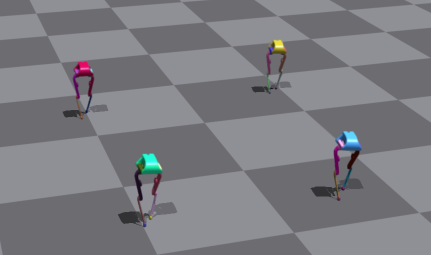

This repository contains the training code for Bolt's journey of learning to walk using Deep Reinforcement Learning.

This project was carried out by the students listed at the end of this README as part of ISAE-SUPAERO's PIE. The full report (in French) can be found in docs/.

Bolt is an open-source biped robot developed by the Open Dynamic Robot Initiative (ODRI). The robot's properties and configurations, as well as its base URDF, can be found here.

This repo comes prepackaged with IsaacGymEnvs. To set up your environment on a fresh server, follow these steps.

- Clone the repository:

      git clone https://github.com/rafacelente/bolt.git

- Miniconda installation

      mkdir -p ~/miniconda3
      wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh
      bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3
      rm -rf ~/miniconda3/miniconda.sh
      ~/miniconda3/bin/conda init bash
      # For the changes to take effect, close the terminal and re-open it.

- Environment creation

      conda create -n isaac python=3.8
      conda activate isaac
      conda install -y gxx_linux-64
      cd /path/to/bolt/isaacgym/python && pip install --user --no-cache-dir -e .
      cd /path/to/notebooks/bolt/IsaacGymEnvs && pip install --user --no-cache-dir -e .
      pip install "numpy<1.24"
      # After each of these steps, run: conda deactivate, then conda activate isaac
      conda env config vars set LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib --name $CONDA_DEFAULT_ENV
      conda env config vars set VK_ICD_FILENAMES=/usr/share/vulkan/icd.d/nvidia_icd.json --name $CONDA_DEFAULT_ENV
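Once the environment is created, a quick sanity check can catch the most common setup slips. The sketch below is not part of the repository: `check_environment` is a hypothetical helper that only verifies what the steps above set up (Python 3.8, numpy below 1.24, and the two environment variables). It does not import isaacgym itself.

```python
import os
import sys


def check_environment(env, python_version, numpy_version=None):
    """Return a list of problems; an empty list means every check passed."""
    problems = []

    # The setup steps create the conda environment with python=3.8.
    major_minor = tuple(python_version[:2])
    if major_minor != (3, 8):
        problems.append("Python %d.%d found, expected 3.8" % major_minor)

    # The setup steps pin numpy below 1.24.
    if numpy_version is not None:
        major, minor = (int(part) for part in numpy_version.split(".")[:2])
        if (major, minor) >= (1, 24):
            problems.append("numpy %s found, expected <1.24" % numpy_version)

    # LD_LIBRARY_PATH should contain $CONDA_PREFIX/lib.
    conda_prefix = env.get("CONDA_PREFIX", "")
    conda_lib = conda_prefix.rstrip("/") + "/lib" if conda_prefix else ""
    ld_paths = env.get("LD_LIBRARY_PATH", "").split(":")
    if not conda_lib or conda_lib not in ld_paths:
        problems.append("LD_LIBRARY_PATH does not contain $CONDA_PREFIX/lib")

    # VK_ICD_FILENAMES should be set (the steps point it at the NVIDIA ICD file).
    if not env.get("VK_ICD_FILENAMES"):
        problems.append("VK_ICD_FILENAMES is not set")

    return problems


if __name__ == "__main__":
    try:
        import numpy
        installed_numpy = numpy.__version__
    except ImportError:
        installed_numpy = None  # numpy check is skipped if it is not installed
    for problem in check_environment(os.environ, sys.version_info, installed_numpy):
        print("WARNING:", problem)
```

Run it inside the activated isaac environment; no output means the checks passed.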

All training/simulation is done through the script ./IsaacGymEnvs/isaacgymenvs/train.py. The default configuration parameters can be found in ./IsaacGymEnvs/isaacgymenvs/cfg/config.yaml. An example training job, run from the script's directory, is:

    python train.py task=Bolt wandb_activate=True num_envs=1024 headless=True max_iterations=1000

Some (not all) pertinent flags:

- test=True: launches a simulation session (no training).
- max_iterations=1000: sets the number of training epochs.
- checkpoint=/path/to/model.pth: runs a training/simulation session starting from a given pre-trained model.
- num_envs=1024: number of vectorized environments in the training run.
- wandb_activate=True: activates Weights and Biases logging. Make sure you have wandb installed and are logged in with your wandb account.
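When launching several runs, it can help to assemble the key=value overrides programmatically so that no flag is passed twice. The following is a minimal sketch, not code from this repository: `build_train_command` is a hypothetical helper, and the override names are only the flags listed above.

```python
import shlex


def build_train_command(overrides, script="train.py"):
    """Build an argument list for train.py from a dict of key=value overrides."""
    args = ["python", script]
    for key, value in overrides.items():
        # A dict cannot hold the same key twice, so each flag appears once.
        args.append("%s=%s" % (key, value))
    return args


overrides = {
    "task": "Bolt",
    "num_envs": 1024,
    "headless": True,
    "max_iterations": 1000,
    "wandb_activate": True,
}
command = build_train_command(overrides)

# Print the command in copy-pasteable form.
print(" ".join(shlex.quote(arg) for arg in command))
```

The resulting list can be passed to `subprocess.run(command, cwd=...)` with `cwd` set to the directory containing train.py.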

The URDF used for this project is a slightly modified version of the base URDF from ODRI. All URDF files can be found in ./IsaacGymEnvs/assets/urdf, and the meshes in ./IsaacGymEnvs/assets/meshes.

The environment, agent, and reward definitions can be found in ./IsaacGymEnvs/isaacgymenvs/tasks/bolt.py, and the task configuration in ./IsaacGymEnvs/isaacgymenvs/cfg/task/Bolt.yaml. The configuration of the RL algorithm can be found in ./IsaacGymEnvs/isaacgymenvs/cfg/train/BoltPPO.yaml.
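To confirm that a checkout contains the files mentioned in this README, a small check such as the one below can be used. It is an illustrative sketch only: `find_missing` and `EXPECTED_PATHS` are hypothetical names, and the paths are simply the ones cited above, taken relative to the repository root.

```python
from pathlib import Path

# Paths cited in this README, relative to the repository root.
EXPECTED_PATHS = {
    "training script": "IsaacGymEnvs/isaacgymenvs/train.py",
    "default config": "IsaacGymEnvs/isaacgymenvs/cfg/config.yaml",
    "task definition": "IsaacGymEnvs/isaacgymenvs/tasks/bolt.py",
    "task config": "IsaacGymEnvs/isaacgymenvs/cfg/task/Bolt.yaml",
    "PPO config": "IsaacGymEnvs/isaacgymenvs/cfg/train/BoltPPO.yaml",
    "URDF directory": "IsaacGymEnvs/assets/urdf",
    "mesh directory": "IsaacGymEnvs/assets/meshes",
}


def find_missing(repo_root):
    """Return the labels of expected paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [label for label, rel in EXPECTED_PATHS.items()
            if not (root / rel).exists()]


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    for label in find_missing("."):
        print("missing:", label, "->", EXPECTED_PATHS[label])
```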

- Lucie Mouille

- Guillaume Berthelot

- Rafael Celente

- Maxime Ségura

- Alexandre Gouiller