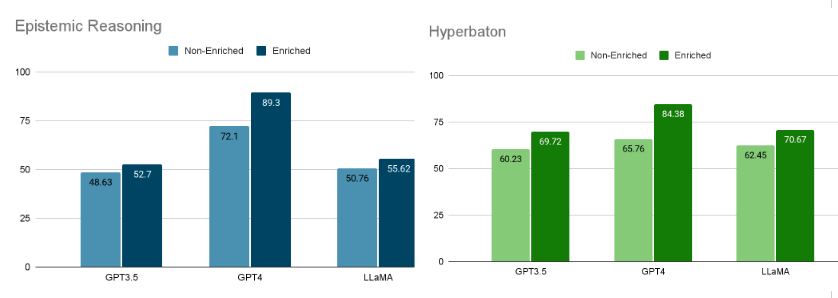

- Reproduced the AutoHint algorithm for automatic prompt optimisation on 2 BBII tasks: Epistemic Reasoning and Hyperbaton

- Improved the runtime of the algorithm by sampling before hint generation (~2.5x speedup)

- Reproduced and evaluated on GPT-3.5 and LLaMA2 in addition to GPT-4

- Generalised the summarisation prompt to improve summary quality and to give more general enrichments
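The runtime improvement from sampling before hint generation can be sketched as follows. This is a minimal illustration, not the repository's code: it assumes the baseline would otherwise generate a hint for every wrong prediction, and `hints_for_sampled_errors`, `generate_hint`, and the record fields are hypothetical names. The hint generator is a stub standing in for an LLM call.

```python
import random
from typing import Callable, Dict, List


def hints_for_sampled_errors(
    wrong_samples: List[Dict[str, str]],
    generate_hint: Callable[[Dict[str, str]], str],
    k: int,
    seed: int = 0,
) -> List[str]:
    """Sample up to k wrong predictions first, then generate one hint per sampled item.

    Only the sampled items reach `generate_hint`, so the number of
    (expensive) hint-generation calls is bounded by k.
    """
    rng = random.Random(seed)
    chosen = rng.sample(wrong_samples, min(k, len(wrong_samples)))
    return [generate_hint(sample) for sample in chosen]


# Stub standing in for an LLM call (hypothetical); it records how often it is invoked.
calls: List[str] = []


def fake_generate_hint(sample: Dict[str, str]) -> str:
    calls.append(sample["input"])
    return f"hint for {sample['input']}"


wrong = [{"input": f"x{i}", "label": "a", "prediction": "b"} for i in range(10)]
hints = hints_for_sampled_errors(wrong, fake_generate_hint, k=4)
```

With 10 wrong predictions and `k=4`, the stub is called 4 times instead of 10; the actual speedup depends on the model and the sample size.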

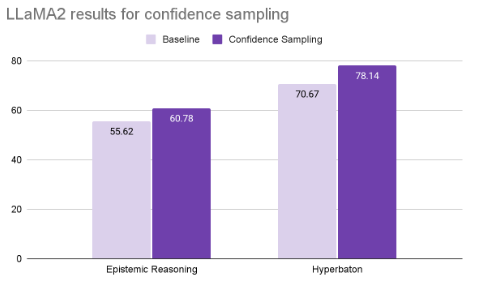

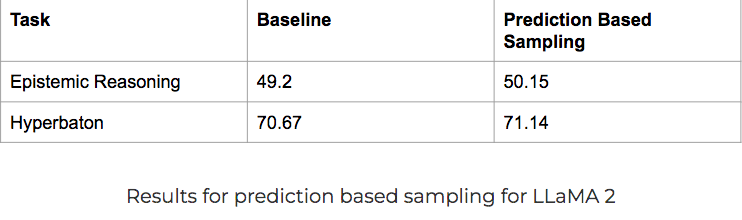

- Proposed 2 sampling methods to improve the selection of hints in terms of diversity and specificity:

  - Confidence-based sampling: multi-turn CoT prompting to obtain the LLM's confidence in each prediction, then selecting the top-k most wrong predictions or samples for maximum information gain

  - Prediction-based sampling: random balanced sampling based on class (label) and type of prediction (incorrect and correct)
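The two sampling methods above can be sketched roughly as below. This is an illustration under stated assumptions, not the notebooks' implementation: the record fields (`label`, `prediction`, `confidence`) and both function names are hypothetical, the confidence is assumed to have been obtained already from the model, and "most wrong" is interpreted here as wrong predictions made with the highest stated confidence.

```python
import random
from collections import defaultdict
from typing import Any, Dict, List

Record = Dict[str, Any]


def top_k_confident_errors(records: List[Record], k: int) -> List[Record]:
    """Keep only wrong predictions and return the k with the highest confidence."""
    wrong = [r for r in records if r["prediction"] != r["label"]]
    return sorted(wrong, key=lambda r: r["confidence"], reverse=True)[:k]


def balanced_sample(records: List[Record], per_group: int, seed: int = 0) -> List[Record]:
    """Randomly draw up to per_group records from each (label, is_correct) group."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for r in records:
        groups[(r["label"], r["prediction"] == r["label"])].append(r)
    picked: List[Record] = []
    for key in sorted(groups):  # fixed group order keeps the draw reproducible
        members = groups[key]
        picked.extend(rng.sample(members, min(per_group, len(members))))
    return picked


# Toy records (made up for illustration only).
records = [
    {"input": "s1", "label": "entailment", "prediction": "non-entailment", "confidence": 0.90},
    {"input": "s2", "label": "entailment", "prediction": "entailment", "confidence": 0.80},
    {"input": "s3", "label": "non-entailment", "prediction": "entailment", "confidence": 0.60},
    {"input": "s4", "label": "non-entailment", "prediction": "non-entailment", "confidence": 0.70},
    {"input": "s5", "label": "entailment", "prediction": "non-entailment", "confidence": 0.95},
]

confident_errors = top_k_confident_errors(records, k=2)
balanced = balanced_sample(records, per_group=1)
```

On the toy records, `confident_errors` contains `s5` then `s1`, and `balanced` contains one record from each of the four (label, correct/incorrect) groups.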

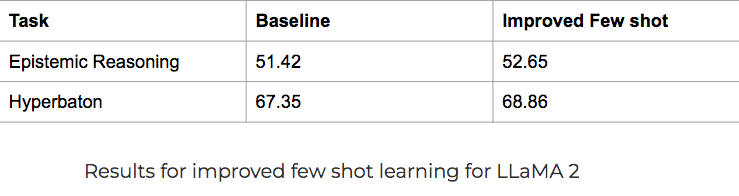

- Improved candidate generation for few-shot demonstrations: retrieve the K nearest neighbours based on BERT embeddings during few-shot inference and provide them as input-output demonstrations
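A rough sketch of the nearest-neighbour retrieval step is shown below. It assumes the embeddings have already been computed (for example by a BERT encoder) and uses cosine similarity, which is an assumption rather than a confirmed detail of the notebooks. The function names, the prompt layout, and the toy 2-dimensional vectors are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest_demonstrations(
    query_vec: Sequence[float],
    train_vecs: List[Sequence[float]],
    train_pairs: List[Tuple[str, str]],
    k: int,
) -> List[Tuple[str, str]]:
    """Return the k (input, output) training pairs most similar to the query."""
    order = sorted(
        range(len(train_vecs)),
        key=lambda i: cosine(query_vec, train_vecs[i]),
        reverse=True,
    )
    return [train_pairs[i] for i in order[:k]]


def build_prompt(demos: List[Tuple[str, str]], query: str) -> str:
    """Format retrieved pairs as input-output demonstrations followed by the query."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in demos]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Toy vectors standing in for real sentence embeddings.
train_vecs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
train_pairs = [("a", "yes"), ("b", "no"), ("c", "yes")]
demos = nearest_demonstrations([1.0, 0.0], train_vecs, train_pairs, k=2)
prompt = build_prompt(demos, "q")
```

For larger training sets, a vectorised or indexed search would be a natural replacement for the sorted scan used here.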

- Install the dependencies: `pip install -r requirements.txt`

- For the BigBenchII dataset, clone this repository: automatic_prompt_engineer

- For LLaMA2 access, register at this link and fill in the form

- notebooks
  - llama
    - `llama-fewshot.ipynb` (improved and baseline few-shot inference)
    - `llama-confidence-sampling.ipynb` (multi-turn CoT confidence-based sampling)
    - `llama-baseline.ipynb` (reproduction of the baseline for LLaMA2)
  - GPT
    - `GPT-baseline.ipynb` (reproduction of the baseline for GPT-3.5/GPT-4)
    - `GPT-class-balanced-right-wrong-sampling.ipynb` (class- and inference-based balanced sampling)
- data: data splits to reproduce
  - `train_entail.json` (entailment)
  - `val_entail.json`
  - `test_entail.json`
  - `train_hyper.json` (hyperbaton)
  - `val_hyper.json`
  - `test_hyper.json`
  - `train_implicatures.json` (implicatures)
  - `val_implicatures.json`
  - `test_implicatures.json`
- requirements.txt