kubernetes-deploy is a command line tool that helps you ship changes to a Kubernetes namespace and understand the result. At Shopify, we use it within our much-beloved, open-source Shipit deployment app.

Why not just use the standard kubectl apply mechanism to deploy? It is indeed a fantastic tool; kubernetes-deploy uses it under the hood! However, it leaves its users with some burning questions: What just happened? Did it work?

Especially in a CI/CD environment, we need a clear, actionable pass/fail result for each deploy. Providing this was the foundational goal of kubernetes-deploy, which has grown to support the following core features:

👀 Watches the changes you requested to make sure they roll out successfully.

🔢 Predeploys certain types of resources (e.g. ConfigMap, PersistentVolumeClaim) to make sure the latest version will be available when resources that might consume them (e.g. Deployment) are deployed.

🔐 Creates Kubernetes secrets from encrypted EJSON, which you can safely commit to your repository.

🏃 Runs tasks at the beginning of a deploy using bare pods (example use case: Rails migrations).

This repo also includes related tools for running tasks and restarting deployments.

KUBERNETES-DEPLOY

KUBERNETES-RESTART

KUBERNETES-RUN

KUBERNETES-RENDER

DEVELOPMENT

- Setup

- Running the test suite locally

- Releasing a new version (Shopify employees)

- CI (External contributors)

CONTRIBUTING

- Ruby 2.3+

- Your cluster must be running Kubernetes v1.10.0 or higher.[1]

- Each app must have a deploy directory containing its Kubernetes templates (see Templates)

- You must remove the kubectl.kubernetes.io/last-applied-configuration annotation from any resources in the namespace that are not included in your deploy directory. This annotation is added automatically when you create resources with kubectl apply. kubernetes-deploy will prune any resources that have this annotation and are not in the deploy directory.[2]

- Each app managed by kubernetes-deploy must have its own exclusive Kubernetes namespace.

[1] We run integration tests against these Kubernetes versions. You can find our official compatibility chart below.

[2] This requirement can be bypassed with the --no-prune option, but doing so is not recommended.

| Kubernetes version | Last officially supported in gem version |
|---|---|
| 1.5 | 0.11.2 |
| 1.6 | 0.15.2 |
| 1.7 | 0.20.6 |
| 1.8 | 0.21.1 |

- Install kubectl (requires v1.10.0 or higher) and make sure it is available in your $PATH

- Set up your kubeconfig file for access to your cluster(s).

gem install kubernetes-deploy

kubernetes-deploy <app's namespace> <kube context>

Environment variables:

- $REVISION (required): the SHA of the commit you are deploying. Will be exposed to your ERB templates as current_sha.

- $KUBECONFIG (required): points to one or multiple valid kubeconfig files that include the context you want to deploy to. File names are separated by a colon on Linux and macOS, and by a semicolon on Windows.

- $ENVIRONMENT: used to set the deploy directory to config/deploy/$ENVIRONMENT. You can use the --template-dir=DIR option instead if you prefer (one or the other is required).

- $GOOGLE_APPLICATION_CREDENTIALS: points to the credentials for an authenticated service account (required if your kubeconfig user's auth provider is GCP).

Options:

Refer to kubernetes-deploy --help for the authoritative set of options.

- --template-dir=DIR: Used to set the deploy directory. Set $ENVIRONMENT instead to use config/deploy/$ENVIRONMENT.

- --bindings=BINDINGS: Makes additional variables available to your ERB templates. For example, kubernetes-deploy my-app cluster1 --bindings=color=blue,size=large will expose color and size.

- --no-prune: Skips pruning of resources that are no longer in your Kubernetes template set. Not recommended, as it allows your namespace to accumulate cruft that is not reflected in your deploy directory.

- --max-watch-seconds=seconds: Raise a timeout error if it takes longer than seconds for any resource to deploy.

Each app's templates are expected to be stored in a single directory. If this is not the case, you can create a directory containing symlinks to the templates. The recommended location for an app's deploy directory is {app root}/config/deploy/{env}, but this is completely configurable.

All templates must be YAML formatted. You can also use ERB. The following local variables will be available to your ERB templates by default:

- current_sha: The value of $REVISION.

- deployment_id: A randomly generated identifier for the deploy. Useful for creating unique names for task-runner pods (e.g. a pod that runs Rails migrations at the beginning of deploys).

You can add additional variables using the --bindings=BINDINGS option, which accepts a comma-separated string, a JSON string, or the path to a JSON or YAML file. Complex JSON or YAML data will be converted to a Hash for use in templates. To load a file, prefix its relative path with an @ sign. An argument error will be raised if the string argument cannot be parsed, if the referenced file does not have a valid extension (.json, .yaml or .yml), or if the referenced file does not exist.

# Comma-separated string. Exposes 'color' and 'size'

$ kubernetes-deploy my-app cluster1 --bindings=color=blue,size=large

# JSON string. Exposes 'color' and 'size'

$ kubernetes-deploy my-app cluster1 --bindings='{"color":"blue","size":"large"}'

# Load JSON file from ./config

$ kubernetes-deploy my-app cluster1 --bindings='@config/production.json'

# Load YAML file from ./config (.yaml or .yml supported)

$ kubernetes-deploy my-app cluster1 --bindings='@config/production.yaml'
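The accepted --bindings forms can be illustrated with a minimal Ruby sketch. It is not the gem's parser: parse_bindings is a hypothetical name, and the order in which it tries the formats (@file, then JSON object, then comma-separated pairs) is an assumption.

```ruby
require "json"
require "yaml"

# Hypothetical sketch of --bindings parsing. Returns a Hash of binding
# names to values, or raises ArgumentError on unparseable input.
def parse_bindings(arg)
  # "@relative/path.ext" loads a JSON or YAML file.
  if arg.start_with?("@")
    path = arg[1..-1]
    ext = File.extname(path)
    unless %w(.json .yaml .yml).include?(ext)
      raise ArgumentError, "unsupported bindings file extension: #{ext.inspect}"
    end
    raise ArgumentError, "bindings file not found: #{path}" unless File.exist?(path)

    content = File.read(path)
    data = ext == ".json" ? JSON.parse(content) : YAML.safe_load(content)
    raise ArgumentError, "bindings file must contain a mapping" unless data.is_a?(Hash)
    return data
  end

  # A JSON object string, e.g. '{"color":"blue","size":"large"}'.
  begin
    data = JSON.parse(arg)
    return data if data.is_a?(Hash)
  rescue JSON::ParserError
    # Not JSON; fall through to the comma-separated form.
  end

  # Comma-separated key=value pairs, e.g. "color=blue,size=large".
  pairs = arg.split(",").map { |pair| pair.split("=", 2) }
  unless pairs.any? && pairs.all? { |key, value| key && !key.empty? && value }
    raise ArgumentError, "could not parse bindings: #{arg.inspect}"
  end
  pairs.to_h
end
```

In this sketch all three forms produce the same Hash shape, so the template side does not need to know which form was used on the command line.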

kubernetes-deploy supports composing templates from so-called partials in order to reduce duplication in Kubernetes YAML files. Given a template directory DIR, partials are searched for in DIR/partials and then in DIR/../partials, in that order. They can be embedded in other ERB templates using the helper method partial. For example, suppose an application needs a number of different CronJob resources: you could place a template called cron in one of those directories and then use it in the main deployment.yaml.erb like so:

<%= partial "cron", name: "cleanup", schedule: "0 0 * * *", args: %w(cleanup), cpu: "100m", memory: "100Mi" %>

<%= partial "cron", name: "send-mail", schedule: "0 0 * * *", args: %w(send-mails), cpu: "200m", memory: "256Mi" %>

Inside a partial, parameters can be accessed as normal variables, or via a hash called locals. Thus, the cron template could look like this:

---
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: cron-<%= name %>
spec:
  schedule: <%= schedule %>
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 3
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: cron-<%= name %>
            image: ...
            args: <%= args %>
            resources:
              requests:
                cpu: "<%= cpu %>"
                memory: <%= memory %>
          restartPolicy: OnFailure

Both .yaml.erb and .yml.erb file extensions are supported. Templates must refer to the bare filename (e.g. use partial 'cron' to reference cron.yaml.erb).
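The lookup order and the two ways of reading parameters can be illustrated with a small renderer built on Ruby's standard ERB library. This is a sketch under assumptions, not kubernetes-deploy's implementation: the PartialRenderer class and its method names are hypothetical, and error handling is minimal.

```ruby
require "erb"

# Hypothetical sketch of an ERB renderer with a `partial` helper.
class PartialRenderer
  EXTENSIONS = %w(.yaml.erb .yml.erb).freeze

  def initialize(template_dir)
    @template_dir = template_dir
  end

  # Render ERB source; each entry in `locals` becomes a local variable,
  # and the whole Hash is also available as `locals`.
  def render(source, locals = {})
    ERB.new(source).result(template_binding(locals))
  end

  # Search DIR/partials first, then DIR/../partials, trying both extensions.
  def partial(name, locals = {})
    dirs = [File.join(@template_dir, "partials"), File.join(@template_dir, "..", "partials")]
    path = dirs.product(EXTENSIONS)
               .map { |dir, ext| File.join(dir, name + ext) }
               .find { |candidate| File.exist?(candidate) }
    raise ArgumentError, "partial #{name.inspect} not found" unless path

    render(File.read(path), locals)
  end

  private

  # A fresh binding per render, so templates cannot see each other's variables.
  def template_binding(locals)
    binding.tap do |scope|
      locals.each { |key, value| scope.local_variable_set(key, value) }
      scope.local_variable_set(:locals, locals)
    end
  end
end
```

Because each render call gets a fresh binding whose self is the renderer, a partial can itself call partial, and variables from one template do not leak into another.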

Partials can be included almost everywhere in ERB templates. Note: when using a partial to insert additional key-value pairs into a map, you must use YAML merge keys. For example, given a partial p defining two fields 'a' and 'b',

a: 1
b: 2

you cannot do this:

x: yz
<%= partial 'p' %>

hoping to get

x: yz
a: 1
b: 2

but you can do:

<<: <%= partial 'p' %>
x: yz

This is a limitation of the current implementation.

kubernetes-deploy.shopify.io/timeout-override: Override the tool's hard timeout for one specific resource. Both full ISO8601 durations and the time portion of ISO8601 durations are valid. Value must be between 1 second and 24 hours.

- Example values: 45s / 3m / 1h / PT0.25H

- Compatibility: all resource types
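The value formats listed above can be illustrated with a small Ruby sketch that normalizes them to seconds and enforces the documented 1 second to 24 hour range. This is an assumption, not the gem's parser: parse_timeout_override is a hypothetical name, and for brevity it only handles hour, minute and second components (no days, weeks, months or years).

```ruby
# Hypothetical sketch: convert a timeout-override value to seconds.
# Accepts full ISO8601 time durations ("PT0.25H", "PT1H30M") and bare time
# portions ("45s", "3m", "1h"). Day/week/month/year components are not handled.
def parse_timeout_override(value)
  normalized = value.to_s.strip.upcase
  normalized = "PT#{normalized}" unless normalized.start_with?("P")
  match = /\APT(?:(\d+(?:\.\d+)?)H)?(?:(\d+(?:\.\d+)?)M)?(?:(\d+(?:\.\d+)?)S)?\z/.match(normalized)
  if match.nil? || match.captures.compact.empty?
    raise ArgumentError, "invalid duration: #{value.inspect}"
  end

  # Missing components become 0.0 (nil.to_f).
  hours, minutes, seconds = match.captures.map(&:to_f)
  total = hours * 3600 + minutes * 60 + seconds
  unless total.between?(1, 24 * 3600)
    raise ArgumentError, "timeout must be between 1 second and 24 hours, got #{value.inspect}"
  end
  total
end
```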

kubernetes-deploy.shopify.io/required-rollout: Modifies how much of the rollout needs to finish before the deployment is considered successful.

- Compatibility: Deployment

- full: The deployment is successful when all pods in the new replicaSet are ready.

- none: The deployment is successful as soon as the new replicaSet is created for the deployment.

- maxUnavailable: The deploy is successful when minimum availability is reached in the new replicaSet. In other words, the number of new pods that must be ready is equal to spec.replicas - strategy.RollingUpdate.maxUnavailable (converted from percentages by rounding up, if applicable). This option is only valid for deployments that use the RollingUpdate strategy.

- Percent (e.g. 90%): The deploy is successful when the number of new pods that are ready is equal to spec.replicas * Percent.
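The arithmetic behind these values can be sketched in Ruby as below. The method names are hypothetical, and the rounding is an assumption: the README only says maxUnavailable percentages are rounded up, so this sketch converts maxUnavailable to a pod count by rounding up and also rounds the Percent option up. The gem's exact behaviour may differ.

```ruby
# Hypothetical sketch of the required-rollout arithmetic described above.
# Returns how many pods in the new ReplicaSet must be ready.
def required_ready_pods(required_rollout, replicas:, max_unavailable: nil)
  case required_rollout
  when "full"
    replicas
  when "none"
    0 # success as soon as the new ReplicaSet exists
  when "maxUnavailable"
    raise ArgumentError, "maxUnavailable requires a RollingUpdate strategy" if max_unavailable.nil?
    replicas - absolute_count(max_unavailable, replicas)
  when /\A(\d+)%\z/
    percent_of(replicas, Regexp.last_match(1).to_i)
  else
    raise ArgumentError, "unknown required-rollout value: #{required_rollout.inspect}"
  end
end

# Integer or "NN%" string -> absolute pod count (percentages round up here).
def absolute_count(value, replicas)
  value.to_s.end_with?("%") ? percent_of(replicas, value.to_i) : value.to_i
end

# Ceiling of replicas * percent / 100 using integer arithmetic.
def percent_of(replicas, percent)
  (replicas * percent + 99) / 100
end
```

For example, with 10 replicas and maxUnavailable: 25%, the sketch treats 3 pods as allowed to be unavailable, so 7 new pods must be ready.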

kubernetes-deploy.shopify.io/prunable: Allows a Custom Resource to be pruned during deployment.

- Compatibility: Custom Resource Definition

- true: The custom resource will be pruned if the resource is not in the deploy directory.

- All other values: The custom resource will not be pruned.

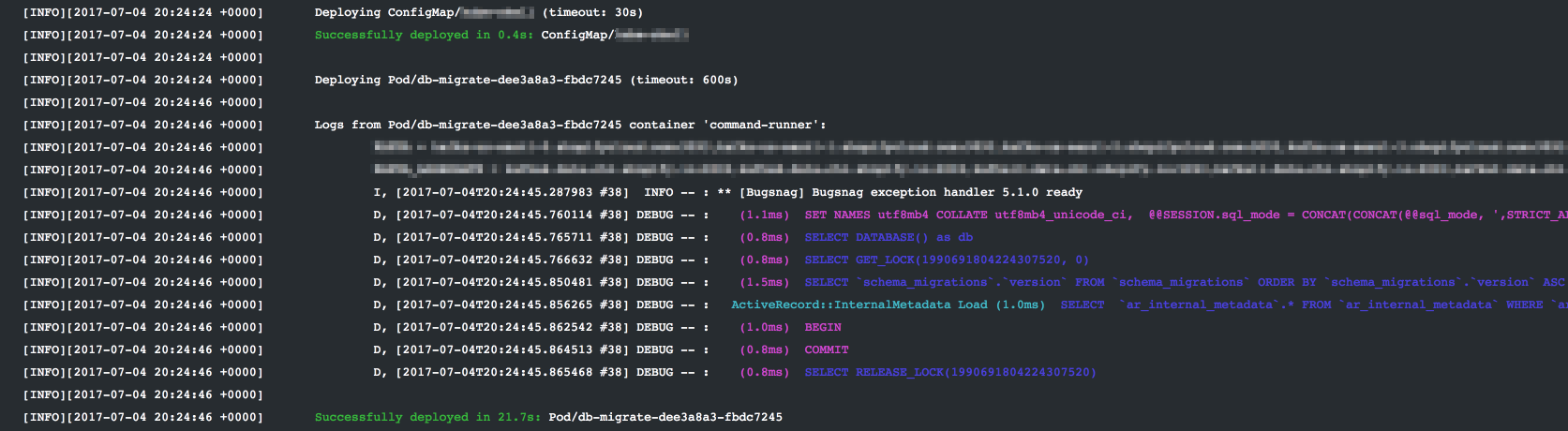

To run a task in your cluster at the beginning of every deploy, simply include a Pod template in your deploy directory. kubernetes-deploy will first deploy any ConfigMap and PersistentVolumeClaim resources in your template set, followed by any such pods. If the command run by one of these pods fails (i.e. exits with a non-zero status), the overall deploy will fail at this step (no other resources will be deployed).

Requirements:

- The pod's name should include <%= deployment_id %> to ensure that a unique name will be used on every deploy (the deploy will fail if a pod with the same name already exists).

- The pod's spec.restartPolicy must be set to Never so that it will be run exactly once. We'll fail the deploy if that run exits with a non-zero status.

- The pod's spec.activeDeadlineSeconds should be set to a reasonable value for the performed task (not required, but highly recommended).

A simple example can be found in the test fixtures: test/fixtures/hello-cloud/unmanaged-pod.yml.erb.

The logs of all pods run in this way will be printed inline.
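As an illustration of the requirements above, the following Ruby sketch renders a hypothetical migration pod template with the standard ERB library. The template, image name, command and 600-second deadline are made up, and a random hex string stands in for deployment_id because its real format is not documented here.

```ruby
require "erb"
require "securerandom"

# Hypothetical task-runner pod template following the requirements above:
# unique name via deployment_id, restartPolicy Never, and a deadline.
TASK_POD_TEMPLATE = <<~'YAML'
  apiVersion: v1
  kind: Pod
  metadata:
    name: migrate-<%= deployment_id %>
  spec:
    restartPolicy: Never
    activeDeadlineSeconds: 600
    containers:
    - name: migrate
      image: example.com/my-app:<%= current_sha %>
      args: ["bundle", "exec", "rails", "db:migrate"]
YAML

# Render with the two default template variables; the default deployment_id
# here is a placeholder, not the gem's real identifier format.
def render_task_pod(current_sha, deployment_id = SecureRandom.hex(4))
  ERB.new(TASK_POD_TEMPLATE).result(binding)
end
```

Rendering the template twice yields two different pod names, which is what keeps repeated deploys from colliding with a pod left over from an earlier run.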

Note: If you're a Shopify employee using our cloud platform, this setup has already been done for you. Please consult the CloudPlatform User Guide for usage instructions.

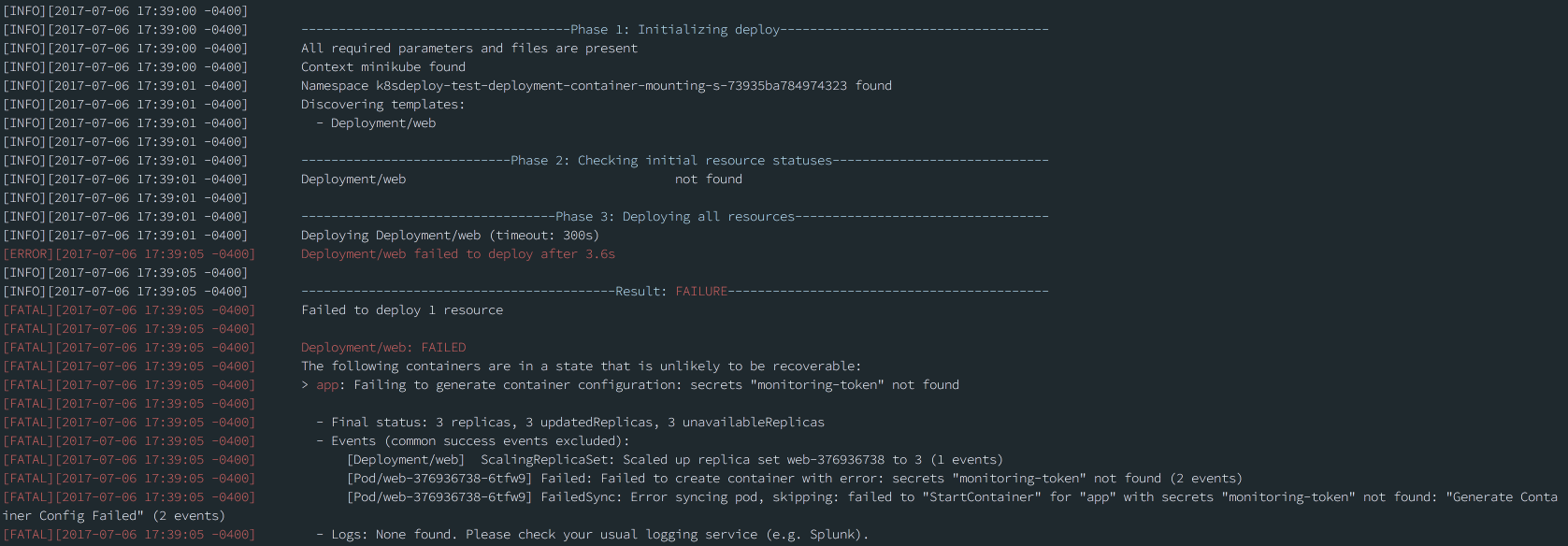

Since their data is only base64 encoded, Kubernetes secrets should not be committed to your repository. Instead, kubernetes-deploy supports generating secrets from an encrypted ejson file in your template directory. Here's how to use this feature:

- Install the ejson gem: gem install ejson

- Generate a new keypair: ejson keygen (prints the keypair to stdout)

- Create a Kubernetes secret in your target namespace with the new keypair: kubectl create secret generic ejson-keys --from-literal=YOUR_PUBLIC_KEY=YOUR_PRIVATE_KEY --namespace=TARGET_NAMESPACE

- (optional but highly recommended) Back up the keypair somewhere secure, such as a password manager, for disaster recovery purposes.

- In your template directory (alongside your Kubernetes templates), create secrets.ejson with the format shown below. The _type key should have the value "kubernetes.io/tls" for TLS secrets and "Opaque" for all others. The data key must be a JSON object, but its keys and values can be whatever you need.

{
  "_public_key": "YOUR_PUBLIC_KEY",
  "kubernetes_secrets": {
    "catphotoscom": {
      "_type": "kubernetes.io/tls",
      "data": {
        "tls.crt": "cert-data-here",
        "tls.key": "key-data-here"
      }
    },
    "monitoring-token": {
      "_type": "Opaque",
      "data": {
        "api-token": "token-value-here"
      }
    }
  }
}

- Encrypt the file: ejson encrypt /PATH/TO/secrets.ejson

- Commit the encrypted file and deploy as usual. The deploy will create secrets from the data in the kubernetes_secrets key.
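Once the file has been decrypted, turning kubernetes_secrets into Secret manifests is mostly a matter of base64-encoding values. The Ruby sketch below illustrates that step, including the underscore-stripping rule described in the note that follows; it is not the gem's code. The method name build_secrets is hypothetical, and it assumes the input has already been decrypted and parsed into a Hash.

```ruby
# Hypothetical sketch: build Kubernetes Secret manifests (as Hashes) from an
# already-decrypted ejson document parsed into a Hash.
def build_secrets(ejson_data, namespace)
  ejson_data.fetch("kubernetes_secrets").map do |name, secret|
    data = secret.fetch("data").each_with_object({}) do |(key, value), encoded|
      # ejson skips encryption for "_"-prefixed keys; drop that prefix.
      # pack("m0") is strict base64 (no line breaks), as Secret data expects.
      encoded[key.sub(/\A_/, "")] = [value].pack("m0")
    end
    {
      "apiVersion" => "v1",
      "kind" => "Secret",
      "type" => secret.fetch("_type"),
      "metadata" => { "name" => name, "namespace" => namespace },
      "data" => data,
    }
  end
end
```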

Note: Since leading underscores in ejson keys are used to skip encryption of the associated value, kubernetes-deploy will strip these leading underscores when it creates the keys for the Kubernetes secret data. For example, given the ejson data below, the monitoring-token secret will have keys api-token and property (not _property):

{
  "_public_key": "YOUR_PUBLIC_KEY",
  "kubernetes_secrets": {
    "monitoring-token": {
      "_type": "Opaque",
      "data": {
        "api-token": "EJ[ENCRYPTED]",
        "_property": "some unencrypted value"
      }
    }
  }
}

By default, kubernetes-deploy does not check the status of custom resources; it simply assumes that they deployed successfully. In order to meaningfully monitor the rollout of custom resources, kubernetes-deploy supports configuring pass/fail conditions using annotations on CustomResourceDefinitions (CRDs).

Note: This feature is only available on clusters running Kubernetes 1.11+ since it relies on the metadata.generation field being updated when custom resource specs are changed.

Requirements:

- The custom resource must expose a status subresource with an observedGeneration field.

- The kubernetes-deploy.shopify.io/instance-rollout-conditions annotation must be present on the CRD that defines the custom resource.

- (optional) The kubernetes-deploy.shopify.io/instance-timeout annotation can be added to the CRD that defines the custom resource to override the global default timeout for all instances of that resource. This annotation can use ISO8601 format or unprefixed ISO8601 time components (e.g. '1H', '60S').

The presence of a valid kubernetes-deploy.shopify.io/instance-rollout-conditions annotation on a CRD will cause kubernetes-deploy to monitor the rollout of all instances of that custom resource. Its value can either be "true" (giving you the defaults described in the next section) or a valid JSON string with the following format:

'{
  "success_conditions": [
    { "path": <JsonPath expression>, "value": <target value> }
    ... more success conditions
  ],
  "failure_conditions": [
    { "path": <JsonPath expression>, "value": <target value> }
    ... more failure conditions
  ]
}'

For all conditions, path must be a valid JsonPath expression that points to a field in the custom resource's status. value is the value that must be present at path in order to fulfill a condition. For a deployment to be successful, all success_conditions must be fulfilled. Conversely, the deploy will be marked as failed if any one of failure_conditions is fulfilled. success_conditions are mandatory, but failure_conditions can be omitted (the resource will simply time out if it never reaches a successful state).

In addition to path and value, a failure condition can also contain error_msg_path or custom_error_msg. error_msg_path is a JsonPath expression that points to a field you want to surface when a failure condition is fulfilled. For example, a status condition may expose a message field that contains a description of the problem it encountered. custom_error_msg is a string that can be used if your custom resource doesn't contain sufficient information to warrant using error_msg_path. Note that custom_error_msg has higher precedence than error_msg_path so it will be used in favor of error_msg_path when both fields are present.

Warning:

You must ensure that your custom resource controller sets .status.observedGeneration to match the observed .metadata.generation of the monitored resource once its sync is complete. If this does not happen, kubernetes-deploy will not check success or failure conditions and the deploy will time out.

As an example, the following is the default configuration that will be used if you set kubernetes-deploy.shopify.io/instance-rollout-conditions: "true" on the CRD that defines the custom resources you wish to monitor:

'{
  "success_conditions": [
    {
      "path": "$.status.conditions[?(@.type == \"Ready\")].status",
      "value": "True"
    }
  ],
  "failure_conditions": [
    {
      "path": "$.status.conditions[?(@.type == \"Failed\")].status",
      "value": "True",
      "error_msg_path": "$.status.conditions[?(@.type == \"Failed\")].message"
    }
  ]
}'

The paths defined here are based on the typical status properties as defined by the Kubernetes community. It expects the status subresource to contain a conditions array whose entries minimally specify type, status, and message fields.

You can see how these conditions relate to the following resource:

apiVersion: stable.shopify.io/v1
kind: Example
metadata:
  generation: 2
  name: example
  namespace: namespace
spec:
  ...
status:
  observedGeneration: 2
  conditions:
  - type: "Ready"
    status: "False"
    reason: "exampleNotReady"
    message: "resource is not ready"
  - type: "Failed"
    status: "True"
    reason: "exampleFailed"
    message: "resource is failed"

- Since observedGeneration == metadata.generation, kubernetes-deploy will check this resource's success and failure conditions.

- Since $.status.conditions[?(@.type == "Ready")].status == "False", the resource is not considered successful yet.

- $.status.conditions[?(@.type == "Failed")].status == "True" means that a failure condition has been fulfilled and the resource is considered failed.

- Since error_msg_path is specified, kubernetes-deploy will log the contents of $.status.conditions[?(@.type == "Failed")].message, which in this case is: resource is failed.
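The evaluation rules above (all success conditions must hold, any failure condition fails the resource, observedGeneration must match, custom_error_msg beats error_msg_path) can be sketched in Ruby. Ruby's standard library has no JsonPath implementation, so each path is replaced by a small lambda from a hypothetical condition_field helper that understands only the conditions[?(@.type == ...)] shape. The sketch also assumes failure conditions are checked before success conditions; the README does not specify an order.

```ruby
# Hypothetical stand-in for a JsonPath query of the form
#   $.status.conditions[?(@.type == "<type>")].<field>
def condition_field(type, field)
  lambda do |resource|
    conditions = resource.dig("status", "conditions") || []
    conditions.select { |condition| condition["type"] == type }.map { |condition| condition[field] }
  end
end

# Mirrors the default conditions listed above.
DEFAULT_CONDITIONS = {
  success_conditions: [
    { path: condition_field("Ready", "status"), value: "True" },
  ],
  failure_conditions: [
    { path: condition_field("Failed", "status"), value: "True",
      error_msg_path: condition_field("Failed", "message") },
  ],
}.freeze

# A condition is fulfilled when any value found at its path equals the target.
def fulfilled?(condition, resource)
  condition[:path].call(resource).include?(condition[:value])
end

# Returns [:pending | :succeeded | :failed, error_message_or_nil].
def rollout_status(resource, conditions = DEFAULT_CONDITIONS)
  # Only evaluate once the controller has observed the latest spec.
  unless resource.dig("status", "observedGeneration") == resource.dig("metadata", "generation")
    return [:pending, nil]
  end

  failure = conditions.fetch(:failure_conditions, []).find { |c| fulfilled?(c, resource) }
  if failure
    # custom_error_msg takes precedence over error_msg_path.
    message = failure[:custom_error_msg]
    message ||= failure[:error_msg_path].call(resource).first if failure[:error_msg_path]
    return [:failed, message]
  end

  if conditions.fetch(:success_conditions).all? { |c| fulfilled?(c, resource) }
    [:succeeded, nil]
  else
    [:pending, nil] # keep waiting until success, failure or timeout
  end
end
```

Fed the Example resource shown above, this sketch reports it as failed with the message "resource is failed".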

kubernetes-restart is a tool for restarting all of the pods in one or more deployments. It triggers the restart by touching the RESTARTED_AT environment variable in the deployment's podSpec. The rollout strategy defined for each deployment will be respected by the restart.

Option 1: Specify the deployments you want to restart

The following command will restart all pods in the web and jobs deployments:

kubernetes-restart <kube namespace> <kube context> --deployments=web,jobs

Option 2: Annotate the deployments you want to restart

Add the annotation shipit.shopify.io/restart to all the deployments you want to target, like this:

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: web
  annotations:
    shipit.shopify.io/restart: "true"

With this done, you can use the following command to restart all of them:

kubernetes-restart <kube namespace> <kube context>
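The restart mechanism described above (touching RESTARTED_AT in the pod spec) and the annotation-based selection can be sketched as pure functions over Deployment manifests parsed into Hashes. This is an illustration, not kubernetes-restart's code: restart_targets and with_restart_marker are hypothetical names, the ISO8601 timestamp format is an assumption, and the sketch only builds the resulting manifest rather than sending it to the API server.

```ruby
require "time"

RESTART_ANNOTATION = "shipit.shopify.io/restart"

# Option 2: select the deployments that carry the restart annotation.
def restart_targets(deployments)
  deployments.select do |deployment|
    deployment.dig("metadata", "annotations", RESTART_ANNOTATION) == "true"
  end
end

# Return a copy of the deployment with RESTARTED_AT set on every container.
# Any change to the pod template makes the Deployment controller roll out
# new pods using the deployment's own rollout strategy.
def with_restart_marker(deployment, now = Time.now.utc)
  copy = Marshal.load(Marshal.dump(deployment)) # deep copy; input untouched
  copy.dig("spec", "template", "spec", "containers").each do |container|
    env = (container["env"] ||= [])
    env.reject! { |var| var["name"] == "RESTARTED_AT" }
    env << { "name" => "RESTARTED_AT", "value" => now.iso8601 }
  end
  copy
end
```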

kubernetes-run is a tool for triggering a one-off job, such as a rake task, outside of a deploy.

Requirements:

- You've already deployed a PodTemplate object with field template containing a Pod specification that does not include the apiVersion or kind parameters. An example is provided in this repo in test/fixtures/hello-cloud/template-runner.yml.

- The Pod specification in that template has a container named task-runner.

Based on this specification kubernetes-run will create a new pod with the entrypoint of the task-runner container overridden with the supplied arguments.

kubernetes-run <kube namespace> <kube context> <arguments> --entrypoint=<entrypoint> --template=<template name>

Options:

- --template=TEMPLATE: Specifies the name of the PodTemplate to use (the default is task-runner-template if this option is not set).

- --env-vars=ENV_VARS: Accepts a comma-separated list of environment variables to be added to the pod template. For example, --env-vars="ENV=VAL,ENV2=VAL2" will make ENV and ENV2 available to the container.

- --entrypoint=ENTRYPOINT: Specify the entrypoint to use to start the task runner container.

- --skip-wait: Skip verification of pod success.

- --max-watch-seconds=seconds: Raise a timeout error if the pod runs for longer than the specified number of seconds.
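The pod that kubernetes-run creates can be approximated with the following Ruby sketch, which derives a Pod manifest from a PodTemplate (both as Hashes). It is a hypothetical illustration, not the tool's implementation: build_task_pod and parse_env_vars are made-up names, and the random pod-name suffix is an assumption. It sets the container's command and args fields, which is how Kubernetes overrides an image's ENTRYPOINT and CMD.

```ruby
require "securerandom"

# Parse --env-vars="ENV=VAL,ENV2=VAL2" into Kubernetes env entries.
def parse_env_vars(value)
  value.split(",").map do |pair|
    name, val = pair.split("=", 2)
    raise ArgumentError, "invalid env var: #{pair.inspect}" if val.nil? || name.empty?
    { "name" => name, "value" => val }
  end
end

# Build a Pod from a PodTemplate's template field, overriding the
# task-runner container's entrypoint (command) and arguments (args).
def build_task_pod(pod_template, arguments, entrypoint: nil, env_vars: [])
  template = Marshal.load(Marshal.dump(pod_template.fetch("template"))) # deep copy
  container = template.fetch("spec").fetch("containers").find { |c| c["name"] == "task-runner" }
  raise ArgumentError, "PodTemplate has no container named task-runner" unless container

  container["command"] = Array(entrypoint) if entrypoint
  container["args"] = arguments
  container["env"] = (container["env"] || []) + env_vars

  metadata = template.fetch("metadata", {})
  base_name = pod_template.dig("metadata", "name") || "task-runner"
  metadata["name"] = "#{base_name}-#{SecureRandom.hex(4)}" # hypothetical naming scheme
  { "apiVersion" => "v1", "kind" => "Pod", "metadata" => metadata, "spec" => template["spec"] }
end
```

The template's Pod specification omits apiVersion and kind, as the requirements state, so the sketch adds them when wrapping the spec into a Pod.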

kubernetes-render is a tool for rendering ERB templates to raw Kubernetes YAML. It's useful for seeing what kubernetes-deploy does before actually invoking kubectl on the rendered YAML. It's also useful for outputting YAML that can be passed to other tools, for validation or introspection purposes.

- kubernetes-render does not require a running cluster or an active Kubernetes context, which is nice if you want to run it in a CI environment, potentially alongside something like https://github.com/garethr/kubeval to make sure your configuration is sound.

- Like the other kubernetes-deploy commands, kubernetes-render requires the $REVISION environment variable to be set, and will make it available as current_sha in your ERB templates.

To render all templates in your template dir, run:

kubernetes-render --template-dir=./path/to/template/dir

To render some templates in a template dir, run kubernetes-render with the names of the templates to render:

kubernetes-render --template-dir=./path/to/template/dir this-template.yaml.erb that-template.yaml.erb

Options:

- --template-dir=DIR: Used to set the directory to interpret template names relative to. This is often the same directory passed as --template-dir when running kubernetes-deploy to actually deploy templates. Set $ENVIRONMENT instead to use config/deploy/$ENVIRONMENT.

- --bindings=BINDINGS: Makes additional variables available to your ERB templates. For example, kubernetes-render --bindings=color=blue,size=large some-template.yaml.erb will expose color and size to some-template.yaml.erb.

If you work for Shopify, just run dev up, but otherwise:

- Install kubectl version 1.10.0 or higher and make sure it is in your path

- Install minikube (required to run the test suite)

- Check out the repo

- Run bin/setup to install dependencies

To install this gem onto your local machine, run bundle exec rake install.

Using minikube:

- Start minikube (minikube start [options]).

- Make sure you have a context named "minikube" in your kubeconfig. Minikube adds this context for you when you run minikube start. You can check for it using kubectl config get-contexts.

- Run bundle exec rake test (or dev test if you work for Shopify).

Using another local cluster:

- Start your cluster.

- Put the name of the context you want to use in a file named .local-context in the root of this project. For example: echo "dind" > .local-context.

- Run bundle exec rake test (or dev test if you work for Shopify).

To make StatsD log what it would have emitted, run a test with STATSD_DEV=1.

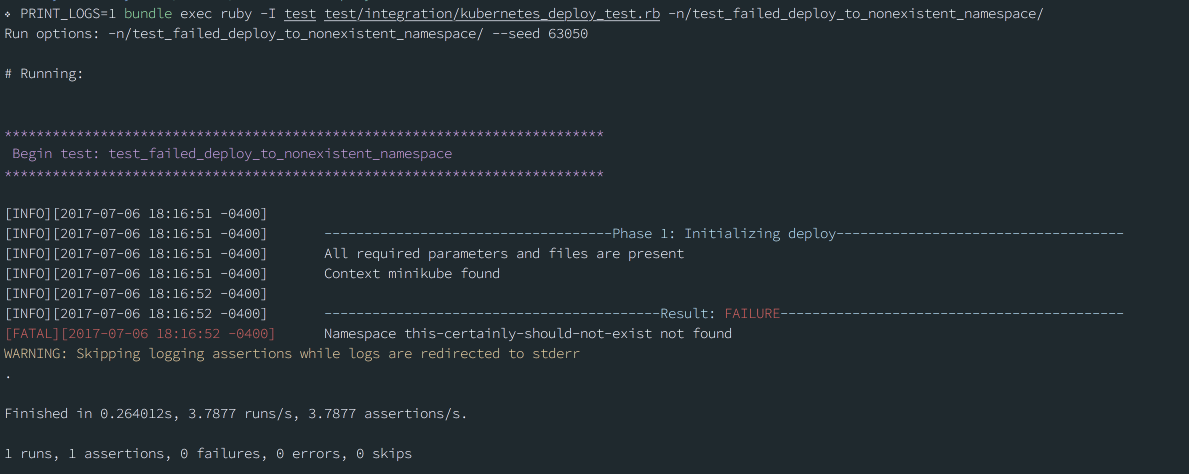

To see the full-color output of a specific integration test, you can use PRINT_LOGS=1. For example: PRINT_LOGS=1 bundle exec ruby -I test test/integration/kubernetes_deploy_test.rb -n/test_name/.

- Make sure all merged PRs are reflected in the changelog before creating the commit for the new version.

- Update the version number in version.rb and commit that change with message "Version x.y.z". Don't push yet or you'll confuse Shipit.

- Tag the version with git tag vx.y.z -a -m "Version x.y.z"

- Push both your bump commit and its tag simultaneously with git push origin master --follow-tags (note that you can set git config --global push.followTags true to turn this flag on by default)

- Use the Shipit Stack to build the .gem file and upload to rubygems.org.

If you push your commit and the tag separately, Shipit usually fails with "You need to create the v0.7.9 tag first." To make it find your tag, go to Settings > Resynchronize this stack > Clear git cache.

Please make sure you run the tests locally before submitting your PR (see Running the test suite locally). After reviewing your PR, a Shopify employee will trigger CI for you.

Go to the kubernetes-deploy-gem pipeline and click "New Build". Use branch external_contrib_ci and the specific sha of the commit you want to build. Add BUILDKITE_REFSPEC="refs/pull/${PR_NUM}/head" in the Environment Variables section.

Bug reports and pull requests are welcome on GitHub at https://github.com/Shopify/kubernetes-deploy.

Contributions to help us support additional resource types or increase the sophistication of our success heuristics for an existing type are especially encouraged! (See tips below)

- This project's mission is to make it easy to ship changes to a Kubernetes namespace and understand the result. Features that introduce new classes of responsibility to the tool are not usually accepted.

- Deploys can be a very tempting place to cram features. Imagine a proposed feature actually fits better elsewhere—where might that be? (Examples: validator in CI, custom controller, initializer, pre-processing step in the CD pipeline, or even Kubernetes core)

- The basic ERB renderer included with the tool is intended as a convenience feature for a better out-of-the box experience. Providing complex rendering capabilities is out of scope of this project's mission, and enhancements in this area may be rejected.

- The deploy command does not officially support non-namespaced resource types.

- This project strives to be composable with other tools in the ecosystem, such as renderers and validators. The deploy command must work with any Kubernetes templates provided to it, no matter how they were generated.

- This project is open-source. Features tied to any specific organization (including Shopify) will be rejected.

- The deploy command must remain performant when given several hundred resources at a time, generating 1000+ pods. (Technical note: This means only sync methods can make calls to the Kubernetes API server during result verification. This both limits the number of API calls made and ensures a consistent view of the world within each polling cycle.)

- This tool must be able to run concurrent deploys to different targets safely, including when used as a library.

The list of fully supported types is effectively the list of classes found in lib/kubernetes-deploy/kubernetes_resource/.

This gem uses subclasses of KubernetesResource to implement custom success/failure detection logic for each resource type. If no subclass exists for a type you're deploying, the gem simply assumes kubectl apply succeeded (and prints a warning about this assumption). We're always looking to support more types! Here are the basic steps for contributing a new one:

- Create the file for your type in lib/kubernetes-deploy/kubernetes_resource/

- Create a new class that inherits from KubernetesResource. Minimally, it should implement the following methods:
  - sync: Gather the data you'll need to determine deploy_succeeded? and deploy_failed?. The superclass's implementation fetches the corresponding resource, parses it and stores it in @instance_data. You can define your own implementation if you need something else.
  - deploy_succeeded?
  - deploy_failed?

- Adjust the TIMEOUT constant to an appropriate value for this type.

- Add a basic example of the type to the hello-cloud fixture set and appropriate assertions to #assert_all_up in hello_cloud.rb. This will get you coverage in several existing tests, such as test_full_hello_cloud_set_deploy_succeeds.

- Add tests for any edge cases you foresee.
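The shape of such a subclass can be sketched in Ruby. The real KubernetesResource base class has more responsibilities than described here, so the sketch defines a tiny hypothetical stand-in (StubKubernetesResource) that lets it run on its own. The Widget type, its status.phase field and its timeout value are all made up for illustration.

```ruby
# Hypothetical stand-in for the gem's KubernetesResource base class, reduced
# to what the steps above mention: sync stores parsed data in @instance_data.
class StubKubernetesResource
  TIMEOUT = 5 * 60 # seconds

  def initialize(fetcher)
    @fetcher = fetcher # callable returning the resource as a Hash, or nil
    @instance_data = {}
  end

  def sync
    @instance_data = @fetcher.call || {}
  end

  def timeout
    self.class::TIMEOUT
  end
end

# Hypothetical custom type whose controller reports status.phase.
class Widget < StubKubernetesResource
  TIMEOUT = 2 * 60 # seconds; chosen arbitrarily for the example

  def deploy_succeeded?
    observed_current? && @instance_data.dig("status", "phase") == "Ready"
  end

  def deploy_failed?
    observed_current? && @instance_data.dig("status", "phase") == "Failed"
  end

  private

  # Only trust status once the controller has seen the latest spec.
  def observed_current?
    @instance_data.dig("status", "observedGeneration") == @instance_data.dig("metadata", "generation")
  end
end
```

Keeping all API access inside sync, and making the predicate methods read only @instance_data, matches the performance guideline above that only sync methods talk to the API server.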

Everyone is expected to follow our Code of Conduct.

The gem is available as open source under the terms of the MIT License.