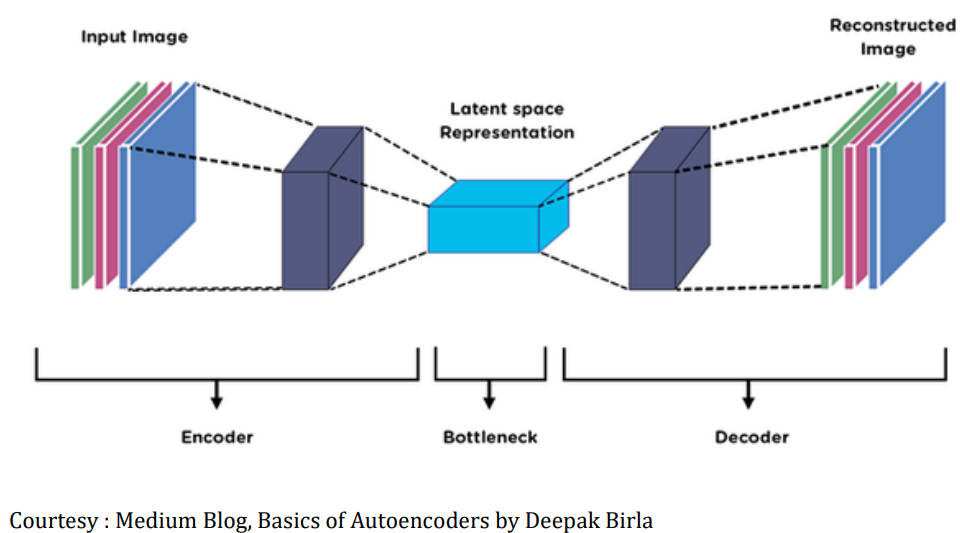

Image colorization with autoencoders adds color to grayscale images automatically. An autoencoder is a type of neural network that learns to encode an input into a compact internal representation and then decode an output from that representation. The aim of this project is to colorize grayscale images with an autoencoder architecture. The workflow covers data preparation, model definition, training, and deployment for practical use.

This project requires a dataset of grayscale images paired with their corresponding color versions. The images should be preprocessed to match the model's expected input, which may include resizing, normalization, and splitting into training and validation sets.
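A minimal preprocessing sketch with Pillow and NumPy, assuming the grayscale and color images are already loaded as paired `PIL.Image` objects. Grayscale images are replicated to three channels so they fit the (120, 120, 3) input described below. The function names, the [0, 1] scaling, and the 10% validation split are illustrative assumptions, not the project's exact pipeline.

```python
import numpy as np
from PIL import Image

IMG_SIZE = (120, 120)  # matches the (120, 120, 3) model input


def preprocess(image: Image.Image) -> np.ndarray:
    """Resize to 120x120, force 3 channels, and scale pixels to [0, 1]."""
    # convert("RGB") replicates a single grayscale channel into three.
    rgb = image.convert("RGB").resize(IMG_SIZE)
    return np.asarray(rgb, dtype=np.float32) / 255.0


def build_dataset(gray_images, color_images):
    """Stack paired images into (N, 120, 120, 3) input and target arrays."""
    x = np.stack([preprocess(img) for img in gray_images])
    y = np.stack([preprocess(img) for img in color_images])
    return x, y


def train_val_split(x, y, val_fraction=0.1, seed=0):
    """Shuffle pairs with a fixed seed and hold out a validation fraction."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    n_val = int(len(x) * val_fraction)
    val, train = idx[:n_val], idx[n_val:]
    return x[train], y[train], x[val], y[val]
```

If the dataset only contains color images, a grayscale input can be derived from each one with `image.convert("L")` before calling `preprocess`.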

- The encoder is the convolutional half of the autoencoder and extracts features from the input image.

- It begins with an input layer that accepts images of shape (120, 120, 3). Each convolutional layer is followed by batch normalization and max pooling.

- The number of filters increases layer by layer (64, 128, 256, 512), so deeper layers capture progressively higher-level features.

- Together, these layers compress each image into a compact, increasingly abstract representation that carries the information needed for colorization.

- The decoder, the second half of the autoencoder, reconstructs images from this compact representation. It starts with an input layer shaped to match the encoder's output, followed by batch normalization. Conv2DTranspose layers with a stride of 2, each followed by batch normalization, then upsample the representation step by step, reversing the encoder's downsampling.
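The encoder and decoder described above can be sketched in Keras roughly as follows. This is a sketch under stated assumptions, not the project's exact model: the 3 × 3 kernels, ReLU activations, decoder filter counts, and the final cropping and sigmoid output layer are hypothetical. Because 120 is not divisible by 2⁴, four 2 × 2 poolings with `padding="same"` produce an 8 × 8 × 512 code; four stride-2 transposed convolutions then yield 128 × 128, which is cropped back to 120 × 120.

```python
from tensorflow import keras

layers = keras.layers


def build_encoder(input_shape=(120, 120, 3)):
    inputs = keras.Input(shape=input_shape)
    x = inputs
    for filters in (64, 128, 256, 512):
        x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
        x = layers.BatchNormalization()(x)
        # padding="same" rounds up: 120 -> 60 -> 30 -> 15 -> 8
        x = layers.MaxPooling2D(2, padding="same")(x)
    return keras.Model(inputs, x, name="encoder")


def build_decoder(latent_shape):
    # Input layer shaped to match the encoder's output.
    inputs = keras.Input(shape=latent_shape)
    x = layers.BatchNormalization()(inputs)
    for filters in (256, 128, 64, 32):  # hypothetical filter counts
        x = layers.Conv2DTranspose(
            filters, 3, strides=2, padding="same", activation="relu"
        )(x)
        x = layers.BatchNormalization()(x)
    # 8 -> 16 -> 32 -> 64 -> 128; crop 4 px per side back to 120.
    x = layers.Cropping2D(cropping=4)(x)
    # Assumed output: RGB values in [0, 1].
    outputs = layers.Conv2D(3, 3, padding="same", activation="sigmoid")(x)
    return keras.Model(inputs, outputs, name="decoder")


def build_autoencoder(input_shape=(120, 120, 3)):
    encoder = build_encoder(input_shape)
    decoder = build_decoder(tuple(encoder.outputs[0].shape[1:]))
    inputs = keras.Input(shape=input_shape)
    return keras.Model(inputs, decoder(encoder(inputs)), name="colorization_autoencoder")
```

Keeping the encoder and decoder as separate `keras.Model` objects mirrors the description above and lets each half be inspected or reused on its own.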

The training phase optimizes the model's parameters to minimize the difference between the predicted colorized images and the ground-truth color images. Grayscale images are fed into the autoencoder, its outputs are compared with the corresponding color images, and the weights are adjusted by gradient descent to reduce that difference.

- The model was trained for 14 epochs, which was found to be optimal, on 120 × 120 grayscale and color landscape image pairs.
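A minimal Keras sketch of the training step. Only the 14 epochs come from the text; mean squared error as the pixel-wise loss, the Adam optimizer with a learning rate of 1e-3, the batch size of 32, and the helper name `train_colorizer` are assumptions. It works with any Keras model that maps grayscale inputs to color outputs of the same shape.

```python
from tensorflow import keras


def train_colorizer(model, x_train, y_train, x_val, y_val, epochs=14, batch_size=32):
    """Compile and fit a colorization model (hypothetical helper).

    Assumes pixel values in [0, 1]; MSE penalizes the per-pixel
    difference between predicted and ground-truth color images.
    """
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-3), loss="mse")
    return model.fit(
        x_train,
        y_train,
        validation_data=(x_val, y_val),
        epochs=epochs,
        batch_size=batch_size,
    )
```

The returned `History` object records `loss` and `val_loss` per epoch, which is one way to judge when additional epochs stop helping.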

The project requires:

- Python 3.10.6

- TensorFlow

- NumPy

- Pillow

- Streamlit

Install the required packages using pip:

```bash
pip install -r requirements.txt
```

This project uses Streamlit for deployment. Streamlit is a Python framework that makes it easy to wrap machine learning models in interactive web applications. To launch the app, run the Streamlit application script and follow the instructions it displays:

```bash
streamlit run app.py
```

A hosted deployment of the app is also available.
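A minimal sketch of what an `app.py` along these lines could look like. The model file name `colorization_model.keras` and the `colorize` helper are hypothetical, and the sketch assumes the saved model takes (120, 120, 3) grayscale inputs and returns RGB values in [0, 1]. The Streamlit and TensorFlow imports live inside `main()` so the preprocessing logic can be reused without them.

```python
import numpy as np
from PIL import Image

IMG_SIZE = (120, 120)
MODEL_PATH = "colorization_model.keras"  # hypothetical file name for the saved model


def colorize(model, image):
    """Run a (120, 120, 3) -> (120, 120, 3) colorization model on one PIL image."""
    # Grayscale, resize, and replicate to 3 channels to match the model input.
    gray = image.convert("L").resize(IMG_SIZE).convert("RGB")
    batch = np.asarray(gray, dtype=np.float32)[np.newaxis] / 255.0
    # Assumes the model outputs RGB values in [0, 1].
    pred = np.asarray(model(batch))[0]
    return Image.fromarray((np.clip(pred, 0.0, 1.0) * 255.0).round().astype(np.uint8))


def main():
    import streamlit as st
    from tensorflow import keras

    st.title("Image Colorization")
    uploaded = st.file_uploader("Upload a grayscale image", type=["png", "jpg", "jpeg"])
    if uploaded is not None:
        model = keras.models.load_model(MODEL_PATH)
        image = Image.open(uploaded)
        st.image(image, caption="Input")
        st.image(colorize(model, image), caption="Colorized")


if __name__ == "__main__":
    main()
```

Streamlit reruns the script on every interaction, so this sketch reloads the model each time; wrapping the loading step in a function decorated with `st.cache_resource` avoids that.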

Distributed under the MIT License.