optillm is an OpenAI API-compatible optimizing inference proxy that implements several state-of-the-art techniques for improving the accuracy and performance of LLMs. The current focus is on techniques that improve reasoning on coding, logical, and mathematical queries. By spending additional compute at inference time, these techniques make it possible to beat frontier models across diverse tasks.

| Model | pass@1 | pass@5 | pass@10 |
|---|---|---|---|
| plansearch-gpt-4o-mini | 44.03 | 59.31 | 63.5 |
| gpt-4o-mini | 43.9 | 50.61 | 53.25 |
| claude-3.5-sonnet | 51.3 | | |
| gpt-4o-2024-05-13 | 45.2 | | |
| gpt-4-turbo-2024-04-09 | 44.2 | | |
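The table does not state how the pass@k columns were computed. A common choice for code benchmarks is the unbiased estimator introduced alongside HumanEval: generate n samples per problem, count the c samples that pass, estimate pass@k = 1 - C(n-c, k) / C(n, k), and average over problems. The sketch below assumes that estimator; the function names and the toy counts are hypothetical and are not optillm's evaluation data.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem (assumed estimator).

    n: samples generated, c: samples that pass, k: sampling budget.
    Probability that at least one of k samples drawn without replacement
    from the n generated samples passes: 1 - C(n - c, k) / C(n, k).
    """
    if k > n:
        raise ValueError("k must not exceed n")
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) per problem, as a percentage."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem counts: (samples generated, samples passing).
toy_results = [(10, 0), (10, 1), (10, 5), (10, 10)]
for k in (1, 5, 10):
    print(f"pass@{k}: {mean_pass_at_k(toy_results, k):.2f}")
```

This also explains why pass@5 and pass@10 can only be reported for models where multiple samples per problem were generated.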

Just clone the repository with git and use pip install to set up the dependencies.

```bash
git clone https://github.com/codelion/optillm.git
cd optillm
python3 -m venv .venv
source .venv/bin/activate
pip install -r requirements.txt
```

You can then run the optillm proxy as follows.

```bash
python optillm.py
```

```
2024-09-06 07:57:14,191 - INFO - Starting server with approach: auto
2024-09-06 07:57:14,191 - INFO - Server configuration: {'approach': 'auto', 'mcts_simulations': 2, 'mcts_exploration': 0.2, 'mcts_depth': 1, 'best_of_n': 3, 'model': 'gpt-4o-mini', 'rstar_max_depth': 3, 'rstar_num_rollouts': 5, 'rstar_c': 1.4, 'base_url': ''}
 * Serving Flask app 'optillm'
 * Debug mode: off
2024-09-06 07:57:14,212 - INFO - WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
 * Running on all addresses (0.0.0.0)
 * Running on http://127.0.0.1:8000
 * Running on http://192.168.10.48:8000
2024-09-06 07:57:14,212 - INFO - Press CTRL+C to quit
```

Once the proxy is running, you can use it as a drop-in replacement for an OpenAI client by setting `base_url` to `http://localhost:8000/v1`.

import os

from openai import OpenAI

OPENAI_KEY = os.environ.get("OPENAI_API_KEY")

OPENAI_BASE_URL = "https://localhost:8000/v1"

client = OpenAI(api_key=OPENAI_KEY, base_url=OPENAI_BASE_URL)

response = client.chat.completions.create(

model="moa-gpt-4o",

messages=[

{

"role": "user",

"content": "Write a Python program to build an RL model to recite text from any position that the user provides, using only numpy."

}

],

temperature=0.2

)

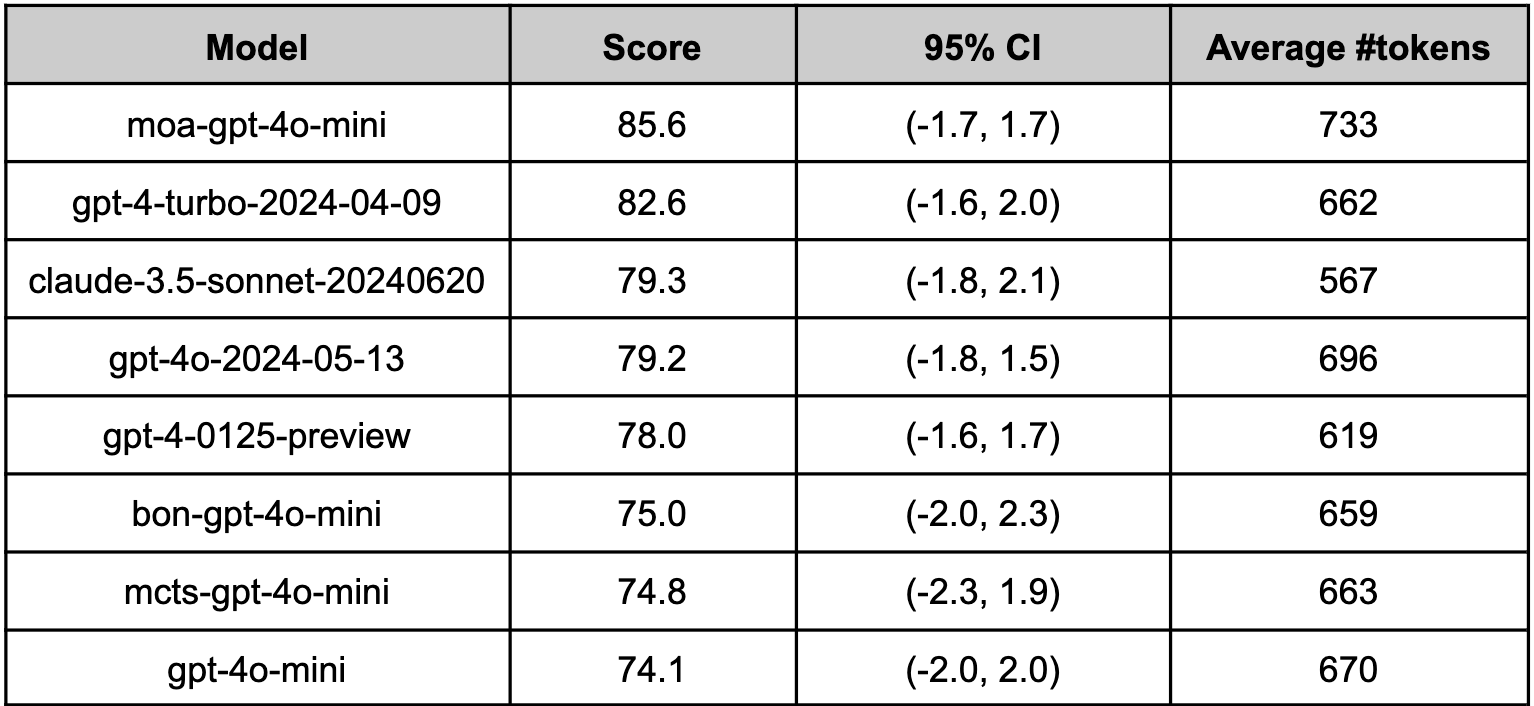

print(response)You can control the technique you use for optimization by prepending the slug to the model name {slug}-model-name. E.g. in the above code we are using moa or

mixture of agents as the optimization approach. In the proxy logs you will see the following showing the moa is been used with the base model as gpt-4o-mini.

```
2024-09-06 08:35:32,597 - INFO - Using approach moa, with gpt-4o-mini
2024-09-06 08:35:35,358 - INFO - HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"
2024-09-06 08:35:39,553 - INFO - HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"
2024-09-06 08:35:44,795 - INFO - HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"
2024-09-06 08:35:44,797 - INFO - 127.0.0.1 - - [06/Sep/2024 08:35:44] "POST /v1/chat/completions HTTP/1.1" 200 -
```

The following techniques are currently implemented:

| Technique | Slug | Description |
|---|---|---|
| Monte Carlo Tree Search | `mcts` | Uses MCTS for decision-making in chat responses |
| Best of N Sampling | `bon` | Generates multiple responses and selects the best one |
| Mixture of Agents | `moa` | Combines responses from multiple critiques |
| Round Trip Optimization | `rto` | Optimizes responses through a round-trip process |
| Z3 Solver | `z3` | Utilizes the Z3 theorem prover for logical reasoning |
| Self-Consistency | `self_consistency` | Implements an advanced self-consistency method |
| PV Game | `pvg` | Applies a prover-verifier game approach at inference time |
| R* Algorithm | `rstar` | Implements the R* algorithm for problem-solving |
| CoT with Reflection | `cot_reflection` | Implements chain-of-thought reasoning with `<thinking>`, `<reflection>` and `<output>` sections |
| PlanSearch | `plansearch` | Implements a search algorithm over candidate plans for solving a problem in natural language |
| LEAP | `leap` | Learns task-specific principles from few-shot examples |
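The `{slug}-model-name` convention can be illustrated with a small parser. This is a minimal sketch, not optillm's actual routing code: the helper name `split_model_name` is hypothetical, the slug list is copied from the table above, and falling back to `auto` (the approach shown in the startup log) when no known slug is present is an assumption.

```python
# Slugs copied from the technique table; the parsing rule itself is an assumption.
KNOWN_SLUGS = (
    "mcts", "bon", "moa", "rto", "z3", "self_consistency",
    "pvg", "rstar", "cot_reflection", "plansearch", "leap",
)


def split_model_name(model: str, default_approach: str = "auto") -> tuple[str, str]:
    """Split '{slug}-model-name' into (approach, base_model).

    Hypothetical helper: if the name does not start with a known slug followed
    by '-' and a non-empty model name, the whole string is treated as the base
    model and default_approach is returned.
    """
    for slug in KNOWN_SLUGS:
        prefix = slug + "-"
        if model.startswith(prefix) and len(model) > len(prefix):
            return slug, model[len(prefix):]
    return default_approach, model


print(split_model_name("moa-gpt-4o-mini"))        # ('moa', 'gpt-4o-mini')
print(split_model_name("cot_reflection-gpt-4o"))  # ('cot_reflection', 'gpt-4o')
print(split_model_name("gpt-4o-mini"))            # ('auto', 'gpt-4o-mini')
```

Matching against an explicit slug list, rather than splitting on the first `-`, lets un-prefixed model names such as `gpt-4o-mini` pass through unchanged instead of being misread as slug `gpt` plus model `4o-mini`.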

The implemented techniques draw on the following papers:

- In-Context Principle Learning from Mistakes
- Planning In Natural Language Improves LLM Search For Code Generation
- Self-Consistency Improves Chain of Thought Reasoning in Language Models
- Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers
- Mixture-of-Agents Enhances Large Language Model Capabilities
- Prover-Verifier Games improve legibility of LLM outputs
- Monte Carlo Tree Search Boosts Reasoning via Iterative Preference Learning
- Unsupervised Evaluation of Code LLMs with Round-Trip Correctness
- Patched MOA: optimizing inference for diverse software development tasks
- Patched RTC: evaluating LLMs for diverse software development tasks
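The technique table describes optillm's `self_consistency` approach only as "an advanced self-consistency method". For orientation, the basic idea from the self-consistency paper listed above is to sample several reasoning paths and take a majority vote over their final answers. The sketch below implements only that basic majority vote, not optillm's variant; `sample` and `extract_answer` are hypothetical callables standing in for an LLM call and an answer parser.

```python
from collections import Counter
from typing import Callable


def self_consistency(
    sample: Callable[[], str],
    extract_answer: Callable[[str], str],
    n: int = 5,
) -> str:
    """Basic majority-vote self-consistency (not optillm's implementation).

    Draws n completions, extracts a final answer from each, and returns the
    most frequent answer; ties go to the answer encountered first.
    """
    answers = [extract_answer(sample()) for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for an LLM: yields canned reasoning paths in order.
canned = iter([
    "... so the answer is 42",
    "... so the answer is 41",
    "... so the answer is 42",
    "... so the answer is 42",
    "... so the answer is 40",
])
result = self_consistency(
    sample=lambda: next(canned),
    extract_answer=lambda text: text.rsplit(" ", 1)[-1],
    n=5,
)
print(result)  # 42
```

In practice `sample` would wrap a chat-completion request made with a non-zero temperature, so that the sampled reasoning paths actually differ.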