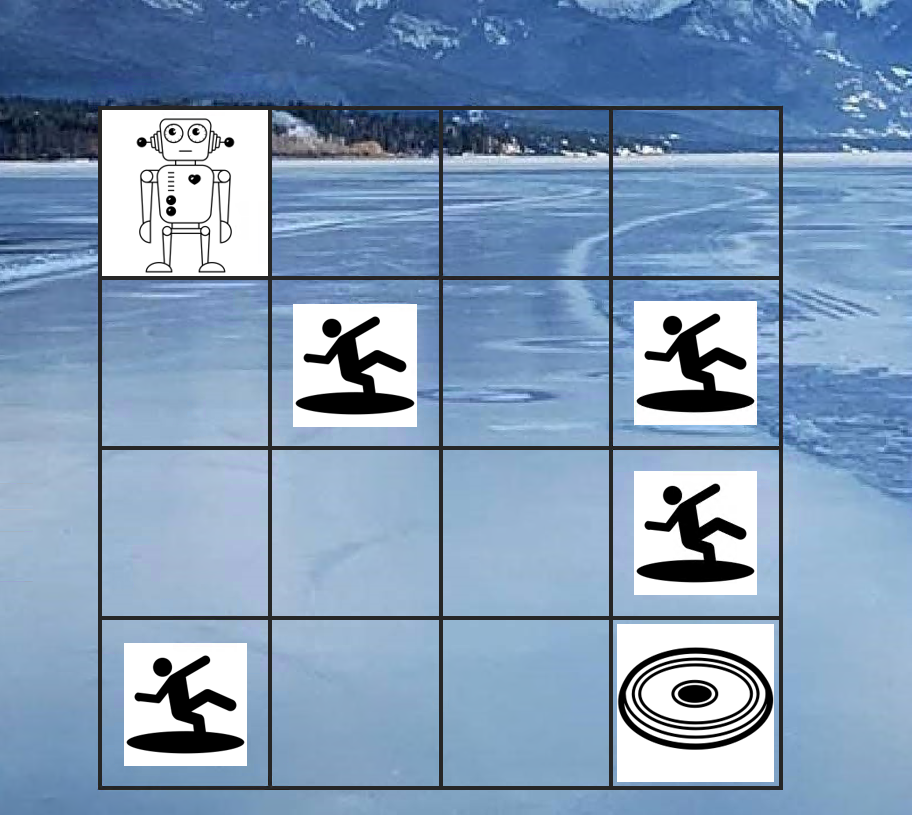

Path planning using reinforcement learning in an $n \times n$ grid environment.

The agent starts at $(0, 0)$ (top left) and must reach the goal at $(n-1, n-1)$ (bottom right).
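A minimal sketch of such an environment is below. It assumes four moves (up, down, left, right), a reward of -1 per move, and that a move off the edge leaves the agent in place. None of these details are specified above, and the `GridWorld` class is hypothetical.

```python
from typing import Tuple

# Assumed action set: 0=up, 1=down, 2=left, 3=right as (row, col) offsets.
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


class GridWorld:
    """Hypothetical n x n grid: start at (0, 0) top left, goal at (n-1, n-1) bottom right."""

    def __init__(self, n: int, step_reward: float = -1.0):
        self.n = n
        self.step_reward = step_reward  # assumed constant cost per move
        self.goal = (n - 1, n - 1)
        self.state = (0, 0)

    def reset(self) -> Tuple[int, int]:
        """Put the agent back on the start cell."""
        self.state = (0, 0)
        return self.state

    def step(self, action: int) -> Tuple[Tuple[int, int], float, bool]:
        """Apply one move and return (next_state, reward, done)."""
        dr, dc = ACTIONS[action]
        r, c = self.state
        # Clip to the grid so moves into a wall keep the agent where it is.
        r = min(max(r + dr, 0), self.n - 1)
        c = min(max(c + dc, 0), self.n - 1)
        self.state = (r, c)
        return self.state, self.step_reward, self.state == self.goal
```

For example, in a 3 x 3 grid the actions right, right, down, down take the agent from $(0, 0)$ to the goal $(2, 2)$, and only the last step returns `done=True`.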

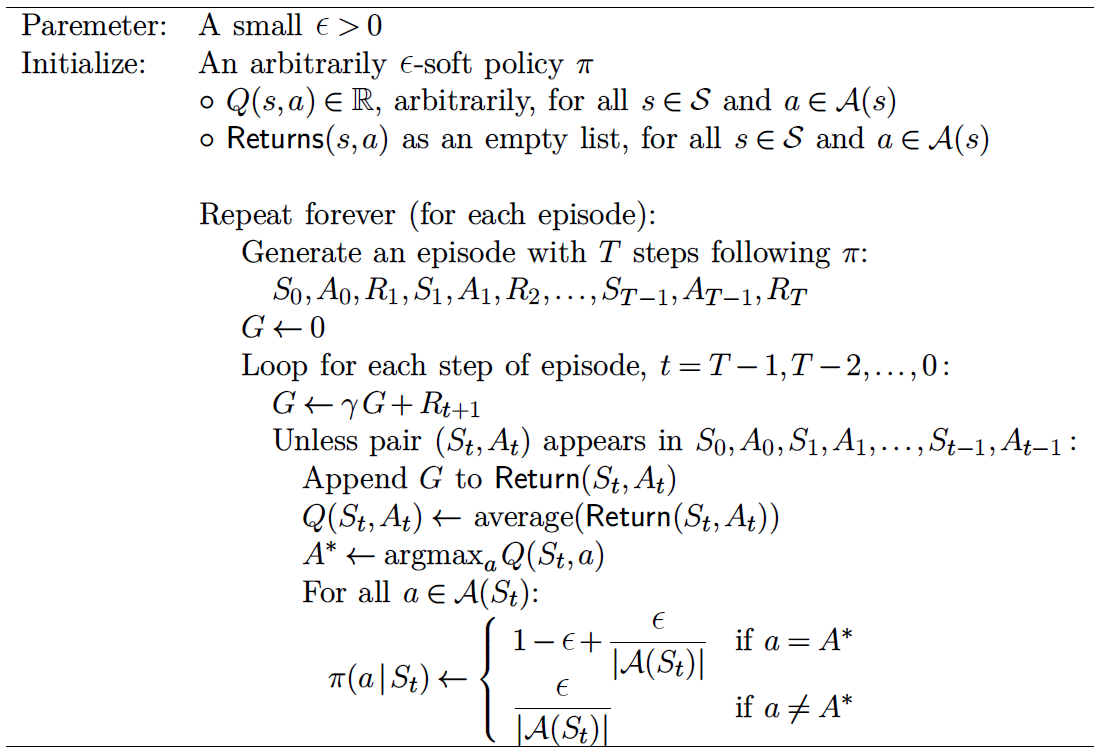

Current techniques

- Monte Carlo control without exploring starts
- SARSA with an $\epsilon$-greedy behavior policy
- Q-learning with an $\epsilon$-greedy behavior policy
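Monte Carlo control without exploring starts is commonly realised as on-policy first-visit MC control with an $\epsilon$-soft policy: every episode begins at $(0, 0)$, and exploration comes only from the $\epsilon$-greedy choice. The sketch below shows that variant. The grid dynamics (-1 per move, walls clip), the episode cap, and the names `step`, `epsilon_greedy`, and `mc_control` are all assumptions for illustration.

```python
import random
from collections import defaultdict

# Assumed action set: up, down, left, right as (row, col) offsets.
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def step(state, action, n):
    """Hypothetical grid dynamics: -1 per move, walls clip, goal at (n-1, n-1)."""
    r = min(max(state[0] + ACTIONS[action][0], 0), n - 1)
    c = min(max(state[1] + ACTIONS[action][1], 0), n - 1)
    nxt = (r, c)
    return nxt, -1.0, nxt == (n - 1, n - 1)


def epsilon_greedy(Q, state, epsilon, rng):
    """Random action with probability epsilon, else a greedy one (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    values = [Q[(state, a)] for a in range(len(ACTIONS))]
    best = max(values)
    return rng.choice([a for a, v in enumerate(values) if v == best])


def mc_control(n, episodes=2000, epsilon=0.1, gamma=1.0, max_steps=500, seed=0):
    """On-policy first-visit MC control with an epsilon-greedy (epsilon-soft) policy."""
    rng = random.Random(seed)
    Q = defaultdict(float)     # action-value estimates, default 0
    visits = defaultdict(int)  # first-visit counts per (state, action)
    for _ in range(episodes):
        # Every episode starts at (0, 0): no exploring starts.
        state, episode = (0, 0), []
        for _ in range(max_steps):  # cap guards against very long early episodes
            action = epsilon_greedy(Q, state, epsilon, rng)
            nxt, reward, done = step(state, action, n)
            episode.append((state, action, reward))
            state = nxt
            if done:
                break
        # Time step of the first visit to each (state, action) pair.
        first = {}
        for t, (s, a, _) in enumerate(episode):
            first.setdefault((s, a), t)
        # Walk backwards accumulating the return G; update only at first visits.
        G = 0.0
        for t in range(len(episode) - 1, -1, -1):
            s, a, r = episode[t]
            G = gamma * G + r
            if first[(s, a)] == t:
                visits[(s, a)] += 1
                Q[(s, a)] += (G - Q[(s, a)]) / visits[(s, a)]  # incremental mean of returns
    return Q
```

After `Q = mc_control(4)`, the greedy action in a state `s` can be read off as `max(range(4), key=lambda a: Q[(s, a)])`. Averaging returns incrementally avoids storing every return per state-action pair.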

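SARSA update rule:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma Q(S_{t+1}, A_{t+1}) - Q(S_t, A_t) \right]$$

Q-learning update rule:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_{a} Q(S_{t+1}, a) - Q(S_t, A_t) \right]$$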
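A minimal tabular sketch of the two temporal-difference methods follows. Both use the standard one-step update with step size $\alpha$ and discount $\gamma$; they differ only in the bootstrap term. SARSA (on-policy) uses $Q(S_{t+1}, A_{t+1})$ for the action the $\epsilon$-greedy policy actually takes next, while Q-learning (off-policy) uses $\max_a Q(S_{t+1}, a)$. The grid dynamics, hyperparameter values, and the names `step`, `epsilon_greedy`, and `td_control` are hypothetical.

```python
import random
from collections import defaultdict

# Assumed action set: up, down, left, right as (row, col) offsets.
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def step(state, action, n):
    """Hypothetical grid dynamics: -1 per move, walls clip, goal at (n-1, n-1)."""
    r = min(max(state[0] + ACTIONS[action][0], 0), n - 1)
    c = min(max(state[1] + ACTIONS[action][1], 0), n - 1)
    nxt = (r, c)
    return nxt, -1.0, nxt == (n - 1, n - 1)


def epsilon_greedy(Q, state, epsilon, rng):
    """Random action with probability epsilon, else a greedy one (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    values = [Q[(state, a)] for a in range(len(ACTIONS))]
    best = max(values)
    return rng.choice([a for a, v in enumerate(values) if v == best])


def td_control(n, method, episodes=500, alpha=0.5, gamma=1.0, epsilon=0.1,
               max_steps=1000, seed=0):
    """Tabular SARSA (method="sarsa") or Q-learning (method="q") with epsilon-greedy behavior."""
    if method not in ("sarsa", "q"):
        raise ValueError("method must be 'sarsa' or 'q'")
    rng = random.Random(seed)
    Q = defaultdict(float)
    for _ in range(episodes):
        state = (0, 0)
        action = epsilon_greedy(Q, state, epsilon, rng)
        for _ in range(max_steps):
            nxt, reward, done = step(state, action, n)
            if method == "sarsa":
                # On-policy: bootstrap from the action the behavior policy takes next.
                next_action = epsilon_greedy(Q, nxt, epsilon, rng)
                bootstrap = Q[(nxt, next_action)]
            else:
                # Off-policy: bootstrap from the greedy value of the next state.
                bootstrap = max(Q[(nxt, a)] for a in range(len(ACTIONS)))
            # The value of the terminal goal state is taken to be 0.
            target = reward if done else reward + gamma * bootstrap
            Q[(state, action)] += alpha * (target - Q[(state, action)])
            if done:
                break
            if method == "q":
                # Q-learning picks its next behavior action after the update.
                next_action = epsilon_greedy(Q, nxt, epsilon, rng)
            state, action = nxt, next_action
    return Q
```

With a reward of -1 per move, initialising every $Q$ to 0 is optimistic, so untried actions look attractive and early episodes explore on their own. Q-learning's max target estimates the greedy (shortest-path) values directly, whereas SARSA estimates the values of the $\epsilon$-greedy policy it actually follows.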