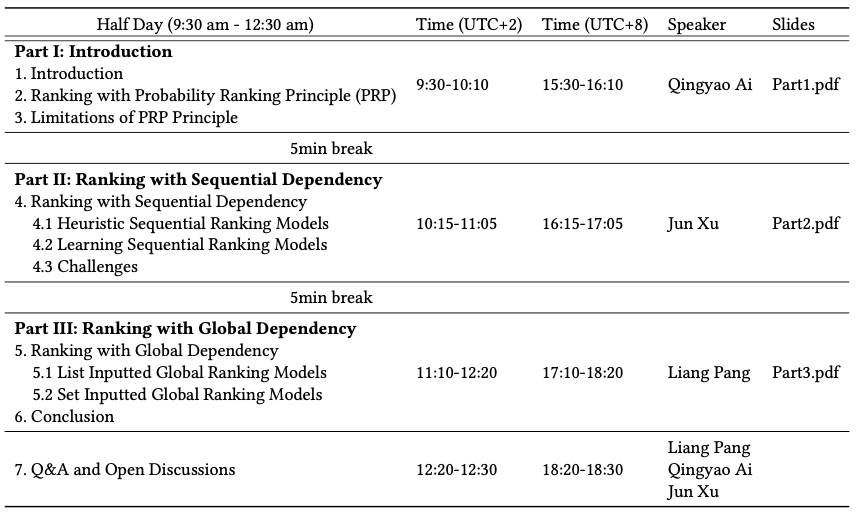

This WSDM 2021 tutorial will be held online on Monday, March 8th, 2021, by Liang Pang <pangliang[AT]ict.ac.cn>, Qingyao Ai <aiqy[AT]cs.utah.edu>, and Jun Xu <junxu[AT]ruc.edu.cn>.

The Probability Ranking Principle (PRP) is the fundamental principle for ranking. It assumes that each document has a unique and independent probability of satisfying a particular information need. Traditional heuristic features and well-known learning-to-rank approaches were designed following the PRP. Recent deep-learning-based ranking models, also referred to as "deep text matching", obey the PRP as well. However, the PRP is not optimal for ranking, because in many recent ranking tasks, such as pseudo relevance feedback and interactive information retrieval, each document is not independent of the rest. To address this problem, a new trend of ranking models turns to modeling the dependencies among documents. In this tutorial, we aim to give a comprehensive survey of recent progress on ranking models that go beyond the PRP. We hope this encourages researchers to focus on this field, which could lead to substantial improvements in information retrieval.
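As a minimal sketch of what ranking under the PRP amounts to, assume each document already has an independent estimate of its relevance probability. The function name `prp_rank` and the scores below are hypothetical and only serve as an illustration; they do not come from the tutorial material.

```python
from typing import Dict, List


def prp_rank(relevance: Dict[str, float]) -> List[str]:
    """Order documents by their independent relevance probability, highest first.

    Under the PRP, a document's position depends only on its own score,
    never on which other documents are in the list.
    """
    return sorted(relevance, key=lambda doc_id: relevance[doc_id], reverse=True)


# Hypothetical relevance probabilities for a single query.
prp_scores = {"d1": 0.70, "d2": 0.90, "d3": 0.65}
prp_ranking = prp_rank(prp_scores)
print(prp_ranking)  # ['d2', 'd1', 'd3']
```

Because every score is computed in isolation, two near-duplicate documents with high scores would both land at the top, which is the kind of limitation the dependency-aware models address.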

This tutorial consists of three parts. First, we introduce the ranking problem and the well-known Probability Ranking Principle. Second, we present traditional approaches under the PRP. Finally, we illustrate the limitations of the PRP and introduce the most recent work that models the dependencies among documents, either sequentially or globally.
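To make the idea of a sequential dependency concrete, the sketch below uses a simple greedy heuristic in the style of Maximal Marginal Relevance: each position is filled by the document with the best trade-off between its own relevance and its similarity to documents already selected. This is a stand-in for illustration, not one of the learned models covered in the tutorial; the function name, the `trade_off` parameter, and all scores are assumptions.

```python
from typing import Dict, FrozenSet, List


def greedy_sequential_rank(
    relevance: Dict[str, float],
    similarity: Dict[FrozenSet[str], float],
    trade_off: float = 0.5,
) -> List[str]:
    """Build a ranking one position at a time, penalizing redundancy.

    The gain of a candidate depends on the documents already ranked,
    so documents are no longer scored independently.
    """
    remaining = set(relevance)
    ranking: List[str] = []
    while remaining:
        def gain(doc_id: str) -> float:
            # Redundancy is the highest similarity to any already selected document.
            redundancy = max(
                (similarity.get(frozenset((doc_id, chosen)), 0.0) for chosen in ranking),
                default=0.0,
            )
            return trade_off * relevance[doc_id] - (1.0 - trade_off) * redundancy

        # Sorting the candidates first makes tie-breaking deterministic.
        best = max(sorted(remaining), key=gain)
        ranking.append(best)
        remaining.remove(best)
    return ranking


# Hypothetical data: d1 and d2 are near-duplicates, d3 covers something different.
seq_relevance = {"d1": 0.90, "d2": 0.85, "d3": 0.60}
seq_similarity = {
    frozenset(("d1", "d2")): 0.95,
    frozenset(("d1", "d3")): 0.10,
    frozenset(("d2", "d3")): 0.10,
}
seq_ranking = greedy_sequential_rank(seq_relevance, seq_similarity)
print(seq_ranking)  # ['d1', 'd3', 'd2']
```

Sorting the same relevance scores independently would give d1, d2, d3. Here d3 moves ahead of d2 because d2 is almost identical to the already selected d1.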

A more detailed introduction can be found here.

- [Part1] Introduction [Slide] [Bilibili] [YouTube]

- [Part2] Ranking with Sequential Dependency [Slide] [Bilibili] [YouTube]

- [Part3] Ranking with Global Dependency [Slide] [Bilibili] [YouTube]

- Text Matching as Image Recognition. Liang Pang, Yanyan Lan, Jiafeng Guo, Jun Xu, Shengxian Wan, Xueqi Cheng. AAAI 2016.

- DeepRank: a New Deep Architecture for Relevance Ranking in Information Retrieval. Liang Pang, Yanyan Lan, Jiafeng Guo, Jun Xu, Jingfang Xu, Xueqi Cheng. CIKM 2017.

- A Deep Architecture for Semantic Matching with Multiple Positional Sentence Representations. Shengxian Wan, Yanyan Lan, Jiafeng Guo, Jun Xu, Liang Pang and Xueqi Cheng. AAAI 2016.

- Match-SRNN: Modeling the Recursive Matching Structure with Spatial RNN. Shengxian Wan, Yanyan Lan, Jiafeng Guo, Jun Xu, Liang Pang and Xueqi Cheng. IJCAI 2016.

- A Deep Look into Neural Ranking Models for Information Retrieval. Jiafeng Guo, Yixing Fan, Liang Pang, Liu Yang, Qingyao Ai, Hamed Zamani, Chen Wu, W. Bruce Croft and Xueqi Cheng. Information Processing & Management (IPM).

- For more references, please see Part1-Introduction.

- Reinforcement Learning to Rank with Markov Decision Process. Wei Zeng, Jun Xu, Yanyan Lan, Jiafeng Guo, and Xueqi Cheng. SIGIR 2017.

- Adapting Markov Decision Process for Search Result Diversification. Long Xia, Jun Xu, Yanyan Lan, Jiafeng Guo, Wei Zeng, and Xueqi Cheng. SIGIR 2017.

- From Greedy Selection to Exploratory Decision-Making: Diverse Ranking with Policy-Value Networks. Yue Feng, Jun Xu, Yanyan Lan, Jiafeng Guo, Wei Zeng, and Xueqi Cheng. SIGIR 2018.

- For more references, please see Part2-Ranking with Sequential Dependency.

- Learning a Deep Listwise Context Model for Ranking Refinement. Qingyao Ai, Keping Bi, Jiafeng Guo and W. Bruce Croft. SIGIR 2018.

- Learning Groupwise Multivariate Scoring Functions Using Deep Neural Networks. Qingyao Ai, Xuanhui Wang, Sebastian Bruch, Nadav Golbandi, Michael Bendersky and Marc Najork. ICTIR 2019.

- SetRank: Learning a Permutation-Invariant Ranking Model for Information Retrieval. Liang Pang, Jun Xu, Qingyao Ai, Yanyan Lan, Xueqi Cheng, and Jirong Wen. SIGIR 2020.

- Analysis of Multivariate Scoring Functions for Automatic Unbiased Learning to Rank. Tao Yang, Shikai Fang, Shibo Li, Yulan Wang, and Qingyao Ai. CIKM 2020.

- For more references, please see Part3-Ranking with Global Dependency.