- 2024.5.27 code released

- 2024.5.24 arXiv preprint released

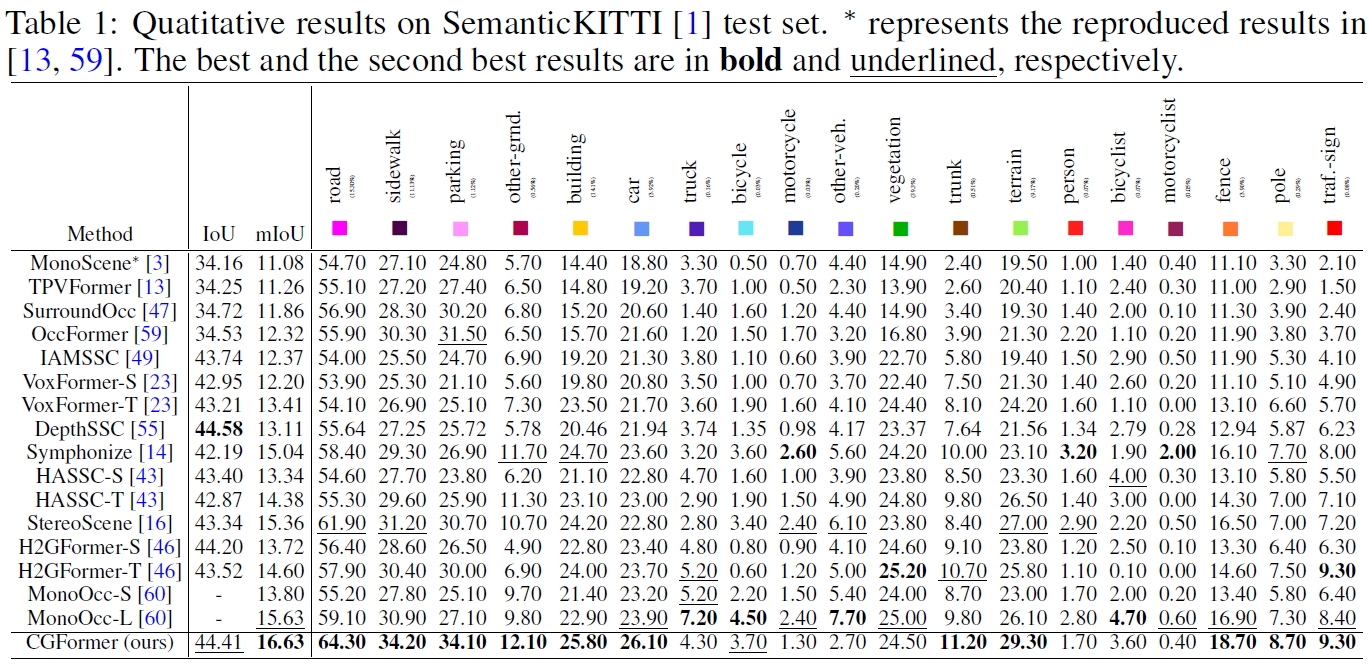

Vision-based Semantic Scene Completion (SSC) has gained much attention due to its widespread applications in various 3D perception tasks. Existing sparse-to-dense methods typically employ shared, context-independent queries across all input images. Because the focal regions of different inputs vary, such queries fail to capture the distinctions among images and may lead to undirected feature aggregation in cross-attention. Additionally, without depth information, points projected onto the image plane may share the same 2D position or sample similar locations in the feature map, resulting in depth ambiguity. In this paper, we present a novel context and geometry aware voxel transformer (CGVT). It utilizes a context aware query generator to initialize context-dependent queries tailored to individual input images, effectively capturing their unique characteristics and aggregating information within the region of interest. Furthermore, it extends deformable cross-attention from 2D to 3D pixel space, enabling the differentiation of points with similar image coordinates based on their depth coordinates. Building upon this module, we introduce a neural network named CGFormer to achieve semantic scene completion. In addition, CGFormer leverages multiple 3D representations (i.e., voxel and TPV) to boost the semantic and geometric representation abilities of the transformed 3D volume from both local and global perspectives. Experimental results demonstrate that CGFormer achieves state-of-the-art performance on the SemanticKITTI and SSCBench-KITTI-360 benchmarks, attaining an mIoU of 16.87 and 20.05, as well as an IoU of 45.99 and 48.07, respectively.
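The depth ambiguity described above can be illustrated with a plain pinhole projection. The following is a minimal sketch, not code from this repository: the `project` helper and the intrinsics `FX`, `FY`, `CX`, `CY` are hypothetical values chosen for illustration. It shows that two points on the same camera ray collapse to the same 2D pixel, while keeping depth as a third coordinate separates them.

```python
def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame 3D point to (u, v, depth)."""
    x, y, z = point
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v, z


# Hypothetical camera intrinsics, chosen only for this illustration.
FX, FY, CX, CY = 700.0, 700.0, 600.0, 180.0

# Two points on the same camera ray: `far` is `near` scaled by 4.
near = (1.0, 0.5, 5.0)
far = (4.0, 2.0, 20.0)

u1, v1, d1 = project(near, FX, FY, CX, CY)
u2, v2, d2 = project(far, FX, FY, CX, CY)

# In 2D pixel space the two points are indistinguishable.
same_in_2d = (u1, v1) == (u2, v2)

# In 3D pixel space (u, v, depth) they are distinct.
same_in_3d = (u1, v1, d1) == (u2, v2, d2)

print(same_in_2d, same_in_3d)
```

A 2D sampling scheme would read the same image feature for both points; indexing with the depth coordinate as well is what lets the two be treated differently.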

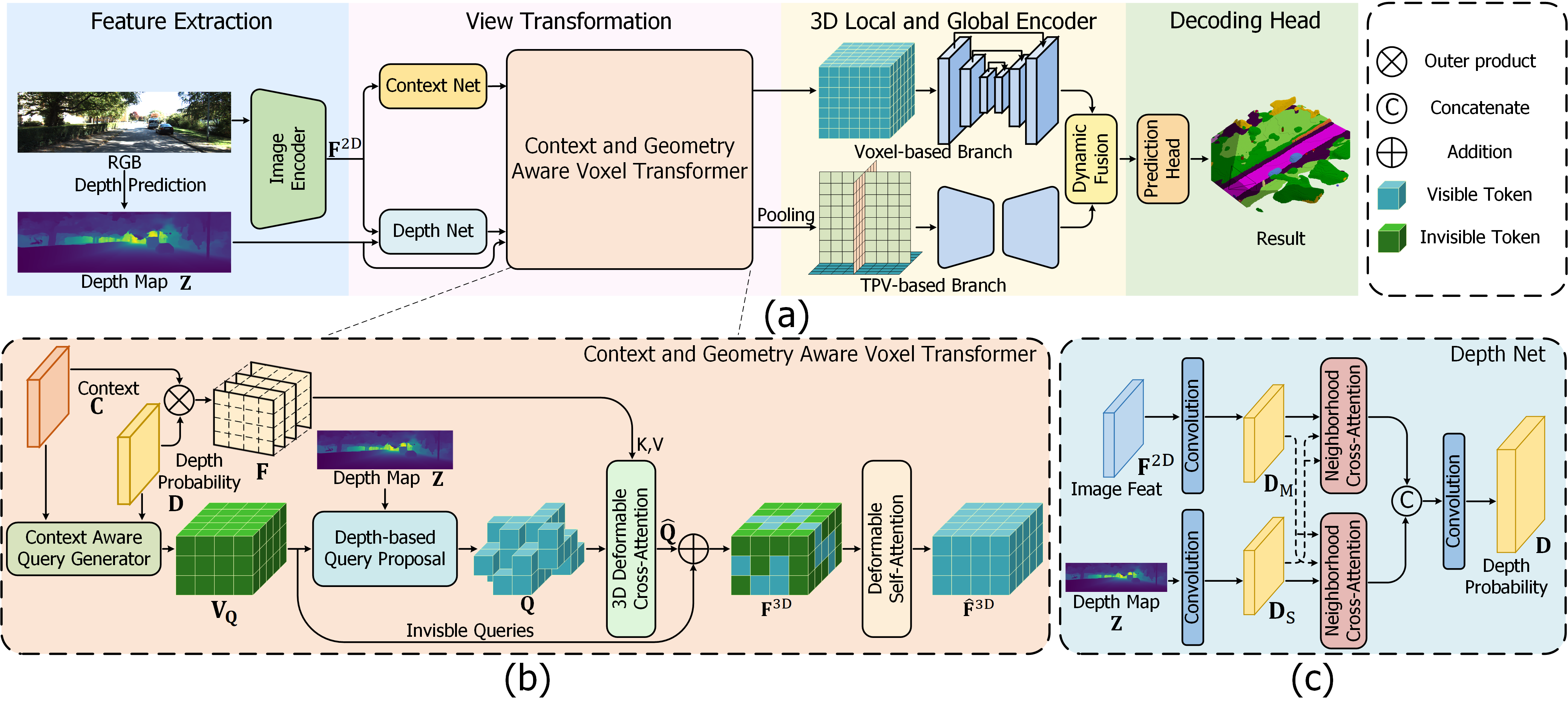

Schematics and detailed architectures of CGFormer. (a) The framework of the proposed CGFormer for camera-based semantic scene completion. The pipeline consists of an image encoder for extracting 2D features, the context and geometry aware voxel transformer (CGVT) for lifting the 2D features to 3D volumes, the 3D local and global encoder (LGE) for enhancing the 3D volumes, and a decoding head for predicting the semantic occupancy. (b) Detailed structure of the context and geometry aware voxel transformer. (c) Details of the Depth Net.

Step 1. Refer to install.md to install the environment.

Step 2. Refer to dataset.md to prepare the SemanticKITTI and KITTI360 datasets.

Step 3. Refer to train_and_eval.md for training and evaluation.

We provide pretrained weights for the SemanticKITTI and KITTI360 datasets, reproduced with the released codebase.

| Dataset | Backbone | IoU | mIoU | Model Weights | Training Logs |
|---|---|---|---|---|---|
| SemanticKITTI | EfficientNetB7 | 44.41, 45.99 (val) | 16.63, 16.89 (val) | Link | Link |
| KITTI360 | EfficientNetB7 | 48.07 | 20.05 | Link | Link |
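For readers unfamiliar with the two metrics in the table, the sketch below shows how IoU and mIoU are commonly defined for semantic scene completion: IoU scores occupied versus empty voxels regardless of class, and mIoU averages the per-class IoU over the semantic classes. This is an illustrative assumption, not the evaluation code of this repository; the `ssc_metrics` function, the label convention (`0` means empty), and the toy labels are hypothetical, and the real evaluation may treat ignored voxels differently. The table reports these values as percentages.

```python
def ssc_metrics(pred, gt, num_classes, empty=0):
    """Return (IoU, mIoU) for flat lists of per-voxel class labels."""
    tp = fp = fn = 0
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, g in zip(pred, gt):
        p_occ, g_occ = p != empty, g != empty
        # Geometry: occupied vs. empty, ignoring the semantic class.
        if p_occ and g_occ:
            tp += 1
        elif p_occ:
            fp += 1
        elif g_occ:
            fn += 1
        # Semantics: per-class intersection and union counts.
        if p == g:
            inter[p] += 1
            union[p] += 1
        else:
            union[p] += 1
            union[g] += 1
    denom = tp + fp + fn
    iou = tp / denom if denom else 0.0
    # Average over semantic classes that appear in pred or gt.
    classes = [c for c in range(num_classes) if c != empty and union[c] > 0]
    miou = sum(inter[c] / union[c] for c in classes) / len(classes) if classes else 0.0
    return iou, miou


# Toy example with two semantic classes (1 and 2) plus empty (0).
pred = [0, 1, 1, 2, 2, 0]
gt = [0, 1, 2, 2, 0, 1]
iou, miou = ssc_metrics(pred, gt, num_classes=3)
print(round(iou, 4), round(miou, 4))
```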

Many thanks to these exceptional open source projects:

It is not possible to list every project from the referenced papers. If we have left out your repo, please contact us and we will update the list.

If you find our work beneficial for your research, please consider citing our paper and giving us a star:

@misc{CGFormer,
      title={Context and Geometry Aware Voxel Transformer for Semantic Scene Completion},
      author={Zhu Yu and Runming Zhang and Jiacheng Ying and Junchen Yu and Xiaohai Hu and Lun Luo and Siyuan Cao and Huiliang Shen},
      year={2024},
      eprint={2405.13675},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}

If you encounter any issues, please contact [email protected].

- Visualization scripts