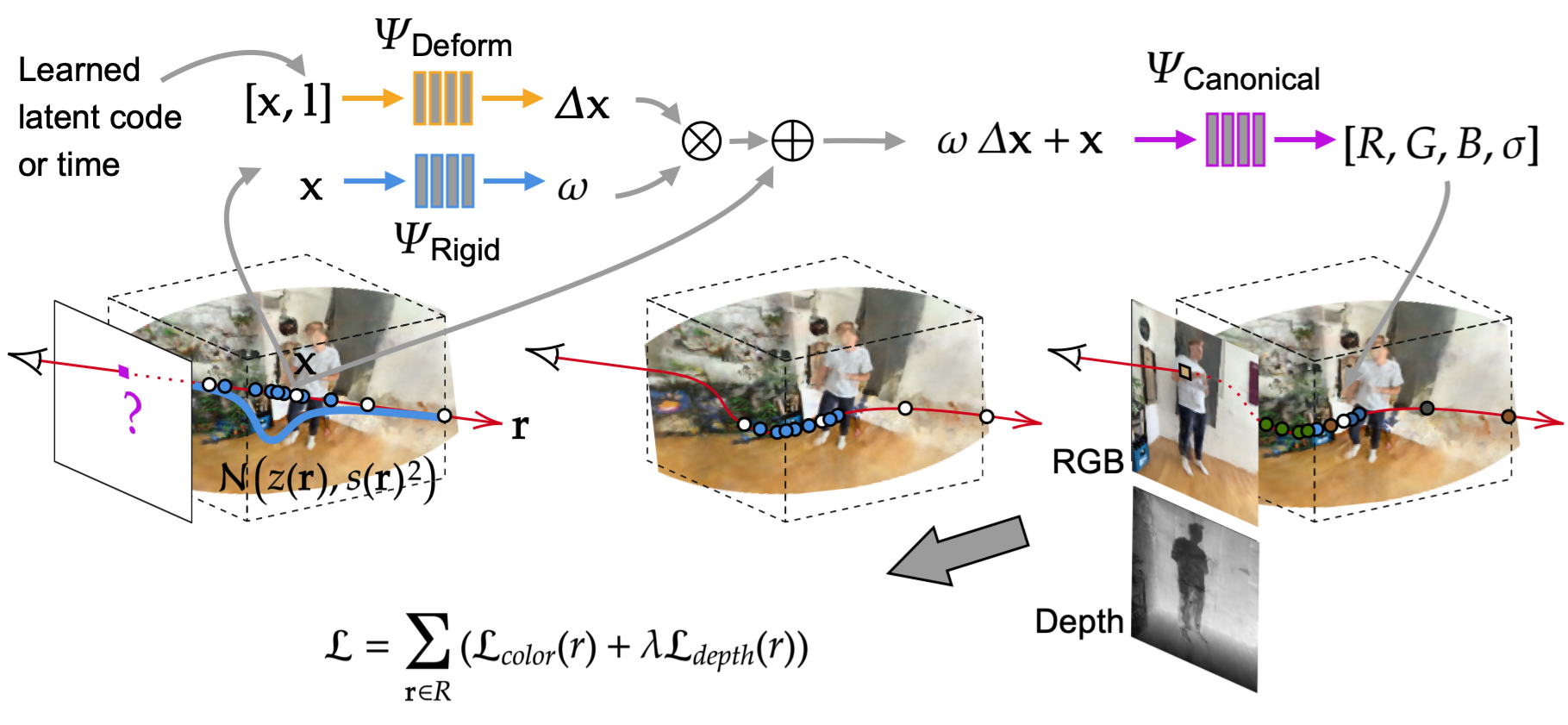

DGD-NeRF is a method for synthesizing novel views, at an arbitrary point in time, of dynamic scenes with complex non-rigid geometries. We optimize an underlying deformable volumetric function from a sparse set of input monocular views without the need for ground-truth geometry or multi-view images.

This project is an extension of D-NeRF that improves the modelling of dynamic scenes. We thank the authors of NeRF-pytorch, Dense Depth Priors for NeRF and Non-Rigid NeRF, from whom we borrow code.

```
git clone https://github.com/philippwulff/D-NeRF.git
cd D-NeRF
conda create -n dgdnerf python=3.7
conda activate dgdnerf
pip install -r requirements.txt
```

If you want to directly explore the models or use our training data, you can download pre-trained models and the data:

Download Pre-trained Weights. You can download the pre-trained models as logs.zip from here. Unzip the archive into the project root directory so that the models can be tested later. The resulting directory structure looks like this:

```
├── logs
│   ├── human
│   ├── bottle
│   ├── gobblet
│   ├── ...
```

DeepDeform. This is an RGB-D dataset of dynamic scenes with fixed camera poses. You can request access on the project's GitHub page.

Own Data. Download our own dataset as data.zip from here.

With both the pre-trained weights and the dataset in place, the directory structure looks like this:

```
├── data
│   ├── human
│   ├── bottle
│   ├── gobblet
│   ├── ...
├── logs
│   ├── human
│   ├── bottle
│   ├── gobblet
│   ├── ...
```
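Before testing, it can help to confirm that both archives were unzipped to the right place. The following is a minimal sketch and not part of the repository: the helper name `missing_scene_dirs` is hypothetical, and the scene list only covers the three scenes named in the tree above.

```python
from pathlib import Path


def missing_scene_dirs(root, scenes, subdirs=("data", "logs")):
    """Return the expected <subdir>/<scene> folders that do not exist under root."""
    root = Path(root)
    missing = []
    for sub in subdirs:
        for scene in scenes:
            rel = Path(sub) / scene
            if not (root / rel).is_dir():
                missing.append(rel.as_posix())
    return missing


if __name__ == "__main__":
    # Hypothetical check from the project root; extend the list for other scenes.
    gaps = missing_scene_dirs(".", ["human", "bottle", "gobblet"])
    print("all scene folders present" if not gaps else f"missing: {gaps}")
```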

Generate Own Scenes. Own scenes can easily be generated and integrated. We used an iPad with a LiDAR sensor (app: Record3D, exporting videos as EXR + RGB). Extract the dataset into the format our model expects by running load_owndataset.py (you will need to create a scene configuration in this file).
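One step of such an extraction, matching each RGB frame with its depth frame, can be sketched as follows. This is not the logic of load_owndataset.py; it assumes, hypothetically, that the Record3D export places one RGB image and one EXR depth map per frame in a single folder with matching file stems (such as `0.jpg` and `0.exr`). The function name `pair_rgbd_frames` is illustrative, and `rgb_ext` and `depth_ext` should be adjusted to whatever your export actually contains.

```python
from pathlib import Path


def pair_rgbd_frames(export_dir, rgb_ext=".jpg", depth_ext=".exr"):
    """Pair RGB and depth files that share a stem; also return stems with only one of the two."""
    export_dir = Path(export_dir)
    rgb = {p.stem: p for p in export_dir.glob("*" + rgb_ext)}
    depth = {p.stem: p for p in export_dir.glob("*" + depth_ext)}
    common = set(rgb) & set(depth)
    # Sort numerically when stems are frame indices ("0", "1", ..., "10"), else lexicographically.
    key = int if all(s.isdigit() for s in common) else str
    pairs = [(rgb[s], depth[s]) for s in sorted(common, key=key)]
    unmatched = sorted(set(rgb) ^ set(depth))  # frames missing either RGB or depth
    return pairs, unmatched
```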

If you have downloaded our data and the pre-trained weights, you can test our models without training them. Otherwise, you can also train a model with our or your own data from scratch.

You can use these Jupyter notebooks to explore the model:

| Description | Jupyter Notebook |
|---|---|
| Synthesize novel views at an arbitrary point in time. (Requires a trained model) | render.ipynb |
| Reconstruct the mesh at an arbitrary point in time. (Requires a trained model) | reconstruct.ipynb |
| See the camera trajectory of the training frames. | eda_owndataset_train.ipynb |
| See the camera poses of novel views. | eda_virtual_camera.ipynb |
| Visualize the sampling along camera rays. (Requires training logs) | eda_ray_sampling.ipynb |

The following instructions use the human scene as an example; replace human with the name of any other scene to run that scene instead.

First download the dataset. Then,

```
conda activate dgdnerf
export PYTHONPATH='path/to/DGD-NeRF'
export CUDA_VISIBLE_DEVICES=0
python run_dgdnerf.py --config configs/human.txt
```

This command runs the human experiment with the arguments specified in the config file configs/human.txt.
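If you prefer launching runs from Python (for example, to queue several scenes), the shell steps above can be reproduced with the standard `subprocess` module. This is a hypothetical helper, not part of the repository; it assumes it is started from the already activated `dgdnerf` environment, so that `sys.executable` is that environment's interpreter.

```python
import os
import subprocess
import sys


def build_train_command(scene, repo_root, gpu=0):
    """Return (cmd, env) equivalent to the export/python shell steps for one scene."""
    env = dict(os.environ)
    env["PYTHONPATH"] = str(repo_root)      # mirrors: export PYTHONPATH='path/to/DGD-NeRF'
    env["CUDA_VISIBLE_DEVICES"] = str(gpu)  # mirrors: export CUDA_VISIBLE_DEVICES=0
    # sys.executable is the interpreter of the currently active (dgdnerf) environment.
    cmd = [sys.executable, "run_dgdnerf.py", "--config", f"configs/{scene}.txt"]
    return cmd, env


def train(scene, repo_root, gpu=0):
    """Run training for one scene from the repository root; raises if the run fails."""
    cmd, env = build_train_command(scene, repo_root, gpu)
    subprocess.run(cmd, env=env, cwd=repo_root, check=True)
```

For example, `train("human", "path/to/DGD-NeRF")` issues the same command as the shell snippet for the human scene.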

Each of our extensions can be enabled or disabled individually in human.txt.
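To check which options a config file sets, or how two variants of a config differ (for example, a copy of human.txt with one extension switched off), a small parser is enough. This sketch assumes the `key = value` line format used by NeRF-pytorch style config files; it is not the repository's own argument parser, the function names are hypothetical, and it makes no assumption about the names of DGD-NeRF's extension flags.

```python
def read_config(path):
    """Parse 'key = value' lines into a dict, skipping blank lines and '#' comments."""
    options = {}
    with open(path) as f:
        for raw in f:
            line = raw.split("#", 1)[0].strip()  # drop comments (this sketch's rule)
            if not line:
                continue
            key, sep, value = line.partition("=")
            if not sep:
                continue  # lines without '=' are ignored in this sketch
            options[key.strip()] = value.strip()
    return options


def diff_configs(a, b):
    """Return {key: (value_in_a, value_in_b)} for every option that differs."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}
```

For example, `diff_configs(read_config("configs/human.txt"), read_config("configs/human_variant.txt"))`, where `human_variant.txt` is a hypothetical copy you edited, lists exactly the options you changed.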

First train the model or download pre-trained weights and dataset. Then,

```
python run_dgdnerf.py --config configs/human.txt --render_only --render_test
```

This command renders the test set images of the human experiment. When it finishes, quantitative results (metrics.txt) and qualitative results (RGB and depth images and videos) are saved to ./logs/human/renderonly_test_400000.
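The trailing number in renderonly_test_400000 is presumably the training step of the loaded checkpoint. The sketch below derives the output folder from the newest checkpoint in a log directory, assuming (as in NeRF-pytorch, which this project builds on) that checkpoints are saved as `<step>.tar` and that the render-only folder is named `renderonly_<split>_<step>` with the step zero-padded to six digits. Both are assumptions about this repository, and the function names are hypothetical.

```python
from pathlib import Path


def latest_checkpoint_step(log_dir):
    """Return the largest step among checkpoint files named like 400000.tar, or None."""
    steps = [int(p.stem) for p in Path(log_dir).glob("*.tar") if p.stem.isdigit()]
    return max(steps) if steps else None


def renderonly_dir(log_dir, split="test"):
    """Expected output folder of a --render_only run for the newest checkpoint."""
    step = latest_checkpoint_step(log_dir)
    if step is None:
        raise FileNotFoundError(f"no checkpoints found in {log_dir}")
    return Path(log_dir) / f"renderonly_{split}_{step:06d}"
```

For example, `renderonly_dir("./logs/human")` would point at ./logs/human/renderonly_test_400000 if 400000.tar is the newest checkpoint there.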

To render novel-view images, you can use the notebook render.ipynb. To render novel-view videos, run:

```
python run_dgdnerf.py --config configs/human.txt --render_only --render_pose_type spherical
```

Select the novel-view camera trajectory by setting render_pose_type; the available options depend on the scene.
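For intuition about what a spherical trajectory is, the sketch below reproduces the spherical camera path that NeRF-pytorch uses for its Blender scenes: cameras on a circle of fixed elevation around the origin, each looking at the origin. It is written with NumPy rather than PyTorch, and the defaults (40 views, `phi_deg=-30`, which places the cameras 30 degrees above the horizontal plane, and radius 4) are NeRF-pytorch's Blender values. The `spherical` option in this repository may use a different center, radius, or elevation for the captured scenes.

```python
import numpy as np


def pose_spherical(theta_deg, phi_deg, radius):
    """4x4 camera-to-world matrix for a camera on a sphere, looking at the origin."""
    th, ph = np.deg2rad(theta_deg), np.deg2rad(phi_deg)
    trans = np.eye(4)
    trans[2, 3] = radius  # place the camera `radius` units along +z (it looks along -z)
    rot_phi = np.array([[1, 0, 0, 0],
                        [0, np.cos(ph), -np.sin(ph), 0],
                        [0, np.sin(ph), np.cos(ph), 0],
                        [0, 0, 0, 1]])  # elevation: rotation about x
    rot_theta = np.array([[np.cos(th), 0, -np.sin(th), 0],
                          [0, 1, 0, 0],
                          [np.sin(th), 0, np.cos(th), 0],
                          [0, 0, 0, 1]])  # azimuth: rotation about y
    # Map the orbit axis (y) to world z and flip x, so that z points up in world space.
    swap = np.array([[-1, 0, 0, 0],
                     [0, 0, 1, 0],
                     [0, 1, 0, 0],
                     [0, 0, 0, 1]], dtype=float)
    return swap @ rot_theta @ rot_phi @ trans


def spherical_trajectory(n_views=40, phi_deg=-30.0, radius=4.0):
    """n_views poses evenly spaced in azimuth over a full circle, shape (n_views, 4, 4)."""
    thetas = np.linspace(-180.0, 180.0, n_views + 1)[:-1]
    return np.stack([pose_spherical(t, phi_deg, radius) for t in thetas])
```

Each pose keeps the camera at distance `radius` from the origin, and its viewing direction (the negative third column of the rotation) points back at the origin, which is what makes the rendered video orbit the scene.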