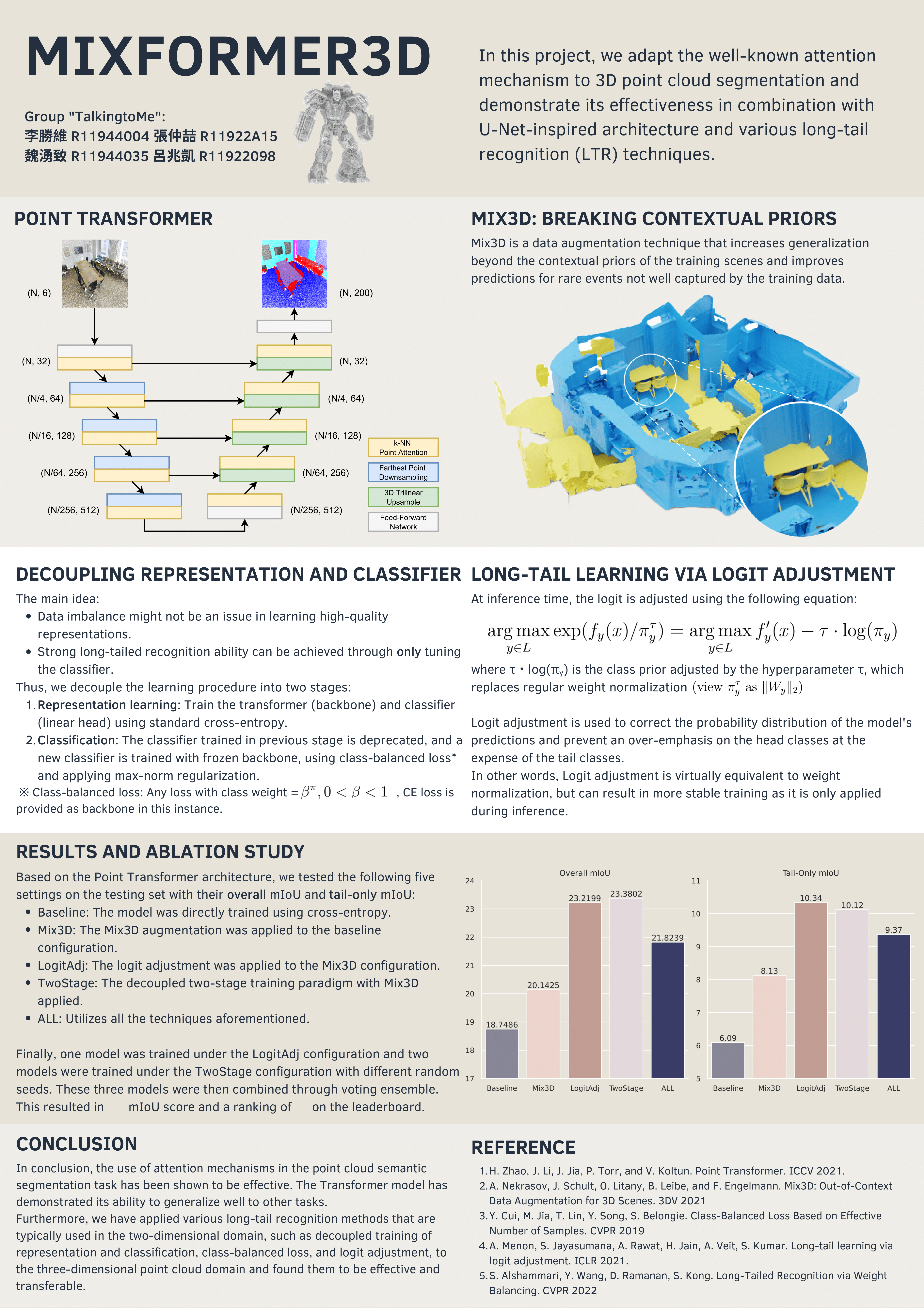

In this project, we adapt the well-known attention mechanism to 3D point cloud segmentation and demonstrate its effectiveness in combination with a U-Net-inspired architecture and various long-tail recognition (LTR) techniques on the ScanNet200 dataset.

🎉 We were voted the best by 123 classmates and 29 teams in the inter-team evaluation! 🎉

Our GPU is a single RTX 4090 with 24 GB of VRAM.

- `cd point-transformer`
- `bash conda_env_setup.sh pt`
- Install the torch 1.9.0 build that corresponds to your CUDA version (11.1 in our case).
- `pip install h5py pyyaml sharedarray tensorboardx plyfile`
- `cd point-transformer/lib/pointops`, then run `python3 setup.py install` to install the custom kernel functions.
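After the setup steps, a quick sanity check can confirm that the Python dependencies are visible in the active environment. The sketch below is not part of the repository: `find_missing` and `REQUIRED_MODULES` are hypothetical names, and the import names are our assumption about how the listed pip packages are imported (`pyyaml` as `yaml`, `sharedarray` as `SharedArray`, `tensorboardx` as `tensorboardX`). It does not check the custom `pointops` kernels.

```python
from importlib.util import find_spec

# Assumed import names for the pip packages listed above
# (pyyaml -> yaml, sharedarray -> SharedArray, tensorboardx -> tensorboardX).
REQUIRED_MODULES = ["torch", "h5py", "yaml", "SharedArray", "tensorboardX", "plyfile"]


def find_missing(modules):
    """Return the top-level module names that cannot be located."""
    return [name for name in modules if find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    print("missing modules:", missing if missing else "none")
```

If the list is non-empty, the corresponding install step probably did not run in the environment that is currently active.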

Only place the dataset if you plan to train the model.

Place the train and test sets in `point-transformer/dataset/scannet200`.

The directory should look like:

point-transformer/

├─ dataset/

│ ├─ scannet200/

│ │ ├─ train/

│ │ │ ├─ scene0000_00.ply

│ │ │ ├─ ...

│ │ ├─ test/

│ │ │ ├─ scene0500_00.ply

│ │ │ ├─ ...

│ │ ├─ train_split.txt

│ │ ├─ val_split.txt

│ │ ├─ test_split.txt
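Before training, it may help to verify that the dataset folder matches the layout above. The following is a minimal sketch, not a script shipped with the project: `missing_entries` and `count_scenes` are hypothetical helpers, and the only assumption is the directory layout shown in the tree.

```python
from pathlib import Path

# Entries expected directly under dataset/scannet200, per the tree above.
EXPECTED = ["train", "test", "train_split.txt", "val_split.txt", "test_split.txt"]


def missing_entries(root):
    """Return the expected folders/files that do not exist under root."""
    root = Path(root)
    return [name for name in EXPECTED if not (root / name).exists()]


def count_scenes(root, split):
    """Count the .ply scene files inside root/<split>."""
    return len(list((Path(root) / split).glob("*.ply")))


if __name__ == "__main__":
    root = "point-transformer/dataset/scannet200"
    print("missing:", missing_entries(root))
    for split in ("train", "test"):
        print(split, "scenes:", count_scenes(root, split))
```

An empty `missing` list and non-zero scene counts suggest the data is where the training script expects it, assuming the script reads from this path.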

`cd point-transformer`

`bash train.sh`

Since we use five models as an ensemble voting classifier, we provide five sets of weights to download.
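To illustrate what ensemble voting means for per-point labels, here is a minimal majority-vote sketch. It is a generic illustration, not the logic of `Ensemble.py`, whose input format and tie-breaking rule are not described here; `majority_vote` is a hypothetical function, and breaking ties by the smallest label id is purely an assumption.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model label lists (one label per point) by majority vote.

    Ties are broken by picking the smallest label id (an assumption).
    """
    if not predictions:
        raise ValueError("need at least one model's predictions")
    n_points = len(predictions[0])
    if any(len(p) != n_points for p in predictions):
        raise ValueError("all models must predict the same number of points")

    voted = []
    for labels in zip(*predictions):  # labels of one point across all models
        counts = Counter(labels)
        best = max(counts.values())
        voted.append(min(label for label, c in counts.items() if c == best))
    return voted


if __name__ == "__main__":
    five_models = [
        [1, 2, 3, 4],
        [1, 2, 0, 4],
        [1, 5, 0, 7],
        [2, 5, 3, 7],
        [1, 5, 3, 9],
    ]
    print(majority_vote(five_models))  # [1, 5, 3, 4]
```

With five voters, a label needs only a plurality to win, so rare classes predicted by a single model are usually outvoted.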

Follow this procedure to reproduce the results:

- `cd point-transformer`
- For i in [0, 1, 2, 3, 4]:
  a. `bash download{i}.sh`
  b. `bash inference.sh $1 $2 $3`, where
     - `"${1}"` is the path to the test set folder (e.g., `./dataset/test`)
     - `"${2}"` is the path to test.txt (e.g., `./dataset/test.txt`)
     - `"${3}"` is the path to the output folder (e.g., `./submission`)
  c. Move the output folder (e.g., `./submission`) into `../Ensemble/all_submissions`
- `cd ../Ensemble`
- Run `python3 Ensemble.py`
- The result is in `Ensemble/ensemble_results`
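The download, inference, and move loop can be scripted. The sketch below only builds and prints the commands (a dry run) so they can be reviewed before anything executes. `build_commands` is a hypothetical helper, and the per-model destination name `submission{i}` is our assumption to keep the five outputs from overwriting one another; check what `Ensemble.py` expects inside `all_submissions` before relying on it.

```python
import shlex


def build_commands(test_dir, test_list, out_dir, num_models=5):
    """Build the shell commands for steps a-c, to be run from point-transformer/."""
    commands = []
    for i in range(num_models):
        # a. download the weights of model i
        commands.append(["bash", f"download{i}.sh"])
        # b. run inference with those weights
        commands.append(["bash", "inference.sh", test_dir, test_list, out_dir])
        # c. move the output; the destination folder name is an assumption
        commands.append(["mv", out_dir, f"../Ensemble/all_submissions/submission{i}"])
    return commands


if __name__ == "__main__":
    for cmd in build_commands("./dataset/test", "./dataset/test.txt", "./submission"):
        print(shlex.join(cmd))  # dry run: print only, execute nothing
```

To actually execute the steps, each command could be passed to `subprocess.run(cmd, check=True)` from inside the `point-transformer` directory.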

"${1}" is the path to test set folder (e.g., ./dataset/test)

"${2}" is the path to test.txt (e.g., ./dataset/test.txt)

"${3}" is the path to output folder (e.g., ./submission)

Run:

`cd point-transformer`

`bash download0.sh`

`bash inference.sh $1 $2 $3`