| Dataset | Metric | Value |
|---|---|---|
| Validation | Classification Accuracy | 96.10% |
| Validation | Classification Recall | 96.10% |
| Validation | Classification F1-Score | 96.10% |
| Validation | Detection Mean Average Precision | 76.8% @ 0.5 (IoU) |
| Validation | Detection Average Precision | 76.8% |
| Validation | Detection Intersection over Union (IoU) | 80.75% |

We recommend exploring our dataset and trained models on the Roboflow Universe platform. It hosts multiple versions of the dataset, including the additional annotated data (6345 images), and 6 models that have been trained, deployed, and evaluated, all of which can be easily visualized and used.

- Model_Weights: This directory contains the trained YOLOv8-X model, named best.pt, along with any other relevant training data or model-related files. All predictions and evaluations for classification and detection are done with this model.

- Classification_Predictions: Inside this directory, the All_Predictions.csv file contains the YOLOv8-X model's classification predictions for both test_dataset_1 and test_dataset_2. The validation predictions and evaluations are stored alongside it.

- Code: This directory contains the code for training, testing, and validation.

- Datasets: This directory holds our annotated dataset in YOLO format, split 80/20 into training and validation sets (all evaluations are done on this validation set).


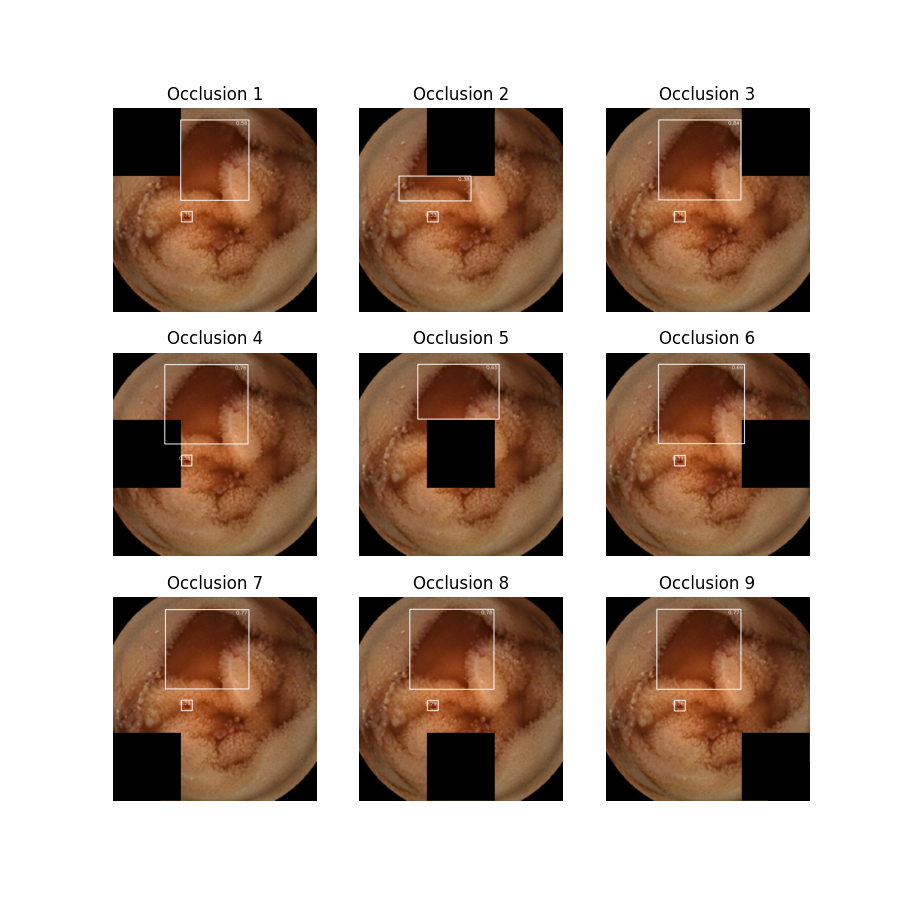

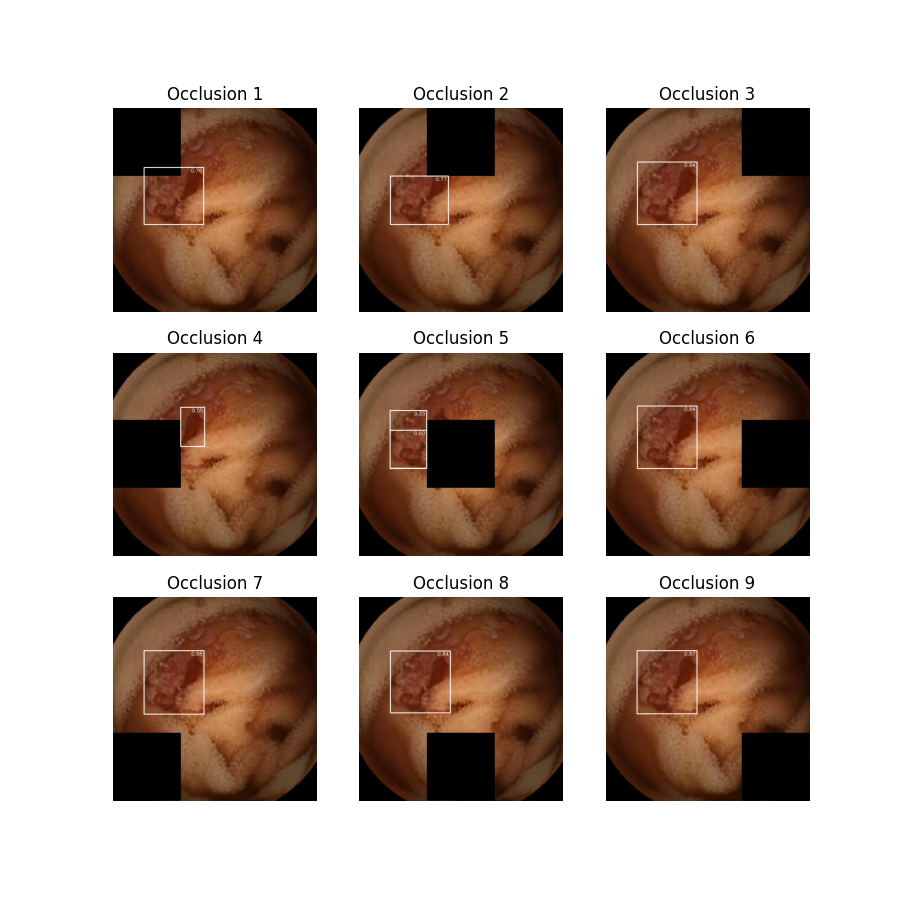

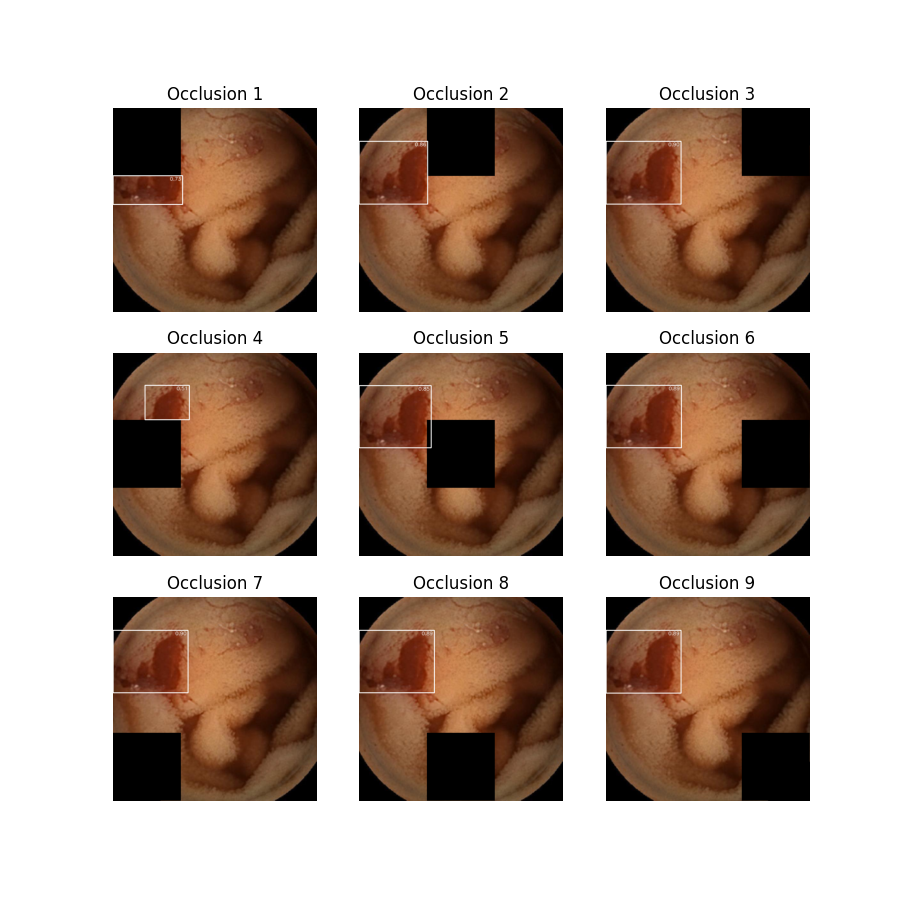

- Detection_Predictions: This directory includes the YOLOv8-X model's predictions, with labels, on the validation dataset, test dataset 1, and test dataset 2.

- Matlab_Classification_Model: This directory contains a separate classification model, based on the MobileNet architecture and built using MATLAB. All code and results related to this model are stored here.

- Other_Detection_Model: This directory contains a separate YOLO detection model, trained on a dataset whose annotations were extracted from the given binary masks. The model weights, dataset, and results are stored here and can be viewed in Roboflow. This is not the best model, just another model trained with different dataset annotations (sticking to the original training dataset and the experts' binary masks). The first model (YOLOv8-X) generalizes and performs better, as it has been trained on more data and has gone through more iterations.
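To illustrate how a detection annotation can be derived from a binary mask, here is a minimal sketch in plain Python. It is an assumption, not the procedure actually used for this dataset: it draws a single box around all nonzero pixels, whereas the original extraction may have produced one box per bleeding region. The function name `mask_to_yolo_box` and the default `class_id=0` are hypothetical.

```python
from typing import Optional, Sequence


def mask_to_yolo_box(mask: Sequence[Sequence[int]], class_id: int = 0) -> Optional[str]:
    """Return a YOLO label line for the nonzero region of a binary mask.

    The line has the form "class x_center y_center width height", with all
    coordinates normalized to [0, 1]. Returns None for an empty mask.
    Assumption: one box enclosing every nonzero pixel.
    """
    height = len(mask)
    width = len(mask[0])

    # Rows and columns that contain at least one mask pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(width) if any(row[x] for row in mask)]
    if not rows:
        return None

    y_min, y_max = rows[0], rows[-1]
    x_min, x_max = cols[0], cols[-1]

    # Pixel indices are inclusive, so the extent is (max - min + 1).
    box_w = (x_max - x_min + 1) / width
    box_h = (y_max - y_min + 1) / height
    x_center = (x_min + x_max + 1) / 2 / width
    y_center = (y_min + y_max + 1) / 2 / height

    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"
```

Each returned line would go into the `.txt` label file that shares its name with the image, which is the layout the YOLO format expects.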

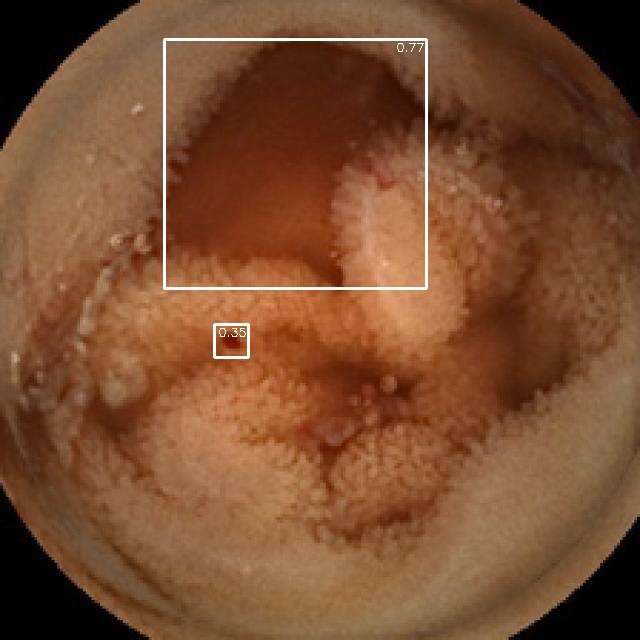

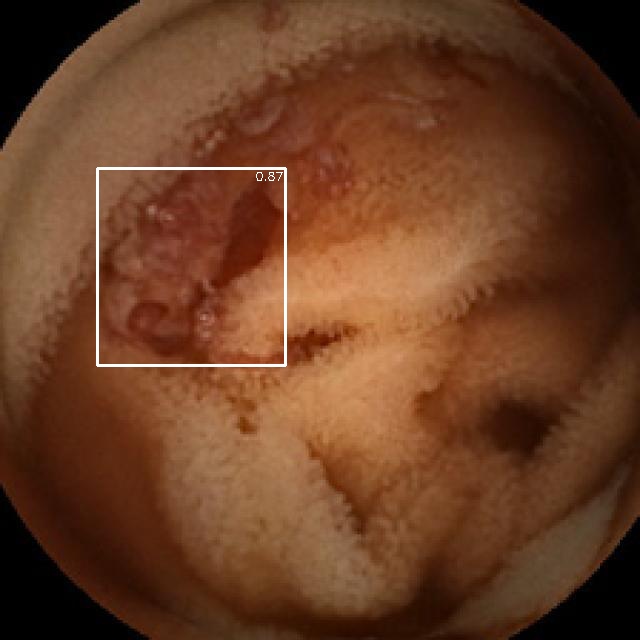

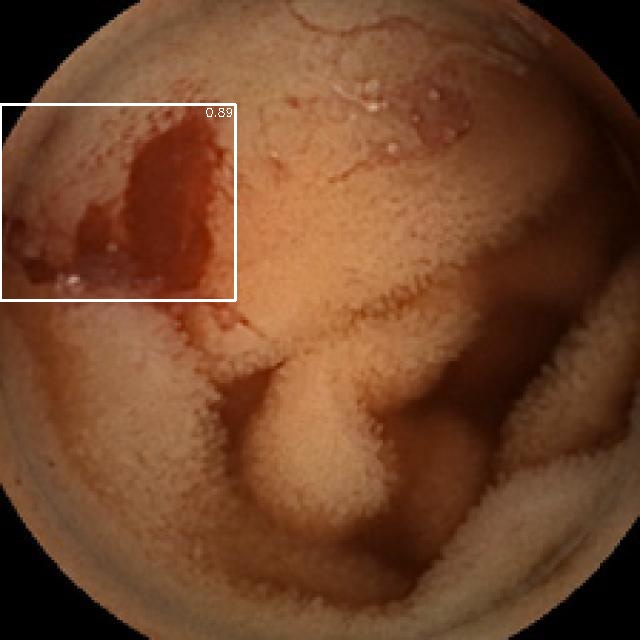

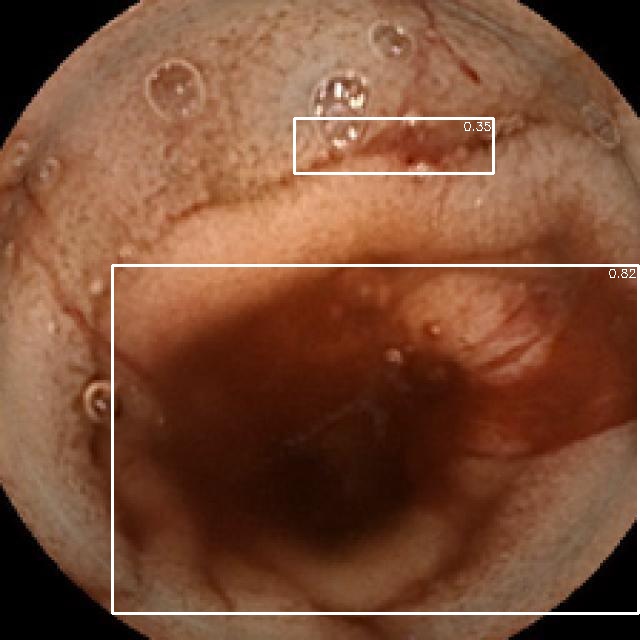

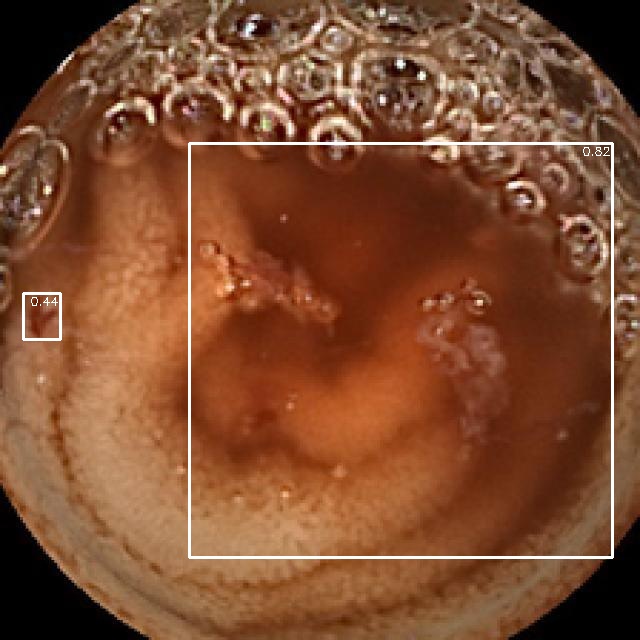

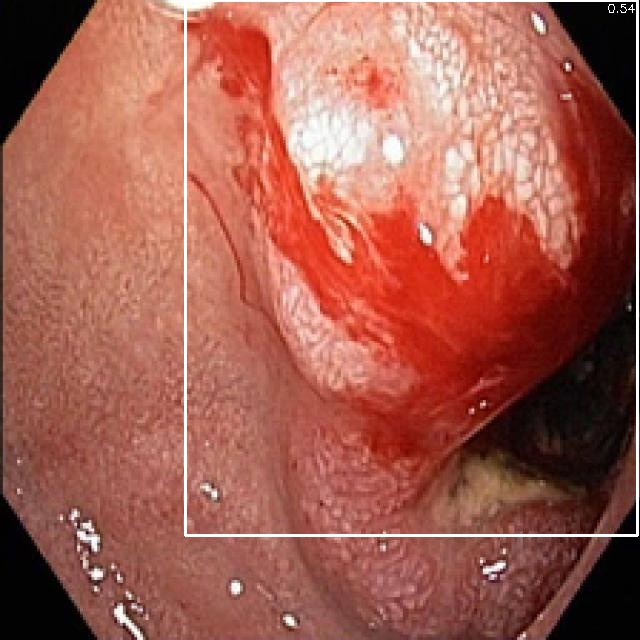

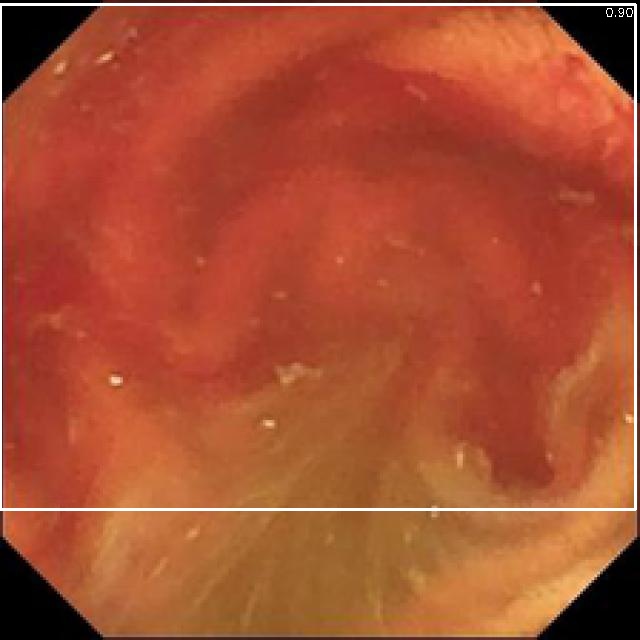

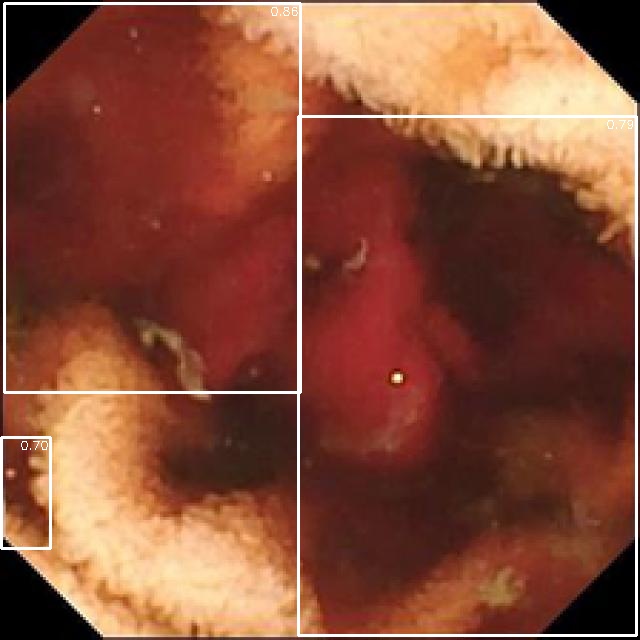

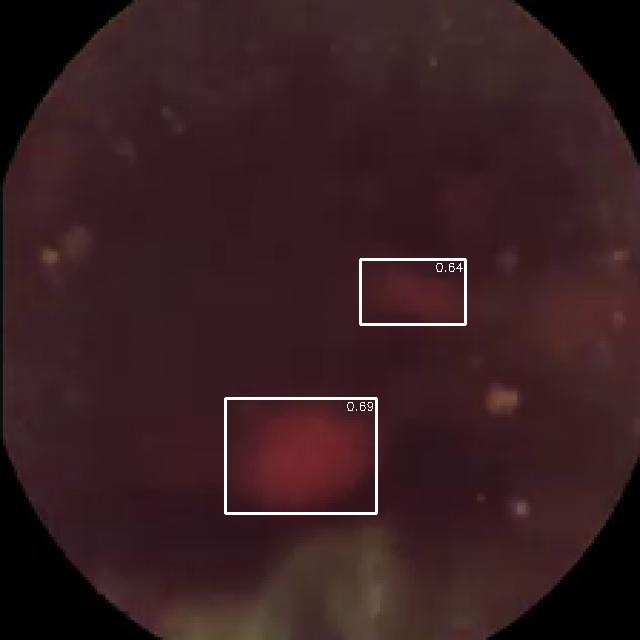

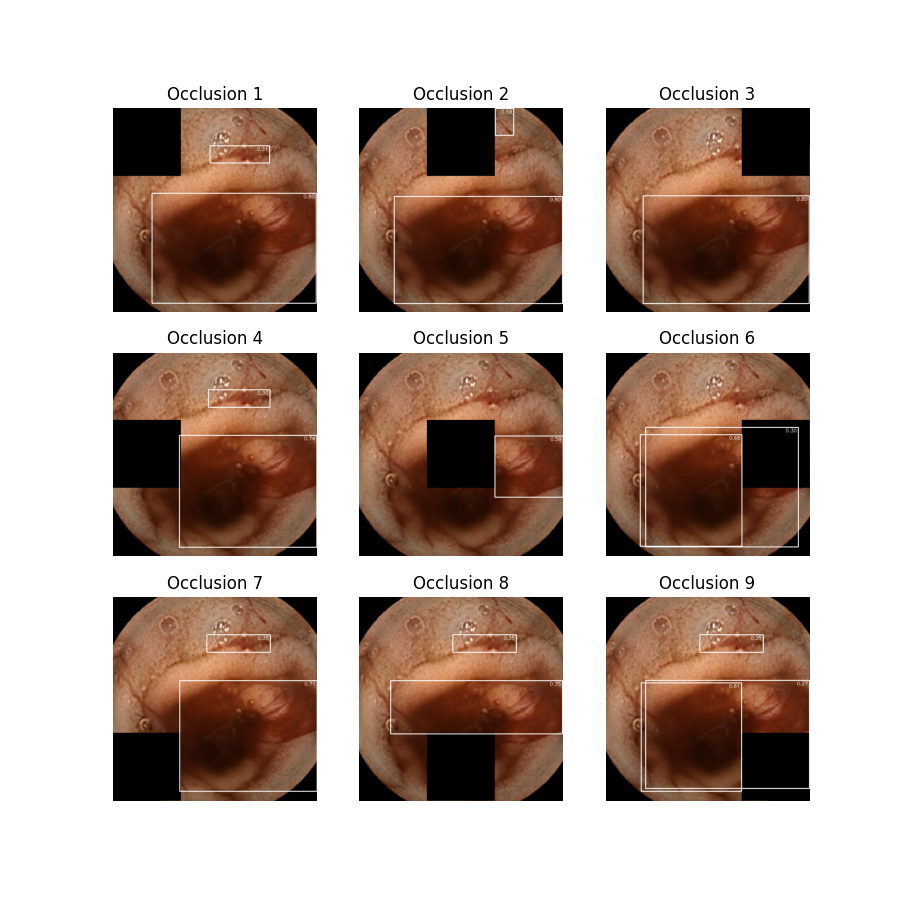

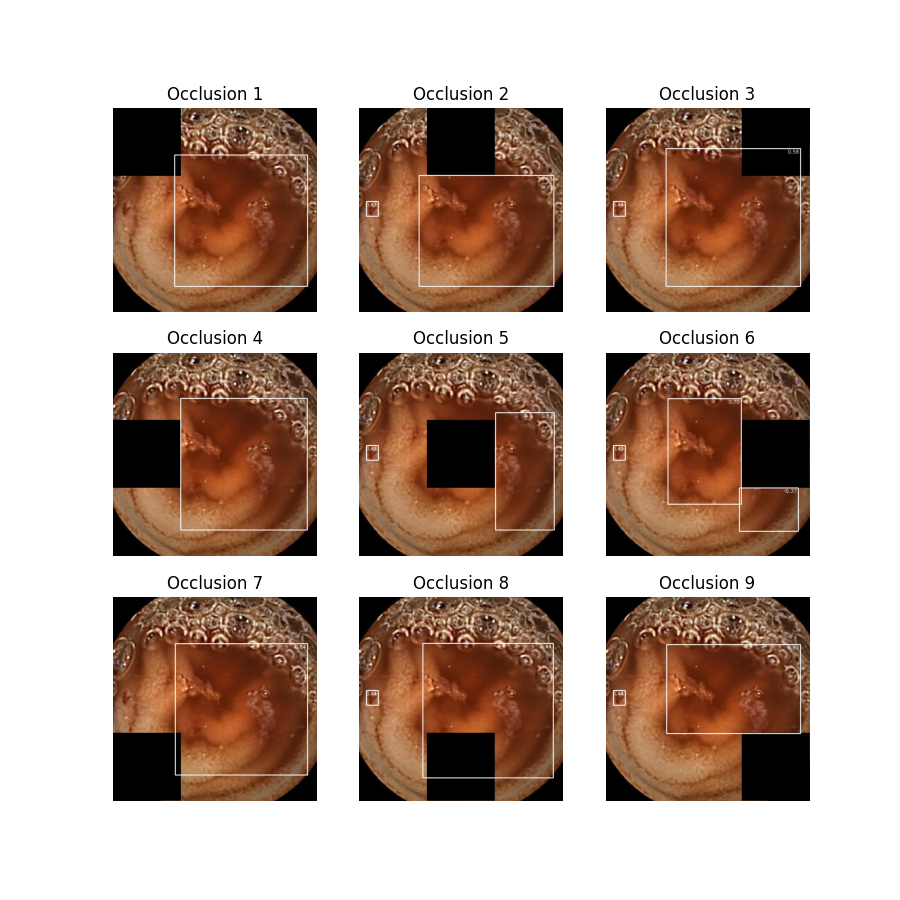

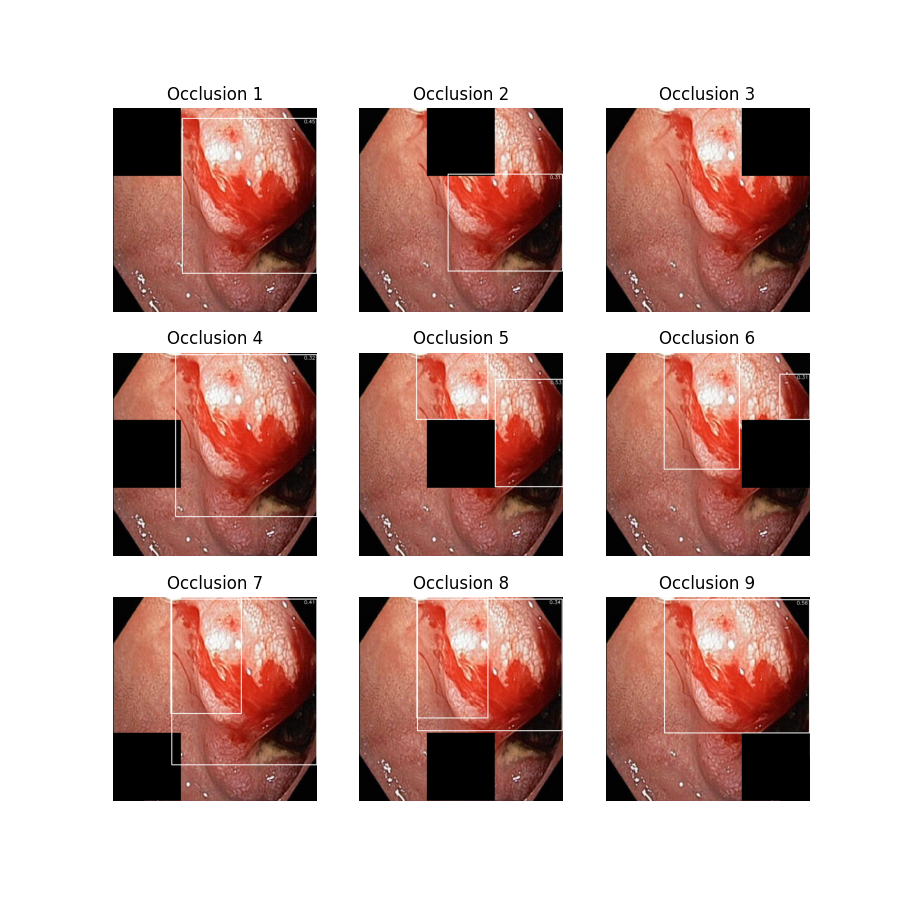

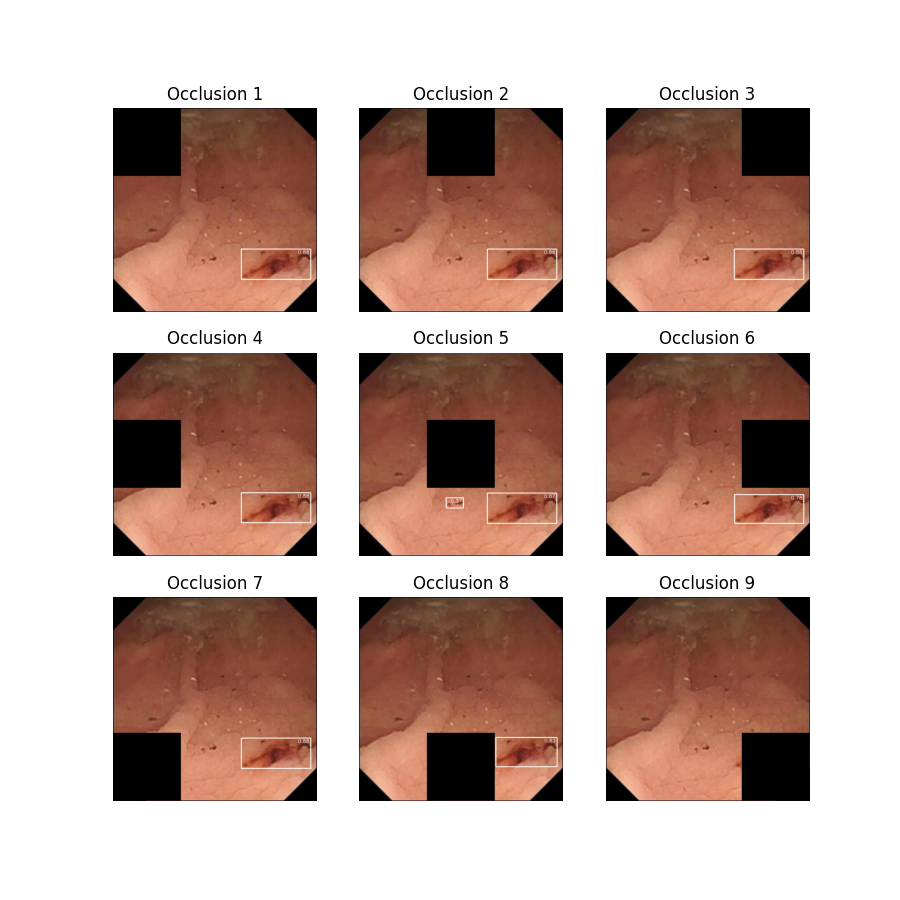

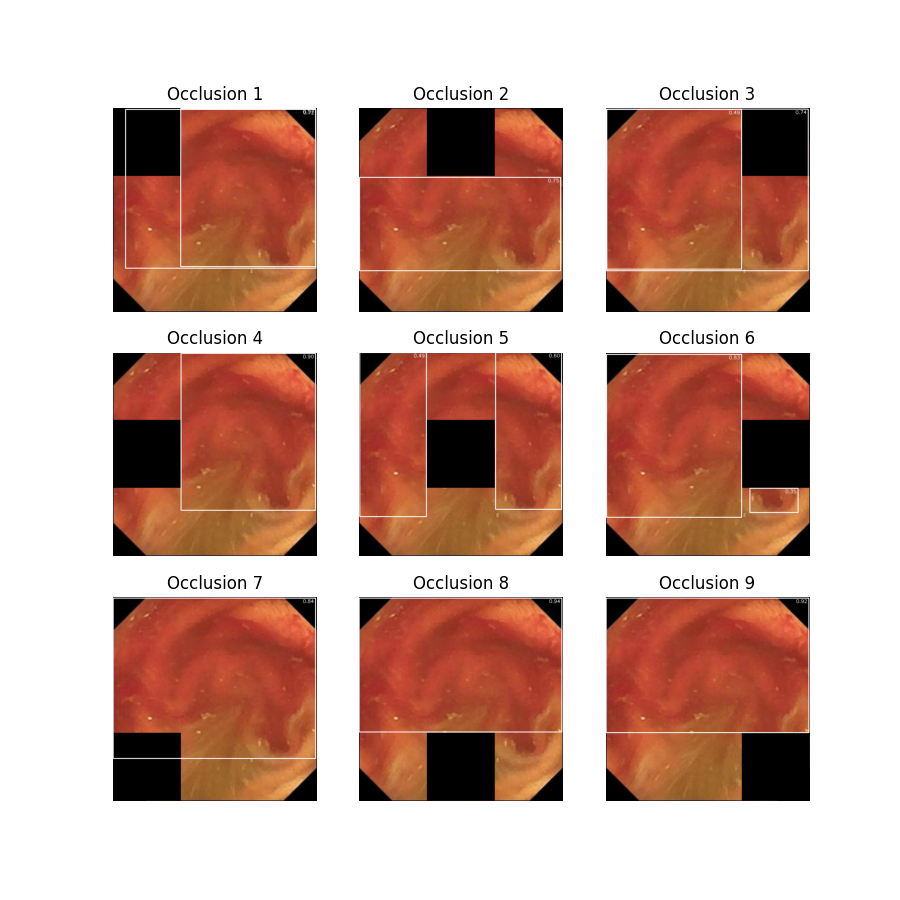

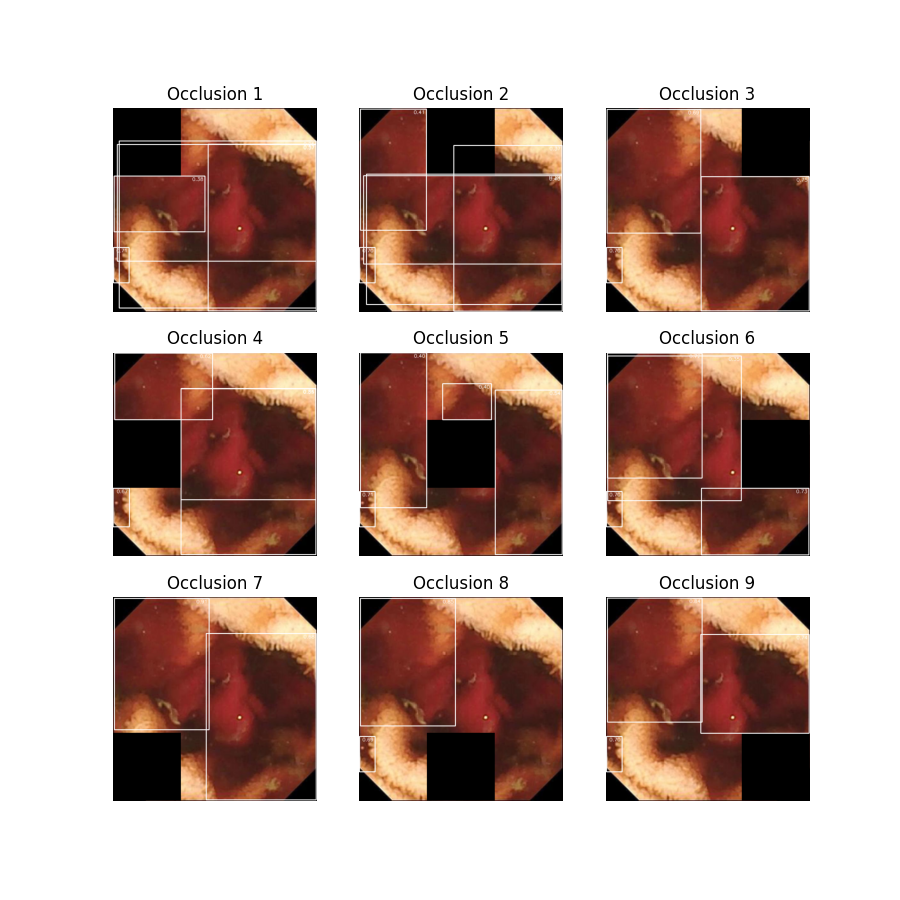

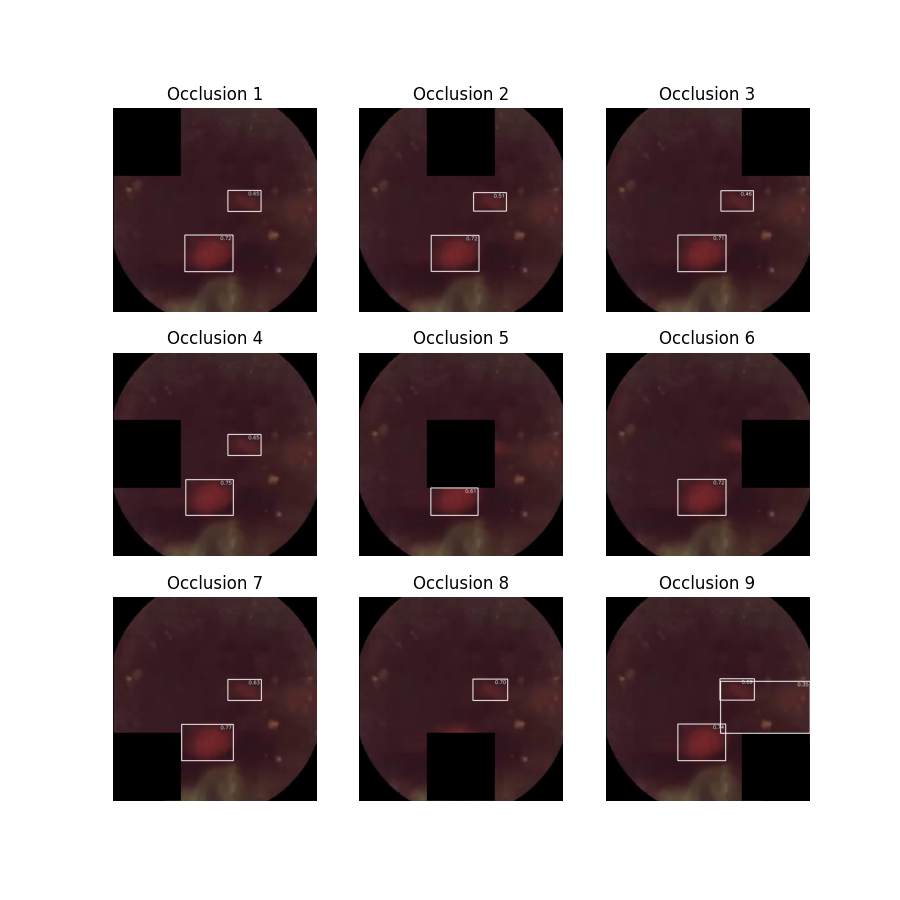

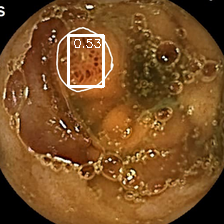

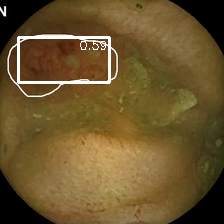

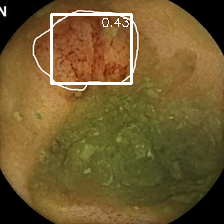

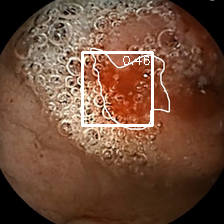

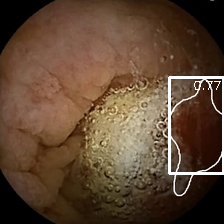

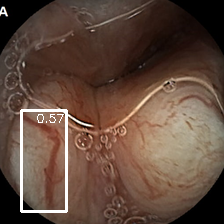

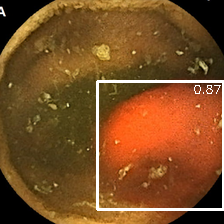

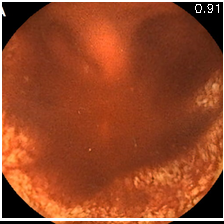

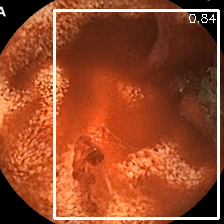

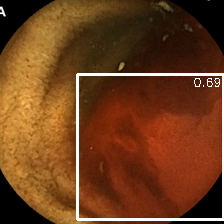

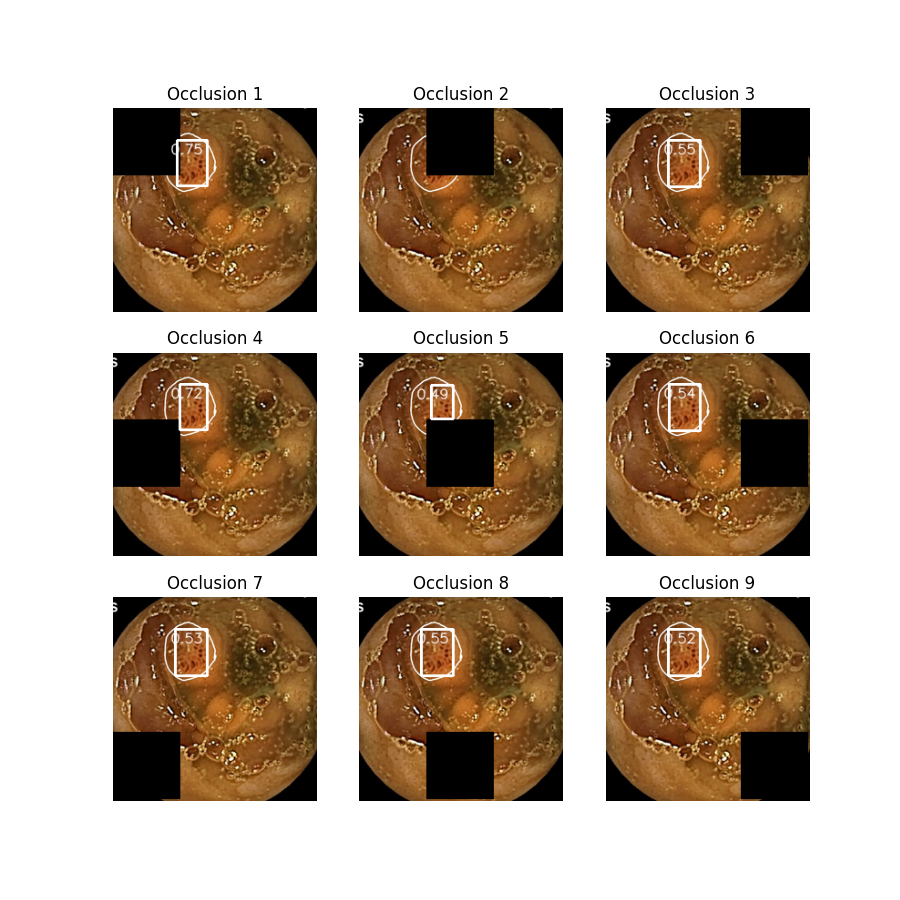

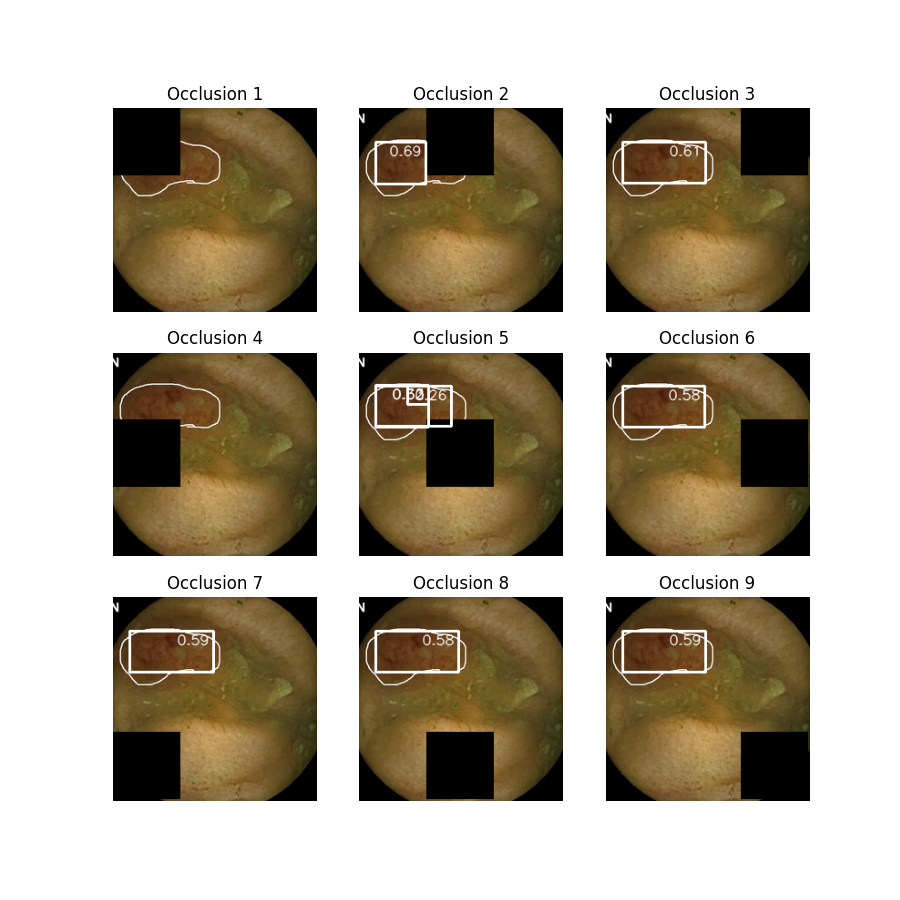

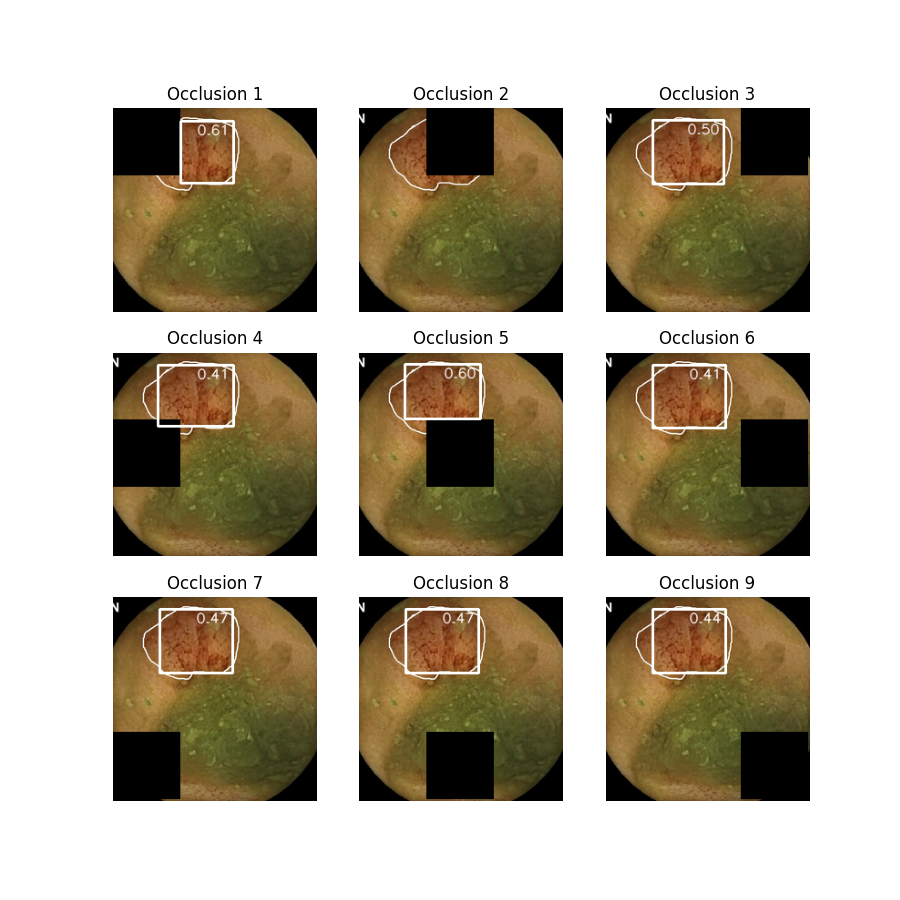

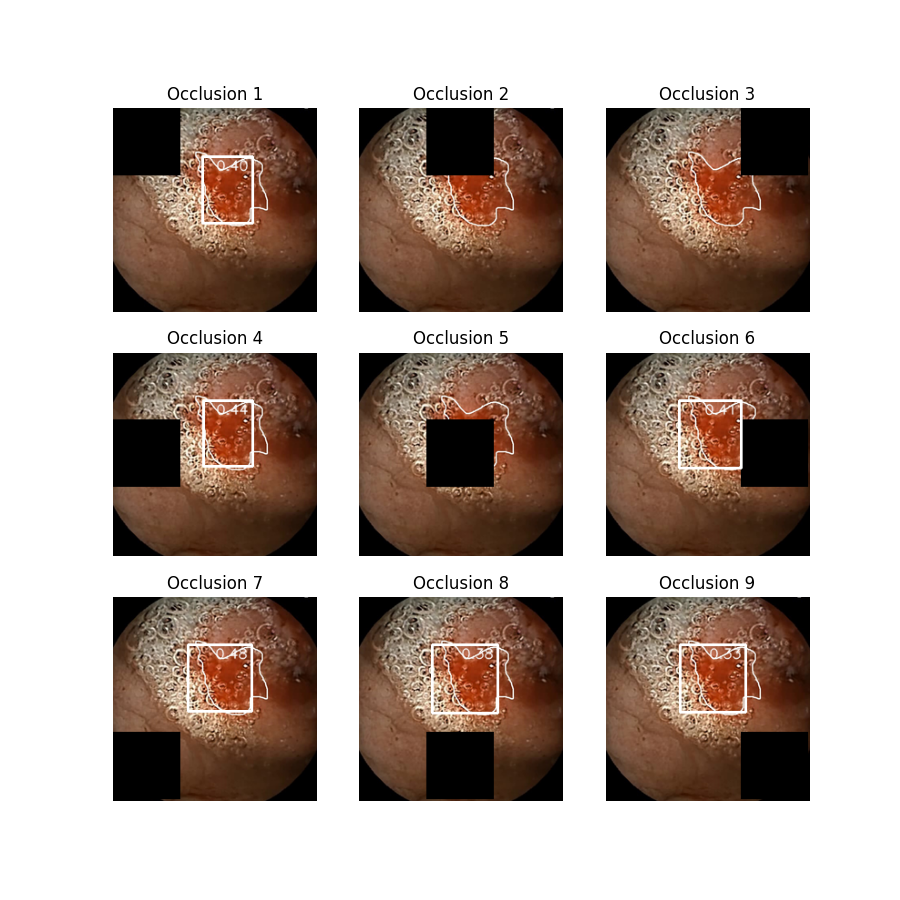

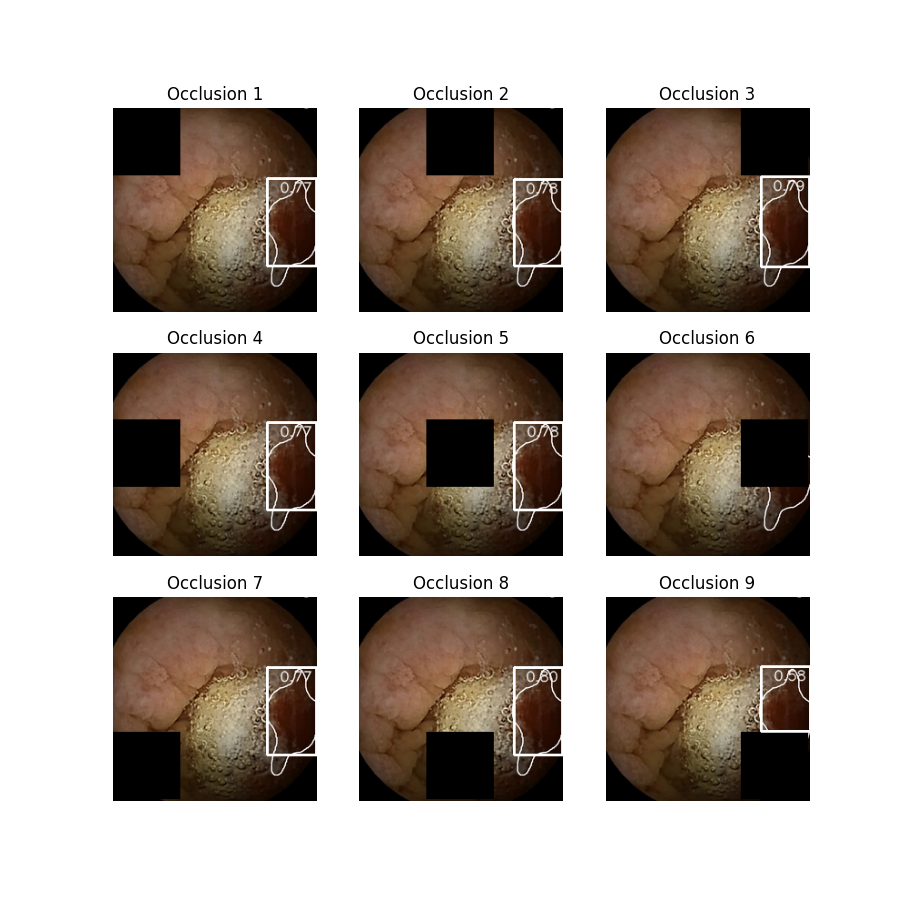

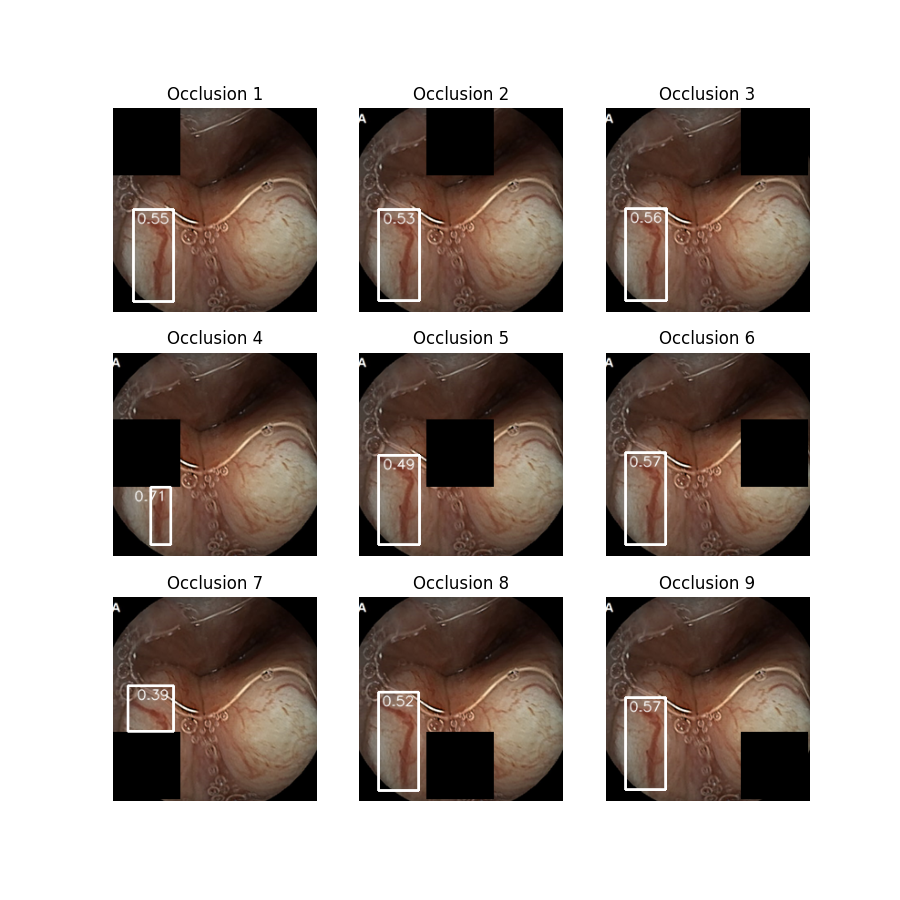

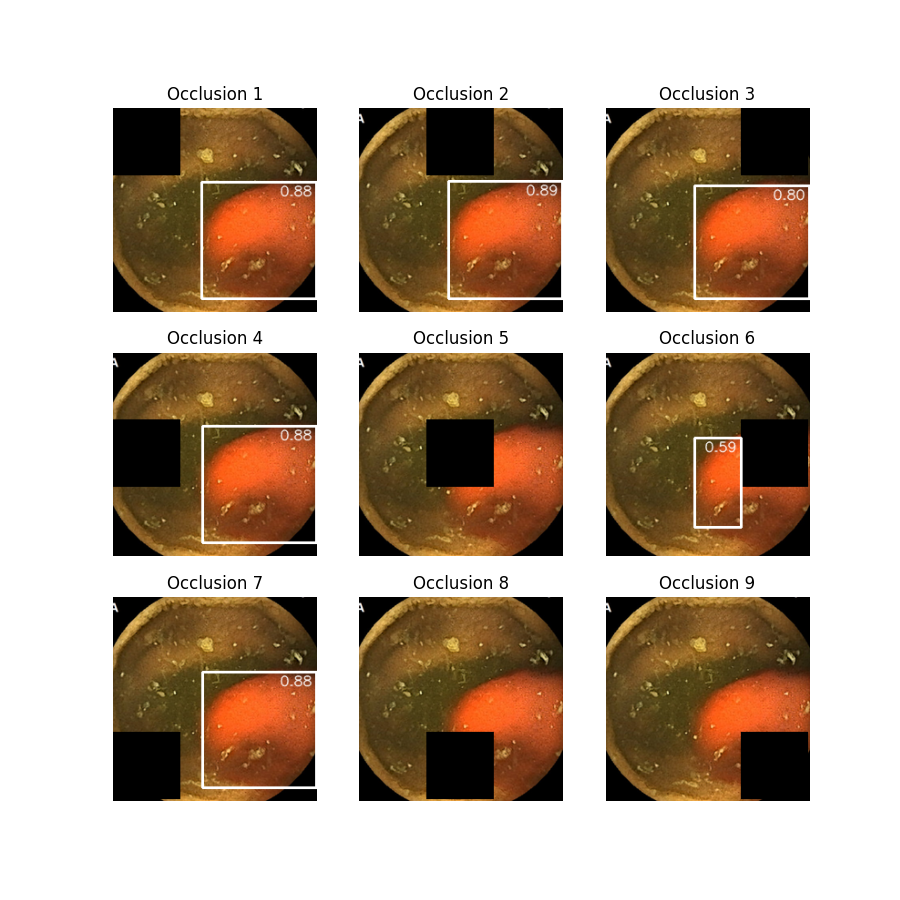

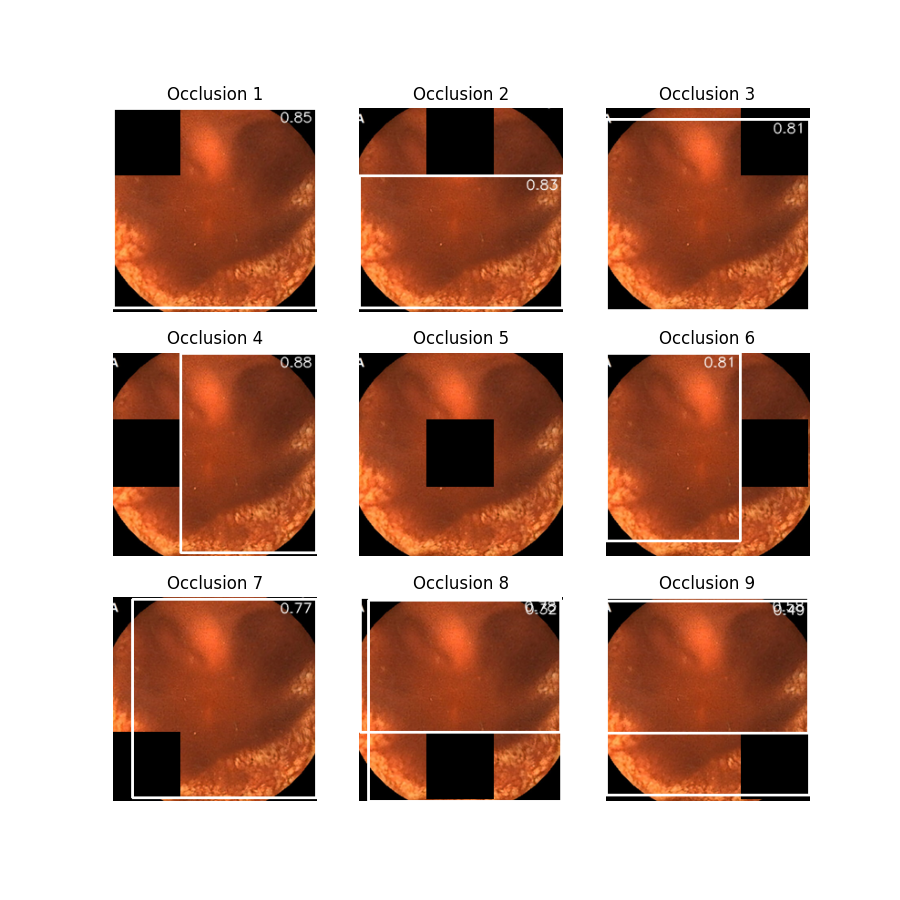

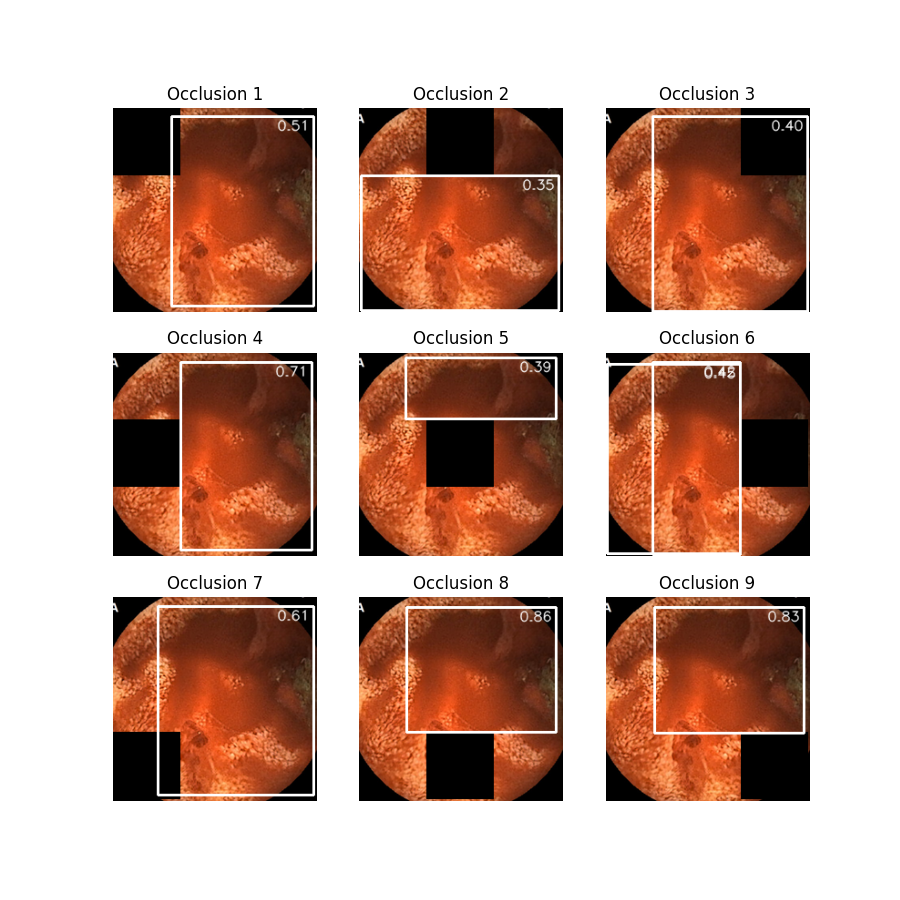

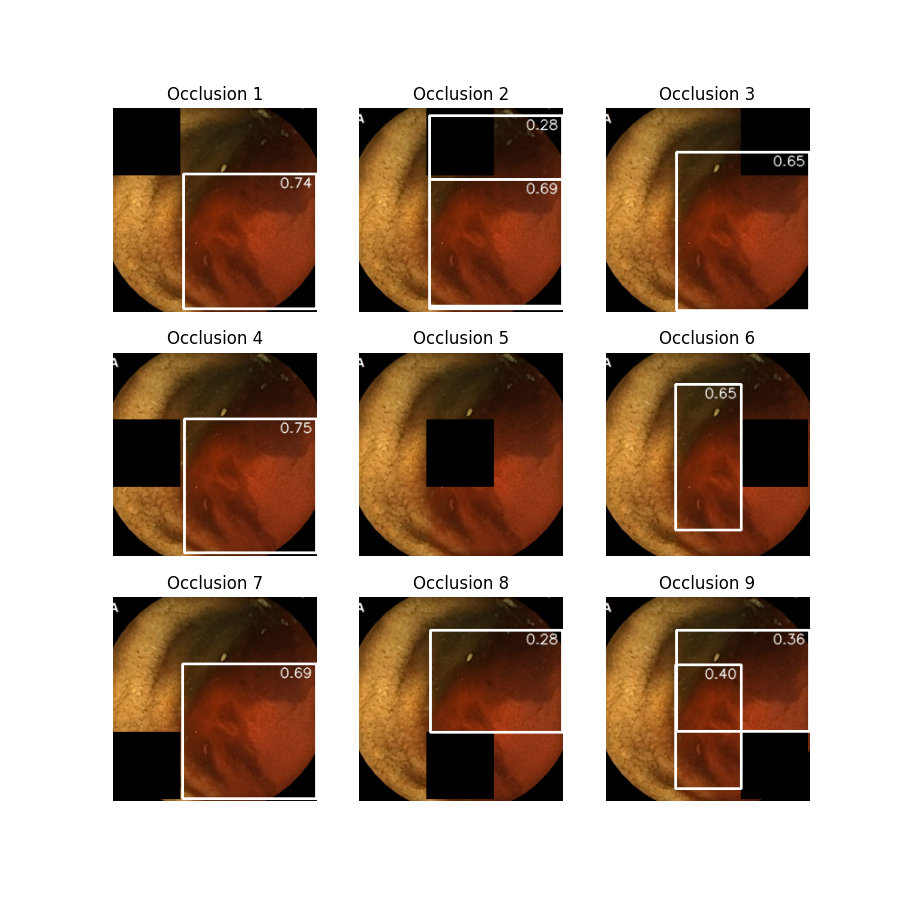

We have trained a YOLOv8-X model to identify bleeding regions in wireless capsule endoscopy (WCE) images. The same model handles both the detection and the classification task. By default it performs detection; a frame with at least one detection (bounding box) is automatically classified as bleeding, and a frame with none as non-bleeding. To use it, see Testing_with_classification.py and the Model_Weights directory.
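The detection-to-classification rule can be sketched as follows. This is a minimal illustration, not the contents of Testing_with_classification.py: the function names are hypothetical, and the 0.25 confidence threshold is an assumption borrowed from the prediction command in this README.

```python
from typing import Dict, Sequence


def classify_frame(confidences: Sequence[float], conf_threshold: float = 0.25) -> str:
    """Label a frame as bleeding if any detection meets the threshold."""
    has_detection = any(c >= conf_threshold for c in confidences)
    return "bleeding" if has_detection else "non-bleeding"


def classify_folder(weights_path: str, image_dir: str,
                    conf_threshold: float = 0.25) -> Dict[str, str]:
    """Run the detector on a folder and map each image path to a label."""
    # Imported lazily so classify_frame works without Ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights_path)
    labels = {}
    # stream=True yields one Results object per image instead of a full list.
    for result in model.predict(source=image_dir, conf=conf_threshold, stream=True):
        box_confidences = result.boxes.conf.tolist()
        labels[result.path] = classify_frame(box_confidences, conf_threshold)
    return labels
```

For example, `classify_frame([0.8, 0.3])` gives `"bleeding"`, while `classify_frame([])` gives `"non-bleeding"`.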

Before you begin, ensure you have the following prerequisites installed on your system:

- Python 3.x

- PyTorch

- OpenCV-Python

- Ultralytics

You can install the required Python packages (PyTorch, OpenCV-Python, Ultralytics) using pip:

pip install torch opencv-python ultralytics

To train the model, download the weights (Model_Weights/best.pt) and the wce_dataset, then run the training script, the training_notebook, or the command below:

yolo task=detect mode=train model=/path/to/your/model.pt data=/path/to/your/data.yaml epochs=50 imgsz=800 plots=True

The above command trains the YOLOv8 model for the given number of epochs. The training results are saved in the runs/detect/train folder, with the best model weights in its weights subfolder. The original pretrained YOLOv8 models are available from Ultralytics.
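The same training run can also be started from Python through the Ultralytics API. The sketch below mirrors the arguments of the command above; the wrapper function `train` and the `TRAIN_ARGS` dictionary are hypothetical names introduced for illustration, and the paths remain placeholders.

```python
# Training settings copied from the CLI command in this README.
TRAIN_ARGS = {"epochs": 50, "imgsz": 800, "plots": True}


def train(weights_path: str, data_yaml: str):
    """Fine-tune a YOLOv8 detection model starting from the given weights."""
    # Imported lazily so the settings can be inspected without Ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights_path)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

A call would look like `train("/path/to/your/model.pt", "/path/to/your/data.yaml")`.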

To predict on data, run the Testing_with_classification.py script to get both detection and classification results, or Testing.py for detection only. At the end of either script, set the paths to the model weights, input folder, and output folder; the predictions will be stored in the output folder. Alternatively, use the command line:

yolo task=detect mode=predict model=/path/to/your/model.pt data=/path/to/your/data.yaml source=/path/to/your/images_or_video_or_folder save=True conf=0.25

The above command runs YOLOv8 inference on the given images, video, or folder and saves the results in the runs/detect/predict folder.

To validate the model, run the validation script or the command below:

yolo task=detect mode=val model=/path/to/your/model.pt data=/path/to/your/data.yaml

The above command will run YOLOv8 validation on the validation dataset and save the results in the runs/detect/val folder.
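For readers unfamiliar with the IoU figures reported by validation, the sketch below shows how the IoU of one predicted box and one ground-truth box is computed. It is a generic illustration, not the repository's evaluation code; the function name `box_iou` and the corner-format boxes `(x1, y1, x2, y2)` are assumptions.

```python
from typing import Sequence


def box_iou(box_a: Sequence[float], box_b: Sequence[float]) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0
```

Two 10x10 boxes offset by 5 pixels horizontally overlap in a 5x10 region, so their IoU is 50 / 150, about 0.33. Under the usual mAP @ 0.5 convention, that prediction would not count as a match.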

We have trained the YOLOv8-X model. The first five folders in the repository (Model_Weights, Classification_Predictions, Code, Datasets, Detection_Predictions) are from this model. Both the classification and detection tasks, along with all evaluations, are done with this model on the validation dataset. The bounding box annotations in the provided training data had problems: many were wrong, imprecise, or missing. So we reannotated the dataset, added and annotated more data from other internet sources, and trained this model.

The sixth folder, Matlab_Classification_Model, contains a separate classification model with all the related folders.

The seventh folder, Other_Detection_Model, is also a separate detection model. Because of the annotation problems mentioned above, we created a separate version of the dataset by reannotating it completely from the binary masks, and trained this model on it. Take a look at it if you want to see how the model performs when trained only on the original training dataset.

So the first YOLOv8-X model is the best model so far for both detection and classification. It has been trained on more data (6345 images) and has gone through several iterations.

- Enhanced Dataset: We can create a larger, more diverse dataset with good-quality annotations by collaborating with medical professionals.

- Model Exploration: We can experiment with different model architectures, including larger models, and possibly develop segmentation models, which could be more accurate and more useful for medical purposes.