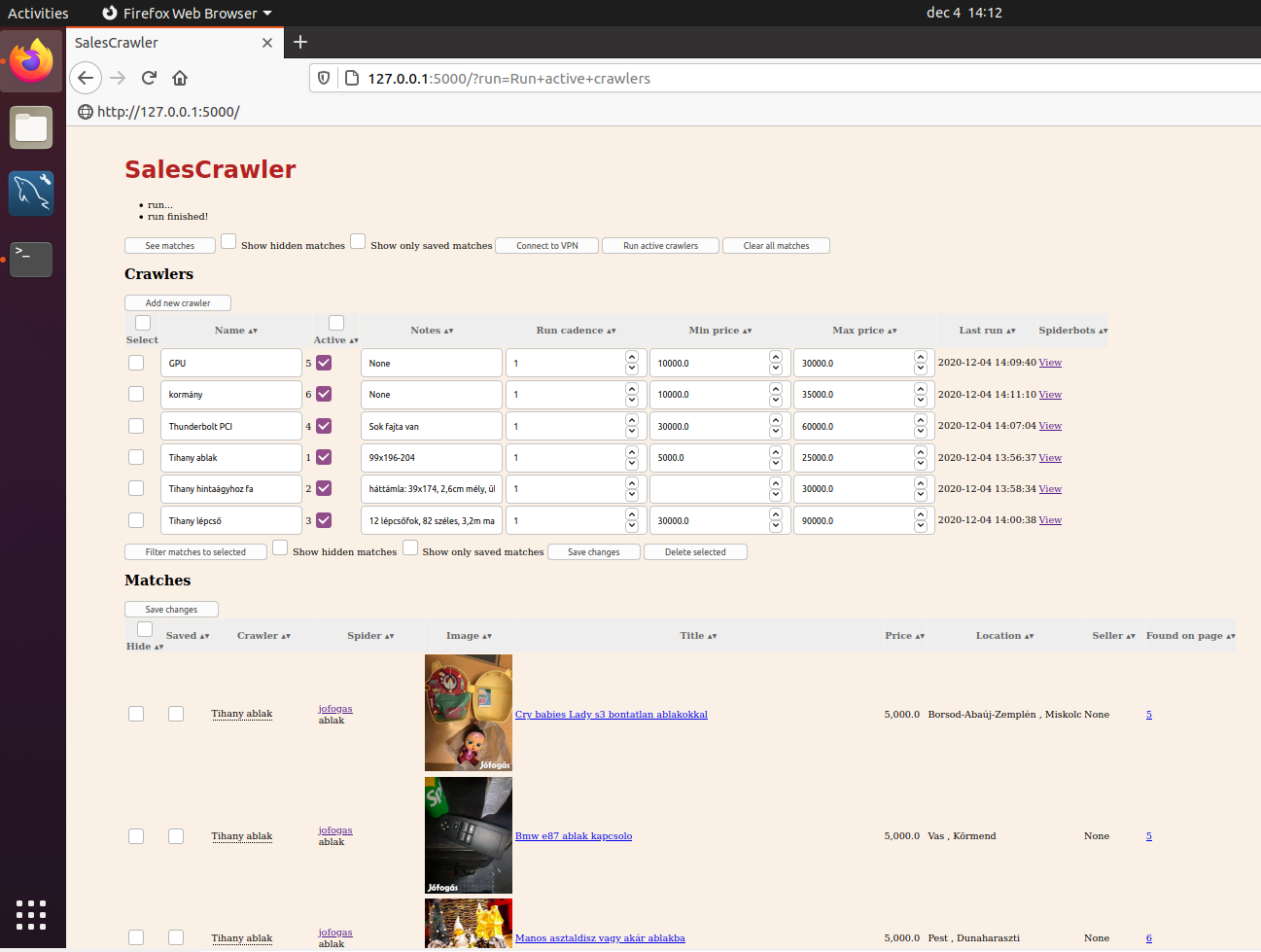

Backend, web GUI and a set of custom spiders for hosting regular searches with Scrapy spiders. Users can hide matches to avoid repeatedly checking the same found items. Still a work in progress; a first version packaged for pip is coming soon.
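
The idea of hiding matches can be illustrated with a minimal sketch. It assumes each found item is identified by its URL. The `Match` record and the `visible_matches` helper are hypothetical names for illustration, not part of Crawdy's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Match:
    # Hypothetical record for an item found by a spider (not Crawdy's actual model)
    url: str
    title: str
    price: float


def visible_matches(found, hidden_urls):
    """Return only the matches whose URL the user has not hidden.

    Assumes the URL uniquely identifies a found item.
    """
    return [m for m in found if m.url not in hidden_urls]


found = [
    Match("https://example.com/a", "Lens A", 120.0),
    Match("https://example.com/b", "Lens B", 95.0),
]
hidden = {"https://example.com/a"}
print([m.title for m in visible_matches(found, hidden)])  # ['Lens B']
```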

- Web server that provides the web GUI on the local machine

- Stores found matches in a MySQL database
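
A rough sketch of how found matches could be stored without duplicates follows. The project uses MySQL; here Python's built-in `sqlite3` stands in only so the example runs without a database server. The table and column names (`matches`, `hidden`, `favorite`) are assumptions, not Crawdy's actual schema.

```python
import sqlite3

# Hypothetical schema; sqlite3 is a stand-in for the project's MySQL database.
SCHEMA = """
CREATE TABLE IF NOT EXISTS matches (
    id       INTEGER PRIMARY KEY,
    url      TEXT NOT NULL UNIQUE,   -- one row per found item
    title    TEXT NOT NULL,
    price    REAL,
    hidden   INTEGER NOT NULL DEFAULT 0,
    favorite INTEGER NOT NULL DEFAULT 0
)
"""


def store_match(conn, url, title, price):
    """Insert a match; return True if it is new, False if the URL was already stored."""
    cur = conn.execute(
        "INSERT OR IGNORE INTO matches (url, title, price) VALUES (?, ?, ?)",
        (url, title, price),
    )
    conn.commit()
    # rowcount is 1 when a row was inserted, 0 when the UNIQUE constraint ignored it
    return cur.rowcount == 1


def mark_favorite(conn, url):
    """Flag a stored match as a favorite."""
    conn.execute("UPDATE matches SET favorite = 1 WHERE url = ?", (url,))
    conn.commit()


def favorites(conn):
    """Return the titles of all favorite matches that are not hidden."""
    rows = conn.execute(
        "SELECT title FROM matches WHERE favorite = 1 AND hidden = 0 ORDER BY id"
    )
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
print(store_match(conn, "https://example.com/a", "Lens A", 120.0))  # True
print(store_match(conn, "https://example.com/a", "Lens A", 120.0))  # False
mark_favorite(conn, "https://example.com/a")
print(favorites(conn))  # ['Lens A']
```

The `UNIQUE` constraint on `url` lets the database itself reject repeats, so a spider can report every item it sees on each run. MySQL offers `INSERT IGNORE` for the same effect.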

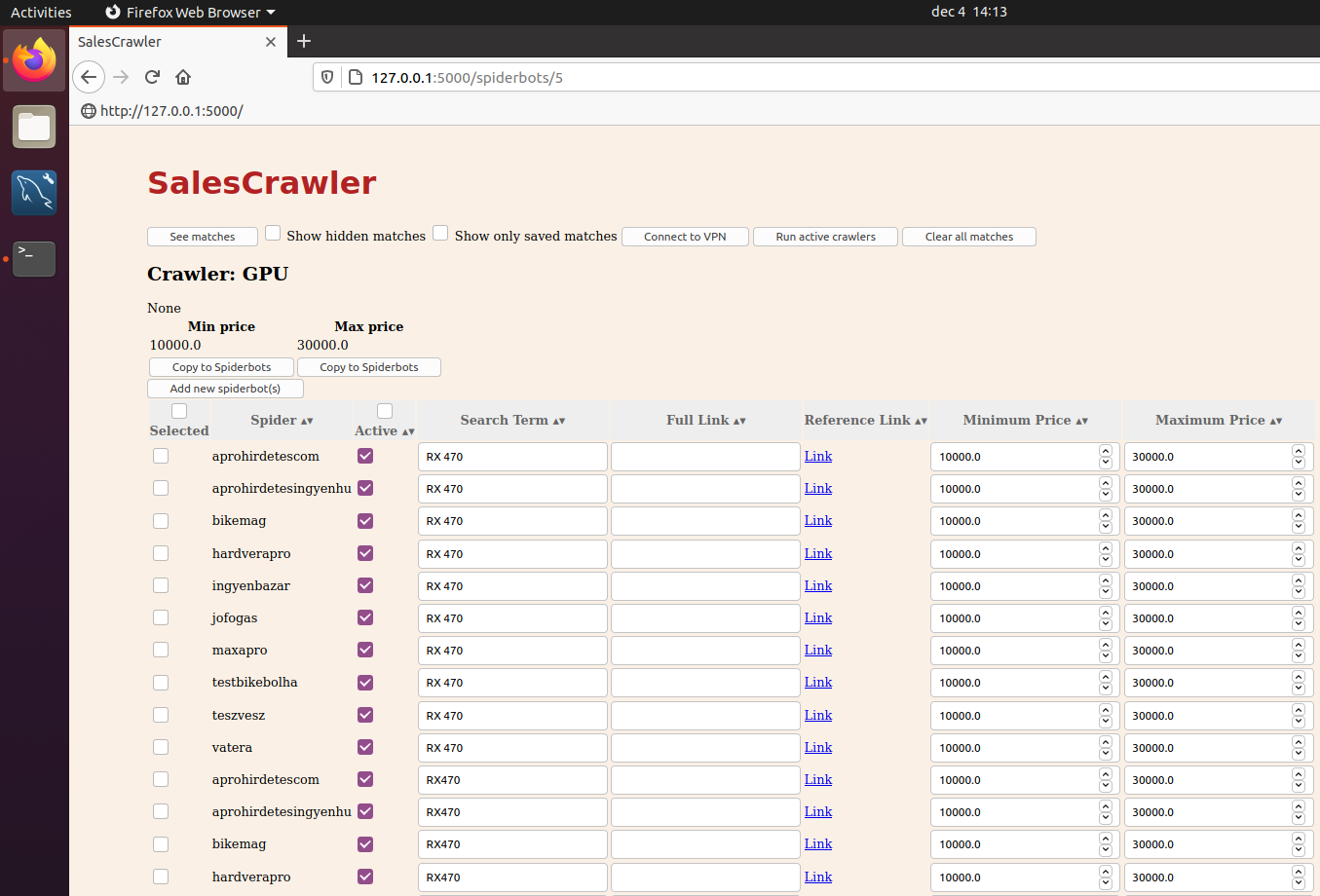

- Crawlers: a set of spiders searching for a specific item, with a min/max price and notes

- Spiderbots: Crawdy spiders configured with either a specific item to search for or a direct URL to a search page

- Matches: can be hidden or saved as favorites.

- Python 3 is required

- Follow or run `install_VM.sh` to install
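
The Crawlers and Spiderbots concepts listed above can be modelled roughly as follows. This is a sketch under assumptions: the class names, fields, and the `q` query parameter are hypothetical and do not describe Crawdy's real data model or any particular shop's search API.

```python
from dataclasses import dataclass, field
from typing import List, Optional
from urllib.parse import urlencode


@dataclass
class Spiderbot:
    # Hypothetical: a spider given either an item to search for or a direct search-page URL
    base_search_url: str
    item: Optional[str] = None
    direct_url: Optional[str] = None

    def start_url(self) -> str:
        """Use the direct URL if given, otherwise build a search URL from the item."""
        if self.direct_url:
            return self.direct_url
        if self.item:
            # 'q' is an assumed query parameter name
            return f"{self.base_search_url}?{urlencode({'q': self.item})}"
        raise ValueError("a Spiderbot needs an item or a direct URL")


@dataclass
class Crawler:
    # Hypothetical: a set of spiders for one item, with a price window and notes
    item: str
    min_price: float
    max_price: float
    notes: str = ""
    spiderbots: List[Spiderbot] = field(default_factory=list)

    def accepts(self, price: float) -> bool:
        """True if a found price lies within [min_price, max_price]."""
        return self.min_price <= price <= self.max_price

    def start_urls(self) -> List[str]:
        """Collect the start URL of every spiderbot in this crawler."""
        return [bot.start_url() for bot in self.spiderbots]


crawler = Crawler(
    "50mm lens",
    80.0,
    150.0,
    spiderbots=[
        Spiderbot("https://shop.example.com/search", item="50mm lens"),
        Spiderbot("", direct_url="https://other.example.com/lenses?sort=new"),
    ],
)
print(crawler.start_urls())
print(crawler.accepts(95.0), crawler.accepts(200.0))  # True False
```

Keeping the price window on the Crawler rather than on each Spiderbot means every spider searching for the same item is filtered by the same limits.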