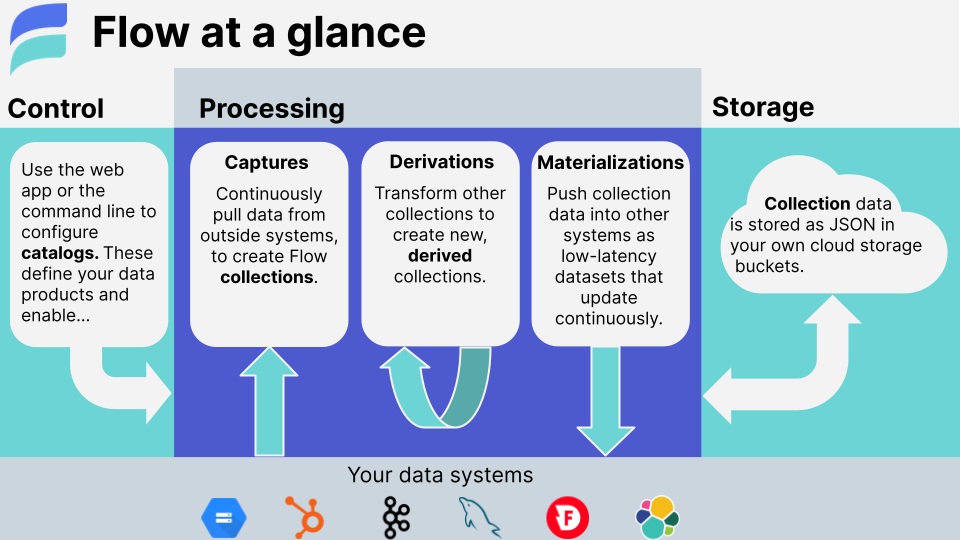

Estuary Flow is a DataOps platform that integrates all of the systems you use to produce, process, and consume data.

Flow unifies today's batch and streaming paradigms so that your systems – current and future – are synchronized around the same datasets, updating in milliseconds.

With a Flow pipeline, you:

- 📷 Capture data from your systems, services, and SaaS into collections: millisecond-latency datasets that are stored as regular files of JSON data, right in your cloud storage bucket.
- 🎯 Materialize a collection as a view within another system, such as a database, key/value store, Webhook API, or pub/sub service.
- 🌊 Derive new collections by transforming from other collections, using the full gamut of stateful stream workflows, joins, and aggregations.

❗️ Our UI-based web application is coming in Q2 of 2022. Learn about the beta program here. In future releases, we'll combine the CLI and UI into a unified platform. Flow is a tool meant to allow all data stakeholders to meaningfully collaborate: engineers, analysts, and everyone in between.❗️

- 🧐 Many `examples/` are available in this repo, covering a range of use cases and techniques.

Flow works with catalog specifications, written in declarative YAML and JSON Schema:

    captures:
      # Capture Citi Bike's public system ride data.
      examples/citi-bike/rides-from-s3:
        endpoint:
          connector:
            # Docker image which implements a capture from S3.
            image: ghcr.io/estuary/source-s3:dev
            # Configuration for the S3 connector.
            # This can alternatively be provided as a file, and Flow integrates with
            # https://github.com/mozilla/sops for protecting credentials at rest.
            config:
              # The dataset is public and doesn't require credentials.
              awsAccessKeyId: ""
              awsSecretAccessKey: ""
              region: "us-east-1"
        bindings:
          # Bind files starting with s3://tripdata/JC-201703 into a collection.
          - resource:
              stream: tripdata/JC-201703
              syncMode: incremental
            target: examples/citi-bike/rides

    collections:
      # A collection of Citi Bike trips.
      examples/citi-bike/rides:
        key: [/bike_id, /begin/timestamp]
        # JSON schema against which all trips must validate.
        schema: https://raw.githubusercontent.com/estuary/flow/master/examples/citi-bike/ride.schema.yaml
        # Projections relate a tabular structure (like SQL, or the CSV in the "tripdata" bucket)
        # with a hierarchical document like JSON. Here we define projections for the various
        # column headers that Citi Bike uses in their published CSV data. For example some
        # files use "Start Time", and others "starttime": both map to /begin/timestamp
        projections:
          bikeid: /bike_id
          birth year: /birth_year
          end station id: /end/station/id
          end station latitude: /end/station/geo/latitude
          end station longitude: /end/station/geo/longitude
          end station name: /end/station/name
          start station id: /begin/station/id
          start station latitude: /begin/station/geo/latitude
          start station longitude: /begin/station/geo/longitude
          start station name: /begin/station/name
          start time: /begin/timestamp
          starttime: /begin/timestamp
          stop time: /end/timestamp
          stoptime: /end/timestamp
          tripduration: /duration_seconds
          usertype: /user_type

    materializations:
      # Materialize rides into a PostgreSQL database.
      examples/citi-bike/to-postgres:
        endpoint:
          connector:
            image: ghcr.io/estuary/materialize-postgres:dev
            config:
              # Try this by standing up a local PostgreSQL database.
              # docker run --rm -e POSTGRES_PASSWORD=password -p 5432:5432 postgres -c log_statement=all
              # (Use host: host.docker.internal when running Docker for Windows/Mac).
              address: localhost:5432
              password: password
              database: postgres
              user: postgres
        bindings:
          # Flow creates a 'citi_rides' table for us and keeps it up to date.
          - source: examples/citi-bike/rides
            resource:
              table: citi_rides

    storageMappings:
      # Flow builds out data lakes for your collections in your cloud storage buckets.
      # A storage mapping relates a prefix, like examples/citi-bike/, to a storage location.
      # Here we tell Flow to store everything in one bucket.
      "": { stores: [{ provider: S3, bucket: my-storage-bucket }] }

❗ These workflows are under active development and may change. Note that Flow doesn't work yet on Apple M1. For now, we recommend CodeSpaces or a separate Linux server.

Start a PostgreSQL server on your machine:

    $ docker run --rm -e POSTGRES_PASSWORD=password -p 5432:5432 postgres -c log_statement=all

Start a Flow data plane on your machine:

    $ flowctl-admin temp-data-plane
    export BROKER_ADDRESS=http://localhost:8080
    export CONSUMER_ADDRESS=http://localhost:9000

In another tab, apply the exported `BROKER_ADDRESS` and `CONSUMER_ADDRESS`, and save the example to `flow.yaml`. Then apply it to the data plane:

    $ flowctl-admin deploy --source flow.yaml

You'll see a table created and loaded within your PostgreSQL server.
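The projections in the example catalog relate flat CSV column headers to locations inside a nested JSON document. As a rough illustration of that idea only (not Flow's implementation), the Python sketch below applies a handful of those header-to-pointer mappings to a CSV row. The helper names `set_pointer` and `row_to_document` are hypothetical, header matching is assumed to be case-insensitive, and values are left as strings.

```python
from typing import Any, Dict

# A few projections copied from the example catalog: CSV header -> JSON pointer.
PROJECTIONS = {
    "bikeid": "/bike_id",
    "start time": "/begin/timestamp",
    "starttime": "/begin/timestamp",
    "start station name": "/begin/station/name",
    "tripduration": "/duration_seconds",
}


def set_pointer(doc: Dict[str, Any], pointer: str, value: Any) -> None:
    """Set `value` at a simple JSON pointer (object keys only, no escapes or arrays)."""
    *parents, leaf = pointer.lstrip("/").split("/")
    node = doc
    for part in parents:
        # Create intermediate objects on demand.
        node = node.setdefault(part, {})
    node[leaf] = value


def row_to_document(row: Dict[str, str]) -> Dict[str, Any]:
    """Build a nested document from a flat CSV row, skipping unmapped columns."""
    doc: Dict[str, Any] = {}
    for header, value in row.items():
        # Assumption: headers are matched case-insensitively.
        pointer = PROJECTIONS.get(header.lower())
        if pointer is not None:
            set_pointer(doc, pointer, value)
    return doc


# Two header spellings that appear in different published files.
old_style = row_to_document({"Start Time": "2017-03-01 00:00:32", "bikeid": "24575"})
new_style = row_to_document({"starttime": "2017-03-01 00:00:32", "bikeid": "24575"})
```

Both rows end up as the same nested document, which is the point of declaring several projections for one location.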

Captures and materializations use connectors: pluggable Docker images which implement connectivity to a specific external system. Estuary is implementing connectors on an ongoing basis. Flow can also run any connector implemented to the Airbyte specification.
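To give a feel for what a capture connector produces: connectors written to the Airbyte specification emit newline-delimited JSON messages on standard output, including `RECORD` messages carrying data and `STATE` messages carrying a checkpoint. The sketch below separates the two. It is an illustration under that assumption, not a description of how Flow consumes connector output; `split_messages` and the sample lines are hypothetical.

```python
import json
from typing import Any, Dict, Iterable, List, Optional, Tuple


def split_messages(
    lines: Iterable[str],
) -> Tuple[List[Tuple[str, Dict[str, Any]]], Optional[Dict[str, Any]]]:
    """Collect (stream, data) pairs from RECORD messages and the latest STATE payload."""
    records: List[Tuple[str, Dict[str, Any]]] = []
    state: Optional[Dict[str, Any]] = None
    for line in lines:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if message.get("type") == "RECORD":
            record = message["record"]
            records.append((record["stream"], record["data"]))
        elif message.get("type") == "STATE":
            # Keep only the most recent checkpoint.
            state = message["state"]
        # Other message types (for example LOG) are ignored in this sketch.
    return records, state


# Hypothetical connector output, one JSON message per line.
sample_output = [
    '{"type": "RECORD", "record": {"stream": "tripdata", "data": {"bikeid": 24575}, "emitted_at": 1488326432000}}',
    '{"type": "LOG", "log": {"level": "INFO", "message": "read one file"}}',
    '{"type": "STATE", "state": {"data": {"cursor": "JC-201703"}}}',
]
records, state = split_messages(sample_output)
```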

Flow builds on Gazette, a streaming broker created by the same founding team. Collections have logical and physical partitions which are implemented as Gazette journals. Derivations and materializations leverage the Gazette consumer framework, which provides durable state management, exactly-once semantics, high availability, and dynamic scale-out.

Flow collections are both a batch dataset – they're stored as a structured "data lake" of general-purpose files in cloud storage – and a stream, able to commit new documents and forward them to readers within milliseconds. New use cases read directly from cloud storage for high-scale backfills of history, and seamlessly transition to low-latency streaming on reaching the present.
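The backfill-then-stream behavior can be pictured with a small Python sketch. It assumes each document carries a monotonically increasing offset, so a reader can finish the stored files and pick up the live stream without repeating or skipping anything. The function `read_collection` and the offset scheme are hypothetical simplifications, not Flow's or Gazette's actual API.

```python
from typing import Iterable, Iterator, List, Tuple

Entry = Tuple[int, str]  # (offset, document); a hypothetical simplification


def read_collection(
    stored_files: List[List[Entry]],
    live_stream: Iterable[Entry],
) -> Iterator[str]:
    """Yield documents from stored files first, then continue from the live stream."""
    next_offset = 0

    # Phase 1: backfill history by scanning the stored files in order.
    for file_entries in stored_files:
        for offset, document in file_entries:
            if offset >= next_offset:
                yield document
                next_offset = offset + 1

    # Phase 2: tail the live stream, skipping anything already read from storage.
    for offset, document in live_stream:
        if offset >= next_offset:
            yield document
            next_offset = offset + 1


stored = [[(0, "ride-a"), (1, "ride-b")], [(2, "ride-c")]]
live = [(2, "ride-c"), (3, "ride-d")]  # overlaps with storage at offset 2
documents = list(read_collection(stored, live))
```

Tracking the next expected offset is what makes the hand-off seamless in this sketch: the overlapping document at offset 2 is delivered once.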

Gazette, on which Flow is built, has been operating at large scale (GB/s) for many years now and is very stable.

Flow itself is winding down from an intense period of research and development. Estuary is running production pilots now, for a select group of beta customers (you can reach out for a free consult with the team). For now, we encourage you to use Flow in a testing environment, but you might see unexpected behaviors or failures simply due to the pace of development.

Flow's speed depends on the use case, of course, but it is fast. On a modest machine, we're seeing complex, real-world use cases achieve 10K inputs per second, where each input involves many downstream derivations and materializations. We haven't begun any profile-guided optimization work, though, so this is likely to improve.

Flow mixes a variety of architectural techniques to achieve great throughput without adding latency:

- Optimistic pipelining, using the natural back-pressure of systems to which data is committed

- Leveraging `reduce` annotations to group collection documents by key wherever possible, in memory, before writing them out.

- Co-locating derivation states (registers) with derivation compute: registers live in an embedded RocksDB that's replicated for durability and machine re-assignment. They update in memory and only write out at transaction boundaries.

- Vectorizing the work done in external Remote Procedure Calls (RPCs) and even process-internal operations.

- Marrying the development velocity of Go with the raw performance of Rust, using a zero-copy CGO service channel.
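One of the techniques above, grouping documents by key in memory before writing them out, can be sketched in a few lines of Python. This is only an illustration of the idea: a simple sum stands in for whatever reduction a schema declares, and `combine_by_key` and its parameters are hypothetical names rather than part of Flow.

```python
from typing import Any, Dict, Iterable, List, Sequence, Tuple


def combine_by_key(
    documents: Iterable[Dict[str, Any]],
    key_fields: Sequence[str],
    sum_fields: Sequence[str],
) -> List[Dict[str, Any]]:
    """Merge documents that share a key, summing the given fields, before any write."""
    combined: Dict[Tuple[Any, ...], Dict[str, Any]] = {}
    for doc in documents:
        key = tuple(doc[field] for field in key_fields)
        if key not in combined:
            # Copy so the caller's document is not mutated.
            combined[key] = dict(doc)
        else:
            for field in sum_fields:
                combined[key][field] += doc[field]
    # One output document per key, in first-seen order.
    return list(combined.values())


batch = [
    {"station": "A", "rides": 1},
    {"station": "B", "rides": 1},
    {"station": "A", "rides": 1},
]
to_write = combine_by_key(batch, key_fields=["station"], sum_fields=["rides"])
```

Three input documents collapse into two writes here; the saving grows with how often keys repeat within a batch.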

- To cross-compile `musl` binaries from a darwin arm64 (M1) machine, you need to install `musl-cross` and link it:

      brew install filosottile/musl-cross/musl-cross
      sudo ln -s /opt/homebrew/opt/musl-cross/bin/x86_64-linux-musl-gcc /usr/local/bin/musl-gcc

- Install GNU `coreutils`, which are used in the build process:

      brew install coreutils

- If you encounter build errors complaining about missing symbols for x86_64 architecture, try setting the following environment variables:

      export GOARCH=arm64
      export CGO_ENABLED=1

- If you encounter build errors related to openssl, you probably have openssl 3 installed, rather than openssl 1.1:

      $ brew uninstall openssl@3
      $ brew install openssl@1.1

  Also make sure to follow homebrew's prompt about setting `LDFLAGS` and `CPPFLAGS`.

- If you encounter build errors complaining about `invalid linker name in argument '-fuse-ld=lld'`, you probably need to install llvm:

      $ brew install llvm

  Also make sure to follow homebrew's prompt about adding llvm to your `PATH`.