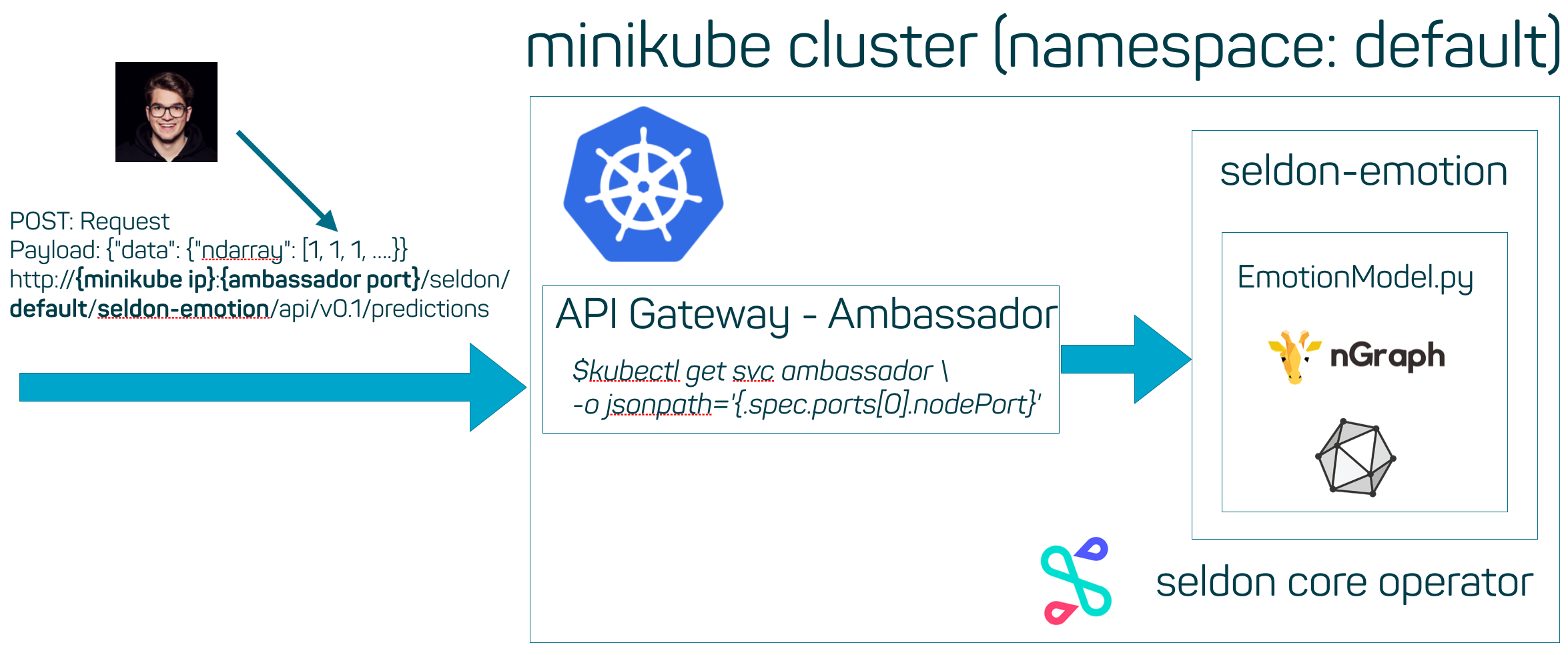

This repository shows how to serve an ONNX model with seldon-core. It deploys a deep convolutional neural network for facial emotion recognition to a local Kubernetes cluster. The ONNX model comes from the onnx/models repository.

We deploy a model in a Kubernetes cluster to perform emotion recognition on a face.

- images: Contains the test image smile.jpg and documentation images

- model/model.onnx: emotion_ferplus

- Dockerfile: Dockerfile to run the application locally

- EmotionModel.py: Python wrapper for seldon-core

- Seldon_Kubernetes.ipynb: Tutorial code to deploy model on Kubernetes

- client.py: Client script to test the deployed Kubernetes model

- emotion_service_deployment.json: Kubernetes deployment template for seldon-core-operator

- nGraph_ONNX_Example.ipynb: nGraph ONNX example

- payload.json: JSON representation of smile.jpg for testing
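To illustrate what a Python wrapper for seldon-core generally looks like, here is a minimal sketch. It is not the repository's EmotionModel.py: the class name `EmotionModelSketch` and the `infer` hook are hypothetical, and a dummy function stands in for the loaded ONNX model. The only part taken from seldon-core is the convention that the wrapper class exposes a `predict(X, features_names)` method.

```python
import numpy as np


class EmotionModelSketch:
    """Hypothetical stand-in for EmotionModel.py; not the repository's code."""

    def __init__(self, infer=None):
        # `infer` is an assumed hook: any callable that maps a float32 array of
        # shape (N, 1, 64, 64) to scores of shape (N, 8). In a real wrapper
        # this would be the loaded ONNX model.
        self._infer = infer if infer is not None else self._dummy_infer

    @staticmethod
    def _dummy_infer(batch):
        # Placeholder so the sketch runs without a model file: all-zero scores.
        return np.zeros((batch.shape[0], 8), dtype=np.float32)

    def predict(self, X, features_names=None):
        # seldon-core hands the request's "ndarray" payload to predict() as X.
        batch = np.asarray(X, dtype=np.float32)
        if batch.shape[1:] != (1, 64, 64):
            raise ValueError(f"expected shape (N, 1, 64, 64), got {batch.shape}")
        return self._infer(batch)
```

Validating the input shape inside `predict` keeps malformed requests from reaching the model, which makes errors easier to diagnose from the client side.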

The following tools are required:

- minikube: local Kubernetes cluster

- helm: Package manager for Kubernetes

- s2i (source-to-image): Create containers through templates and source code
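Before starting, it can help to confirm that the tools listed above are on the PATH. The following is a small sketch using only the Python standard library; the helper name `missing_tools` is made up for this example, and the check only looks for the executables, not for specific versions.

```python
import shutil

# Command names of the tools listed above.
REQUIRED_TOOLS = ("minikube", "helm", "s2i")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the names in `tools` that are not found on the PATH."""
    return [name for name in tools if shutil.which(name) is None]
```

Calling `missing_tools()` returns an empty list when all three commands are available.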

Clone the repository and change into its folder. Then build the Docker image and start the service locally:

docker build -t emotion_service:0.1 . && docker run -p 5000:5000 -it emotion_service:0.1

With the container running, send a test request with the following Python script:

from PIL import Image

import numpy as np

import requests

path_to_image = "images/smile.jpg"

image = Image.open(path_to_image).convert('L')

resized = image.resize((64, 64))

values = np.array(resized).reshape(1, 1, 64, 64)

req = requests.post("http://localhost:5000/predict", json={"data": {"ndarray": values.tolist()}})

print(req.json())

All commands to set up the model on the Kubernetes cluster can be found in the Seldon_Kubernetes.ipynb notebook.
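The response of the local request script above can be turned into a readable label. The sketch below makes two assumptions that are not confirmed by this repository: the service answers in the Seldon format `{"data": {"ndarray": [[...]]}}`, and the eight values are raw scores in the label order documented for emotion_ferplus in onnx/models. The helper names `softmax` and `top_emotion` are chosen for this example only.

```python
import math

# Label order as documented for emotion_ferplus in onnx/models.
EMOTIONS = ("neutral", "happiness", "surprise", "sadness",
            "anger", "disgust", "fear", "contempt")


def softmax(scores):
    """Convert raw scores to probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_emotion(response_json):
    """Return (label, probability) for the first sample in the response.

    Assumes the shape {"data": {"ndarray": [[s0, ..., s7]]}}.
    """
    scores = response_json["data"]["ndarray"][0]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]
```

With the script above, `top_emotion(req.json())` would give the most likely emotion. If the wrapper already returns probabilities, the softmax step should be dropped, since it would distort the values even though the top label stays the same.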

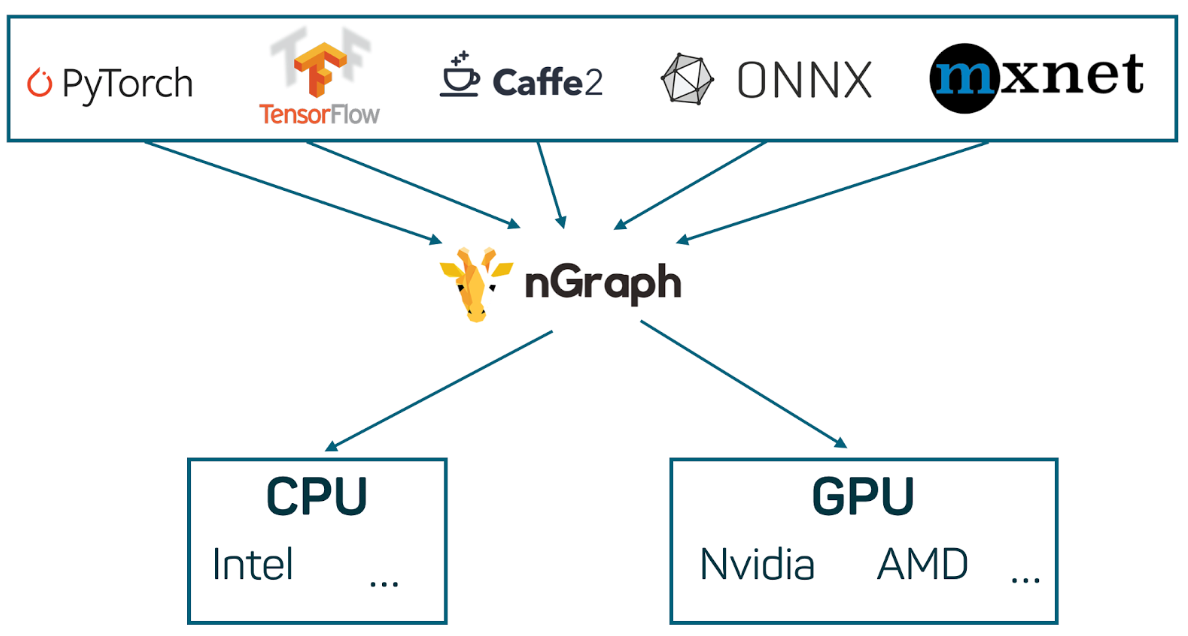

For more on nGraph, see the nGraph compiler repository. The snippet below imports the ONNX model and compiles it with nGraph:

from ngraph_onnx.onnx_importer.importer import import_onnx_file

import ngraph as ng

# Import the ONNX file

model = import_onnx_file('model/model.onnx')

# Create an nGraph runtime environment

runtime = ng.runtime(backend_name='CPU')

# Compile the imported model to a callable function

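emotion_cnn = runtime.computation(model)

To test the deployed model, get the cluster IP and the Ambassador node port, then post the payload to the prediction endpoint:

minikube ip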

kubectl get svc ambassador -o jsonpath='{.spec.ports[0].nodePort}'

curl -vX POST http://192.168.99.100:30809/seldon/default/seldon-emotion/api/v0.1/predictions -d @payload.json --header "Content-Type: application/json"
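The same request can be sent from Python. The sketch below uses only the standard library and mirrors the curl call above; the helper names `prediction_url` and `post_payload` are invented for this example, and the host and port must be replaced with the values returned by the two commands above.

```python
import json
import urllib.request


def prediction_url(host, port, namespace="default", deployment="seldon-emotion"):
    """Build the prediction endpoint used in the curl call above."""
    return f"http://{host}:{port}/seldon/{namespace}/{deployment}/api/v0.1/predictions"


def post_payload(url, payload_path="payload.json", timeout=10.0):
    """POST the JSON file at `payload_path` to `url` and return the parsed reply."""
    with open(payload_path, "rb") as fh:
        body = fh.read()
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `post_payload(prediction_url("192.168.99.100", 30809))` sends payload.json to the address used in the curl command; the IP and port differ between clusters.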