This repository is for the paper Evaluating and Analyzing Relationship Hallucinations in Large Vision-Language Models (ICML 2024).

@InProceedings{pmlr-v235-wu24l,
  title = {Evaluating and Analyzing Relationship Hallucinations in Large Vision-Language Models},
  author = {Wu, Mingrui and Ji, Jiayi and Huang, Oucheng and Li, Jiale and Wu, Yuhang and Sun, Xiaoshuai and Ji, Rongrong},
  booktitle = {Proceedings of the 41st International Conference on Machine Learning},
  pages = {53553--53570},
  year = {2024},
  editor = {Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix},
  volume = {235},
  series = {Proceedings of Machine Learning Research},
  month = {21--27 Jul},
  publisher = {PMLR},
  pdf = {https://raw.githubusercontent.com/mlresearch/v235/main/assets/wu24l/wu24l.pdf},
  url = {https://proceedings.mlr.press/v235/wu24l.html},
}

Download R-Bench. The main annotation files include:

- image-level_filterd.json
- instance-level_filterd.json
- nocaps_pope_obj_random_image.json
- nocaps_pope_obj_popular_image.json
- nocaps_pope_obj_adversarial_image.json
- web_data
- instance_mask

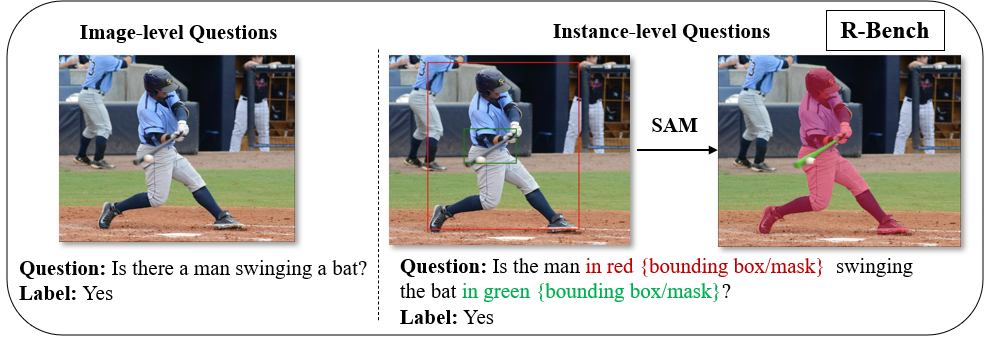

These files contain the annotations for the image-level, instance-level (the box and mask settings share the same questions), POPE-object, and web-data questions. For the image-level and instance-level questions, we randomly sampled five subsets, saved in the [type]_ids_[subset].json files.
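To run or score a single sampled subset, one option is to filter the questions by that subset's IDs. The sketch below assumes that each [type]_ids_[subset].json file is a plain JSON array of question IDs and that every question entry carries a question_id field (as in the Step 1 pseudocode); both the file layout and the helper names load_subset_ids and select_subset are hypothetical, so check them against the released files.

```python
import json
from typing import Any, Dict, Iterable, List


def load_subset_ids(path: str) -> List[Any]:
    """Read one [type]_ids_[subset].json file.

    Assumption (hypothetical layout): the file is a plain JSON array of question IDs.
    """
    with open(path) as f:
        return json.load(f)


def select_subset(questions: Iterable[Dict[str, Any]], subset_ids: Iterable[Any]) -> List[Dict[str, Any]]:
    """Keep only the questions whose question_id is in subset_ids, preserving question order."""
    wanted = set(subset_ids)
    return [q for q in questions if q["question_id"] in wanted]


# Toy in-memory example (placeholder questions, not R-Bench data)
questions = [
    {"question_id": 0, "text": "placeholder question A"},
    {"question_id": 1, "text": "placeholder question B"},
    {"question_id": 2, "text": "placeholder question C"},
]
subset = select_subset(questions, [2, 0])
print([q["question_id"] for q in subset])  # [0, 2]
```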

Download the images from image (sourced from the Open Images V4 validation set).

Step 1: Run the LVLM on R-Bench using the LVLM's official inference script.

For image-level questions, the pseudocode is as follows:

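import json
from PIL import Image

# questions: entries from the image-level annotation file; model: your LVLM's
# official inference call; answer_file: an output file opened for writing
for line in questions:
    question_id = line['question_id']
    question = line['text']
    image = Image.open(line['image'])  # prepend your local image folder if needed
    text = model(question, image)
    answer_file.write(json.dumps({"question_id": question_id, "text": text}) + "\n")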

For instance-level questions, the pseudocode is as follows. Set instance_level_box=True or instance_level_mask=True to obtain the instance-level results with boxes or masks, respectively.

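import json
from utils import instance_qs_construct, draw_box, draw_mask  # helpers provided in utils.py

instance_level_box = True    # set exactly one of the two flags to True
instance_level_mask = False
for line in questions:
    question_id = line['question_id']
    question = instance_qs_construct(line, type='mask' if instance_level_mask else 'box')
    if instance_level_box:
        image = draw_box(line)   # input image with the box drawn on it
    elif instance_level_mask:
        image = draw_mask(line)  # input image with the mask drawn on it
    text = model(question, image)
    answer_file.write(json.dumps({"question_id": question_id, "text": text}) + "\n")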

Then format the result.json file as follows, with one JSON object per line:

{"question_id": 0, "text": [model output]}
{"question_id": 1, "text": [model output]}
...
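A result file in this format can be written and sanity-checked before moving on to Step 2. The sketch below is an illustration, not part of the repository: write_results and read_results are hypothetical helpers, and the check only confirms that every non-blank line parses as JSON with question_id and text fields and that no question_id repeats.

```python
import io
import json
from typing import Any, Dict, Iterable, TextIO


def write_results(records: Iterable[Dict[str, Any]], fp: TextIO) -> None:
    """Write one {"question_id": ..., "text": ...} object per line."""
    for rec in records:
        fp.write(json.dumps({"question_id": rec["question_id"], "text": rec["text"]}) + "\n")


def read_results(fp: TextIO) -> Dict[Any, str]:
    """Parse a result file line by line and return {question_id: text}.

    Raises ValueError on a missing field or a repeated question_id.
    """
    results: Dict[Any, str] = {}
    for lineno, raw in enumerate(fp, start=1):
        if not raw.strip():
            continue  # skip blank lines
        obj = json.loads(raw)
        if "question_id" not in obj or "text" not in obj:
            raise ValueError(f"line {lineno}: missing 'question_id' or 'text'")
        if obj["question_id"] in results:
            raise ValueError(f"line {lineno}: duplicate question_id {obj['question_id']}")
        results[obj["question_id"]] = obj["text"]
    return results


# Round trip through an in-memory file
buf = io.StringIO()
write_results([{"question_id": 0, "text": "Yes."}, {"question_id": 1, "text": "No."}], buf)
buf.seek(0)
print(read_results(buf))  # {0: 'Yes.', 1: 'No.'}
```

For a real run, pass a handle from open("result.json", "w") (or "r" when checking) instead of io.StringIO.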

Tips: We provide tools for instance-level questions in utils.py. Use the draw_box and draw_mask functions to draw the box or mask on the input images, respectively, and use the instance_qs_construct function to reformat the instance-level questions.

Step 2: Run the evaluation with:

sh eval.sh

Tips: Set the --result-file argument to the file you generated; the eval.py script then automatically computes the average results over the different subsets. The results produced by our script are for the subsets; the Image (All) results reported in the paper are for reference only.

The evaluation code is based on POPE.
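Since the evaluation code is based on POPE, the kind of scoring involved can be sketched roughly as below. This is a minimal, hypothetical reimplementation, not the repository's eval.py. It assumes that the ground-truth labels are "yes"/"no" strings aligned with the model answers, maps each free-form answer to yes/no with a simple keyword heuristic in the spirit of POPE-style evaluation (first sentence only; "No", "no", or "not" means no), treats "yes" as the positive class, and averages subsets with an unweighted mean. The names parse_yes_no, pope_metrics, and average_over_subsets are illustrative.

```python
from statistics import mean
from typing import Dict, List


def parse_yes_no(text: str) -> str:
    """Map a free-form answer to 'yes' or 'no' with a keyword heuristic."""
    # Keep only the first sentence and drop commas before splitting into words.
    words = text.split(".")[0].replace(",", "").split(" ")
    return "no" if ("No" in words or "no" in words or "not" in words) else "yes"


def pope_metrics(preds: List[str], labels: List[str]) -> Dict[str, float]:
    """Accuracy, precision, recall, F1 and yes-ratio, with 'yes' as the positive class.

    Assumes preds and labels are non-empty and aligned index by index.
    """
    pairs = list(zip(preds, labels))
    tp = sum(p == "yes" and y == "yes" for p, y in pairs)
    fp = sum(p == "yes" and y == "no" for p, y in pairs)
    tn = sum(p == "no" and y == "no" for p, y in pairs)
    fn = sum(p == "no" and y == "yes" for p, y in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "yes_ratio": preds.count("yes") / len(preds),
    }


def average_over_subsets(per_subset: List[Dict[str, float]]) -> Dict[str, float]:
    """Unweighted mean of each metric across subsets."""
    return {k: mean(m[k] for m in per_subset) for k in per_subset[0]}


# Toy example with two made-up subsets
answers_a = ["Yes, the man is riding the horse.", "No.", "There is not a cup.", "Yes."]
preds_a = [parse_yes_no(a) for a in answers_a]
m_a = pope_metrics(preds_a, ["yes", "no", "yes", "no"])
m_b = pope_metrics(["yes", "no"], ["yes", "no"])
avg = average_over_subsets([m_a, m_b])
print(avg["accuracy"], avg["f1"])  # 0.75 0.75
```

The unweighted mean gives every subset equal weight; if a pooled score over differently sized subsets were wanted instead, one would concatenate the predictions and labels before calling pope_metrics.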