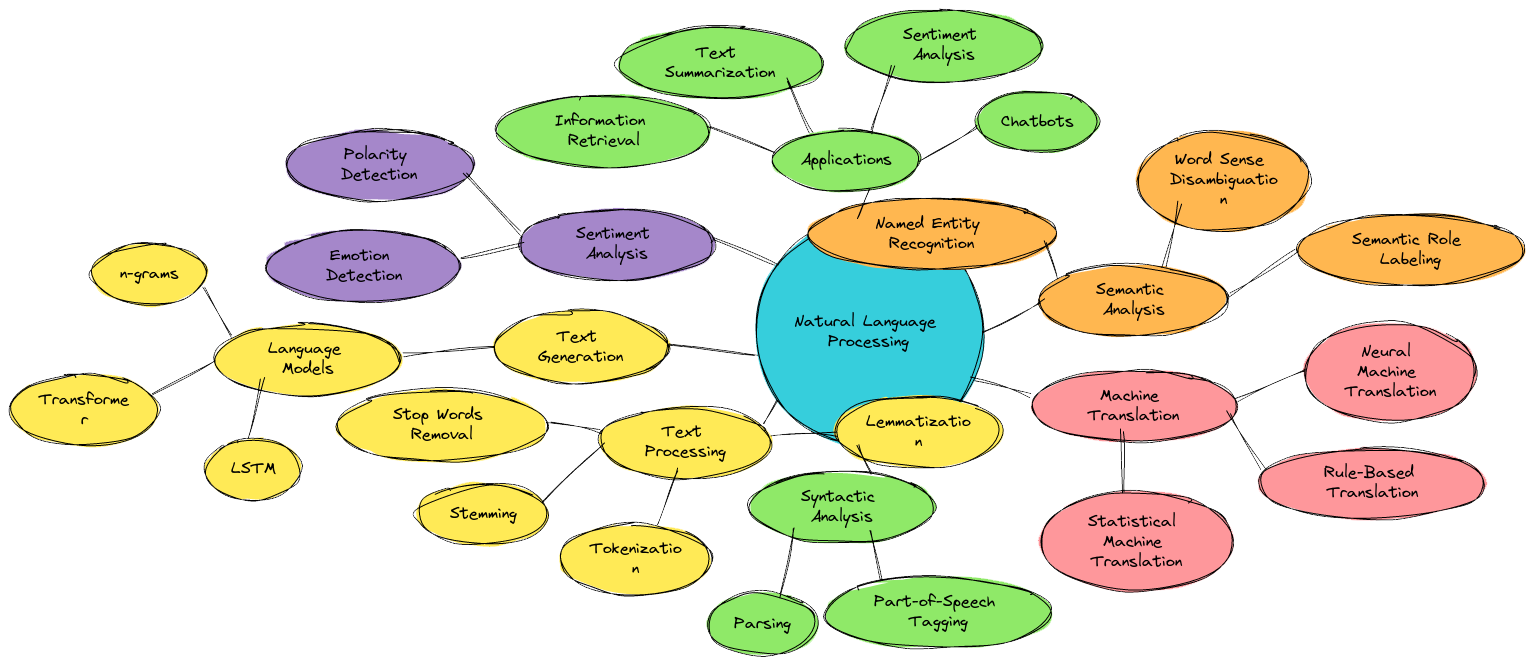

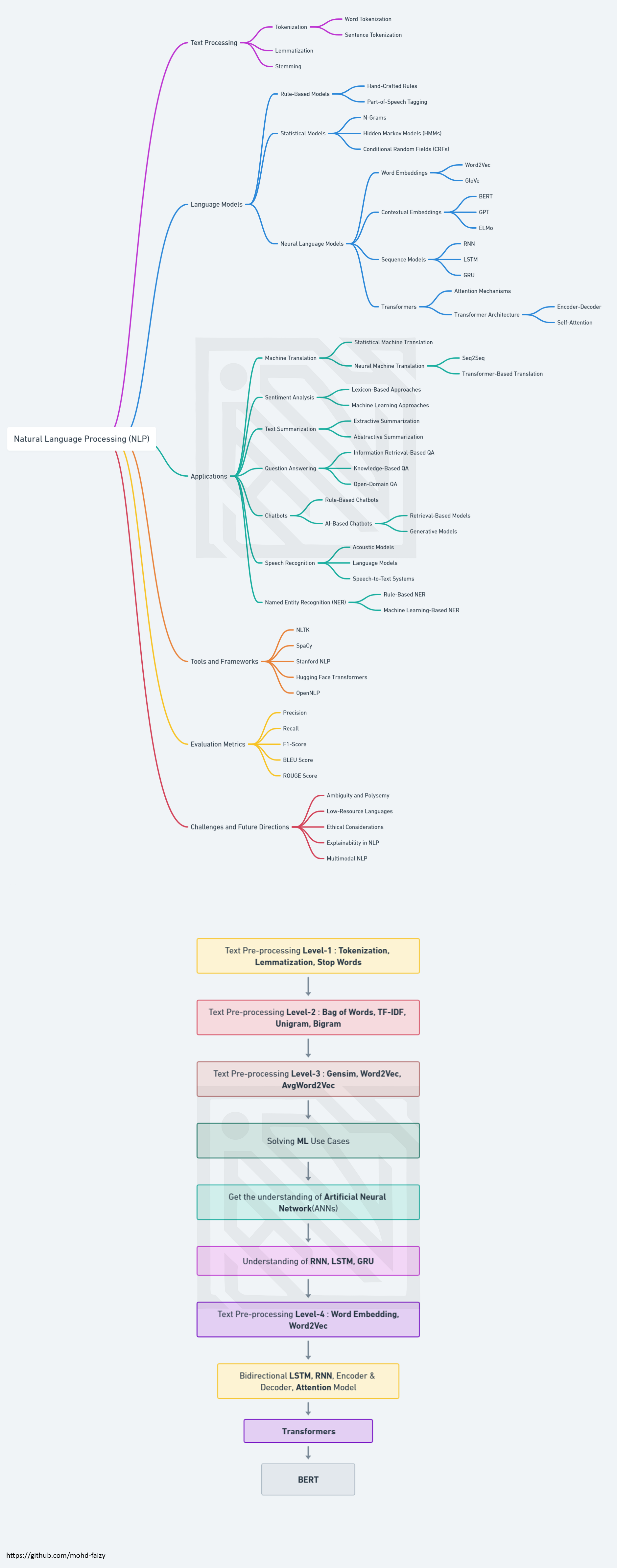

Welcome to the Natural Language Processing repository! It serves as a comprehensive resource for mastering NLP techniques in Python.

- ✨NLP Basics

- 🌟Sentiment Analysis

- 🤖Building Chatbots

- 🚀spaCy

- 🗣️Spoken Language Processing

- 📊Feature Engineering for NLP

- 📚Additional NLP Topics

- 🔥BERT (Bidirectional Encoder Representations from Transformers)

- 🌌Large Language Models (LLMs)


| Library/Function | Description |
|---|---|
| NLTK | NLTK (Natural Language Toolkit) is a comprehensive library for natural language processing tasks. It offers a wide range of tools and resources for text analysis, including tokenization, part-of-speech tagging, and sentiment analysis. |
| Scikit-learn | Scikit-learn, a versatile machine learning library, empowers you to build sophisticated models for various NLP applications. Its extensive set of algorithms and tools enables intelligent data-driven decision-making. |
| spaCy | spaCy is a robust NLP library suitable for both small-scale projects and enterprise-level applications. It excels in tasks like text processing, named entity recognition, and dependency parsing, offering high-performance processing. |
| Speech Recognition | Speech Recognition opens the door to voice data analysis, allowing you to explore and extract valuable insights from spoken language, facilitating voice-related NLP projects. |
| Gensim | Gensim is a specialized library for topic modeling and document similarity analysis. Widely used for text summarization and document clustering, it helps you extract meaningful information from textual data. |
| TextBlob | TextBlob is a user-friendly NLP library offering an intuitive interface for common NLP tasks such as sentiment analysis, part-of-speech tagging, and translation, simplifying the NLP process. |
| Transformers | The Transformers library from Hugging Face is the preferred choice for working with pre-trained language models like BERT, GPT-2, and T5. It empowers you to leverage state-of-the-art NLP capabilities with ease. |
| Word2Vec | Word2Vec is an algorithm dedicated to learning word embeddings from extensive text corpora. Leveraged by Gensim, it facilitates advanced text analysis and semantic understanding. |
| Stanford NLP | Stanford NLP provides a suite of advanced NLP tools, including tokenization, part-of-speech tagging, named entity recognition, and dependency parsing, enabling precise and comprehensive text analysis. |
| Pattern | Pattern is a versatile library for web mining, natural language processing, and machine learning. It equips you with a wide range of NLP tools and features for in-depth text analysis. |
| PyNLPIR | PyNLPIR serves as a Python wrapper for the Chinese text segmentation tool NLPIR, an essential component for Chinese NLP tasks, ensuring efficient and accurate text processing. |
| VADER Sentiment Analysis | VADER (Valence Aware Dictionary and sEntiment Reasoner) is a pre-trained sentiment analysis tool tailored for social media text. It aids in assessing sentiment polarity with precision in online content. |

| Category | Component | Description |

|---|---|---|

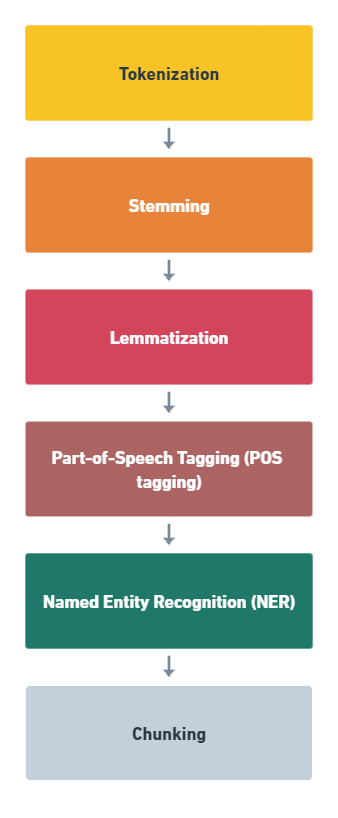

| Text Tokenization and Preprocessing | Tokenization | Splits text into words or sentences. |

| Stopwords Removal | Removes common words (e.g., "the," "and") that may not be informative for NLP tasks. | |

| Stemming | Reduces words to their root form (e.g., "running" becomes "run") to normalize text. | |

| Lemmatization | Similar to stemming but reduces words to their base or dictionary form (e.g., "better" becomes "good"). | |

| Part-of-Speech Tagging | POS Tagging | Assigns grammatical tags to words in a sentence (e.g., noun, verb, adjective). |

| Named Entity Recognition (NER) | Identifies and classifies named entities such as names of people, places, and organizations. | |

| Text Corpora and Resources | Corpus Data | Provides access to various text corpora and datasets for NLP research and practice. |

| Lexical Resources | Includes resources like WordNet, a lexical database, for synonym and semantic analysis. | |

| Parsing and Syntax Analysis | Parsing | Parses sentences to determine their grammatical structure. |

| Dependency Parsing | Analyzes the grammatical relationships between words in a sentence. | |

| Machine Learning and Classification | Naive Bayes | Implements Naive Bayes classifiers for text classification. |

| Decision Trees | Uses decision trees for text classification and other NLP tasks. | |

| Concordance and Frequency Analysis | Concordance | Provides concordance views of words within a corpus for context analysis. |

| Frequency Analysis | Analyzes word frequency and distribution in text data. | |

| Sentiment Analysis | Sentiment Analysis | Performs sentiment analysis to determine the sentiment (positive, negative, neutral) of text. |

| Word Similarity and Semantics | Word Similarity | Measures the similarity between words or phrases based on their meaning. |

| Semantic Relations | Analyzes semantic relations between words and concepts in text data. | |

| Language Processing Pipelines | NLP Pipelines | Constructs and customizes NLP processing pipelines for various tasks. |

| Language Models | Language Models | Provides access to pre-trained language models for various NLP tasks. |

| Categorization and Topic Modeling | Text Categorization | Categorizes documents into predefined topics or classes. |

| Topic Modeling | Identifies topics within a corpus of text documents using techniques like LDA (Latent Dirichlet Allocation). | |

| Language Translation | Translation | Supports machine translation of text between different languages. |
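
Several of the preprocessing components listed above (tokenization, stopword removal, stemming, and frequency analysis) can be combined in a few lines of NLTK. The following is a minimal sketch rather than a recommended pipeline: the `preprocess` helper, the tiny `STOPWORDS` set, and the sample sentence are assumptions made up for illustration, and `wordpunct_tokenize` is used because it needs no downloaded NLTK data.

```python
from nltk import FreqDist
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

# Hypothetical, hand-picked stopword list for this sketch only.
# NLTK's full list lives in the downloadable "stopwords" corpus.
STOPWORDS = {"the", "and", "a", "is", "are", "in", "of", "to"}


def preprocess(text):
    """Lowercase, tokenize, drop stopwords and punctuation, then stem."""
    stemmer = PorterStemmer()
    tokens = wordpunct_tokenize(text.lower())
    words = [t for t in tokens if t.isalpha() and t not in STOPWORDS]
    return [stemmer.stem(w) for w in words]


text = "The runners are running in the park, and the dogs are running too."
stems = preprocess(text)

# Frequency analysis over the stemmed tokens.
freq = FreqDist(stems)
print(stems)
print(freq.most_common(3))
```

Stemming is purely rule-based, so it can produce non-words; lemmatization with WordNet is the usual alternative when dictionary forms matter, at the cost of downloading the WordNet corpus first.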

| Category | Component | Description |
|---|---|---|
| Feature Extraction and Preprocessing | CountVectorizer | Converts a collection of text documents into a matrix of token counts. Each row represents a document, and each column represents a unique word (token). Useful for creating a Bag of Words (BoW) representation of text data. |
| | TfidfVectorizer | Converts text documents into a matrix of TF-IDF (Term Frequency-Inverse Document Frequency) features. TF-IDF considers both the frequency of a word in a document and its rarity across all documents. Helps in weighting words based on their importance in a document corpus. |
| | HashingVectorizer | Hashes words into a fixed-dimensional space. Useful for dealing with large datasets where traditional vectorization methods may be memory-intensive. |
| | LabelEncoder | Encodes class labels as integer values. Useful for converting text-based class labels into a format suitable for machine learning models. |
| Text Classification | LogisticRegression | A simple linear model used for binary and multiclass text classification. |
| | MultinomialNB | Naive Bayes classifier for discrete features such as word counts, which makes it a common choice for text data. Assumes that features (words) are conditionally independent given the class. |
| | SVM (SVC) | Support Vector Machine classifier, which can be used for text classification. Effective in high-dimensional spaces, which are common in text data. |
| | RandomForestClassifier | Ensemble method for text classification that combines multiple decision trees. Robust and capable of handling high-dimensional feature spaces. |
| Model Evaluation | cross_val_score | Performs k-fold cross-validation to evaluate model performance. Helps estimate how well a model will generalize to unseen data. |
| | GridSearchCV | Performs a grid search over hyperparameters to find the best model configuration. Useful for hyperparameter tuning. |
| | metrics | Module containing various metrics for evaluating classification models. Includes accuracy, precision, recall, F1-score, and more. |
| Dimensionality Reduction | TruncatedSVD | Dimensionality reduction technique using Singular Value Decomposition (SVD). Useful for reducing the dimensionality of high-dimensional text data while preserving important information. |
| Feature Selection | SelectKBest | Selects the top k features based on statistical tests. Helps in choosing the most informative features for text classification. |
| | SelectFromModel | Selects features based on the importance assigned to them by a specific model (e.g., decision trees). |
| Pipelines | Pipeline | Allows you to chain together multiple transformers and estimators into a single object. Useful for creating a streamlined workflow for text preprocessing and modeling. |
| | FeatureUnion | Combines the results of multiple transformer objects into a single feature space. |
| Preprocessing and Transformation | StandardScaler | Standardizes features by removing the mean and scaling to unit variance. Useful for ensuring that features have similar scales, which can be important for certain algorithms. |
| | MinMaxScaler | Scales features to a specified range, typically [0, 1]. |
| | LabelBinarizer | Converts categorical labels into a one-hot encoding format. |
| Clustering | KMeans | A popular clustering algorithm that can be applied to group text documents into clusters based on similarity. |
| | DBSCAN | Density-based clustering algorithm that can be used for text data to discover clusters with varying shapes and sizes. |
| Model Serialization | joblib | A library used to save trained models to disk and load them for future use. |
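
To show how a few of the components above fit together, here is a small sketch that chains `TfidfVectorizer` and `LogisticRegression` in a `Pipeline`. The six training sentences and their labels are toy data invented for this example, so the result says nothing about real-world accuracy.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

# Toy corpus made up for illustration only.
texts = [
    "I loved this movie, it was great",
    "What a great and wonderful film",
    "Absolutely loved the wonderful acting",
    "I hated this movie, it was terrible",
    "What a terrible and boring film",
    "Absolutely hated the boring acting",
]
labels = [1, 1, 1, 0, 0, 0]  # 1 = positive, 0 = negative

# The pipeline vectorizes raw text and feeds the TF-IDF matrix to the classifier,
# so fit() and predict() both accept plain strings.
clf = Pipeline([
    ("tfidf", TfidfVectorizer()),
    ("logreg", LogisticRegression()),
])
clf.fit(texts, labels)

predictions = clf.predict([
    "a great and wonderful movie",
    "a boring and terrible movie",
])
print(predictions)
```

Wrapping both steps in one `Pipeline` object also means it can be passed directly to `cross_val_score` or `GridSearchCV`, so the vectorizer is refit on each training fold instead of leaking vocabulary from held-out data.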

| Category | Component | Description |
|---|---|---|
| Tokenization and Text Preprocessing | Tokenization | Splits text into words, punctuation, and spaces, creating tokens. |
| | Named Entity Recognition (NER) | Identifies and classifies named entities such as names of people, places, and organizations. |
| | Part-of-Speech Tagging | Assigns grammatical tags to words in a sentence (e.g., noun, verb, adjective). |
| | Lemmatization | Reduces words to their base or dictionary form (e.g., "better" becomes "good"). |
| | Dependency Parsing | Analyzes grammatical relationships between words in a sentence. |
| Word Vectors and Embeddings | Word Vectors | Provides word vectors (word embeddings) for words in various languages. |
| | Pre-trained Models | Offers pre-trained models with word embeddings for common NLP tasks. |
| | Similarity Analysis | Measures word and document similarity based on word vectors. |
| Text Classification | Text Classification | Supports text classification tasks using machine learning models. |
| | Custom Models | Allows training custom text classification models with spaCy. |
| Rule-Based Matching | Rule-Based Matching | Defines rules to identify and extract information based on patterns in text data. |
| | Phrase Matching | Matches phrases and entities using custom rules. |
| Entity Linking and Disambiguation | Entity Linking | Links named entities to external knowledge bases or databases (e.g., Wikipedia). |
| | Disambiguation | Resolves entity mentions to the correct entity in a knowledge base. |
| Text Summarization | Text Summarization | Generates concise summaries of longer text documents. |
| | Extractive Summarization | Summarizes text by selecting and extracting important sentences. |
| | Abstractive Summarization | Summarizes text by generating new sentences that capture the essence of the content. |
| Dependency Visualization | Dependency Visualization | Creates visual representations of sentence grammatical structure and dependencies. |
| Language Detection | Language Detection | Detects the language of text data. |
| Named Entity Recognition (NER) Customization | NER Training | Allows training custom named entity recognition models for specific entities or domains. |
| Language Support | Multilingual Support | Provides language models and support for multiple languages. |
| | Language Models | Includes pre-trained language models for various languages. |
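
As an illustration of the tokenization and rule-based matching rows above, the sketch below uses a blank English pipeline, which only tokenizes and therefore needs no trained model download. It assumes the spaCy v3 `Matcher.add(key, patterns)` signature; the pattern name `NLP_TERM` and the sample sentence are made up for the example.

```python
import spacy
from spacy.matcher import Matcher

# spacy.blank("en") creates a tokenizer-only pipeline (no tagger, parser, or NER).
nlp = spacy.blank("en")

# Token-level pattern: three consecutive tokens matched case-insensitively.
matcher = Matcher(nlp.vocab)
pattern = [{"LOWER": "natural"}, {"LOWER": "language"}, {"LOWER": "processing"}]
matcher.add("NLP_TERM", [pattern])  # "NLP_TERM" is an arbitrary label

doc = nlp("spaCy makes Natural Language Processing in Python approachable.")
tokens = [token.text for token in doc]

# Each match is (match_id, start, end); slice the doc to recover the span text.
matches = [doc[start:end].text for _, start, end in matcher(doc)]
print(tokens)
print(matches)
```

Components such as part-of-speech tagging, dependency parsing, and NER need a trained pipeline (for example one loaded with `spacy.load`), which has to be installed separately.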

| Category | Component | Description |
|---|---|---|
| Word Embeddings and Word Vector Models | Word2Vec | Implements Word2Vec models for learning word embeddings from text data. |
| | FastText | Provides FastText models for learning word embeddings, including subword information. |
| | Doc2Vec | Learns document-level embeddings, allowing you to represent entire documents as vectors. |
| Topic Modeling | Latent Dirichlet Allocation (LDA) | Implements LDA for discovering topics within a collection of documents. |
| | Latent Semantic Analysis (LSA) | Performs LSA for extracting topics and concepts from large document corpora. |
| | Non-Negative Matrix Factorization (NMF) | Applies NMF for topic modeling and feature extraction from text data. |
| Similarity and Document Comparison | Cosine Similarity | Measures cosine similarity between vectors, useful for document and word similarity comparisons. |
| | Similarity Queries | Supports similarity queries to find similar documents or words based on embeddings. |
| Text Preprocessing | Tokenization | Provides text tokenization for splitting text into words or sentences. |
| | Stopwords Removal | Removes common words from text data to improve the quality of topic modeling. |
| | Phrase Detection | Detects common phrases or bigrams in text data. |
| Model Training and Customization | Model Training | Trains custom word embeddings models on your text data for specific applications. |
| | Model Serialization | Allows you to save and load trained models for future use. |
| Integration with Other Libraries | Integration | Can be integrated with other NLP libraries like spaCy and NLTK for enhanced text processing. |
| | Data Formats | Supports various data formats for input and output, including compatibility with popular text formats. |

| Category | Component | Description |
|---|---|---|
| Hugging Face Transformers | Transformers Library | Provides easy-to-use access to a wide range of pre-trained transformer models for NLP tasks. |
| | Pre-trained Models | Includes models like BERT, GPT-2, RoBERTa, T5, and more, each specialized for specific NLP tasks. |
| | Fine-Tuning | Supports fine-tuning pre-trained models on custom NLP datasets for various downstream applications. |
| BERT (Bidirectional Encoder Representations from Transformers) | BERT Models | Pre-trained BERT models capture contextual information from both left and right context in text. |
| | Fine-Tuning | Fine-tuning BERT for tasks like text classification, NER, and question-answering is widely adopted. |
| | Sentence Embeddings | BERT embeddings can be used for sentence and document-level embeddings. |
| GPT (Generative Pre-trained Transformer) | GPT Models | GPT-2 and GPT-3 models are popular for generating text and performing various NLP tasks. |
| | Text Generation | GPT models are known for their text generation capabilities, making them useful for creative tasks. |
| RoBERTa (A Robustly Optimized BERT Pretraining Approach) | RoBERTa Models | RoBERTa builds upon BERT with optimization techniques, achieving better performance on many tasks. |
| | Fine-Tuning | Fine-tuning RoBERTa for text classification and other tasks is common for improved accuracy. |
| T5 (Text-to-Text Transfer Transformer) | T5 Models | T5 models are designed for text-to-text tasks, allowing you to frame various NLP tasks in a unified manner. |
| | Task Agnostic | T5 can handle a wide range of NLP tasks, from translation to summarization and question-answering. |
| XLNet | XLNet Models | XLNet improves upon BERT by training over permutations of the factorization order of the input tokens, enhancing context modeling. |
| | Pre-training | XLNet is pre-trained on vast text data and can be fine-tuned for various NLP applications. |
| DistilBERT | DistilBERT Models | DistilBERT is a distilled version of BERT, offering a smaller and faster alternative for NLP tasks. |
| | Efficiency | DistilBERT retains most of BERT's performance with reduced computational requirements. |
| Transformers for Other Languages | Multilingual Models | Many transformer models are available for languages other than English, supporting global NLP tasks. |
| | Translation | Transformers can be used for machine translation between multiple languages. |

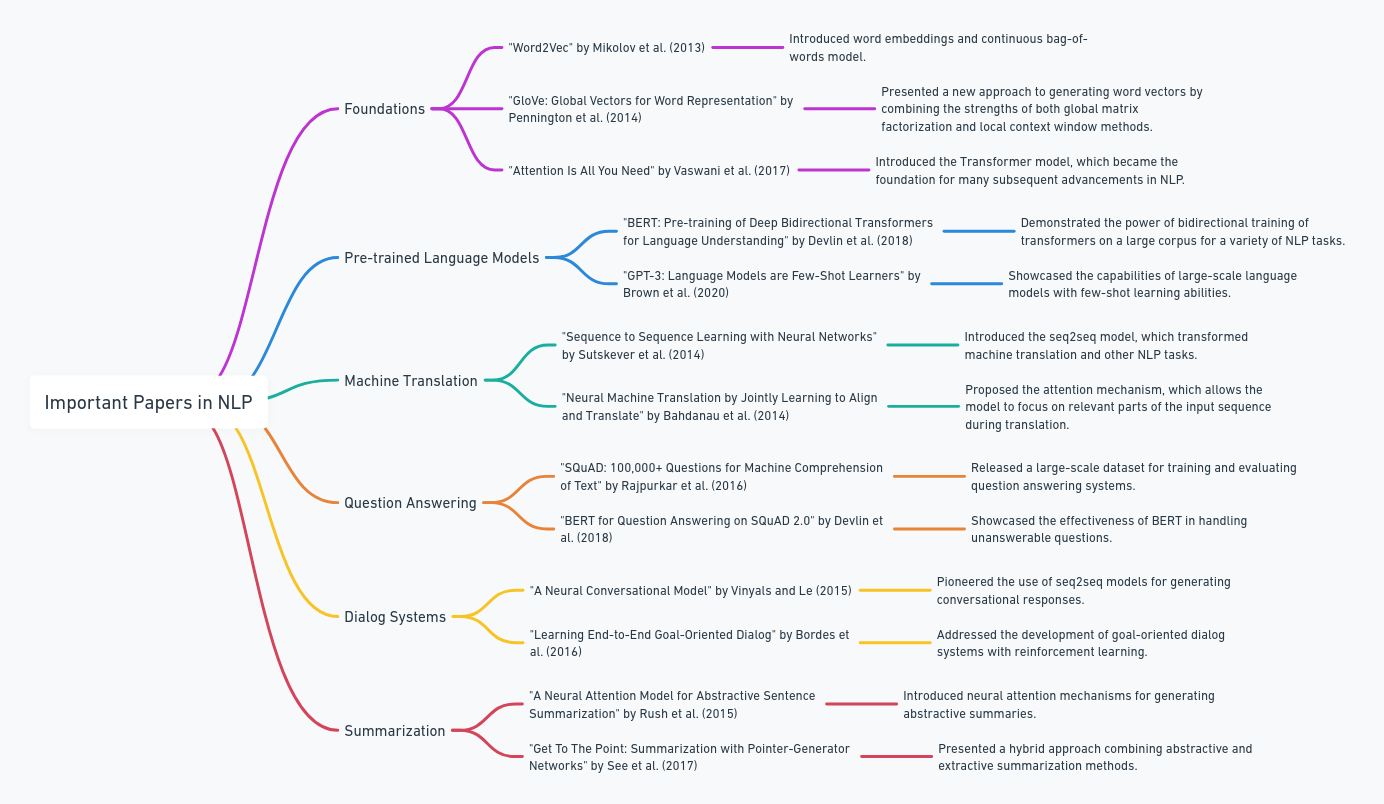

| Category | Research Paper | Link |
|---|---|---|
| Word Embeddings | Word2Vec: "Efficient Estimation of Word Representations in Vector Space" by Mikolov et al. | click |
| | GloVe: "GloVe: Global Vectors for Word Representation" by Pennington et al. | click |
| | FastText: "Enriching Word Vectors with Subword Information" by Bojanowski et al. | click |
| Sequence Models | LSTM: "Long Short-Term Memory" by Hochreiter and Schmidhuber. | click |
| | GRU: "Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation" by Cho et al. | click |
| Attention Mechanisms | "Attention Is All You Need" by Vaswani et al. (Transformer paper). | click |
| | BERT: "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" by Devlin et al. | click |
| | GPT (Generative Pre-trained Transformer): "Improving Language Understanding by Generative Pre-Training" by Radford et al. | click |
| Language Modeling | ELMo: "Deep contextualized word representations" by Peters et al. | click |
| | XLNet: "XLNet: Generalized Autoregressive Pretraining for Language Understanding" by Yang et al. | click |
| Named Entity Recognition (NER) | "A Survey of Named Entity Recognition and Classification" by Nadeau and Sekine. | click |
| Machine Translation | "Neural Machine Translation by Jointly Learning to Align and Translate" by Bahdanau et al. (Bahdanau Attention). | click |
| Text Classification | "Convolutional Neural Networks for Sentence Classification" by Kim. | click |
| Semantic Parsing | "A Gentle Introduction to Semantic Role Labeling" by Palmer et al. | click |
| Question Answering | "A Thorough Examination of the CNN/Daily Mail Reading Comprehension Task" by Chen et al. | click |
| Sentiment Analysis | "A Sentiment Treebank and Morphologically Rich Tokenization for German" by Tomanek et al. (for multilingual sentiment analysis). | click |
| Ethical and Bias Considerations | "Algorithmic Bias Detectable in Amazon Delivery Service" by Mehrabi et al. | click |
| | "Automated Bias Detection in Natural Language Processing" by Zhang et al. | click |
| | "Debiasing Language Models: A Survey" by Chuang et al. | click |
| Transfer Learning | "Universal Language Model Fine-tuning for Text Classification" by Howard and Ruder. | click |
| Reinforcement Learning for NLP | "Deep Reinforcement Learning for Dialogue Generation" by Li et al. | click |
| Conversational AI | "BERT for Conversational AI" by Henderson et al. | click |
| Multimodal NLP | "ImageBERT: Cross-modal Pre-training with Large-scale Weak-supervised Image-Text Data" by Qi et al. | click |
| | "VL-BERT: Pre-training of Generic Visual-Linguistic Representations" by Su et al. | click |
| NLP Datasets | "GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding" by Wang et al. | click |
| | "Know What You Don't Know: Unanswerable Questions for SQuAD" by Rajpurkar et al. (SQuAD 2.0). | click |
| Topic Modeling | Latent Dirichlet Allocation (LDA): "Latent Dirichlet Allocation" by Blei et al. | click |
| | Non-Negative Matrix Factorization (NMF): "Algorithms for Non-negative Matrix Factorization" by Lee and Seung. | click |
| Machine Learning for Text | "A Few Useful Things to Know About Machine Learning" by Domingos. | click |
| Text Summarization | "Abstractive Text Summarization using Sequence-to-sequence RNNs and Beyond" by Nallapati et al. | click |
| Syntax and Parsing | Dependency Parsing: "A Fast and Accurate Dependency Parser using Neural Networks" by Chen and Manning. | click |
| | Dependency Parsing: "Neural Dependency Parsing with Transition-Based and Graph-Based Systems" by Dozat et al. | click |
| Semantic Similarity | "Learning to Rank Short Text Pairs with Convolutional Deep Neural Networks" by Severyn and Moschitti. | click |
| Cross-Lingual NLP | "Cross-lingual Word Embeddings" by Mikolov et al. | click |
| | "Massively Multilingual Sentence Embeddings for Zero-Shot Cross-Lingual Transfer and Beyond" by Artetxe and Schwenk. | click |
| Dialogue Systems | "A Survey of User Simulators in Dialogue Systems" by Pietquin and Hastie. | click |
| | "End-to-End Neural Dialogue Systems" by Wen et al. | click |
| Knowledge Graphs and NLP | "A Survey of Knowledge Graph Embedding Approaches" by Cai et al. | click |
| | "KG-BERT: BERT for Knowledge Graph Completion" by Yao et al. | click |
| Neural Machine Translation | "Transformer: A Novel Neural Network Architecture for Language Understanding" by Uszkoreit (Google Research blog post). | click |
| BERT Variants | "RoBERTa: A Robustly Optimized BERT Pretraining | |

For a comprehensive collection of NLP projects and resources, check out the NLP Projects repository. It contains a wide range of projects and materials related to Natural Language Processing, from beginner to advanced levels. Explore it to sharpen your NLP skills and discover new projects in the field.

This project is licensed under the MIT License. Feel free to explore, innovate, and share the NLP magic with the world!

Join us on this epic journey to redefine the boundaries of text data analysis. Embrace the future of NLP in Python and unleash the full potential of unstructured data. Your adventure begins now!