DISCLAIMER: THIS IS NOT THE PAPER'S CODE. THIS DOES NOT HAVE SPARSITY. THIS IS TEACHER-FORCED LEARNING. It only attempts to replicate the simple example, without sparsity.

Paper: Enhancing the Locality and Breaking the Memory Bottleneck of Transformer on Time Series Forecasting (NeurIPS 2019)

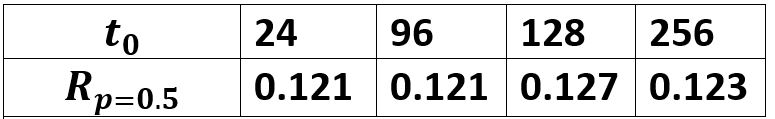

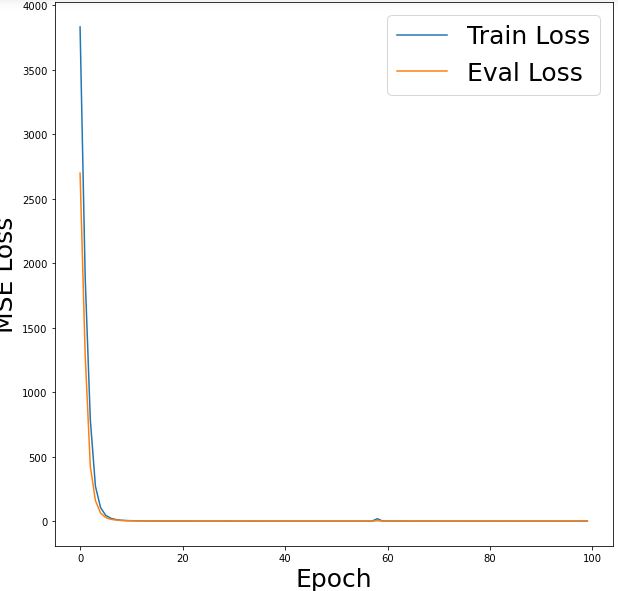

This implementation is able to match the paper's results on the synthetic dataset, as shown in the table below.

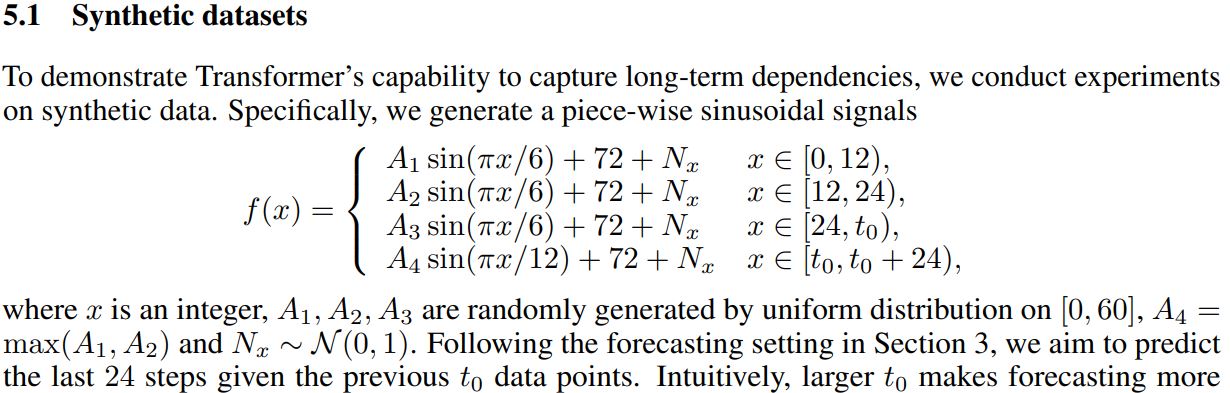

The synthetic dataset was constructed as shown below:

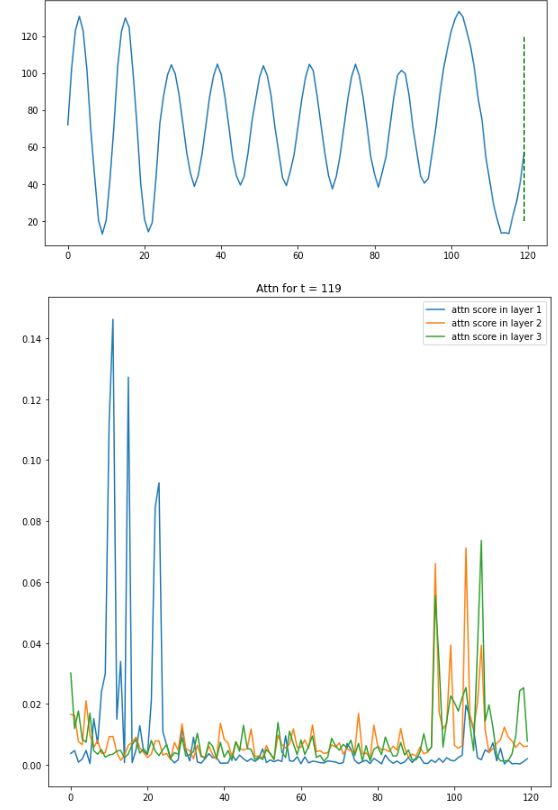

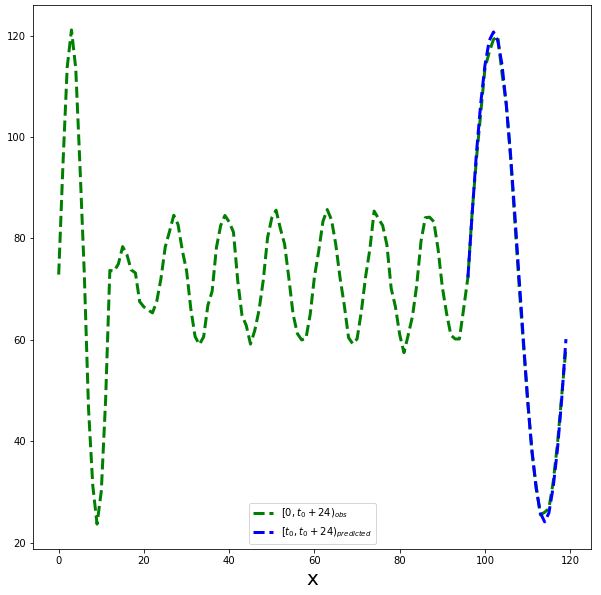
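For readers who cannot view the images, here is a minimal sketch of how such a series could be generated. It assumes the construction follows the paper's description of its synthetic data: a piecewise sinusoid with amplitudes A1, A2, A3 drawn uniformly from [0, 60], A4 = max(A1, A2), a constant offset of 72, and unit Gaussian noise. The function name `make_synthetic_series`, its parameters, and the default `t0=96` are hypothetical and not taken from this repository; the images above remain the authoritative definition.

```python
import math
import random


def make_synthetic_series(t0=96, seed=None):
    """Generate one synthetic series of length t0 + 24 (illustrative sketch).

    Assumed piecewise definition (amplitude, sine period divisor):
      x in [0, 12)       -> A1, 6
      x in [12, 24)      -> A2, 6
      x in [24, t0)      -> A3, 6
      x in [t0, t0 + 24) -> A4 = max(A1, A2), 12
    """
    rng = random.Random(seed)
    a1, a2, a3 = (rng.uniform(0, 60) for _ in range(3))
    a4 = max(a1, a2)

    series = []
    for x in range(t0 + 24):
        if x < 12:
            amp, divisor = a1, 6
        elif x < 24:
            amp, divisor = a2, 6
        elif x < t0:
            amp, divisor = a3, 6
        else:
            amp, divisor = a4, 12
        noise = rng.gauss(0, 1)  # N_x ~ N(0, 1)
        series.append(amp * math.sin(math.pi * x / divisor) + 72 + noise)
    return series
```

Under these assumptions, the amplitude of the final 24 steps depends on the first 24 steps of the series (through A1 and A2), so predicting the last window requires looking far back in the history. The sketch expects `t0` to be greater than 24 so that all four segments are present.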

A visualization of how the attention layers attend to the signal when predicting the last timestep, t = t0 + 24 - 1.