Official PyTorch implementation of the paper

SEDONA: Search for Decoupled Neural Networks toward Greedy Block-wise Learning

Myeongjang Pyeon, Jihwan Moon, Taeyoung Hahn, and Gunhee Kim

ICLR 2021

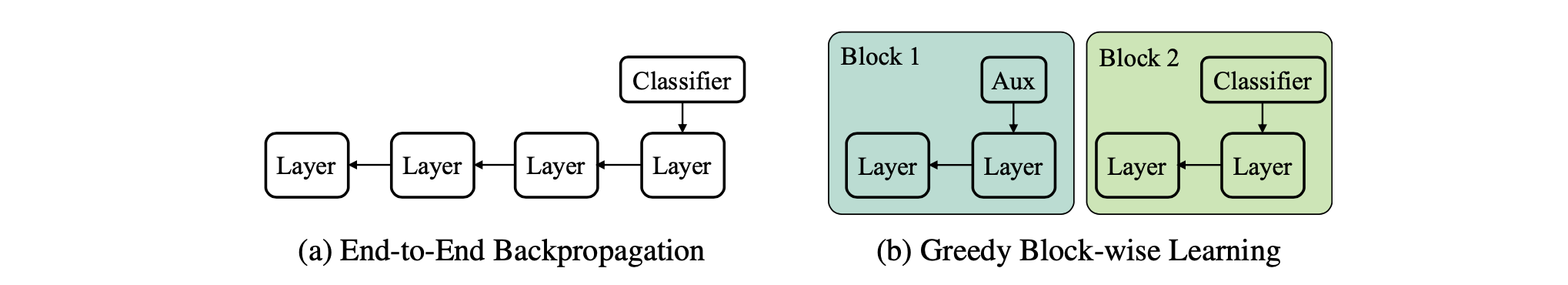

In greedy block-wise learning, layers are grouped into gradient-isolated blocks and trained with local error signals. The local error signals of each block are generated by its own auxiliary network.
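
The idea above can be illustrated with a minimal PyTorch sketch. It is not the code from this repository: the two toy blocks, the linear auxiliary heads, the layer sizes, and the name `greedy_step` are all assumptions chosen for illustration. Each block receives a detached input, so its local loss updates only its own weights and its own auxiliary head.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Two toy blocks (hypothetical sizes); a real model would group conv layers.
blocks = nn.ModuleList([
    nn.Sequential(nn.Linear(8, 16), nn.ReLU()),
    nn.Sequential(nn.Linear(16, 16), nn.ReLU()),
])
# One auxiliary head per block produces that block's local error signal.
aux_heads = nn.ModuleList([nn.Linear(16, 3), nn.Linear(16, 3)])
# One optimizer per block: it covers the block and its auxiliary head only.
optimizers = [
    torch.optim.SGD(list(b.parameters()) + list(a.parameters()), lr=0.1)
    for b, a in zip(blocks, aux_heads)
]
criterion = nn.CrossEntropyLoss()


def greedy_step(x, y):
    """Run one training step in which every block learns from its own local loss."""
    losses = []
    h = x
    for block, aux, opt in zip(blocks, aux_heads, optimizers):
        # detach() cuts the graph, so no gradient reaches earlier blocks.
        h = block(h.detach())
        loss = criterion(aux(h), y)
        opt.zero_grad()
        loss.backward()
        opt.step()
        losses.append(loss.item())
    return losses


x = torch.randn(32, 8)           # random stand-in inputs
y = torch.randint(0, 3, (32,))   # random stand-in labels
losses = greedy_step(x, y)
```

Because the blocks share no gradient path, their backward passes are independent of one another; this sketch still runs them sequentially for simplicity.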

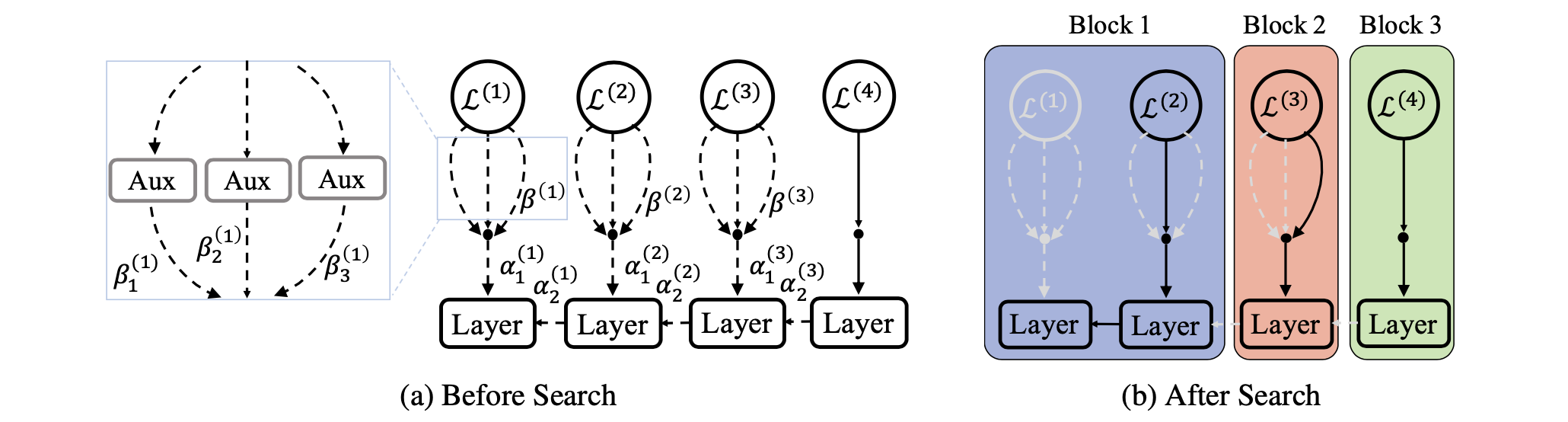

Given an architecture, SEDONA automatically finds a block configuration for greedy learning. The block configuration consists of two types of decisions: 1) how to group layers into blocks and 2) which auxiliary network to use for each block.
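
To make the two decisions concrete, here is a small pure-Python sketch of how a block configuration could be represented. The names `BlockConfig`, `make_block_configuration`, `split_after`, and the auxiliary network labels are hypothetical and are not taken from this repository's code or output format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BlockConfig:
    layer_indices: List[int]  # decision 1: which layers belong to this block
    aux_network: str          # decision 2: which auxiliary network trains it


def make_block_configuration(num_layers: int,
                             split_after: List[int],
                             aux_choices: List[str]) -> List[BlockConfig]:
    """Group layers 0..num_layers-1 into blocks, splitting after the given layers."""
    if len(aux_choices) != len(split_after) + 1:
        raise ValueError("need exactly one auxiliary network per block")
    if any(b <= a for a, b in zip(split_after, split_after[1:])):
        raise ValueError("split points must be strictly increasing")
    if any(i < 0 or i > num_layers - 2 for i in split_after):
        raise ValueError("split points must leave every block non-empty")
    starts = [0] + [i + 1 for i in split_after]
    ends = [i + 1 for i in split_after] + [num_layers]
    return [
        BlockConfig(list(range(s, e)), aux)
        for s, e, aux in zip(starts, ends, aux_choices)
    ]


# Six layers split into K = 3 blocks, with illustrative auxiliary network labels.
config = make_block_configuration(
    num_layers=6,
    split_after=[1, 3],
    aux_choices=["aux_small", "aux_small", "aux_large"],
)
for block in config:
    print(block.layer_indices, block.aux_network)
```

In this representation the number of blocks K equals `len(split_after) + 1`.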

Python >= 3.8, PyTorch >= 1.6.0, torchvision >= 0.7.0

Install the required packages with:

pip3 install -r requirements.txt

We have already uploaded the decouplings found by SEDONA for VGG-19 and ResNet-50/101/152. If you want to run the search yourself, use the following scripts.

python init_memory.py --name [ARCHITECTURE] --train-iters 40000 --valid-size 0.4

ARCHITECTURE should be one of [vgg19, resnet50, resnet101, resnet152].

python search.py --train-iters 40000 --meta-train-iters 2000 --name [ARCHITECTURE] --num-inner-steps [T] --valid-batch-size 1024 --valid-size 0.4

Here, T denotes the number of inner steps for meta-learning (default: 5).

For meta-learning, we use a modified version of higher, which is included in this repository.

See config.py for more options and their descriptions.

For evaluation, use evaluate.py. In this context, evaluation includes training the found decoupling. To select the number of blocks for greedy learning (K in the paper), set --target-num-blocks.

See config.py for more options and their descriptions.

python evaluate.py --batch-size 128 --train-iters 64000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset cifar10 \
--target-num-blocks [K] --eval-base-dir sedona_outputs/[ARCHITECTURE] --name [ARCHITECTURE]

python evaluate.py --batch-size 128 --train-iters 64000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset cifar10 \
--target-num-blocks [K] --baseline-dec-type [BASELINE] --name [ARCHITECTURE]-[BASELINE]

BASELINE should be either mlp_sr or predsim.

python evaluate.py --batch-size 128 --train-iters 64000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset cifar10 \
--target-num-blocks 1 --name [ARCHITECTURE]-backprop

python evaluate.py --batch-size 256 --train-iters 30000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset tiny-imagenet \
--target-num-blocks [K] --eval-base-dir sedona_outputs/[ARCHITECTURE] --feat-mult 4 --fp16 --num-workers 32 \
--name [ARCHITECTURE]

python evaluate.py --batch-size 256 --train-iters 30000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset tiny-imagenet \
--target-num-blocks [K] --baseline-dec-type predsim --feat-mult 4 --fp16 --num-workers 32 \
--optimizer adam --classes-per-batch 40 --classes-per-batch-until-iter 15000 --name [ARCHITECTURE]-predsim

python evaluate.py --batch-size 256 --train-iters 30000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset tiny-imagenet \
--target-num-blocks [K] --baseline-dec-type mlp_sr --feat-mult 4 --fp16 --num-workers 32 --name [ARCHITECTURE]-dgl

python evaluate.py --batch-size 256 --train-iters 30000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset tiny-imagenet \
--target-num-blocks 1 --feat-mult 4 --fp16 --num-workers 32 --name [ARCHITECTURE]-backprop

python evaluate.py --batch-size 256 --train-iters 600000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset imagenet \
--target-num-blocks [K] --eval-base-dir sedona_outputs/[ARCHITECTURE] --feat-mult 4 --fp16 --num-workers 32 \
--valid-freq 5000 --name [ARCHITECTURE]

python evaluate.py --batch-size 256 --train-iters 600000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset imagenet \
--target-num-blocks [K] --baseline-dec-type mlp_sr --feat-mult 4 --fp16 --num-workers 32 --valid-freq 5000 \
--name [ARCHITECTURE]-dgl

python evaluate.py --batch-size 256 --train-iters 600000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset imagenet \
--target-num-blocks 1 --feat-mult 4 --fp16 --num-workers 32 --valid-freq 5000 --name [ARCHITECTURE]-backprop

python evaluate.py --batch-size 256 --train-iters 600000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset imagenet \
--target-num-blocks [K] --eval-base-dir sedona_outputs/[ARCHITECTURE] --feat-mult 4 --fp16 --num-workers 32 \
--valid-freq 5000 --name [ARCHITECTURE] --test --num-ensemble 2

python evaluate.py --batch-size 256 --train-iters 600000 --weight-decay [WEIGHT_DECAY] --lr [LR] --lr-min [LR_MIN] --dataset imagenet \
--target-num-blocks [K] --feat-mult 4 --fp16 --num-workers 32 --valid-freq 5000 --name [ARCHITECTURE]-[BASELINE] \
--test --num-ensemble 2

@inproceedings{pyeon2021sedona,
  title={{\{}SEDONA{\}}: Search for Decoupled Neural Networks toward Greedy Block-wise Learning},
  author={Myeongjang Pyeon and Jihwan Moon and Taeyoung Hahn and Gunhee Kim},
  booktitle={International Conference on Learning Representations},
  year={2021},
}