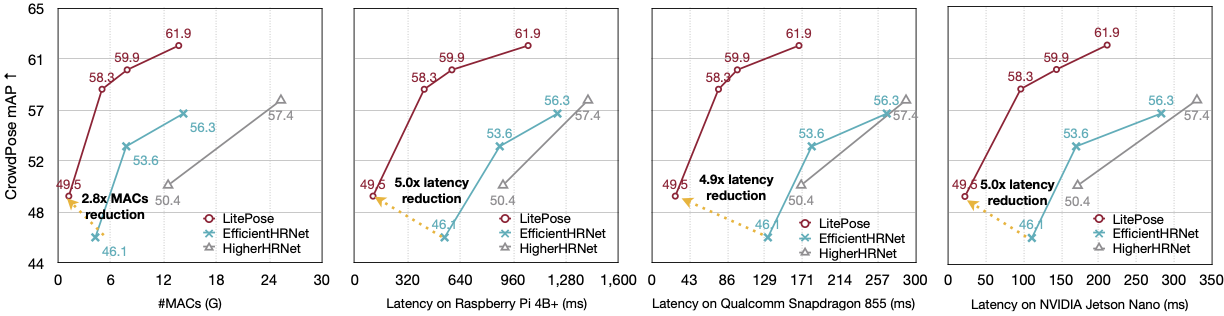

Pose estimation plays a critical role in human-centered vision applications. However, it is difficult to deploy state-of-the-art HRNet-based pose estimation models on resource-constrained edge devices due to their high computational cost (more than 150 GMACs per frame). In this paper, we study efficient architecture design for real-time multi-person pose estimation on edge. Through gradual shrinking experiments, we reveal that HRNet's high-resolution branches are redundant for models in the low-computation region; removing them improves both efficiency and performance. Inspired by this finding, we design LitePose, an efficient single-branch architecture for pose estimation, and introduce two simple approaches to enhance its capacity: Fusion Deconv Head and Large Kernel Convs. Fusion Deconv Head removes the redundancy in high-resolution branches, allowing scale-aware feature fusion with low overhead. Large Kernel Convs significantly improve the model's capacity and receptive field while maintaining a low computational cost. With only 25% computation increment,
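To see why enlarging the kernel can stay cheap, here is a back-of-the-envelope MAC count for a MobileNetV2-style inverted residual block (1×1 expand, k×k depthwise, 1×1 project). The block structure, channel counts, expansion ratio and feature-map size are illustrative assumptions, not LitePose's actual configuration, so the ratio it prints is not meant to reproduce the 25% figure above.

```python
def inverted_residual_macs(h, w, c_in, c_out, expand, k):
    """MACs of a stride-1 MobileNetV2-style block on an h x w feature map.

    Assumed structure: 1x1 expand conv -> k x k depthwise conv -> 1x1 project conv.
    Biases, BatchNorm and activations are ignored.
    """
    hidden = c_in * expand
    expand_macs = h * w * c_in * hidden        # pointwise: each output channel sees all inputs
    depthwise_macs = h * w * hidden * k * k    # depthwise: one k x k filter per channel
    project_macs = h * w * hidden * c_out      # pointwise projection back down
    return expand_macs + depthwise_macs + project_macs


if __name__ == "__main__":
    # Hypothetical block: 32x32 feature map, 64 -> 64 channels, expansion ratio 6.
    base = inverted_residual_macs(32, 32, 64, 64, expand=6, k=3)
    large = inverted_residual_macs(32, 32, 64, 64, expand=6, k=7)
    print(f"3x3 block: {base / 1e6:.1f} MMACs")
    print(f"7x7 block: {large / 1e6:.1f} MMACs (+{(large / base - 1) * 100:.1f}%)")
```

The two pointwise convolutions dominate the cost. Even though the depthwise term grows by 49/9 when moving from 3×3 to 7×7, the whole block grows far less.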

Results on CrowdPose:

| Model | mAP | #MACs | Nano latency (ms) | Mobile latency (ms) | Pi latency (ms) |
|---|---|---|---|---|---|
| HigherHRNet-W24 | 57.4 | 25.3G | 330 | 289 | 1414 |
| EfficientHRNet-H-1 | 56.3 | 14.2G | 283 | 267 | 1229 |
| LitePose-Auto-S (Ours) | 58.3 | 5.0G | 97 | 76 | 420 |
| LitePose-Auto-XS (Ours) | 49.4 | 1.2G | 22 | 27 | 109 |

Results on COCO:

| Model | mAP (val) | mAP (test-dev) | #MACs | Nano latency (ms) | Mobile latency (ms) | Pi latency (ms) |
|---|---|---|---|---|---|---|
| EfficientHRNet-H-1 | 59.2 | 59.1 | 14.4G | 283 | 267 | 1229 |
| Lightweight OpenPose | 42.8 | - | 9.0G | - | 97 | - |
| LitePose-Auto-M (Ours) | 59.8 | 59.7 | 7.8G | 144 | 97 | 588 |
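The latency columns are easier to compare as ratios. This short script recomputes per-device speedups directly from the numbers in the two result tables. It adds no new measurements.

```python
# Latencies in ms, copied from the result tables, ordered (Nano, Mobile, Pi).
# EfficientHRNet-H-1 reports the same latencies in both tables.
DEVICES = ("Nano", "Mobile", "Pi")
LATENCY_MS = {
    "HigherHRNet-W24": (330, 289, 1414),
    "EfficientHRNet-H-1": (283, 267, 1229),
    "LitePose-Auto-S": (97, 76, 420),
    "LitePose-Auto-M": (144, 97, 588),
}


def speedups(baseline, ours):
    """Per-device latency ratio baseline / ours (> 1 means `ours` is faster)."""
    return {
        device: base_ms / our_ms
        for device, base_ms, our_ms in zip(DEVICES, LATENCY_MS[baseline], LATENCY_MS[ours])
    }


if __name__ == "__main__":
    pairs = [
        ("HigherHRNet-W24", "LitePose-Auto-S"),     # CrowdPose table
        ("EfficientHRNet-H-1", "LitePose-Auto-S"),  # CrowdPose table
        ("EfficientHRNet-H-1", "LitePose-Auto-M"),  # COCO table
    ]
    for baseline, ours in pairs:
        ratios = ", ".join(f"{d} {r:.2f}x" for d, r in speedups(baseline, ours).items())
        print(f"{ours} vs {baseline}: {ratios}")
```

For LitePose-Auto-S against HigherHRNet-W24, this gives about 3.4x on Nano, 3.8x on Mobile and 3.4x on Pi.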

Note: For more details, please refer to our paper.

- Install PyTorch and other dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Install COCOAPI and CrowdPoseAPI following the official HigherHRNet repository.

- Download COCO from the COCO download page; the 2017 Train/Val splits are needed for training and evaluation.

- Download CrowdPose from the CrowdPose download page; its Train/Val splits are needed for training and evaluation.

- Refer to the official HigherHRNet repository for details on the data arrangement.
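Before training, it can help to confirm that the datasets and evaluation APIs are where the scripts expect them. The layout below is an assumption modeled on the HigherHRNet convention (`data/coco/...`, `data/crowd_pose/...`). The module names `pycocotools` and `crowdposetools` are likewise assumed to be what COCOAPI and CrowdPoseAPI install. Verify both against the HigherHRNet repository before relying on this check.

```python
import importlib.util
from pathlib import Path

# Assumed layout, modeled on the HigherHRNet convention; adjust if your checkout differs.
EXPECTED = {
    "coco": [
        "annotations/person_keypoints_train2017.json",
        "annotations/person_keypoints_val2017.json",
        "images/train2017",
        "images/val2017",
    ],
    "crowd_pose": [
        "json/crowdpose_train.json",
        "json/crowdpose_val.json",
        "images",
    ],
}


def missing_paths(data_root, dataset):
    """Return the expected paths (relative to data_root/dataset) that do not exist."""
    base = Path(data_root) / dataset
    return [rel for rel in EXPECTED[dataset] if not (base / rel).exists()]


if __name__ == "__main__":
    for name in EXPECTED:
        missing = missing_paths("data", name)
        print(f"{name}: {'OK' if not missing else 'missing ' + ', '.join(missing)}")
    # Assumed module names for COCOAPI and CrowdPoseAPI.
    for module in ("pycocotools", "crowdposetools"):
        found = importlib.util.find_spec(module) is not None
        print(f"{module}: {'found' if found else 'NOT found'}")
```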

To train a supernet from scratch with the search space specified by `arch_manager.py`, use:

```bash
python dist_train.py --cfg experiments/crowd_pose/mobilenet/supermobile.yaml
```
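The actual search space lives in `arch_manager.py`, and its contents are not reproduced here. As a rough illustration of what training a supernet over a search space involves, this sketch samples random sub-network configurations. The space itself is hypothetical: resolutions, per-stage depths, kernel sizes and width multipliers, all invented for illustration.

```python
import random

# Hypothetical search space; the real one is defined in arch_manager.py.
SEARCH_SPACE = {
    "resolution": [256, 320, 384, 448],
    "num_stages": 4,
    "depth": [2, 3, 4],          # blocks per stage
    "kernel_size": [3, 5, 7],    # depthwise kernel per stage
    "width_mult": [0.5, 0.75, 1.0],
}


def sample_subnet(space, rng):
    """Draw one sub-network configuration uniformly from the search space."""
    stages = [
        {
            "depth": rng.choice(space["depth"]),
            "kernel_size": rng.choice(space["kernel_size"]),
            "width_mult": rng.choice(space["width_mult"]),
        }
        for _ in range(space["num_stages"])
    ]
    return {"resolution": rng.choice(space["resolution"]), "stages": stages}


if __name__ == "__main__":
    rng = random.Random(0)
    # In weight-sharing NAS, a training step typically samples one (or a few)
    # such configurations and updates only the shared weights they use.
    for _ in range(3):
        print(sample_subnet(SEARCH_SPACE, rng))
```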

After training the supernet, you may want to extract a specific sub-network (e.g., search-XS) from it. Use the following script:

```bash
python weight_transfer.py --cfg experiments/crowd_pose/mobilenet/supermobile.yaml --superconfig mobile_configs/search-XS.json TEST.MODEL_FILE your_supernet_checkpoint_path
```
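A common way to share weights across kernel sizes and widths in a supernet (Once-for-All builds on this idea) is to slice smaller layers out of the largest one. A smaller kernel takes the center k×k window of the large kernel, and a narrower layer takes the leading channels. Whether `weight_transfer.py` does exactly this is an assumption. The sketch only illustrates the slicing on plain nested lists, so it runs without PyTorch.

```python
def center_crop_kernel(kernel, k):
    """Return the central k x k window of a square kernel given as a list of lists."""
    size = len(kernel)
    if k > size or (size - k) % 2:
        raise ValueError(f"cannot center-crop a {size}x{size} kernel to {k}x{k}")
    start = (size - k) // 2
    return [row[start:start + k] for row in kernel[start:start + k]]


def slice_channels(weights, n_out):
    """Keep the first n_out output channels of a per-channel weight list."""
    return weights[:n_out]


if __name__ == "__main__":
    # 7x7 "supernet" kernel filled with 0..48 so the crop is easy to read.
    big = [[r * 7 + c for c in range(7)] for r in range(7)]
    for row in center_crop_kernel(big, 3):
        print(row)  # prints [16, 17, 18], then [23, 24, 25], then [30, 31, 32]
```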

To train a regular (non-supernet) network with a specific architecture (e.g., search-XS), use the following script:

Note: Before training, change the resolution in the configuration file (e.g., experiments/crowd_pose/mobilenet/mobile.yaml) to match the architecture configuration (e.g., search-XS.json).

```bash
python dist_train.py --cfg experiments/crowd_pose/mobilenet/mobile.yaml --superconfig mobile_configs/search-XS.json
```
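The note about matching resolutions is easier to follow with the arithmetic spelled out. Assume LitePose keeps HigherHRNet's config keys (`DATASET.INPUT_SIZE`, `DATASET.OUTPUT_SIZE`) and its convention of predicting heatmaps at 1/4 and 1/2 of the input resolution. Under those assumptions, the output sizes follow directly from the input size. The helper below is a hypothetical sanity check, and the default strides should be verified against the model code.

```python
def expected_output_sizes(input_size, strides=(4, 2)):
    """Heatmap sizes for a square input, one per output stride.

    The default strides assume a HigherHRNet-style setup (1/4 and 1/2 resolution).
    """
    sizes = []
    for s in strides:
        if input_size % s:
            raise ValueError(f"input size {input_size} is not divisible by stride {s}")
        sizes.append(input_size // s)
    return sizes


if __name__ == "__main__":
    for res in (256, 384, 448, 512):
        print(f"INPUT_SIZE: {res} -> OUTPUT_SIZE: {expected_output_sizes(res)}")
```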

To evaluate the model with a specific architecture (e.g., search-XS), use the following script:

```bash
python valid.py --cfg experiments/crowd_pose/mobilenet/mobile.yaml --superconfig mobile_configs/search-XS.json TEST.MODEL_FILE your_checkpoint_path
```
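In HigherHRNet-style scripts, trailing `KEY VALUE` pairs such as `TEST.MODEL_FILE your_checkpoint_path` are handed to yacs, which overrides the matching entry of the YAML config (via `CfgNode.merge_from_list`). The pure-Python sketch below mimics that behavior on a nested dict to show how a dotted key maps onto the config tree. It is a simplified stand-in, not yacs itself; for example, it does not type-check the new value against the old one.

```python
from ast import literal_eval


def merge_from_list(cfg, opts):
    """Apply ["A.B", value, ...] overrides to a nested dict, yacs-style."""
    if len(opts) % 2:
        raise ValueError("overrides must come in KEY VALUE pairs")
    for full_key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = full_key.split(".")
        node = cfg
        for part in parents:
            node = node[part]            # KeyError for unknown sections
        if leaf not in node:
            raise KeyError(f"non-existent config key: {full_key}")
        try:
            value = literal_eval(raw)    # "False" -> False, "[1, 2]" -> [1, 2]
        except (ValueError, SyntaxError):
            value = raw                  # plain strings such as checkpoint paths
        node[leaf] = value
    return cfg


if __name__ == "__main__":
    cfg = {"TEST": {"MODEL_FILE": "", "FLIP_TEST": True}}
    merge_from_list(cfg, ["TEST.MODEL_FILE", "output/model_best.pth.tar", "TEST.FLIP_TEST", "False"])
    print(cfg)
```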

To reproduce the results in the paper, load the pre-trained checkpoints before training the supernets. These checkpoints are provided in COCO-Pretrain and CrowdPose-Pretrain.
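Loading a pretrained checkpoint into a supernet usually means copying only the tensors whose names and shapes match, and leaving the rest at their initialization. The helper below sketches that filtering step on plain `{name: shape}` dicts so it runs without PyTorch. The parameter names are hypothetical, and how this repository loads COCO-Pretrain and CrowdPose-Pretrain is not shown here. With real tensors, one would filter `state_dict()` entries the same way and call `model.load_state_dict(filtered, strict=False)`. `strict=False` tolerates missing or unexpected keys but still raises on shape mismatches, which is why the shape check matters.

```python
def filter_compatible(pretrained, target):
    """Split pretrained entries into those that fit the target model and those that don't.

    Both arguments map parameter names to shapes (tuples). Returns (loadable, skipped).
    """
    loadable, skipped = {}, []
    for name, shape in pretrained.items():
        if target.get(name) == shape:
            loadable[name] = shape
        else:
            skipped.append(name)   # missing in the target, or shape differs
    return loadable, skipped


if __name__ == "__main__":
    # Hypothetical names: a COCO head predicts 17 keypoints, a CrowdPose head 14.
    ckpt = {"stem.conv.weight": (32, 3, 3, 3), "head.final.weight": (17, 64, 1, 1)}
    model = {"stem.conv.weight": (32, 3, 3, 3), "head.final.weight": (14, 64, 1, 1)}
    loadable, skipped = filter_compatible(ckpt, model)
    print("load:", list(loadable), "skip:", skipped)
```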

We provide the checkpoints corresponding to the results in our paper.

| Dataset | Model | #MACs (G) | mAP |
|---|---|---|---|
| CrowdPose | LitePose-Auto-L | 13.7 | 61.9 |
| CrowdPose | LitePose-Auto-M | 7.8 | 59.9 |
| CrowdPose | LitePose-Auto-S | 5.0 | 58.3 |
| CrowdPose | LitePose-Auto-XS | 1.2 | 49.5 |
| COCO | LitePose-Auto-L | 13.8 | 62.5 |
| COCO | LitePose-Auto-M | 7.8 | 59.8 |
| COCO | LitePose-Auto-S | 5.0 | 56.8 |
| COCO | LitePose-Auto-XS | 1.2 | 40.6 |

Lite Pose is based on the HRNet family, mainly on HigherHRNet. Thanks to its authors for their well-organized code!

Regarding Large Kernel Convs, several recent papers, such as ConvNeXt and RepLKNet, have reached similar conclusions. We look forward to more applications of large kernels in different tasks!
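The receptive-field benefit of large kernels can be checked with the standard recurrence r_l = r_{l-1} + (k_l - 1) · j_{l-1}, where j is the cumulative stride (jump) of the layers before layer l. The layer stack in this sketch is a hypothetical example, not LitePose's architecture.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of (kernel, stride) conv layers."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # each layer widens the field by (k - 1) input-space steps
        jump *= stride              # later layers step over more input pixels
    return rf


if __name__ == "__main__":
    # Hypothetical stack: a stride-2 stem followed by six stride-1 depthwise convs.
    for k in (3, 5, 7):
        stack = [(3, 2)] + [(k, 1)] * 6
        print(f"{k}x{k}: receptive field = {receptive_field(stack)} px")
```

For this stack, the receptive field grows from 27 px with 3×3 kernels to 75 px with 7×7 kernels.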

If Lite Pose is useful or relevant to your research, please kindly recognize our contributions by citing our paper:

```bibtex
@article{wang2022lite,
  title={Lite Pose: Efficient Architecture Design for 2D Human Pose Estimation},
  author={Wang, Yihan and Li, Muyang and Cai, Han and Chen, Wei-Ming and Han, Song},
  journal={arXiv preprint arXiv:2205.01271},
  year={2022}
}
```