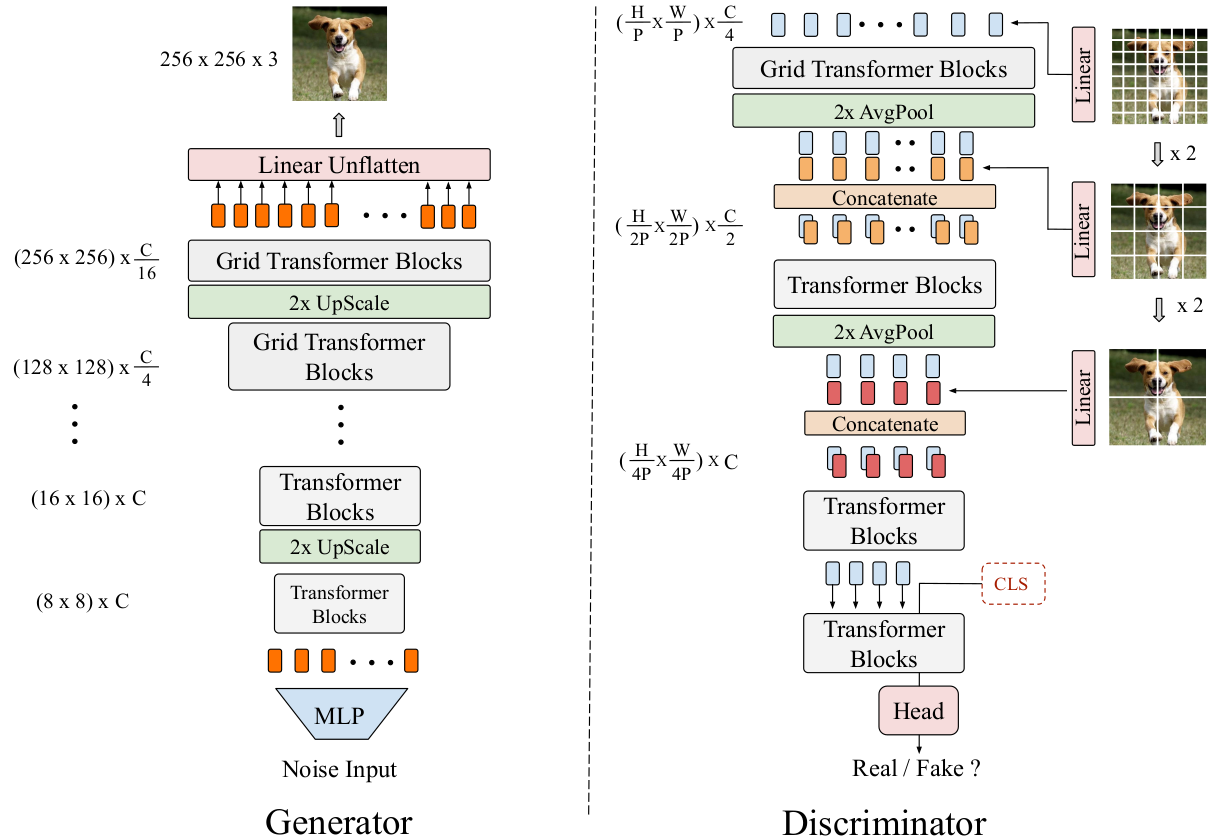

Implementation of the Transformer-based GAN model in the paper:

TransGAN: Two Pure Transformers Can Make One Strong GAN, and That Can Scale Up.

See here for the official PyTorch implementation.

Dependencies:

- Python 3.8

- TensorFlow 2.5

To train a model, use `--dataset_path=<path>` to specify the dataset path (the CIFAR-10 dataset is built by default) and `--model_name=<name>` to specify the checkpoint directory name:

`python train.py --dataset_path=<path> --model_name=<name>`

Adjust hyperparameters in the `hparams.py` file.
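The contents of `hparams.py` are not reproduced in this README. As a hedged illustration only, a hyperparameter file of this kind might collect the values listed under the implementation notes in one place. The `HParams` class and its field names are hypothetical, not the repository's.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class HParams:
    """Hypothetical hyperparameter container; field names are illustrative only."""
    noise_dim: int = 64      # noise dimension (implementation notes)
    batch_size: int = 64     # batch size (implementation notes)
    num_heads: int = 4       # attention heads in G and D (implementation notes)
    beta_1: float = 0.0      # Adam beta_1 (implementation notes)
    beta_2: float = 0.99     # Adam beta_2 (implementation notes)


default = HParams()
# Override a single value without touching the defaults.
small_batch = replace(default, batch_size=32)
```

Freezing the dataclass keeps one run's settings from being mutated mid-training; `replace` yields a modified copy instead.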

To monitor training, run `tensorboard --logdir ./`.
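TensorBoard reads the event files that a training script writes with `tf.summary`. As a minimal sketch, assuming losses are logged as scalars to a directory somewhere under `./` (the function names and the example path are hypothetical), writing them could look like this:

```python
import tensorflow as tf


def make_writer(log_dir):
    """Create an event-file writer; `tensorboard --logdir ./` finds it if log_dir is under ./."""
    return tf.summary.create_file_writer(log_dir)


def log_losses(writer, step, d_loss, g_loss):
    """Record the discriminator and generator losses for one training step."""
    with writer.as_default():
        tf.summary.scalar("d_loss", d_loss, step=step)
        tf.summary.scalar("g_loss", g_loss, step=step)
    writer.flush()  # make the values visible to TensorBoard immediately


# Example (hypothetical path):
# writer = make_writer("./logs/example_run")
# log_losses(writer, step=0, d_loss=1.5, g_loss=-0.5)
```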

- CIFAR-10 training progress

Code:

- This model depends on other files that may be licensed under different open source licenses.

- TransGAN uses Differentiable Augmentation, which is licensed under the BSD 2-Clause "Simplified" License.

- Small-TransGAN models are instances of the original TransGAN architecture with a smaller number of layers and lower-dimensional embeddings.
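Differentiable Augmentation (DiffAugment) applies the same random, differentiable transformations to both real and generated images before they reach the discriminator, so gradients still flow back to the generator. The sketch below covers only the color policy (brightness, saturation, contrast) as formulated in the DiffAugment work; translation and cutout are omitted. Which policies this repository enables is not stated here, and the function names are hypothetical.

```python
import tensorflow as tf


def rand_brightness(x):
    # Add one random offset in [-0.5, 0.5) to every pixel of each image.
    shift = tf.random.uniform([tf.shape(x)[0], 1, 1, 1]) - 0.5
    return x + shift


def rand_saturation(x):
    # Scale each pixel's deviation from its channel mean by a factor in [0, 2).
    mean = tf.reduce_mean(x, axis=3, keepdims=True)
    factor = tf.random.uniform([tf.shape(x)[0], 1, 1, 1]) * 2.0
    return (x - mean) * factor + mean


def rand_contrast(x):
    # Scale each image's deviation from its global mean by a factor in [0.5, 1.5).
    mean = tf.reduce_mean(x, axis=[1, 2, 3], keepdims=True)
    factor = tf.random.uniform([tf.shape(x)[0], 1, 1, 1]) + 0.5
    return (x - mean) * factor + mean


def diff_augment_color(x):
    """x: float NHWC batch. Every op is differentiable with respect to x."""
    for fn in (rand_brightness, rand_saturation, rand_contrast):
        x = fn(x)
    return x


# Usage: augment both batches before the discriminator, e.g.
# d_real = discriminator(diff_augment_color(real_images))
# d_fake = discriminator(diff_augment_color(fake_images))
```

Because the augmentation sits inside the discriminator's input path for both real and fake images, the discriminator never sees un-augmented samples, which is what keeps the generator from learning the augmentation itself.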

Implementation notes:

- Single layer per resolution Generator.

- Orthogonal initializer and 4 heads in both Generator and Discriminator.

- WGAN-GP loss.

- Adam with β1 = 0.0 and β2 = 0.99.

- Noise dimension = 64.

- Batch size = 64.
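The WGAN-GP loss and optimizer settings listed above can be sketched as follows. The noise dimension, batch size, and Adam betas come from these notes; the gradient-penalty weight of 10 (the value used in the WGAN-GP paper) and the learning rate of 1e-4 are assumptions, and all function names are hypothetical rather than taken from this repository.

```python
import tensorflow as tf

NOISE_DIM = 64     # from the implementation notes
BATCH_SIZE = 64    # from the implementation notes
GP_WEIGHT = 10.0   # assumption: WGAN-GP paper default, not stated in this README


def gradient_penalty(critic, real, fake):
    """Penalize the critic's input-gradient norm for deviating from 1 on random interpolates."""
    eps = tf.random.uniform([tf.shape(real)[0], 1, 1, 1], 0.0, 1.0)
    interp = eps * real + (1.0 - eps) * fake
    with tf.GradientTape() as tape:
        tape.watch(interp)
        scores = critic(interp, training=True)
    grads = tape.gradient(scores, interp)
    norms = tf.sqrt(tf.reduce_sum(tf.square(grads), axis=[1, 2, 3]) + 1e-12)
    return tf.reduce_mean(tf.square(norms - 1.0))


def critic_loss(critic, real, fake):
    """Negated Wasserstein estimate plus the gradient penalty (minimized by D)."""
    real_scores = critic(real, training=True)
    fake_scores = critic(fake, training=True)
    wasserstein = tf.reduce_mean(fake_scores) - tf.reduce_mean(real_scores)
    return wasserstein + GP_WEIGHT * gradient_penalty(critic, real, fake)


def generator_loss(critic, fake):
    """G tries to raise the critic's score on generated images."""
    return -tf.reduce_mean(critic(fake, training=True))


# Adam with beta_1 = 0.0 and beta_2 = 0.99 as in the notes; the learning rate is an assumption.
d_opt = tf.keras.optimizers.Adam(learning_rate=1e-4, beta_1=0.0, beta_2=0.99)
g_opt = tf.keras.optimizers.Adam(learning_rate=1e-4, beta_1=0.0, beta_2=0.99)

# Generator input for one step: a batch of 64-dimensional noise vectors.
noise = tf.random.normal([BATCH_SIZE, NOISE_DIM])
```

In a training step, `critic_loss` would be minimized with `d_opt` over the discriminator's variables and `generator_loss` with `g_opt` over the generator's. Setting β1 = 0 makes Adam's first-moment estimate equal the current gradient, i.e. it drops momentum, a common choice for GAN training.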

License: MIT