We assume you have the following configured or installed.

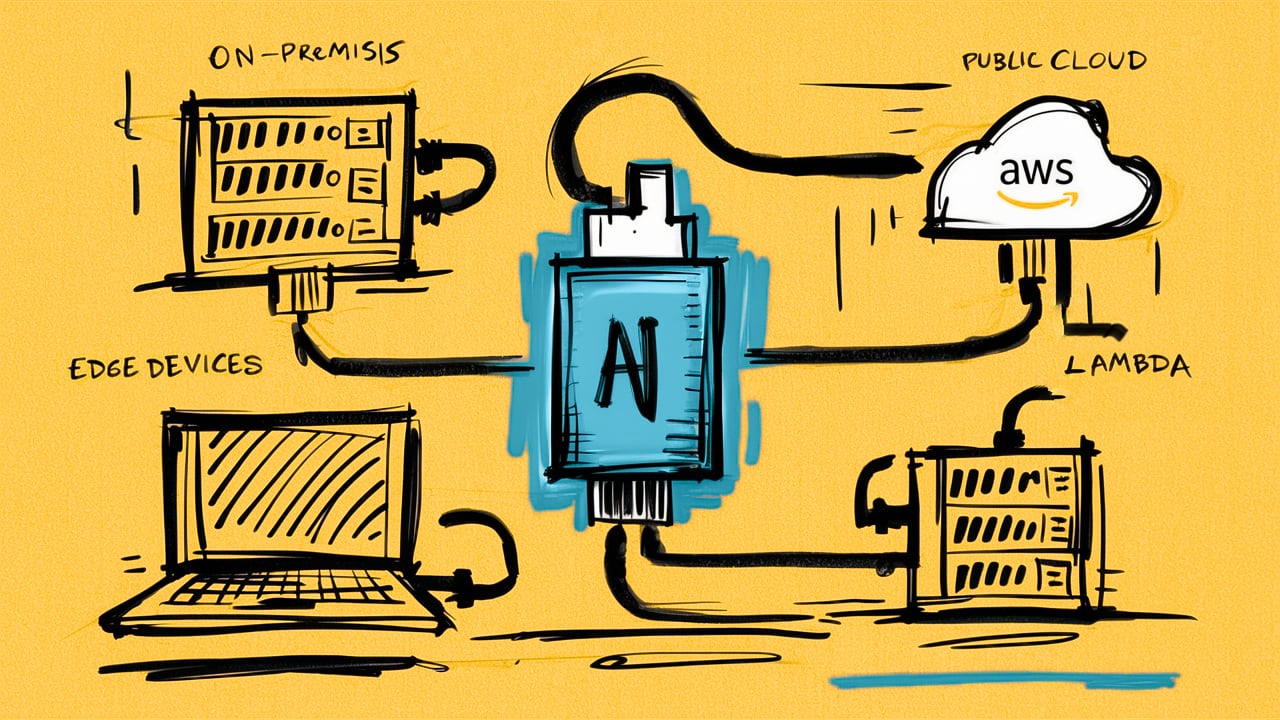

- An AWS account with credentials configured.

- The AWS SAM CLI installed for fast and easy serverless deployments.

- Docker installed for easy container builds and deployments.

After you clone the repo, set up your dependencies with the following command:

```
npm install
```

Now you can run the following commands from the root directory:

- `./bin/build`: Download and build a llamafile container for deployment.

- `./bin/server`: Run the llamafile server downloaded by `./bin/build` locally.

- `./bin/deploy`: Deploy to AWS Lambda. Runs a build first if needed.

This project uses Inquirer.js to chat with the model through an OpenAI-compatible API. The model can run locally with `bin/server` or be deployed to Lambda with `bin/deploy`. At the start of each chat session, Inquirer prompts for either your local server URL or your Lambda function URL.
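A minimal sketch of a single chat turn against either URL follows. It assumes Node.js 18 or later (for the global `fetch`) and that both the local server and the Lambda function URL expose the OpenAI-style `POST /v1/chat/completions` route. The helper names `buildChatRequest` and `chat` and the `model` value are hypothetical placeholders; this is not the project's actual Inquirer code.

```javascript
// Sketch only: one chat turn against an OpenAI-compatible endpoint.
// Assumptions (not taken from this project's source): the server exposes
// POST {baseUrl}/v1/chat/completions, and "LLaMA_CPP" is a placeholder model name.

function buildChatRequest(baseUrl, messages) {
  // Strip trailing slashes so "http://host/" and "http://host" produce the same URL.
  const url = baseUrl.replace(/\/+$/, "") + "/v1/chat/completions";
  return {
    url,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ model: "LLaMA_CPP", messages }),
    },
  };
}

async function chat(baseUrl, messages) {
  const { url, init } = buildChatRequest(baseUrl, messages);
  // Global fetch is built into Node.js 18 and later.
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Request failed: ${res.status} ${res.statusText}`);
  }
  const data = await res.json();
  // OpenAI-style responses carry the reply in choices[0].message.content.
  return data.choices[0].message.content;
}
```

To try it, call `chat(url, [{ role: "user", content: "Hello" }])` with the same URL you would give Inquirer. To continue the conversation, append each reply to the array as `{ role: "assistant", content: reply }` before sending the next user message, since the endpoint itself is stateless.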