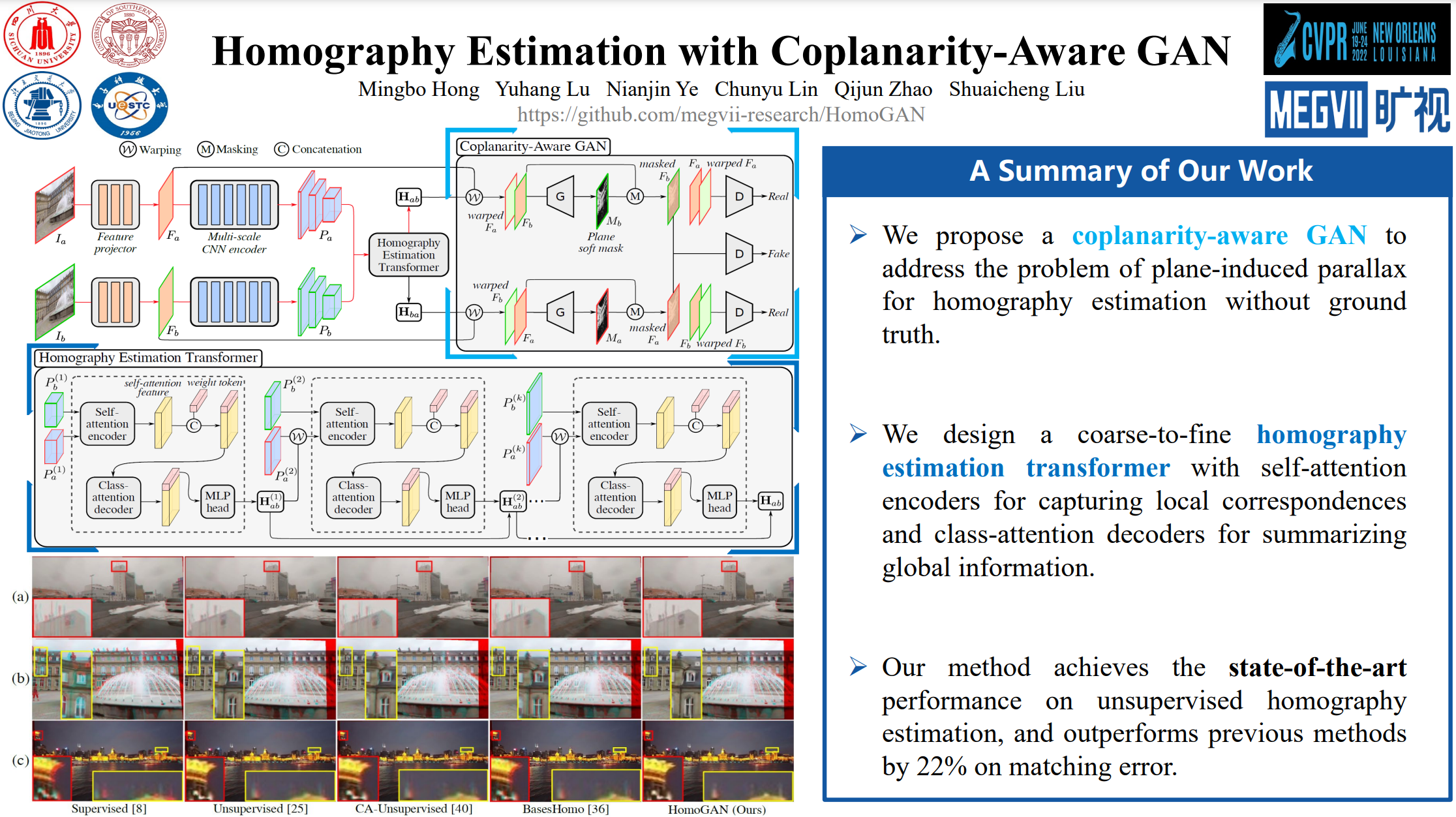

This is the official implementation of HomoGAN (CVPR 2022). [PDF]

Install the dependencies with `pip install -r requirements.txt`.

Please refer to Content-Aware Unsupervised Deep Homography Estimation.

- Download the raw dataset:
  - Google Drive: https://drive.google.com/file/d/19d2ylBUPcMQBb_MNBBGl9rCAS7SU-oGm/view?usp=sharing
  - BaiduYun: https://pan.baidu.com/s/1Dkmz4MEzMtBx-T7nG0ORqA (key: gvor)
- Unzip the data to the directory "./dataset"
- Run "video2img.py"

Be sure to resize each image to (640, 360), since the point coordinates are defined at that resolution.

e.g. `img = cv2.resize(img, (640, 360))`
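
To illustrate why the resolution matters, here is a minimal sketch of how a pixel coordinate changes between resolutions. The helper `scale_point` and the 1280x720 source size are hypothetical and only serve the illustration; they are not part of this repository.

```python
# (width, height) of the coordinate system stated in this README.
ANNOTATION_SIZE = (640, 360)


def scale_point(point, src_size, dst_size=ANNOTATION_SIZE):
    """Map an (x, y) pixel coordinate from src_size to dst_size.

    Both sizes are (width, height) tuples. Hypothetical helper for illustration.
    """
    sx = dst_size[0] / src_size[0]  # horizontal scale factor
    sy = dst_size[1] / src_size[1]  # vertical scale factor
    return (point[0] * sx, point[1] * sy)


# The centre of an assumed 1280x720 frame maps to the centre of the 640x360 frame.
print(scale_point((640, 360), (1280, 720)))  # (320.0, 180.0)
```

A point given in the (640, 360) system only lines up with the image content if the frame itself has been resized to that resolution first.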

The models provided below are retrained versions (with minor differences in quantitative results).

| Model | RE | LT | LL | SF | LF | Avg | Download |
|---|---|---|---|---|---|---|---|
| Pre-trained | 0.24 | 0.47 | 0.59 | 0.62 | 0.43 | 0.47 | Baidu Google |
| Fine-tuning | 0.22 | 0.38 | 0.57 | 0.47 | 0.30 | 0.39 | Baidu Google |
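
The Avg column appears to be the arithmetic mean of the five category columns (RE, LT, LL, SF, LF). The short check below, which only uses the numbers from the table, reproduces both reported averages under that assumption.

```python
# Per-category errors copied from the table, in the order RE, LT, LL, SF, LF.
results = {
    "Pre-trained": [0.24, 0.47, 0.59, 0.62, 0.43],
    "Fine-tuning": [0.22, 0.38, 0.57, 0.47, 0.30],
}


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


for name, errors in results.items():
    # Round to two decimals, matching the precision used in the table.
    print(f"{name}: {mean(errors):.2f}")
# Pre-trained: 0.47
# Fine-tuning: 0.39
```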

python evaluate.py --model_dir ./experiments/HomoGAN/ --restore_file xxx.pth

You need to modify ./dataset/data_loader.py slightly for your environment, and you can also refer to Content-Aware Unsupervised Deep Homography Estimation.

Pre-training:

1) Set "pretrain_phase" in ./experiments/HomoGAN/params.json to True

2) python train.py --model_dir ./experiments/HomoGAN/

Fine-tuning:

1) Set "pretrain_phase" in ./experiments/HomoGAN/params.json to False

2) python train.py --model_dir ./experiments/HomoGAN/ --restore_file xxx.pth
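
The steps above can also be scripted. The following is a minimal sketch, assuming params.json is a JSON object with a boolean "pretrain_phase" key; the helpers `set_pretrain_phase` and `train_command` are hypothetical and not part of this repository. Only the `--model_dir` and `--restore_file` flags shown above are used.

```python
import json
from pathlib import Path


def set_pretrain_phase(params_path, enabled):
    """Rewrite the params file with "pretrain_phase" set to the given boolean.

    Assumes the file holds a single JSON object; all other keys are kept.
    """
    path = Path(params_path)
    params = json.loads(path.read_text())
    params["pretrain_phase"] = bool(enabled)
    path.write_text(json.dumps(params, indent=4))
    return params


def train_command(model_dir, restore_file=None):
    """Build the train.py command line as a list of arguments.

    --restore_file is appended only when a checkpoint path is given.
    """
    cmd = ["python", "train.py", "--model_dir", model_dir]
    if restore_file is not None:
        cmd += ["--restore_file", restore_file]
    return cmd
```

For example, calling `set_pretrain_phase("./experiments/HomoGAN/params.json", True)` and passing `train_command("./experiments/HomoGAN/")` to `subprocess.run` would correspond to the first pair of steps; the second pair uses `False` and a `restore_file` argument.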

If you use this code or ideas from the paper for your research, please cite our paper:

@InProceedings{Hong_2022_CVPR,

author = {Hong, Mingbo and Lu, Yuhang and Ye, Nianjin and Lin, Chunyu and Zhao, Qijun and Liu, Shuaicheng},

title = {Unsupervised Homography Estimation With Coplanarity-Aware GAN},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {17663-17672}

}

In this project we use (parts of) the official implementations of the following works:

We thank the respective authors for open sourcing their methods.