
Chatways provides a simple way to build chat applications with LLMs, offering templates, interfaces, and support for multiple LLM backends.

- Template-driven app creation for non-coders: launch an application with a single command.

- Simplified APIs for developers, removing the need for complex development workflows.

- Integration with multiple LLM backends, including OpenAI and Hugging Face.

- Install from PyPI:

  ```shell
  pip install chatways
  ```

  or from source:

  ```shell
  git clone https://github.com/zhangsibo1129/chatways.git
  cd chatways
  pip install -e .
  ```

-

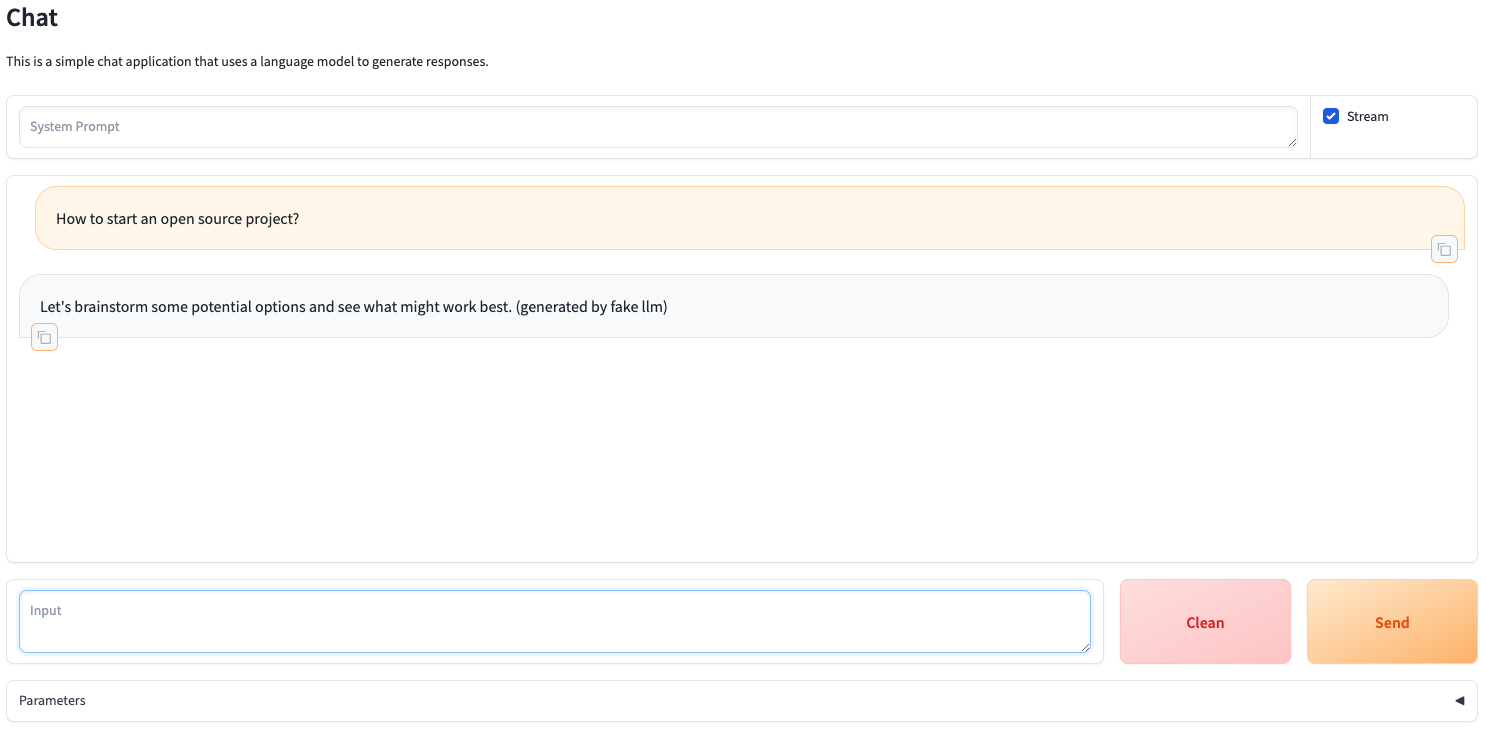

launch the chat application using

chatwayscommand:chatways simple --llm-engine fake --port 7860

simplestarts a basic chat application.--llm-engine fakeuses a simulated LLM engine, requiring no local GPU or remote API.--port 7860sets the port address. For detailed parameter information, refer to the templates.

- Open your browser and visit http://localhost:7860 to interact with the chat application.
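The `fake` engine works without a GPU or API key because a simulated engine does not need a real model at all. As a rough illustration of that idea only, the sketch below uses a hypothetical function that ignores the query and cycles through canned replies. `make_fake_llm` and `CANNED_REPLIES` are invented for this example; Chatways' actual `fake` engine may behave differently.

```python
import itertools
from typing import Callable, List

# Hypothetical canned replies; not taken from Chatways.
CANNED_REPLIES = [
    "Hello! This is a simulated response.",
    "I am a fake LLM, so no GPU or remote API is needed.",
]


def make_fake_llm(replies: List[str]) -> Callable[[str], str]:
    """Return a stand-in 'LLM' that cycles through canned replies.

    The query is ignored on purpose: a simulated engine only needs to
    produce some text so the chat UI can be exercised end to end.
    """
    cycle = itertools.cycle(replies)

    def fake_llm(query: str) -> str:
        return next(cycle)

    return fake_llm


fake_llm = make_fake_llm(CANNED_REPLIES)
print(fake_llm("Hi"))            # Hello! This is a simulated response.
print(fake_llm("Who are you?"))  # I am a fake LLM, so no GPU or remote API is needed.
print(fake_llm("Again?"))        # cycles back to the first reply
```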

Chatways offers a range of ready-to-use templates. Template applications can be launched quickly with a single `chatways` command, and LLM backends can be swapped easily, which makes them ideal for no-code builders. The command structure is as follows:

```shell
chatways [template] [options]
```

- `template`: the type of application (e.g., `simple`, `comparison`, `arxiv`).

- `options`: configuration parameters:

  - `--llm-engine` (`-le`): LLM engine to use (e.g., `openai`, `huggingface`, `fake`).

  - `--llm-model` (`-lm`): LLM model to use (e.g., `llama`).

  - `--llm-config` (`-lc`): additional configuration in JSON format (e.g., `{"device_map":"auto"}`).
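The options above map naturally onto a standard `argparse` parser, with the JSON configuration string decoded into a dictionary. The sketch below is a hypothetical illustration of that mapping, not Chatways' actual parser: `build_parser` is invented for the example, it reuses only the option names shown in this README, and the `--port` default of 7860 is an assumption taken from the quick-start command (it is also Gradio's default port).

```python
import argparse
import json


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the `chatways [template] [options]` shape."""
    parser = argparse.ArgumentParser(prog="chatways")
    parser.add_argument("template", choices=["simple", "comparison", "arxiv"])
    parser.add_argument("-le", "--llm-engine", help="e.g. openai, huggingface, fake")
    parser.add_argument("-lm", "--llm-model", help="e.g. gpt-3.5-turbo")
    # This README spells the config option both ways, so accept both here.
    parser.add_argument(
        "-lc", "--llm-config", "--llm-model-config",
        dest="llm_config",
        type=json.loads,  # decode the JSON string into a dict
        default="{}",     # string defaults are also passed through `type`
    )
    # Assumed default, matching the quick-start example.
    parser.add_argument("--port", type=int, default=7860)
    return parser


args = build_parser().parse_args([
    "arxiv", "-le", "huggingface", "-lm", "Qwen/Qwen1.5-4B-Chat",
    "--llm-model-config", '{"torch_dtype":"auto","device_map":"auto"}',
])
print(args.template, args.llm_engine, args.llm_config)
# arxiv huggingface {'torch_dtype': 'auto', 'device_map': 'auto'}
```

Using `json.loads` as the `type` means malformed JSON is rejected by `argparse` with a usage error before any model is loaded.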

Here are some examples:

- Launch a simple chat application with the OpenAI backend:

  ```shell
  export OPENAI_API_KEY="openai_api_key"
  export OPENAI_BASE_URL="openai_base_url"
  chatways simple -le openai -lm gpt-3.5-turbo
  ```

  or

  ```shell
  chatways simple \
    --llm-engine openai \
    --llm-model gpt-3.5-turbo \
    --llm-model-config '{"api_key":"openai_api_key","base_url":"openai_base_url"}'
  ```

- Launch a Chat with arXiv application with the Hugging Face backend:

  ```shell
  chatways arxiv \
    --llm-engine huggingface \
    --llm-model Qwen/Qwen1.5-4B-Chat \
    --llm-model-config '{"torch_dtype":"auto","device_map":"auto"}'
  ```
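The two OpenAI invocations above supply the same credentials in different ways: through the `OPENAI_API_KEY` and `OPENAI_BASE_URL` environment variables, or through the JSON config. The sketch below shows one plausible way a launcher could merge them, letting explicit config values win over the environment. Both the precedence rule and the `resolve_openai_settings` helper are assumptions for illustration; this README does not say how Chatways combines the two.

```python
import os
from typing import Dict, Mapping, Optional


def resolve_openai_settings(
    config: Mapping[str, str],
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Hypothetical helper: explicit config values override environment variables."""
    env = os.environ if env is None else env
    settings: Dict[str, str] = {}
    for key, env_var in (("api_key", "OPENAI_API_KEY"), ("base_url", "OPENAI_BASE_URL")):
        value = config.get(key, env.get(env_var))
        if value is not None:  # leave unset keys out so client defaults apply
            settings[key] = value
    return settings


fake_env = {"OPENAI_API_KEY": "key-from-env", "OPENAI_BASE_URL": "https://example.invalid/v1"}
print(resolve_openai_settings({}, fake_env))
# {'api_key': 'key-from-env', 'base_url': 'https://example.invalid/v1'}
print(resolve_openai_settings({"api_key": "key-from-config"}, fake_env))
# {'api_key': 'key-from-config', 'base_url': 'https://example.invalid/v1'}
```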

The `src/chatways/template` directory contains templates built with the Chatways library. Each template is an independently runnable Gradio app, so customizing one is much easier than developing an application from scratch.

The bot module provides a high-level interface that simplifies integration, letting developers bring LLMs into their own workflows with little code.

- Create a chatbot instance:

  ```python
  >>> from chatways import SimpleChatBot
  >>> bot = SimpleChatBot(
  ...     llm_engine="huggingface",
  ...     model="Qwen/Qwen1.5-4B-Chat",
  ...     model_config={
  ...         "torch_dtype": "auto",
  ...         "device_map": "auto",
  ...     },
  ... )
  ```

- Chat with the bot:

  ```python
  >>> history = []
  >>> query = "Say three positive words"
  >>> response = bot.chat(query, history=history, stream=False)
  >>> print(response)
  1. hope
  2. confidence
  3. inspiration
  >>> history.append([query, response])  # maintain conversation history
  >>> query = "Please use the second one in a sentence"
  >>> response = bot.chat(query, history=history, stream=False)
  >>> print(response)
  The confidence he had in himself gave him strength to face any challenge that came his way.
  ```
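In the chat example above, the history passed to `bot.chat` is a list of `[query, response]` pairs that the caller appends to after each turn. The sketch below wraps that bookkeeping in a small loop. Because the real `SimpleChatBot` needs a model backend, it uses a hypothetical `EchoBot` with the same `chat(query, history=..., stream=False)` call shape; `EchoBot` and `chat_session` are illustrative names, not part of Chatways.

```python
from typing import List, Sequence


class EchoBot:
    """Hypothetical stand-in with the same call shape as `bot.chat` above."""

    def chat(self, query: str, history: Sequence[List[str]], stream: bool = False) -> str:
        # A real backend would send `history` plus `query` to the LLM;
        # here we only report how many earlier turns were supplied.
        return f"[{len(history)} earlier turns] {query}"


def chat_session(bot, queries: Sequence[str]) -> List[List[str]]:
    """Send each query in order, appending [query, response] after every turn."""
    history: List[List[str]] = []
    for query in queries:
        response = bot.chat(query, history=history, stream=False)
        history.append([query, response])  # maintain conversation history
    return history


for query, response in chat_session(EchoBot(), ["Say three positive words", "Use the second one"]):
    print(response)
# [0 earlier turns] Say three positive words
# [1 earlier turns] Use the second one
```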

Currently, Chatways provides the following application templates:

| Category | Description | Command |
|---|---|---|
| Chat | Simple chat application for multi-round conversations with an LLM | `chatways simple [options]` |
| Chat Comparison | Chat with two LLMs simultaneously and compare their responses | `chatways comparison [options]` |
| Chat with arXiv | Uses an LLM to make finding academic papers on arXiv more natural and insightful | `chatways arxiv [options]` |
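The Chat Comparison template sends the same message to two LLMs and shows the answers side by side. This README does not say whether Chatways queries them concurrently; the sketch below assumes a thread pool so the slower model does not hold up the faster one. `compare`, `slow_model`, and `fast_model` are hypothetical names used only for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def compare(models: Dict[str, Callable[[str], str]], query: str) -> Dict[str, str]:
    """Send `query` to every model concurrently and collect replies by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in models.items()}
        return {name: fut.result() for name, fut in futures.items()}


def slow_model(query: str) -> str:
    time.sleep(0.3)  # simulate a slower backend
    return f"slow: {query}"


def fast_model(query: str) -> str:
    time.sleep(0.2)  # simulate a faster backend
    return f"fast: {query}"


start = time.perf_counter()
print(compare({"model_a": slow_model, "model_b": fast_model}, "Hello"))
# {'model_a': 'slow: Hello', 'model_b': 'fast: Hello'}
# Elapsed time is roughly that of the slower call (~0.3 s), not the 0.5 s sum.
print(f"took ~{time.perf_counter() - start:.1f}s")
```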

As this project is in its early stages, all kinds of contributions are welcome, whether it's reporting issues, suggesting new features, or submitting pull requests.

This project is licensed under the MIT License.