SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction and High-Quality Mesh Rendering

LIGM, Ecole des Ponts, Univ Gustave Eiffel, CNRS

| Webpage | arXiv | Presentation video | Viewer video |

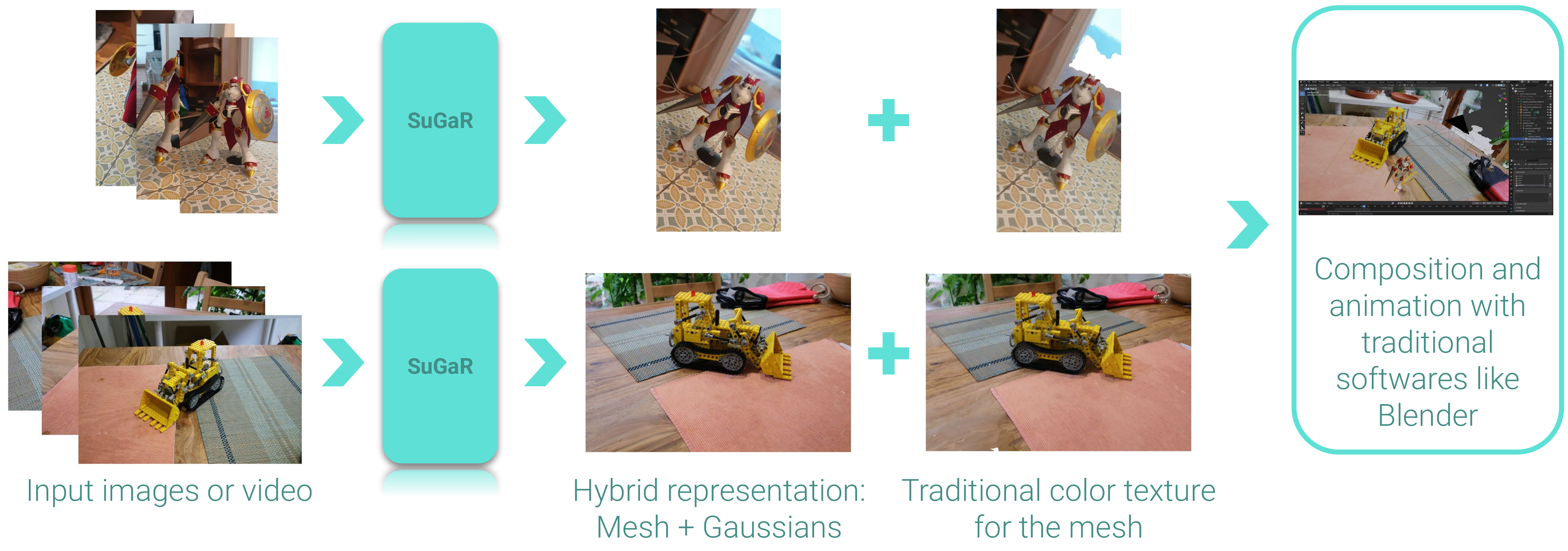

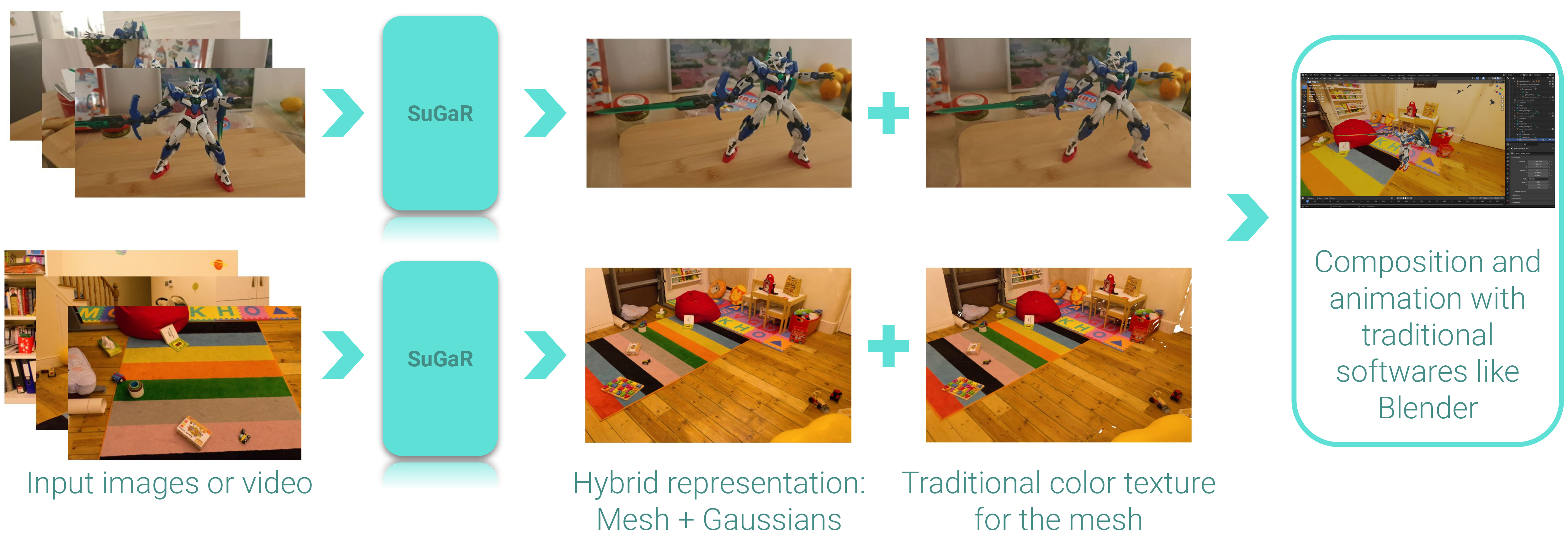

Our method extracts meshes from 3D Gaussian Splatting reconstructions and builds hybrid representations that enable easy composition and animation in Gaussian Splatting scenes by manipulating the mesh.

We propose a method to allow precise and extremely fast mesh extraction from 3D Gaussian Splatting (SIGGRAPH 2023). Gaussian Splatting has recently become very popular as it yields realistic rendering while being significantly faster to train than NeRFs. It is however challenging to extract a mesh from the millions of tiny 3D Gaussians, as these Gaussians tend to be unorganized after optimization and no method has been proposed so far. Our first key contribution is a regularization term that encourages the 3D Gaussians to align well with the surface of the scene. We then introduce a method that exploits this alignment to sample points on the real surface of the scene and extract a mesh from the Gaussians using Poisson reconstruction, which is fast, scalable, and preserves details, in contrast to the Marching Cubes algorithm usually applied to extract meshes from Neural SDFs. Finally, we introduce an optional refinement strategy that binds Gaussians to the surface of the mesh, and jointly optimizes these Gaussians and the mesh through Gaussian Splatting rendering. This enables easy editing, sculpting, rigging, animating, or relighting of the Gaussians using traditional software (Blender, Unity, Unreal Engine, etc.) by manipulating the mesh instead of the Gaussians themselves. Retrieving such an editable mesh for realistic rendering takes minutes with our method, compared to hours with the state-of-the-art method on neural SDFs, while providing better rendering quality in terms of PSNR, SSIM and LPIPS.

@article{guedon2023sugar,

title={SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction and High-Quality Mesh Rendering},

author={Gu{\'e}don, Antoine and Lepetit, Vincent},

journal={arXiv preprint arXiv:2311.12775},

year={2023}

}

Updates

- [01/09/2024] Added a dedicated, real-time viewer to let users visualize and navigate in the reconstructed scenes (hybrid representation, textured mesh and wireframe mesh).

- [12/20/2023] Added a short notebook showing how to render images with the hybrid representation using the Gaussian Splatting rasterizer.

- [12/18/2023] Code release.

To-do list

- Improvement: Add an `if` block to `sugar_extractors/coarse_mesh.py` to skip foreground mesh reconstruction and avoid triggering an error if no surface point is detected inside the foreground bounding box. This can be useful for users that want to reconstruct "background scenes".

- Using precomputed masks with SuGaR: Add a mask functionality to the SuGaR optimization, to allow the user to mask out some pixels in the training images (like white backgrounds in synthetic datasets).

- Using SuGaR with Windows: Adapt the code to make it compatible with Windows. Due to path-writing conventions, the current code is not compatible with Windows.

- Synthetic datasets: Add the possibility to use the NeRF synthetic dataset (which has a different format than COLMAP scenes).

- Composition and animation: Finish cleaning up the code for composition and animation, and add it to the `sugar_scene/sugar_compositor.py` script.

- Composition and animation: Make a tutorial on how to use the scripts in the `blender` directory and the `sugar_scene/sugar_compositor.py` class to import composition and animation data into PyTorch and apply it to the SuGaR hybrid representation.

As we explain in the paper, SuGaR optimization starts by optimizing a 3D Gaussian Splatting model for 7k iterations with no additional regularization term. In this sense, SuGaR is a method that can be applied on top of any 3D Gaussian Splatting model, and a Gaussian Splatting model optimized for 7k iterations must be provided to SuGaR.

Consequently, the current implementation contains a version of the original 3D Gaussian Splatting code, and we built our model as a wrapper of a vanilla 3D Gaussian Splatting model. Please note that, even though this wrapper implementation is convenient for many reasons, it may not be optimal for memory usage, so we might change it in the future.

After optimizing a vanilla Gaussian Splatting model, the SuGaR pipeline consists of 3 main steps, and an optional one:

- SuGaR optimization: optimizing the alignment of the Gaussians with the surface of the scene

- Mesh extraction: extracting a mesh from the optimized Gaussians

- SuGaR refinement: refining the Gaussians and the mesh together to build a hybrid representation

- Textured mesh extraction (Optional): extracting a traditional textured mesh from the refined SuGaR model

We provide a dedicated script for each of these steps, as well as a script train.py that runs the entire pipeline. We explain how to use this script in the next sections.

Please note that the final step, Textured mesh extraction, is optional but is enabled by default in the train.py script. Indeed, it is very convenient to have a traditional textured mesh for visualization, composition and animation using traditional software such as Blender. However, this step is not needed to produce, modify or animate hybrid representations.

Underlying mesh with a traditional colored UV texture

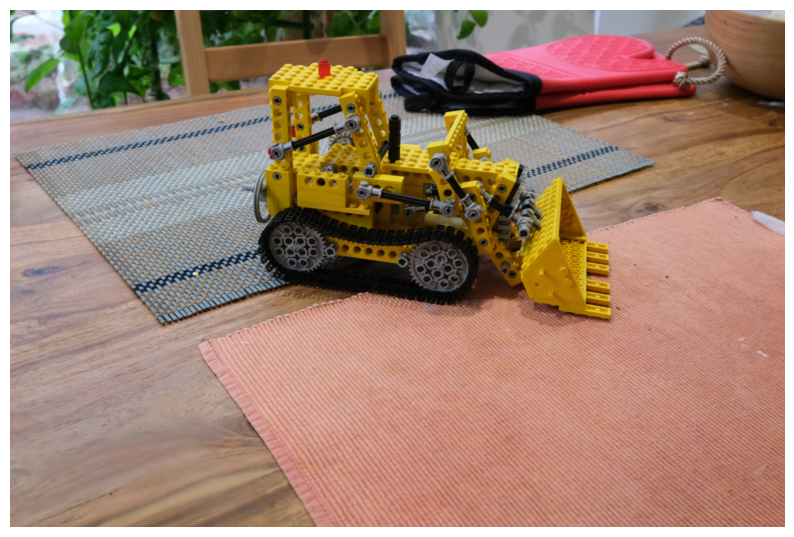

Below is another example of a scene showing a robot with a black and specular material. The following images display the hybrid representation (Mesh + Gaussians on the surface), the mesh with a traditional colored UV texture, and a depth map of the mesh:

The software requirements are the following:

- Conda (recommended for easy setup)

- C++ Compiler for PyTorch extensions

- CUDA toolkit 11.8 for PyTorch extensions

- C++ Compiler and CUDA SDK must be compatible

Please refer to the original 3D Gaussian Splatting repository for more details about requirements.

Start by cloning this repository:

# HTTPS

git clone https://github.com/Anttwo/SuGaR.git --recursive

or

# SSH

git clone git@github.com:Anttwo/SuGaR.git --recursive

To install the required Python packages and activate the environment, go inside the SuGaR/ directory and run the following commands:

conda env create -f environment.yml

conda activate sugar

If this command fails to create a working environment, you can try to install the required packages manually by running the following commands:

conda create --name sugar -y python=3.9

conda activate sugar

conda install pytorch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pytorch-cuda=11.8 -c pytorch -c nvidia

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install pytorch3d==0.7.4 -c pytorch3d

conda install -c plotly plotly

conda install -c conda-forge rich

conda install -c conda-forge plyfile==0.8.1

conda install -c conda-forge jupyterlab

conda install -c conda-forge nodejs

conda install -c conda-forge ipywidgets

pip install open3d

pip install --upgrade PyMCubes

Run the following commands inside the sugar directory to install the additional Python submodules required for Gaussian Splatting:

cd gaussian_splatting/submodules/diff-gaussian-rasterization/

pip install -e .

cd ../simple-knn/

pip install -e .

cd ../../../

Please refer to the 3D Gaussian Splatting repository for more details.
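Once the installation steps are done, a quick sanity check can confirm that the packages are visible to the active environment. The snippet below is a minimal sketch and not part of the repository: the helper `missing_modules` is hypothetical, and the import names in `REQUIRED_MODULES` (for example `mcubes` for PyMCubes, and `diff_gaussian_rasterization` and `simple_knn` for the two submodules) are assumptions that may need adjusting for your setup.

```python
import importlib.util

# Import names assumed for the packages installed above; adjust them if your
# environment exposes these packages under different module names.
REQUIRED_MODULES = [
    "torch",
    "torchvision",
    "pytorch3d",
    "plotly",
    "rich",
    "plyfile",
    "open3d",
    "mcubes",                       # assumed import name of PyMCubes
    "diff_gaussian_rasterization",  # assumed import name of the rasterizer submodule
    "simple_knn",                   # assumed import name of the simple-knn submodule
]


def missing_modules(names):
    """Return the entries of `names` that cannot be located in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

This only checks that each module can be located. It does not verify that the CUDA extensions were compiled against a compatible toolkit.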

Start by optimizing a vanilla Gaussian Splatting model for 7k iterations by running the script gaussian_splatting/train.py, as shown below. Please refer to the original 3D Gaussian Splatting repository for more details. This optimization should be very fast, and last only a few minutes.

python gaussian_splatting/train.py -s <path to COLMAP dataset> --iterations 7000 -m <path to the desired output directory>

Then, run the script train.py in the root directory to optimize a SuGaR model.

python train.py -s <path to COLMAP dataset> -c <path to the Gaussian Splatting checkpoint> -r <"density" or "sdf">

The most important arguments for the train.py script are the following:

| Parameter | Type | Description |
|---|---|---|
| `--scene_path` / `-s` | `str` | Path to the source directory containing a COLMAP dataset. |
| `--checkpoint_path` / `-c` | `str` | Path to the checkpoint directory of the vanilla 3D Gaussian Splatting model. |
| `--regularization_type` / `-r` | `str` | Type of regularization to use for optimizing SuGaR. Can be `"density"` or `"sdf"`. For reconstructing detailed objects centered in the scene with 360° coverage, `"density"` provides a better foreground mesh. For a stronger regularization and a better balance between foreground and background, choose `"sdf"`. |
| `--eval` | `bool` | If True, performs an evaluation split of the training images. Default is `True`. |
| `--low_poly` | `bool` | If True, uses the standard config for a low poly mesh, with `200_000` vertices and `6` Gaussians per triangle. |
| `--high_poly` | `bool` | If True, uses the standard config for a high poly mesh, with `1_000_000` vertices and `1` Gaussian per triangle. |
| `--refinement_time` | `str` | Default configs for time to spend on refinement. Can be `"short"` (2k iterations), `"medium"` (7k iterations) or `"long"` (15k iterations). |
| `--export_uv_textured_mesh` / `-t` | `bool` | If True, will optimize and export a traditional textured mesh as an `.obj` file from the refined SuGaR model, after refinement. Computing a traditional color UV texture should take less than 10 minutes. Default is `True`. |
| `--export_ply` | `bool` | If True, export a `.ply` file with the refined 3D Gaussians at the end of the training. This file can be large (+/- 500MB), but is needed for using the dedicated viewer. Default is `True`. |

We provide more details about the two regularization methods "density" and "sdf" in the next section. For reconstructing detailed objects centered in the scene with 360° coverage, "density" provides a better foreground mesh. For a stronger regularization and a better balance between foreground and background, choose "sdf".
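To run the same two-stage workflow over several scenes, the two commands can be assembled programmatically. The following is a minimal sketch: the function `build_pipeline_commands` and the example paths are hypothetical, and it assumes that the directory passed to `-m` in the first stage is the checkpoint directory expected by `-c` in the second. Only flags documented in this README are used.

```python
import shlex

VALID_REGULARIZATIONS = ("density", "sdf")


def build_pipeline_commands(scene_path, gs_output_dir, regularization="density"):
    """Return the two pipeline commands as argument lists, in execution order."""
    if regularization not in VALID_REGULARIZATIONS:
        raise ValueError(f"regularization must be one of {VALID_REGULARIZATIONS}")

    # Stage 1: vanilla 3D Gaussian Splatting, optimized for 7k iterations.
    vanilla_cmd = [
        "python", "gaussian_splatting/train.py",
        "-s", scene_path,
        "--iterations", "7000",
        "-m", gs_output_dir,
    ]
    # Stage 2: SuGaR pipeline, started from the vanilla checkpoint directory
    # (assumed here to be the output directory of stage 1).
    sugar_cmd = [
        "python", "train.py",
        "-s", scene_path,
        "-c", gs_output_dir,
        "-r", regularization,
    ]
    return [vanilla_cmd, sugar_cmd]


if __name__ == "__main__":
    # Hypothetical paths, printed rather than executed.
    for cmd in build_pipeline_commands("data/my_scene", "output/vanilla_gs/my_scene", "sdf"):
        print(shlex.join(cmd))
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root, so that a failure in the first stage stops the second from starting.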

The default configuration is high_poly with refinement_time set to "long". Results are saved in the output/ directory.
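The presets described above can be summarized as a small lookup. This is an illustrative sketch only, not the repository's configuration code: the function `resolve_config` and the dictionary keys are hypothetical names, while the numbers come from the argument descriptions (vertex counts, Gaussians per triangle, refinement iterations) and the stated defaults.

```python
# Values taken from the argument descriptions; names are illustrative.
REFINEMENT_ITERATIONS = {"short": 2_000, "medium": 7_000, "long": 15_000}

MESH_PRESETS = {
    "low_poly": {"n_vertices": 200_000, "gaussians_per_triangle": 6},
    "high_poly": {"n_vertices": 1_000_000, "gaussians_per_triangle": 1},
}


def resolve_config(low_poly=False, high_poly=False, refinement_time="long"):
    """Resolve preset flags into concrete values, defaulting to high_poly / long."""
    if low_poly and high_poly:
        raise ValueError("choose at most one of low_poly and high_poly")
    if refinement_time not in REFINEMENT_ITERATIONS:
        raise ValueError(f"refinement_time must be one of {sorted(REFINEMENT_ITERATIONS)}")

    # high_poly is the default when neither flag is set.
    preset = "low_poly" if low_poly else "high_poly"
    return {
        "preset": preset,
        **MESH_PRESETS[preset],
        "refinement_iterations": REFINEMENT_ITERATIONS[refinement_time],
    }


if __name__ == "__main__":
    print(resolve_config())
    print(resolve_config(low_poly=True, refinement_time="short"))
```

Rejecting the combination of both presets is a design choice of this sketch. How the actual script handles that case is not stated here.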

As we explain in the paper, this script extracts a mesh in 30~35 minutes on average on a single GPU. After mesh extraction, the refinement time only takes a few minutes when using --refinement_time "short", but can take up to an hour when using --refinement_time "long". A short refinement time is enough to produce a good-looking hybrid representation in most cases.

Please note that the optimization time may vary (from 20 to 45 minutes) depending on the complexity of the scene and the GPU used. Moreover, the current implementation splits the optimization into 3 scripts that can be run separately (SuGaR optimization, mesh extraction, model refinement), so it reloads the data at each stage, which is not optimal and takes several minutes. We will update the code in the near future to optimize this.

Below is a detailed list of all the command line arguments for the train.py script.