A repository for a document chatbot

The key advantage of this implementation is that no data ever leaves your AWS account. The model is hosted on a SageMaker endpoint in your account, and all inference requests are sent to that endpoint.

- Go to the SageMaker folder

- Install the required packages for this application with `pip install -r requirements.txt`. To avoid conflicts with existing Python dependencies, it is best to do so in a virtual environment:

  `$ python3 -m venv .venv`

  `$ source .venv/bin/activate`

  `$ pip3 install -r requirements.txt`

- You will need a SageMaker endpoint deployed in your account. If you don't have one, you can use this notebook to deploy the AI21 Jurassic-2 Jumbo Instruct model in your account. Before doing that, go to the SageMaker Foundational Model Hub (e.g. https://us-west-2.console.aws.amazon.com/sagemaker/home?region=us-west-2#/foundation-models), select the model that the notebook deploys, and click "Subscribe". You only need to do this once. Otherwise, the notebook will fail with the error "ClientError: An error occurred (ValidationException) when calling the CreateModel operation: Caller is not subscribed to the marketplace offering." (Caution: the notebook will spin up an ml.p4d.24xlarge instance in your account to host the model, which costs ~$30 per hour!) Alternatively, you can deploy a different, smaller model to a SageMaker endpoint or use an ml.g5.48xlarge.

- Amend the app.py file so that it points to your endpoint (variable

endpoint_name) and that it loads your AWS credentials correctly (i.e. setcredentials_profile_namewhen calling theSagemakerEndpointclass) - Run the app with

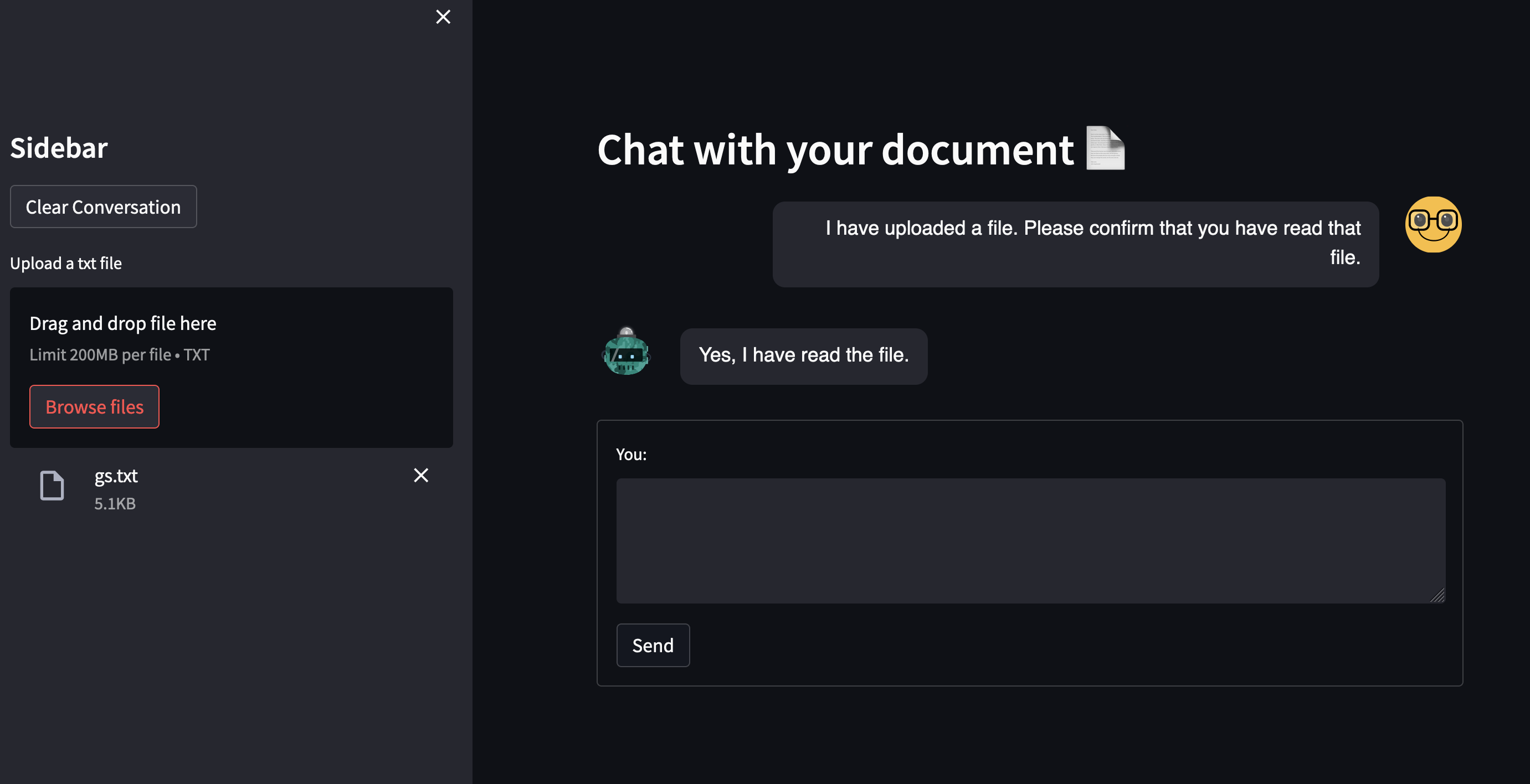

streamlit run app.py - Upload a text file

- Start chatting 🤗
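
When pointing `app.py` at your own endpoint, the request and response formats must match the model you deployed. The following is a minimal sketch of that serialization step using only the standard library. The payload shape (`prompt` plus generation parameters such as `maxTokens` in the request, `completions[0].data.text` in the response) is an assumption based on the AI21 Jurassic-2 format, and the helper names are hypothetical, so check them against the documentation of the model you actually deploy.

```python
import json


def build_request_body(prompt: str, **model_kwargs) -> bytes:
    """Serialize a prompt and optional generation parameters as a JSON payload.

    Assumption: the model accepts a JSON object with a "prompt" key.
    """
    return json.dumps({"prompt": prompt, **model_kwargs}).encode("utf-8")


def parse_response_body(body: bytes) -> str:
    """Extract the first completion's text from the endpoint's JSON response.

    Assumption: the response looks like
    {"completions": [{"data": {"text": "..."}}]}.
    """
    response = json.loads(body.decode("utf-8"))
    return response["completions"][0]["data"]["text"]
```

If the app uses LangChain's `SagemakerEndpoint` class, logic like this would typically live in the content handler passed to it. A smaller model deployed as an alternative will likely need different keys in both functions.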

If you want to run Streamlit apps directly in SageMaker Studio, you can do so with the command `streamlit run <app>.py --server.port 6006`. Once the app has started, go to `https://<YOUR_STUDIO_ID>.studio.<YOUR_REGION>.sagemaker.aws/jupyter/default/proxy/6006/` to launch the app.
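
The Studio URL follows a fixed pattern, so it can be assembled from its three parts. A small sketch, assuming the URL template shown above; the function name and the example Studio ID are hypothetical.

```python
def studio_proxy_url(studio_id: str, region: str, port: int = 6006) -> str:
    """Build the SageMaker Studio proxy URL for an app listening on `port`."""
    return (
        f"https://{studio_id}.studio.{region}.sagemaker.aws"
        f"/jupyter/default/proxy/{port}/"
    )


# Example with a made-up Studio ID; substitute your own ID and region.
print(studio_proxy_url("d-abc123example", "us-west-2"))
```

The port passed here must match the one given to `--server.port` when starting Streamlit.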