Reproduced Implementation of Our ECCV-2020 oral paper: Semantic Flow for Fast and Accurate Scene Parsing.

News! SFNet-Lite has been accepted by IJCV! A good end to the last work of my PhD study!

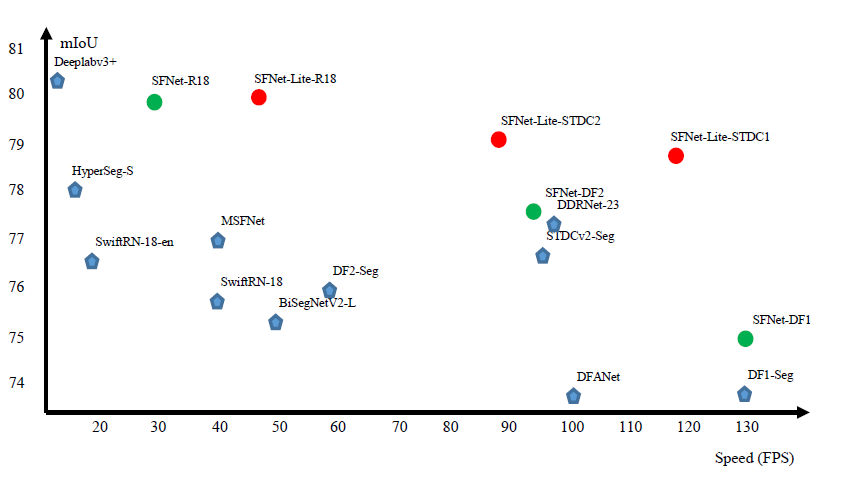

Extension: SFNet-Lite achieves 78.8 mIoU while running at 120 FPS, and 80.1 mIoU while running at 50 FPS, on a TITAN-RTX.

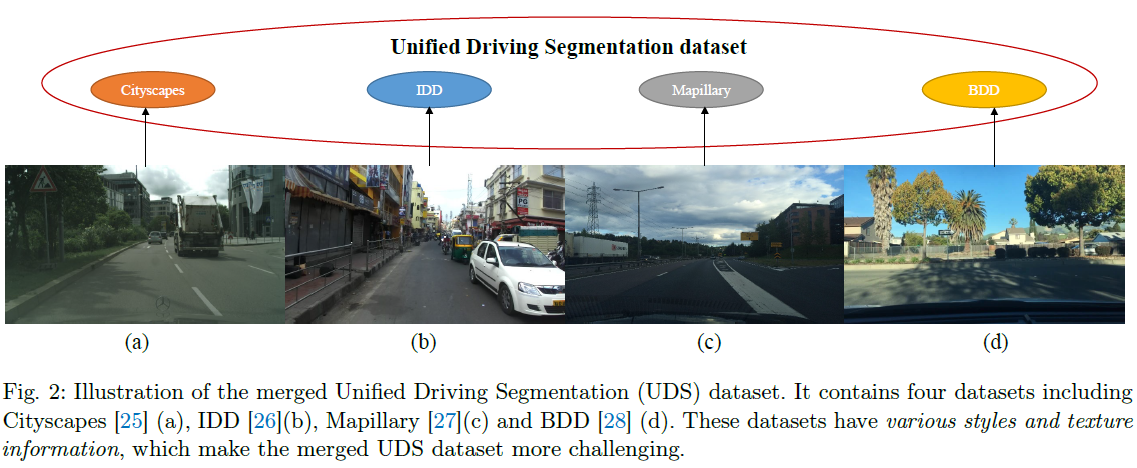

Extension: SFNet-Lite achieves new state-of-the-art results (best speed and accuracy trade-off) on the domain-agnostic driving segmentation benchmark (Unified Driving Segmentation).

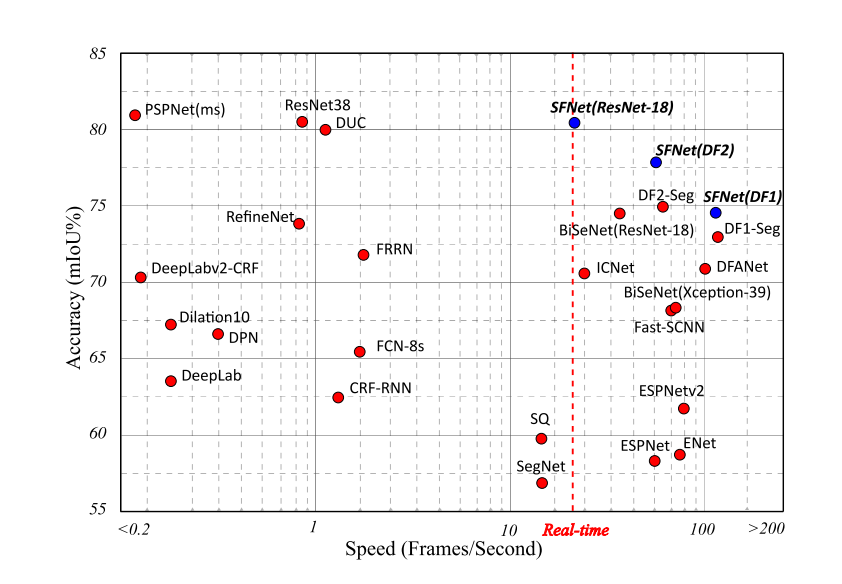

SFNet is the first real-time network to achieve 80 mIoU on the Cityscapes test set! This repo also contains our concurrent work: SRNet-IEEE-TIP:link.

Our methods achieve the best speed and accuracy trade-off on multiple scene parsing datasets.

Note that the code link in the original paper points to TorchCV, where you can train SFNet models.

However, that repo is overly complex for further research and exploration.

If you have any questions or want to discuss fast segmentation, just open an issue. I will reply as soon as I have spare time.

Please see the DATASETs.md for the details.

pytorch >= 1.4.0, apex, opencv-python, mmcv-cpu
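Before training, it can help to confirm that the packages above are importable. The following is a minimal sketch and not part of this repo. It assumes the usual import names (`torch` for pytorch, `cv2` for opencv-python, `mmcv` for mmcv-cpu), and the helper name `check_requirements` is hypothetical.

```python
import importlib.util

# Assumed mapping from package name to import name (not taken from this repo).
REQUIRED = {
    "pytorch": "torch",
    "apex": "apex",
    "opencv-python": "cv2",
    "mmcv-cpu": "mmcv",
}


def check_requirements(required=REQUIRED):
    """Return {package: True/False} depending on whether its module can be found."""
    status = {}
    for package, module in required.items():
        # find_spec returns None when a top-level module is not installed.
        status[package] = importlib.util.find_spec(module) is not None
    return status


if __name__ == "__main__":
    for package, found in check_requirements().items():
        print(f"{package}: {'ok' if found else 'MISSING'}")
```

This only checks that each module can be located; it does not verify the pytorch version constraint.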

Please download the pretrained models and put them in the pretrained_models directory at the root of this repo.
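A quick way to confirm the weights landed in the right place is to list the directory. This is only a sketch: the helper name `list_pretrained` is hypothetical, and the `.pth` / `.pth.tar` extensions are an assumption about how the downloaded files are named.

```python
from pathlib import Path


def list_pretrained(root=".", dirname="pretrained_models", suffixes=(".pth", ".pth.tar")):
    """Return the sorted checkpoint file names found in <root>/<dirname>."""
    folder = Path(root) / dirname
    if not folder.is_dir():
        raise FileNotFoundError(
            f"{folder} does not exist; create it and add the downloaded weights"
        )
    # Keep regular files whose name ends with one of the assumed suffixes.
    return sorted(
        p.name for p in folder.iterdir() if p.is_file() and p.name.endswith(suffixes)
    )
```

Calling `list_pretrained()` from the repo root should then show one entry per downloaded backbone.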

resnet101-deep-stem-pytorch:link

resnet50-deep-stem-pytorch:link

resnet18-deep-stem-pytorch:link

dfnetv1:link

dfnetv2:link

stdcv1/stdc2:link

sf-resnet18-Mapillary:link

Please download the trained models; the mIoU is reported on the Cityscapes validation set.

resnet18(no-balanced-sample): 78.4 mIoU

resnet18: 79.0 mIoU link +dsn link

resnet18 + map: 79.9 mIoU link

resnet50: 80.4 mIoU link

dfnetv1: 72.2 mIoU link

dfnetv2: 75.8 mIoU link
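For reference, mIoU is conventionally computed from a class confusion matrix as the mean over classes of TP / (TP + FP + FN). The sketch below is a plain-Python illustration of that standard definition, not the evaluation code of this repo; the function name and the toy matrix are made up for the example.

```python
def mean_iou(confusion):
    """Mean IoU from a square confusion matrix (rows: ground truth, columns: prediction)."""
    num_classes = len(confusion)
    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        # Pixels of class c that were predicted as some other class.
        fn = sum(confusion[c]) - tp
        # Pixels of other classes that were predicted as class c.
        fp = sum(confusion[r][c] for r in range(num_classes)) - tp
        union = tp + fp + fn
        if union > 0:  # skip classes absent from both ground truth and prediction
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 2-class example: IoU(class 0) = 3/5, IoU(class 1) = 4/6.
toy = [[3, 1],
       [1, 4]]
print(round(100 * mean_iou(toy), 1))  # → 63.3
```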

sfnet_lite_r18: link

sfnet_lite_r18_coarse_boost: link

sfnet_lite_stdcv2: link

sfnet_lite_stdcv1: link

sfnet_lite_r18: link

sfnet_lite_stdcv1: link

sfnet_lite_stdcv2: link

sfnet_lite_r18: link

sfnet_lite_stdcv1: link

sfnet_lite_stdcv2: link

sfnet_lite_r18: link

sfnet_r18: link

To be released.

python demo_folder.py --arch choosed_architecture --snapshot ckpt_path --demo_floder images_folder --save_dir save_dir_to_disk

All models are trained with 8 GPUs. The training settings require 8 GPUs with at least 11 GB of memory. Please download the pretrained models before training.
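To check a machine against this requirement before launching a script, one option is to parse the per-GPU memory reported by `nvidia-smi`. This is a sketch under stated assumptions: the helper names are hypothetical, and the 11000 MiB threshold is an assumed cutoff for what counts as an "11 GB" card, since `nvidia-smi` reports memory in MiB.

```python
import subprocess

MIN_GPUS = 8            # from the training note above
MIN_MEMORY_MIB = 11000  # assumed threshold for an "11 GB" card, in MiB


def enough_gpus(smi_output, min_gpus=MIN_GPUS, min_memory_mib=MIN_MEMORY_MIB):
    """Check memory.total output: one MiB value per line, one line per GPU."""
    totals = [int(line) for line in smi_output.splitlines() if line.strip()]
    large_enough = [m for m in totals if m >= min_memory_mib]
    return len(large_enough) >= min_gpus


def query_gpu_memory():
    """Ask nvidia-smi for the total memory of each GPU, in MiB."""
    return subprocess.check_output(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        text=True,
    )
```

On a machine with NVIDIA drivers installed, `enough_gpus(query_gpu_memory())` returns `True` when at least 8 GPUs meet the threshold.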

Train ResNet18 model on Cityscapes

SFNet r18

sh ./scripts/cityscapes/train_cityscapes_sfnet_res18.sh

SFNet-Lite r18

sh ./scripts/cityscapes/train_cityscapes_sfnet_res18_v2_lite_1000e.sh

Train ResNet101 models

sh ./scripts/cityscapes/train_cityscapes_sfnet_res101.sh

sh ./scripts/submit_test_cityscapes/submit_cityscapes_sfnet_res101.sh

Please use the DATASETs.md to prepare the UDS dataset.

sh ./scripts/uds/train_merged_sfnet_res18_v2.sh

If you find this repo useful for your research, please consider citing our papers:

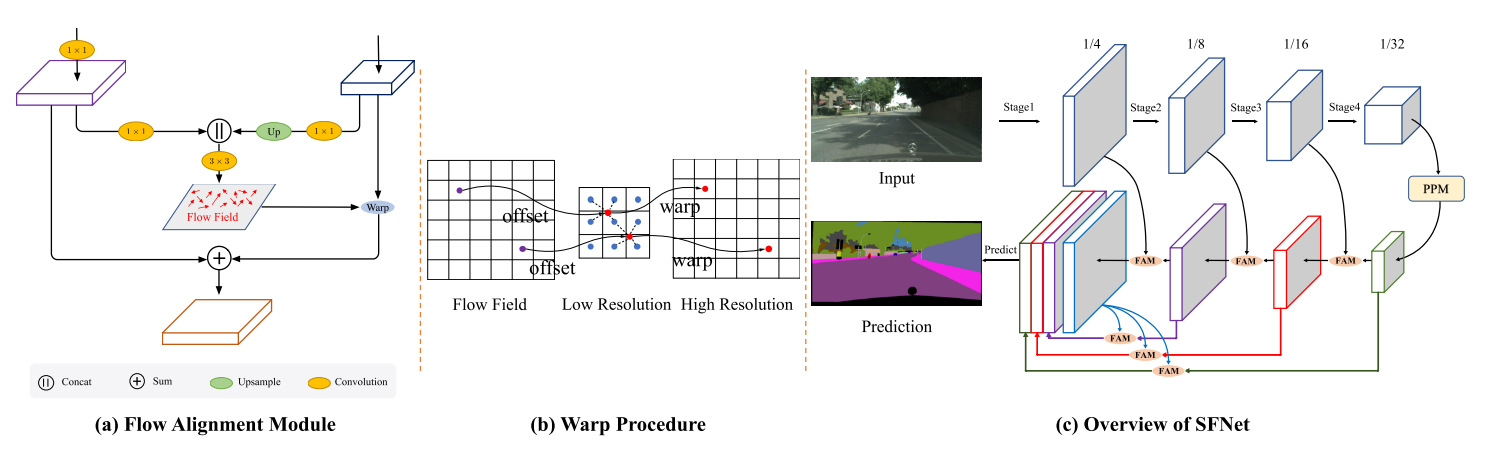

@article{Li2022SFNetFA,
  title={SFNet: Faster and Accurate Domain Agnostic Semantic Segmentation via Semantic Flow},
  author={Xiangtai Li and Jiangning Zhang and Yibo Yang and Guangliang Cheng and Kuiyuan Yang and Yu Tong and Dacheng Tao},
  journal={IJCV},
  year={2023},
}

@inproceedings{sfnet,
  title={Semantic Flow for Fast and Accurate Scene Parsing},
  author={Li, Xiangtai and You, Ansheng and Zhu, Zhen and Zhao, Houlong and Yang, Maoke and Yang, Kuiyuan and Tong, Yunhai},
  booktitle={ECCV},
  year={2020}
}

@article{Li2020SRNet,
  title={Towards Efficient Scene Understanding via Squeeze Reasoning},
  author={Xiangtai Li and Xia Li and Ansheng You and Li Zhang and Guang-Liang Cheng and Kuiyuan Yang and Y. Tong and Zhouchen Lin},
  journal={IEEE-TIP},
  year={2021},
}

This repo is based on Semantic Segmentation from NVIDIA and DecoupleSegNets.

Many thanks to SenseTime Research for reproducing all of these model checkpoints and pretrained models.

MIT