Semantic Segmentation under Adverse Conditions: A Weather and Nighttime-aware Synthetic Data-based Approach

This repository contains the original implementation of the paper Semantic Segmentation under Adverse Conditions: A Weather and Nighttime-aware Synthetic Data-based Approach, published at BMVC 2022.

Recent semantic segmentation models perform well under standard weather conditions and sufficient illumination, but struggle under adverse weather conditions and at nighttime. Collecting and annotating training data under these conditions is expensive, time-consuming, error-prone, and not always practical. Synthetic data is commonly used as a feasible source of additional training data. However, naively adding synthetic data may actually harm the model’s performance under normal weather conditions while yielding only small gains in adverse situations. Therefore, we present a novel architecture specifically designed for training with synthetic data. We propose a simple yet powerful addition to DeepLabV3+: weather and time-of-the-day supervisors trained with multi-task learning, which make the model both weather- and nighttime-aware. This improves its mIoU by 14 percentage points on the ACDC dataset while maintaining a score of 75% mIoU on the Cityscapes dataset.
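The multi-task idea above can be illustrated with a small, framework-free sketch: a per-pixel segmentation loss plus two auxiliary classification losses (weather and time of day), combined as a weighted sum. This is not the repository's code. The function names, the weights `w_weather` and `w_time`, and the choice of plain cross-entropy for every head are assumptions made for illustration only.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy of one sample, -log softmax(logits)[target], computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def segmentation_loss(pixel_logits: List[Sequence[float]], pixel_labels: List[int]) -> float:
    """Mean per-pixel cross-entropy over a flattened image."""
    losses = [cross_entropy(l, y) for l, y in zip(pixel_logits, pixel_labels)]
    return sum(losses) / len(losses)


def multitask_loss(
    pixel_logits, pixel_labels,
    weather_logits, weather_label,
    time_logits, time_label,
    w_weather: float = 1.0,  # hypothetical weight for the weather supervisor
    w_time: float = 1.0,     # hypothetical weight for the time-of-day supervisor
) -> float:
    """Weighted sum of the segmentation loss and two auxiliary supervisor losses (illustrative)."""
    seg = segmentation_loss(pixel_logits, pixel_labels)
    weather = cross_entropy(weather_logits, weather_label)
    time_of_day = cross_entropy(time_logits, time_label)
    return seg + w_weather * weather + w_time * time_of_day


if __name__ == "__main__":
    # Toy input: 2 pixels with 3 segmentation classes, 4 weather classes, 2 time-of-day classes.
    pixels = [[2.0, 0.5, 0.1], [0.2, 1.5, 0.3]]
    labels = [0, 1]
    loss = multitask_loss(pixels, labels, [0.1, 2.0, 0.3, 0.0], 1, [1.0, -1.0], 0)
    print(f"total loss: {loss:.4f}")
```

Setting both weights to zero recovers plain segmentation training, which makes the auxiliary supervisors easy to ablate in a sketch like this.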

To reproduce our experiments, please follow these steps:

git clone https://github.com/lsmcolab/Semantic-Segmentation-under-Adverse-Conditions.git

cd Semantic-Segmentation-under-Adverse-Conditions

pip install -r requirements.txt

- Update lines 263 to 265 of main.py (Semantic-Segmentation-under-Adverse-Conditions/main.py, as of commit 00b4c76).

- Update datasets/AWSS.py, datasets/cityscapes.py, and datasets/ACDC.py according to where you store these three datasets. In each file, update the line that points to the dataset's location.

- To train using both the Cityscapes and AWSS datasets, select MODE = 0.

- To test your trained model on Cityscapes, select MODE = 11.

- To test your trained model on ACDC, select MODE = 21.

See lines 604 to 605 of main.py (Semantic-Segmentation-under-Adverse-Conditions/main.py, as of commit 00b4c76).
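The three MODE values above can be summarized as a lookup table. This is a hypothetical sketch: only the numbers 0, 11, and 21 and their meanings come from this README, while the `MODES` table and the `describe_mode` helper are illustrative and are not part of main.py.

```python
from typing import Dict, Tuple

# MODE values documented in this README; the table and helper are hypothetical.
MODES: Dict[int, Tuple[str, Tuple[str, ...]]] = {
    0: ("train", ("Cityscapes", "AWSS")),
    11: ("test", ("Cityscapes",)),
    21: ("test", ("ACDC",)),
}


def describe_mode(mode: int) -> str:
    """Return a human-readable summary of what a MODE value does."""
    if mode not in MODES:
        raise ValueError(f"Unsupported MODE {mode}; expected one of {sorted(MODES)}")
    action, datasets = MODES[mode]
    return f"{action} on {' + '.join(datasets)}"


if __name__ == "__main__":
    for m in sorted(MODES):
        print(m, "->", describe_mode(m))
```

Rejecting unknown values early, as `describe_mode` does, avoids silently running the wrong experiment after a typo in MODE.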

- Our AWSS dataset can be downloaded using this link: AWSS.

- The Cityscapes and ACDC datasets can be downloaded from these links: Cityscapes and ACDC.

- The Silver simulator can be downloaded using this link: Silver.


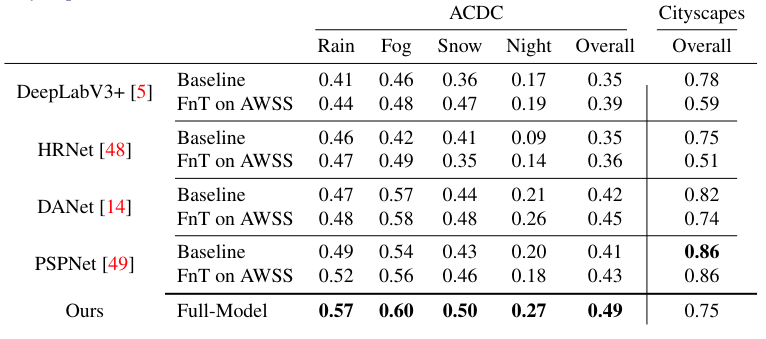

Quantitative Results: mIoU results for our approach vs. standard domain adaptation methods. Training our weather- and nighttime-aware architecture on both Cityscapes and AWSS improves performance on the ACDC dataset and achieves adequate performance on Cityscapes. Best results are in bold. Fnt stands for Fine-Tuned.
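Because the results are reported in mIoU, a minimal sketch of the standard computation may help when reading the table: per-class IoU is TP / (TP + FP + FN) taken from a confusion matrix, averaged over classes, and usually reported multiplied by 100. This follows the common definition and is not necessarily the exact evaluation code in this repository. The ignore label 255 and skipping classes that never occur in either prediction or ground truth are assumptions here, and predictions are assumed to be valid class indices.

```python
from typing import List, Sequence


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int,
                     ignore_index: int = 255) -> List[List[int]]:
    """Rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_index:  # pixels labelled 'ignore' are not scored (assumed convention)
            continue
        cm[g][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Mean of per-class IoU = TP / (TP + FP + FN), skipping classes that never occur."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class-c pixels predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other pixels predicted as class c
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    cm = confusion_matrix([0, 0, 1, 1, 2, 255], [0, 1, 1, 1, 2, 0], num_classes=3)
    print(f"mIoU: {100 * mean_iou(cm):.2f}")
```

In this toy example the per-class IoUs are 1/2, 2/3, and 1, so the mIoU is 13/18, or about 72.22 when expressed as a percentage; a gain of 14 percentage points means this percentage rises by 14.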


Qualitative Results: Visual comparison between baselines and our approach. Segmentation results are shown on the ACDC and Cityscapes datasets, respectively.

- Abdulrahman Kerim - PhD Candidate - Lancaster University

- Felipe Chamone - PhD Candidate - UFMG

- Washington Ramos - PhD Candidate - UFMG

- Leandro S. Marcolino - Lecturer (Assistant Professor) at Lancaster University

- Erickson R. Nascimento - Senior Lecturer (Associate Professor) at UFMG

- Richard Jiang - Senior Lecturer (Associate Professor) at Lancaster University

If you find this code useful for your research, please cite the paper:

@inproceedings{kerim2022Semantic,
  title={Semantic Segmentation under Adverse Conditions: A Weather and Nighttime-aware Synthetic Data-based Approach},
  author={Kerim, Abdulrahman and Chamone, Felipe and Ramos, Washington LS and Marcolino, Leandro Soriano and Nascimento, Erickson R and Jiang, Richard},
  booktitle={33rd British Machine Vision Conference 2022, BMVC 2022},
  year={2022}
}

This work was funded by the Faculty of Science and Technology of Lancaster University. We thank the High End Computing facility of Lancaster University for the computing resources. The authors would also like to thank CAPES and CNPq for funding different parts of this work.

This project is distributed under the MIT License.