This repository is modified by DIG.

Code for GNN-LRP, following the paper Higher-Order Explanations of Graph Neural Networks via Relevant Walks.

- Ubuntu

- Anaconda

- Cuda 10.2 & Cudnn (>=7.0)

- Clone this repo

- Install the conda environment xgraph

- Download datasets, then download the pretrained models.

$ git clone git@github.com:divelab/DIG.git  # or check out only the xgraph directory with svn

$ cd DIG/dig/xgraph/GNN-LRP

$ source ./install.bash

Download the datasets to xgraph/datasets/, then download the pre-trained models to xgraph/GNN-LRP/.

$ cd GNN-LRP

$ unzip ../datasets/datasets.zip -d ../datasets/

$ unzip checkpoints.zip

To run GNN-LRP or GNN-GI on a given model and dataset, using the first 100 samples:

python -m benchmark.kernel.pipeline --task explain --model_name [GCN_2l/GCN_3l/GIN_2l/GIN_3l] --dataset_name [ba_shape/ba_lrp/tox21/clintox] --target_idx [0/2] --explainer [GNN_LRP/GNN_GI] --sparsity [0.5/...]

To run GNN-LRP or GNN-GI on a specific sample, add the --debug flag, then modify the index at line xx in benchmark/kernel/pipeline.py to choose the sample from the dataset. Add the --vis flag to visualize the important edges, and additionally add --walk to visualize the flow view.

Note that the 2-layer models GCN_2l and GIN_2l only work on the ba_shape dataset, while the 3-layer models work on the remaining three datasets. In particular, target_idx is 2 for tox21 and 0 for the others. You can choose any sparsity between 0 and 1. Higher sparsity means that fewer important edges are selected.
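The constraints above are easy to get wrong when scripting many runs. The following is a minimal sketch of a helper that checks them and assembles the command line. The function `build_explain_command` and its keyword arguments are hypothetical and not part of this repository. It assumes that the 2-layer models are GCN_2l and GIN_2l, that the 3-layer models are GCN_3l and GIN_3l, and it uses only the flags named in this README.

```python
import shlex

# Names copied from the usage text; the grouping into sets is an assumption.
TWO_LAYER_MODELS = {"GCN_2l", "GIN_2l"}
THREE_LAYER_MODELS = {"GCN_3l", "GIN_3l"}
THREE_LAYER_DATASETS = {"ba_lrp", "tox21", "clintox"}
EXPLAINERS = {"GNN_LRP", "GNN_GI"}


def build_explain_command(model_name, dataset_name, explainer="GNN_LRP",
                          sparsity=0.5, debug=False, vis=False, walk=False):
    """Hypothetical helper: validate the options and return an argument list."""
    if explainer not in EXPLAINERS:
        raise ValueError(f"unknown explainer: {explainer}")
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie between 0 and 1")
    if model_name in TWO_LAYER_MODELS:
        if dataset_name != "ba_shape":
            raise ValueError("2-layer models only work on ba_shape")
    elif model_name in THREE_LAYER_MODELS:
        if dataset_name not in THREE_LAYER_DATASETS:
            raise ValueError("3-layer models work on ba_lrp, tox21 and clintox")
    else:
        raise ValueError(f"unknown model: {model_name}")

    # tox21 uses target index 2; every other dataset uses 0.
    target_idx = 2 if dataset_name == "tox21" else 0

    cmd = [
        "python", "-m", "benchmark.kernel.pipeline",
        "--task", "explain",
        "--model_name", model_name,
        "--dataset_name", dataset_name,
        "--target_idx", str(target_idx),
        "--explainer", explainer,
        "--sparsity", str(sparsity),
    ]
    # Optional flags are appended only when requested.
    for enabled, flag in ((debug, "--debug"), (vis, "--vis"), (walk, "--walk")):
        if enabled:
            cmd.append(flag)
    return cmd


if __name__ == "__main__":
    # Print a command that can be pasted into a shell.
    print(shlex.join(build_explain_command("GIN_3l", "tox21", sparsity=0.7)))
```

The returned list can be passed directly to `subprocess.run` from the GNN-LRP directory, which avoids shell quoting issues.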

If you want to save the visualization result in debug mode, please use the --save_fig flag. The output figure will then be saved in the ./visual_results/ folder.

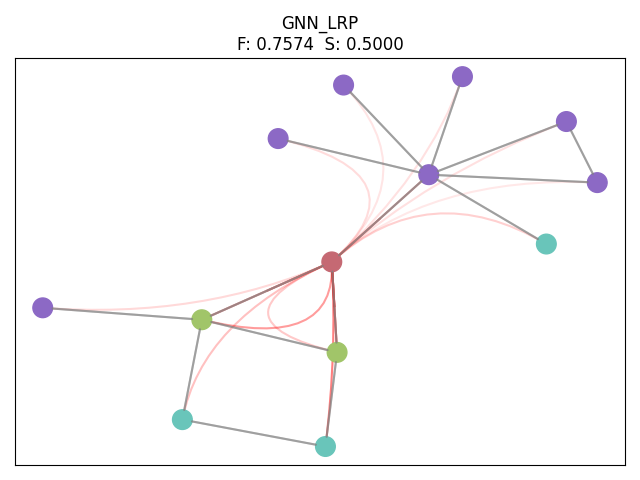

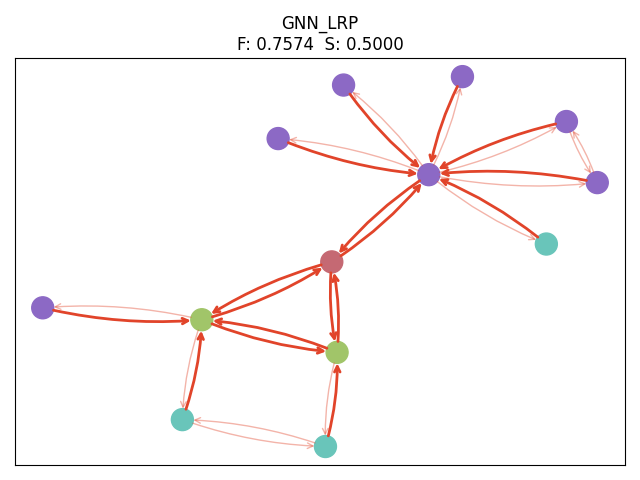

We provide a visualization example:

python -m benchmark.kernel.pipeline --task explain --model_name GCN_2l --dataset_name ba_shape --target_idx 0 --explainer GNN_LRP --sparsity 0.5 --debug --vis [--walk] --nolabelwhere the --nolabel means to remove debug labels on the graph.

The edge view and the path view are:

where F means Fidelity while S means Sparsity.
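For readers unfamiliar with the two abbreviations, the sketch below illustrates one common way to define them for a single graph. It is an assumption based on the usual definitions in the explainability literature, not a copy of this repository's code, which may compute them differently. All function names are hypothetical. Sparsity is taken as the fraction of edges left out of the explanation, and Fidelity as the drop in predicted probability when the selected edges are removed.

```python
def select_important_edges(edge_scores, sparsity):
    """Return the indices of the top-scoring edges kept at a given sparsity.

    Assumption: a sparsity of s keeps roughly (1 - s) of all edges.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie between 0 and 1")
    n = len(edge_scores)
    keep = round((1.0 - sparsity) * n)
    # Rank edge indices by score, highest first.
    ranked = sorted(range(n), key=lambda i: edge_scores[i], reverse=True)
    return sorted(ranked[:keep])


def sparsity_of(num_selected, num_total):
    """Fraction of edges that are NOT part of the explanation."""
    return 1.0 - num_selected / num_total


def fidelity(prob_original, prob_without_important):
    """Drop in the predicted class probability after removing important edges."""
    return prob_original - prob_without_important


if __name__ == "__main__":
    scores = [0.1, 0.9, 0.3, 0.7]  # made-up edge scores for illustration
    kept = select_important_edges(scores, sparsity=0.5)
    print(kept, sparsity_of(len(kept), len(scores)))
```

Under these assumed definitions, a useful explanation combines high Fidelity with high Sparsity: few edges are selected, yet removing them changes the prediction substantially.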

We closely follow GNN-LRP's forward reproduction, which is highly model dependent; please make sure you understand the code before adapting it to your own model design. You can also add your own datasets in benchmark/data/dataset and then retrain the provided models.

If using our implementation, please cite our work.

@article{yuan2020explainability,

title={Explainability in Graph Neural Networks: A Taxonomic Survey},

author={Yuan, Hao and Yu, Haiyang and Gui, Shurui and Ji, Shuiwang},

journal={arXiv preprint arXiv:2012.15445},

year={2020}

}