A collection of papers and resources about knowledge graph enhanced large language models (KGLLM)

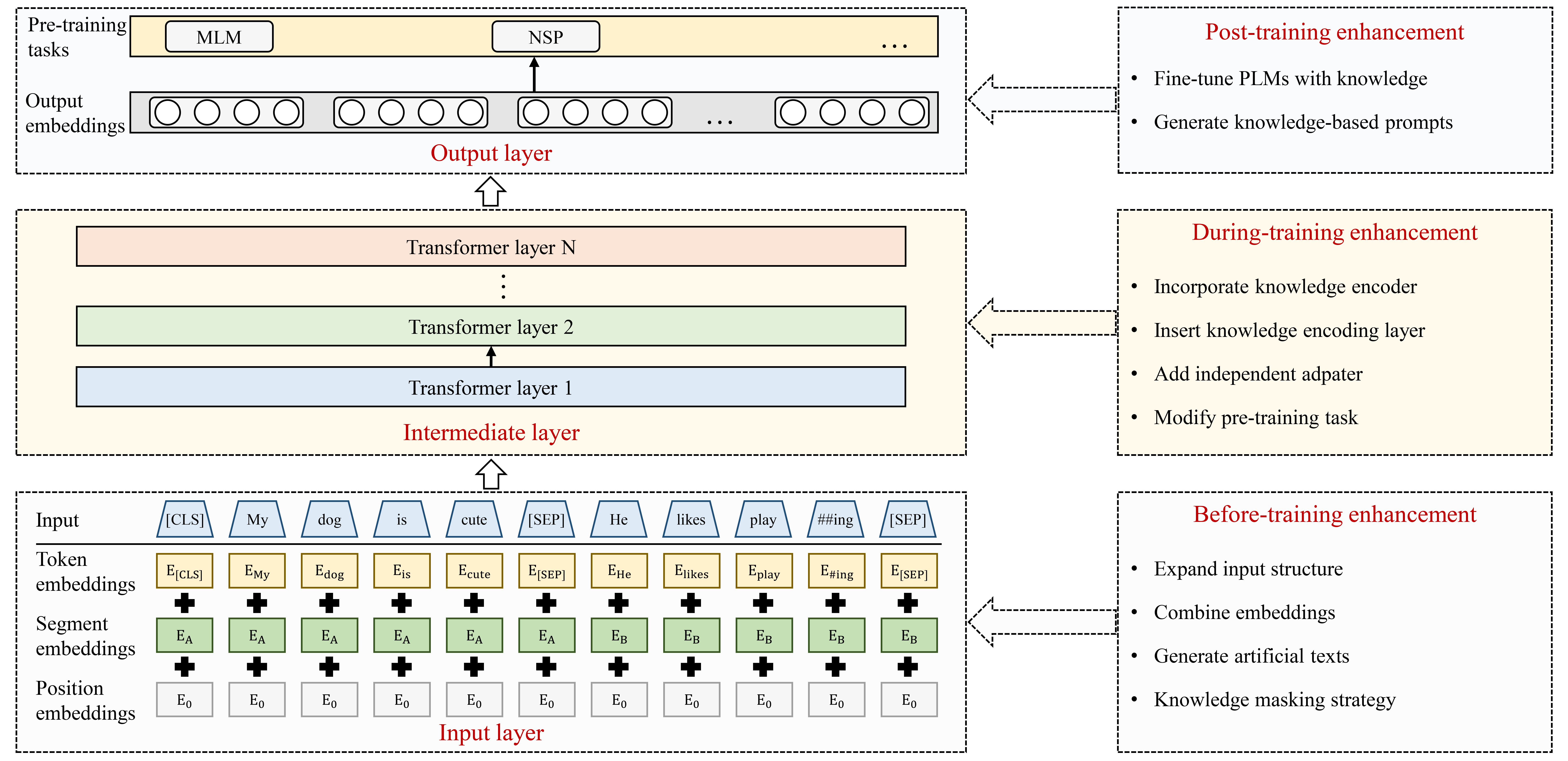

Recently, ChatGPT, a representative large language model (LLM), has gained considerable attention due to its powerful emergent abilities. Some researchers suggest that LLMs could replace structured knowledge bases such as knowledge graphs (KGs) and function as parameterized knowledge bases. However, although LLMs are proficient at learning probabilistic language patterns from large corpora and at conversing with humans, they, like earlier and smaller pre-trained language models (PLMs), still struggle to recall facts when generating knowledge-grounded content. To overcome these limitations, researchers have proposed enhancing data-driven PLMs with knowledge-based KGs. Doing so incorporates explicit factual knowledge into PLMs, improving their ability to generate text that requires factual knowledge and to give more informed responses to user queries. We therefore review studies on enhancing PLMs with KGs, detailing existing knowledge graph-enhanced pre-trained language models (KGPLMs) and their applications. Inspired by existing work on KGPLMs, we propose enhancing LLMs with KGs by developing knowledge graph-enhanced large language models (KGLLMs). KGLLMs offer a way to improve the factual reasoning ability of LLMs, opening up new avenues for LLM research.

The organization of these papers follows our survey: ChatGPT is not Enough: Enhancing Large Language Models with Knowledge Graphs for Fact-aware Language Modeling

Please let us know by email if you find any mistakes or have any suggestions: [email protected]

If you find our survey useful for your research, please cite the following paper:

@article{KGLLM,
  title={ChatGPT is not Enough: Enhancing Large Language Models with Knowledge Graphs for Fact-aware Language Modeling},
  author={Yang, Linyao and Chen, Hongyang and Li, Zhao and Ding, Xiao and Wu, Xindong},
  journal={arXiv preprint arXiv:2306.11489},
  year={2023}
}

In this repository, we collect recent advances in knowledge graph-enhanced large language models. According to the stage at which KGs participate in pre-training, existing methods can be categorized into before-training enhancement, during-training enhancement, and post-training enhancement methods.
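To make the first category a little more concrete, the following is a minimal sketch of one way before-training enhancement can work: enriching the input text with KG triples before it ever reaches the model, loosely inspired by input-expansion models such as K-BERT. The toy graph `KG`, the helper `inject_triples`, and the bracketed verbalization format are all hypothetical and chosen for illustration only. K-BERT itself keeps injected triples in a sentence tree with soft-position indices and a visible matrix; this flat-text version omits that machinery and uses naive substring matching.

```python
from typing import Dict, List, Tuple

# Hypothetical toy knowledge graph: head entity -> list of (relation, tail) pairs.
Triple = Tuple[str, str]
KG: Dict[str, List[Triple]] = {
    "Paris": [("capital_of", "France")],
    "Tim Cook": [("ceo_of", "Apple")],
}


def inject_triples(sentence: str, kg: Dict[str, List[Triple]], max_per_entity: int = 2) -> str:
    """Insert verbalized triples right after the first mention of each KG entity.

    Simplifications (assumptions of this sketch): entities are matched as plain
    substrings, only the first mention is annotated, and knowledge is flattened
    into text instead of being kept as a separate structure.
    """
    spans: List[Tuple[int, int, str]] = []
    # Try longer entity names first so "New York" wins over an overlapping "York".
    for entity in sorted(kg, key=len, reverse=True):
        if not kg[entity]:
            continue  # nothing to inject for this entity
        start = sentence.find(entity)
        if start == -1:
            continue
        end = start + len(entity)
        # Skip mentions that overlap an already selected (longer) mention.
        if any(start < e and s < end for s, e, _ in spans):
            continue
        spans.append((start, end, entity))

    # Rebuild the sentence left to right, appending knowledge after each mention.
    pieces: List[str] = []
    cursor = 0
    for start, end, entity in sorted(spans):
        facts = kg[entity][:max_per_entity]
        verbalized = "; ".join(f"{rel.replace('_', ' ')} {tail}" for rel, tail in facts)
        pieces.append(sentence[cursor:end])
        pieces.append(f" [{verbalized}]")
        cursor = end
    pieces.append(sentence[cursor:])
    return "".join(pieces)


if __name__ == "__main__":
    print(inject_triples("Tim Cook visited Paris.", KG))
```

Running the script prints `Tim Cook [ceo of Apple] visited Paris [capital of France].`, and that enriched string, rather than the raw sentence, would then be fed to the language model. Injecting too many triples can drown the original sentence, which is why the sketch caps facts per entity with `max_per_entity`.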


- K-BERT: Enabling Language Representation with Knowledge Graph (AAAI, 2020) [paper]

- CoLAKE: Contextualized Language and Knowledge Embedding (COLING, 2020) [paper]

- CN-HIT-IT.NLP at SemEval-2020 Task 4: Enhanced Language Representation with Multiple Knowledge Triples (SemEval, 2020) [paper]

- LUKE: Deep Contextualized Entity Representations with Entity-aware Self-attention (EMNLP, 2020) [paper]

- E-BERT: Efficient-Yet-Effective Entity Embeddings for BERT (EMNLP, 2020) [paper]

- Knowledge-Aware Language Model Pretraining [paper]

- OAG-BERT: Towards a Unified Backbone Language Model for Academic Knowledge Services (KDD, 2022) [paper]

- DKPLM: Decomposable Knowledge-enhanced Pre-trained Language Model for Natural Language Understanding (AAAI, 2022) [paper]

- Align, Mask and Select: A Simple Method for Incorporating Commonsense Knowledge into Language Representation Models [paper]

- KGPT: Knowledge-Grounded Pre-Training for Data-to-Text Generation (EMNLP, 2020) [paper]

- Barack's Wife Hillary: Using Knowledge Graphs for Fact-Aware Language Modeling (ACL, 2019) [paper]

- ATOMIC: An Atlas of Machine Commonsense for If-Then Reasoning (AAAI, 2019) [paper]

- KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation (TACL, 2021) [paper]

- ERNIE: Enhanced Language Representation with Informative Entities (ACL, 2019) [paper]

- Pretrained Encyclopedia: Weakly Supervised Knowledge-Pretrained Language Model [paper]

- Exploiting Structured Knowledge in Text via Graph-Guided Representation Learning (EMNLP, 2020) [paper]

- ERNIE: Enhanced Language Representation with Informative Entities (ACL, 2019) [paper]

- ERNIE 3.0: Large-scale Knowledge Enhanced Pre-training for Language Understanding and Generation [paper]

- BERT-MK: Integrating Graph Contextualized Knowledge into Pre-trained Language Models (AI Open, 2021) [paper]

- JointLK: Joint Reasoning with Language Models and Knowledge Graphs for Commonsense Question Answering (NAACL, 2022) [paper]

- Knowledge-Enriched Transformer for Emotion Detection in Textual Conversations (EMNLP-IJCNLP, 2019) [paper]

- Relational Memory-Augmented Language Models (TACL, 2022) [paper]

- QA-GNN: Reasoning with Language Models and Knowledge Graphs for Question Answering (NAACL, 2021) [paper]

- GreaseLM: Graph REASoning Enhanced Language Models for Question Answering [paper]

- KLMo: Knowledge graph enhanced pretrained language model with fine-grained relationships (EMNLP, 2021) [paper]

- K-BERT: Enabling Language Representation with Knowledge Graph (AAAI, 2020) [paper]

- CoLAKE: Contextualized Language and Knowledge Embedding (COLING, 2020) [paper]

- Knowledge Enhanced Contextual Word Representations (EMNLP-IJCNLP, 2019) [paper]

- JAKET: Joint Pre-training of Knowledge Graph and Language Understanding (AAAI, 2022) [paper]

- KG-BART: Knowledge Graph-Augmented BART for Generative Commonsense Reasoning (AAAI, 2021) [paper]

- K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters (ACL-IJCNLP, 2021) [paper]

- Common Sense or World Knowledge? Investigating Adapter-Based Knowledge Injection into Pretrained Transformers (DeeLIO, 2020) [paper]

- Parameter-Efficient Domain Knowledge Integration from Multiple Sources for Biomedical Pre-trained Language Models (EMNLP, 2021) [paper]

- Commonsense knowledge graph-based adapter for aspect-level sentiment classification (Neurocomputing, 2023) [paper]

- SenseBERT: Driving Some Sense into BERT (ACL, 2020) [paper]

- ERNIE: Enhanced Language Representation with Informative Entities (ACL, 2019) [paper]

- LUKE: Deep Contextualized Entity Representations with Entity-aware Self-attention (EMNLP, 2020) [paper]

- OAG-BERT: Towards a Unified Backbone Language Model for Academic Knowledge Services (KDD, 2022) [paper]

- Pretrained Encyclopedia: Weakly Supervised Knowledge-Pretrained Language Model [paper]

- Exploiting Structured Knowledge in Text via Graph-Guided Representation Learning (EMNLP, 2020) [paper]

- ERICA: Improving Entity and Relation Understanding for Pre-trained Language Models via Contrastive Learning (ACL-IJCNLP, 2021) [paper]

- SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge (EMNLP, 2020) [paper]

- KALA: Knowledge-Augmented Language Model Adaptation (NAACL, 2022) [paper]

- Pre-trained language models with domain knowledge for biomedical extractive summarization (Knowledge-Based Systems, 2022) [paper]

- KagNet: Knowledge-Aware Graph Networks for Commonsense Reasoning (EMNLP-IJCNLP, 2019) [paper]

- Enriching contextualized language model from knowledge graph for biomedical information extraction (Briefings in Bioinformatics, 2021) [paper]

- Incorporating Commonsense Knowledge Graph in Pretrained Models for Social Commonsense Tasks (DeeLIO, 2020) [paper]

- Benchmarking Knowledge-Enhanced Commonsense Question Answering via Knowledge-to-Text Transformation (AAAI, 2021) [paper]

- Enhanced story comprehension for large language models through dynamic document-based knowledge graphs (AAAI, 2022) [paper]

- Knowledge Prompting in Pre-trained Language Model for Natural Language Understanding (EMNLP, 2022) [paper]