Large Language Models (LLMs) are a promising and transformative technology that has rapidly advanced in recent years. These models are capable of generating natural language text and have numerous applications, including chatbots, language translation, and creative writing. However, as the size of these models increases, so do the costs and performance requirements needed to utilize them effectively. This has led to significant challenges in developing on top of large models such as ChatGPT.

To address this, we built GPTCache, a semantic cache for language model responses. It offers two major benefits:

- Faster responses: serving a request from the cache is much quicker than running large model inference, so users see lower latency.

- Lower service costs: most LLM services charge by the number of tokens. Every request answered from the cache never reaches the paid API, which reduces both the request count and the bill.

A good analogy is to think of GPTCache as a more semantic version of Redis: a cache hit is not limited to an exact match, but also covers prompts and context similar to previous queries. We believe that traditional cache design still works for AIGC applications, for the following reasons:

- Locality is everywhere. Like traditional applications, AIGC applications see recurring hot topics; ChatGPT itself, for instance, may be a popular topic among programmers.

- For purpose-built SaaS services, users tend to ask questions within a specific domain, with both temporal and spatial locality.

- By utilizing vector similarity search, it is possible to find a similarity relationship between questions and answers at a relatively low cost.
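The mechanism behind these points can be shown with a small, self-contained sketch of a semantic cache lookup. It is an illustration under stated assumptions, not GPTCache's implementation: the bag-of-words `embed` function, the `SemanticCache` class, and the 0.7 similarity threshold are all hypothetical, and a real setup would use a trained embedding model and a vector store instead of a linear scan.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (hypothetical): a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical semantic cache: a hit is the most similar stored question
    whose similarity reaches the threshold, not only an exact match."""

    def __init__(self, threshold: float = 0.7):
        self.threshold = threshold
        self.entries = []  # list of (embedding, answer) pairs

    def put(self, question: str, answer: str) -> None:
        self.entries.append((embed(question), answer))

    def get(self, question: str):
        query = embed(question)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:  # linear scan stands in for a vector index
            score = cosine(query, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None


demo_cache = SemanticCache()
demo_cache.put("what is a vector database", "A database optimized for similarity search.")
print(demo_cache.get("what is a vector database"))          # exact repeat: hit
print(demo_cache.get("what is a vector database exactly"))  # similar wording: hit
print(demo_cache.get("how do I bake bread"))                # unrelated: None
```

Raising the threshold makes hits stricter (fewer wrong answers, more misses); lowering it does the opposite.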

We provide benchmarks to illustrate the concept. A semantic cache has three key measurement dimensions: false positives, false negatives, and hit latency. Because the implementation is plugin-style, users can trade off these three measures according to their needs.
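To make the three dimensions concrete, the sketch below scores a log of labeled cache lookups. It assumes a hypothetical `Lookup` record in which `should_hit` is a ground-truth label saying whether a correct answer was in the cache; the sample values are invented for illustration and are not benchmark results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Lookup:
    """One labeled cache lookup (hypothetical record format)."""
    hit: bool          # did the cache return an answer?
    should_hit: bool   # ground truth: was a correct answer in the cache?
    latency_ms: float  # time spent on the lookup


def cache_metrics(lookups: List[Lookup]) -> dict:
    # False positive: the cache answered although it should not have.
    fp = sum(1 for r in lookups if r.hit and not r.should_hit)
    # False negative: the cache missed although a correct answer was stored.
    fn = sum(1 for r in lookups if not r.hit and r.should_hit)
    negatives = sum(1 for r in lookups if not r.should_hit)
    positives = sum(1 for r in lookups if r.should_hit)
    hit_latencies = [r.latency_ms for r in lookups if r.hit]
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
        "mean_hit_latency_ms": (
            sum(hit_latencies) / len(hit_latencies) if hit_latencies else 0.0
        ),
    }


sample = [
    Lookup(hit=True, should_hit=True, latency_ms=10.0),
    Lookup(hit=True, should_hit=False, latency_ms=20.0),
    Lookup(hit=False, should_hit=True, latency_ms=5.0),
    Lookup(hit=False, should_hit=False, latency_ms=5.0),
]
print(cache_metrics(sample))
# {'false_positive_rate': 0.5, 'false_negative_rate': 0.5, 'mean_hit_latency_ms': 15.0}
```

A stricter similarity threshold typically lowers the false positive rate at the cost of a higher false negative rate, which is the tradeoff the pluggable components let you tune.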

Note:

- You can try GPTCache quickly and put it into production without heavy development. Note, however, that the repository is still under active development.

- By default, only a limited number of libraries are installed to support the basic cache functionality. Libraries needed by additional features are installed automatically when you use those features.

- Make sure you are using Python 3.8.1 or higher.

- If installing a library fails because your pip version is too old, upgrade pip:

```bash
python -m pip install --upgrade pip
```

Install from PyPI:

```bash
pip install gptcache
```

Or install from source:

```bash
# clone the GPTCache repo
git clone https://github.com/zilliztech/GPTCache.git
cd GPTCache

# install the repo
pip install -r requirements.txt
python setup.py install
```

If you only need exact-match caching, where a request hits the cache only when it is identical to an earlier one, you need just TWO steps:

- Cache init

```python
from gptcache.core import cache

cache.init()
# If you set the API key with `openai.api_key = xxx`, call `cache.set_openai_key()` instead.
# It reads the key from the `OPENAI_API_KEY` environment variable, which keeps the key out of your code.
cache.set_openai_key()
```

- Replace the original openai package

```python
from gptcache.adapter import openai

# openai requests DON'T need ANY changes
answer = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "foo"}
    ],
)
```

If you want to try the vector similarity search cache locally, you can use the SQLite + Faiss + Towhee example.
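For comparison with the semantic approach, the exact-match behavior set up in the two steps above can be reduced to a dictionary keyed on the serialized request. This is a conceptual sketch, not GPTCache's internals: `exact_match_cached` and `request_key` are hypothetical helpers, and `fake_llm` stands in for a real model call so the example runs offline.

```python
import hashlib
import json
from typing import Callable, Dict


def request_key(**request) -> str:
    """Serialize the request deterministically (sorted keys) and hash it."""
    payload = json.dumps(request, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def exact_match_cached(llm: Callable[..., str]) -> Callable[..., str]:
    """Wrap an LLM call so identical requests are answered from a dict."""
    store: Dict[str, str] = {}

    def wrapper(**request) -> str:
        key = request_key(**request)
        if key not in store:  # miss: call the model once and remember the answer
            store[key] = llm(**request)
        return store[key]     # hit: no model call

    return wrapper


calls = []


def fake_llm(**request) -> str:
    """Hypothetical stand-in for a real model call; records each invocation."""
    calls.append(request)
    return "answer to " + request["messages"][-1]["content"]


create = exact_match_cached(fake_llm)
msgs = [{"role": "user", "content": "foo"}]
create(model="gpt-3.5-turbo", messages=msgs)
create(model="gpt-3.5-turbo", messages=msgs)  # identical request: served from the dict
print(len(calls))  # → 1
```

Because the key is a hash of the whole request, changing a single character in the prompt, or any other parameter, produces a miss.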

More Docs:

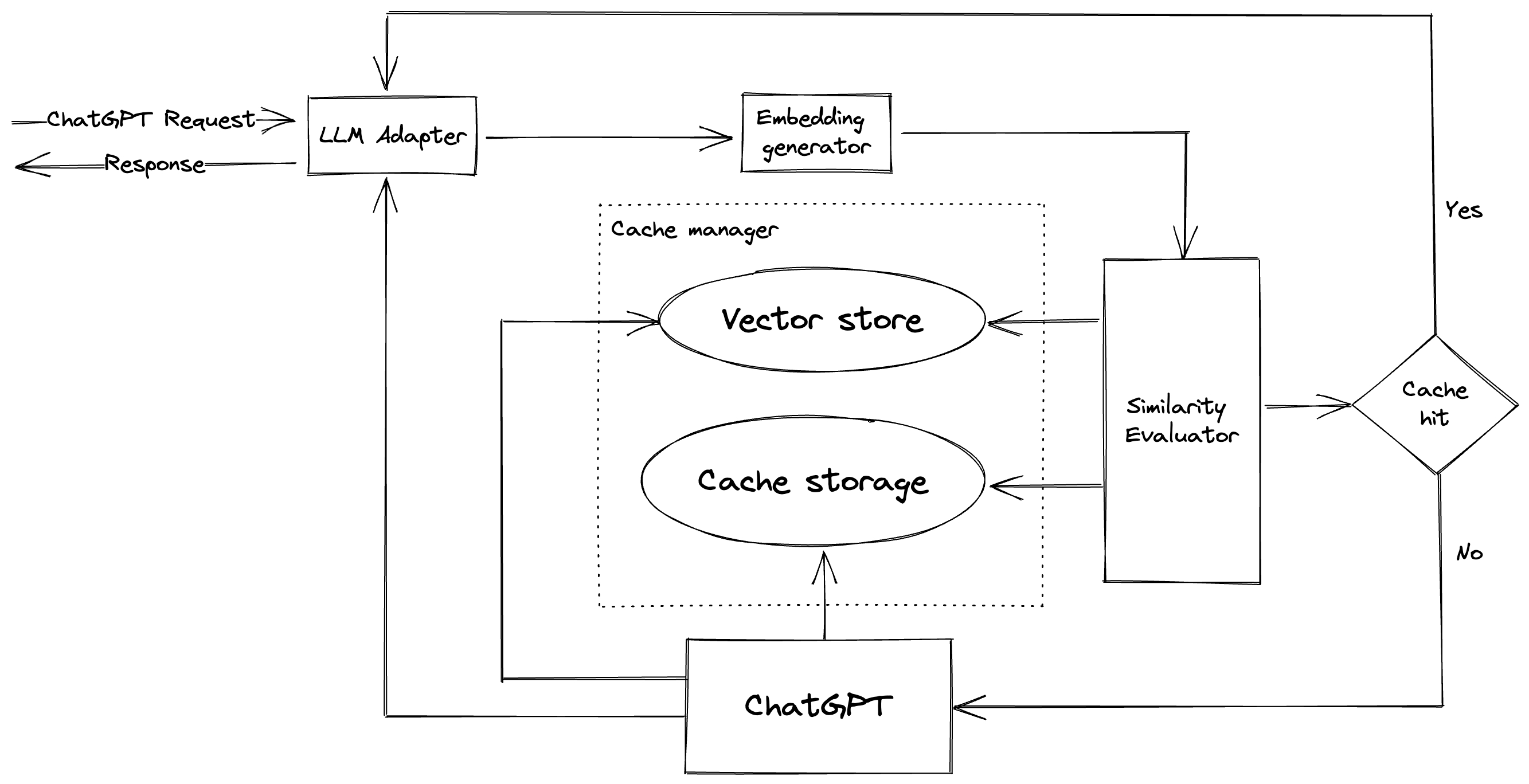

- System Design, how it was constructed

- Features, all features currently supported by the cache

- Examples, learn better custom caching

- LLM Adapter: the user interface that adapts requests for different LLMs to the GPTCache protocol.
  - Support OpenAI ChatGPT API.
  - Support other LLMs, such as Hugging Face Hub, Bard, Anthropic, and self-hosted models like LLaMA.

- Embedding Extractor: embeds text into a dense vector for similarity search.
  - Keep the text as a string without any changes.
  - Support the OpenAI embedding API.
  - Support Towhee with the paraphrase-albert-small-v2 model.
  - Support Hugging Face embedding API.
  - Support Cohere embedding API.
  - Support fastText embedding API.
  - Support SentenceTransformers embedding API.

- Cache Storage:
  - Support SQLite.
  - Support PostgreSQL.
  - Support MySQL.
  - Support MongoDB.
  - Support MariaDB.
  - Support SQL Server.
  - Support Oracle.
  - Support Redis.
  - Support Minio.
  - Support HBase.
  - Support Elasticsearch.
  - Support ZincSearch.
  - Support other storages.

- Vector Store:

- Cache Manager
  - Eviction Policy
    - LRU eviction policy
    - FIFO eviction policy
    - More complicated eviction policies

- Similarity Evaluator: evaluates similarity by judging the quality of cached answers.
  - Use the search distance, as described in `simple.py#pair_evaluation`.
  - Use Towhee's albert_duplicate model for precise comparison between questions and answers (inputs are limited to 512 tokens).
  - Exact string comparison: judge the cache request and the original request by an exact character match.
  - For numpy arrays, use `linalg.norm`.
  - BM25 and other similarity measurements.
  - Support other models.
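For the LLM Adapter module listed above, the core task is reducing each provider's request format to the text the cache compares. The sketch assumes two request shapes, an OpenAI-style chat request with a `messages` list and a completion-style request with a `prompt` string; `cache_text_from_request` and the `"some-model"` name are hypothetical, and using only the last user message is a simplifying choice, not GPTCache's actual protocol.

```python
from typing import Any, Dict


def cache_text_from_request(request: Dict[str, Any]) -> str:
    """Hypothetical adapter: reduce a provider-specific request to the text
    that the cache embeds and compares."""
    if "messages" in request:
        # Chat-style request: use the most recent user message.
        for message in reversed(request["messages"]):
            if message.get("role") == "user":
                return message["content"]
        raise ValueError("chat request has no user message")
    if "prompt" in request:
        # Completion-style request: the prompt itself is the text.
        return request["prompt"]
    raise ValueError("unsupported request shape")


chat = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "foo"},
    ],
}
completion = {"model": "some-model", "prompt": "foo"}  # "some-model" is a placeholder
print(cache_text_from_request(chat) == cache_text_from_request(completion))  # True
```

Keying on the last user message alone ignores earlier turns; an adapter that must honor conversation context would fold those turns into the cached text.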
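For the Embedding Extractor module, the listed options fall into two groups: keeping the text as a string (for exact matching) or mapping it to a dense vector. As a dependency-free stand-in for the embedding models listed, the sketch uses the hashing trick; `hashing_embedding` and its default of 64 dimensions are illustrative assumptions, and token hashing captures none of the meaning a trained model does.

```python
import hashlib
import math
from typing import List


def identity_extractor(text: str) -> str:
    """Keep the text as a string without any changes."""
    return text


def hashing_embedding(text: str, dim: int = 64) -> List[float]:
    """Toy dense embedding (hypothetical): hash each token into one of `dim`
    buckets, then L2-normalize so dot products equal cosine similarity."""
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "little") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


v = hashing_embedding("What is GPTCache")
print(len(v))                            # → 64
print(round(sum(x * x for x in v), 6))   # → 1.0 (unit length)
```

SHA-256 is used rather than Python's built-in `hash()` because string hashing with `hash()` is randomized per process, which would make stored vectors inconsistent across restarts.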
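For the Cache Storage module, one common split, assumed here, is that the storage layer persists question/answer pairs and returns them by id, while the vector store keeps the embeddings that point at those ids. A minimal sketch with Python's built-in `sqlite3` and an in-memory database; the `SQLiteCacheStorage` class and the `cache_data` table layout are hypothetical, not GPTCache's schema.

```python
import sqlite3
from typing import Optional


class SQLiteCacheStorage:
    """Hypothetical scalar storage: maps an integer id to a question/answer pair."""

    def __init__(self, path: str = ":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS cache_data ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "question TEXT NOT NULL, "
            "answer TEXT NOT NULL)"
        )

    def insert(self, question: str, answer: str) -> int:
        with self.conn:  # commits the transaction if the block succeeds
            cur = self.conn.execute(
                "INSERT INTO cache_data (question, answer) VALUES (?, ?)",
                (question, answer),
            )
        return cur.lastrowid  # id a vector store entry would reference

    def get_answer(self, row_id: int) -> Optional[str]:
        row = self.conn.execute(
            "SELECT answer FROM cache_data WHERE id = ?", (row_id,)
        ).fetchone()
        return row[0] if row else None


storage = SQLiteCacheStorage()
row_id = storage.insert("what is GPTCache", "A semantic cache for LLM responses.")
print(storage.get_answer(row_id))
```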
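For the Cache Manager's eviction policies, the difference between LRU and FIFO is which entry leaves when the cache is full: FIFO evicts the oldest insertion, while LRU evicts the entry that was accessed least recently. A minimal sketch with `collections.OrderedDict`; the `BoundedCache` class and its `policy` argument are hypothetical, not GPTCache's eviction API.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class BoundedCache:
    """Fixed-capacity cache with an LRU or FIFO eviction policy (illustrative)."""

    def __init__(self, capacity: int, policy: str = "LRU"):
        if policy not in ("LRU", "FIFO"):
            raise ValueError("policy must be 'LRU' or 'FIFO'")
        self.capacity = capacity
        self.policy = policy
        self.data = OrderedDict()  # front = next entry to evict

    def get(self, key: Hashable) -> Optional[str]:
        if key not in self.data:
            return None
        if self.policy == "LRU":
            self.data.move_to_end(key)  # a read makes the entry most recent
        return self.data[key]

    def put(self, key: Hashable, value: str) -> None:
        if key in self.data:
            self.data[key] = value
            if self.policy == "LRU":
                self.data.move_to_end(key)
            return
        if len(self.data) >= self.capacity:
            self.data.popitem(last=False)  # evict from the front
        self.data[key] = value


for policy in ("LRU", "FIFO"):
    c = BoundedCache(capacity=2, policy=policy)
    c.put("a", "1")
    c.put("b", "2")
    c.get("a")       # under LRU, "a" becomes the most recently used entry
    c.put("c", "3")  # cache is full, so one entry is evicted
    print(policy, list(c.data))
# LRU ['a', 'c']
# FIFO ['b', 'c']
```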
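For the Similarity Evaluator module, two of the listed evaluators are easy to sketch: exact string comparison and a distance-based score computed with `numpy.linalg.norm`. Turning a Euclidean distance into a score in [0, 1] requires a bound on the distance; after L2-normalizing both vectors the distance is at most 2, so the sketch uses `1 - d / 2`. That mapping and the function names are assumptions for illustration, not the formula in `simple.py#pair_evaluation`.

```python
import numpy as np


def exact_match_score(cached_question: str, new_question: str) -> float:
    """1.0 if the strings match character for character, else 0.0."""
    return 1.0 if cached_question == new_question else 0.0


def _unit(v) -> np.ndarray:
    """L2-normalize a vector; a zero vector has no direction to compare."""
    v = np.asarray(v, dtype=float)
    n = np.linalg.norm(v)
    if n == 0:
        raise ValueError("cannot normalize a zero vector")
    return v / n


def distance_score(a, b) -> float:
    """Similarity from the Euclidean distance between two embeddings.

    Unit vectors are at most 2 apart, so 1 - d / 2 lies in [0, 1]
    (1.0 means the same direction, 0.0 means opposite directions).
    """
    d = float(np.linalg.norm(_unit(a) - _unit(b)))
    return 1.0 - d / 2.0


print(exact_match_score("foo", "foo"), exact_match_score("foo", "Foo"))  # 1.0 0.0
print(distance_score([1.0, 0.0], [2.0, 0.0]))   # 1.0
print(distance_score([1.0, 0.0], [-1.0, 0.0]))  # 0.0
```

A cache would compare such a score against a threshold to decide whether the cached answer is good enough to return.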

Would you like to contribute to the development of GPTCache? Take a look at our contribution guidelines.