A Docker Spark environment setup with a Java driver program.

The idea is to demonstrate how to set up an Apache Spark environment with Docker containers, along with a Java driver application to test it from one container.

- Install Docker (to install Docker on your machine, go to the Docker Setup page).

- Install Docker Compose (to install Docker Compose on your machine, go to the Docker Compose Setup page).

- Install Apache Maven (skip this step if you just want to run the already existing jar file; otherwise follow the Maven Setup page).

- Pull Docker Image From DockerHub: Open a terminal and run `sudo docker pull mesosphere/spark`. Downloading the Spark Docker image may take a while.

- Test Image Download: When the image download completes, run `sudo docker images`. If the output lists the `mesosphere/spark` image, you have successfully downloaded the Spark Docker image to your machine.

- Clone docker-spark-setup Repo: Open another terminal and run `git clone https://github.com/arman37/docker-spark-setup.git`. After cloning completes, change to the docker-spark-setup directory (`cd docker-spark-setup`).

- Packaging Driver Program: If you skipped the third prerequisite (installing Maven), skip this step too. Otherwise, change to driver-apps/java (`cd driver-apps/java`) and run `mvn test`. A successful run ends with Maven reporting `BUILD SUCCESS`. After that, run `mvn package`. Your screen should look like the screenshot below.

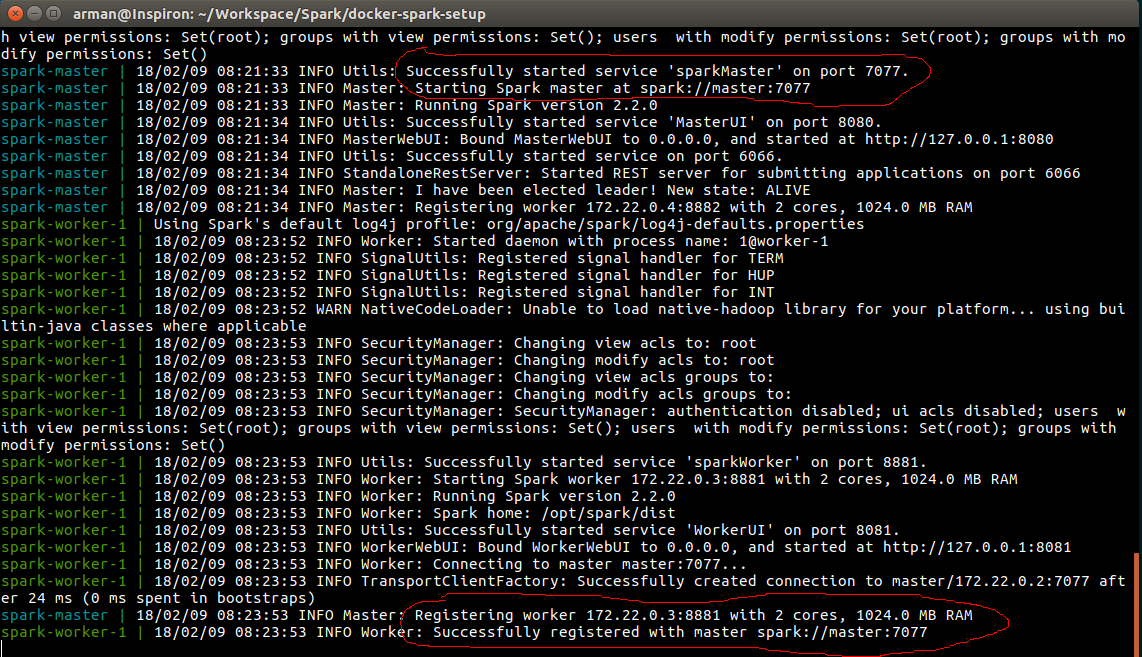

- Running Docker Compose: If you followed step 4 then cd back to docker-spark-setup directory (

cd ../..). From docker-spark-setup directory run commandsudo docker-compose up. Please make sure no other service on your machine using ports 8080, 4040, 8881, 8882. This command will run three docker container on your machine, your screen should look like the screenshot below

To check running docker containers run this command sudo docker ps .

your screen should look like the screenshot below

-

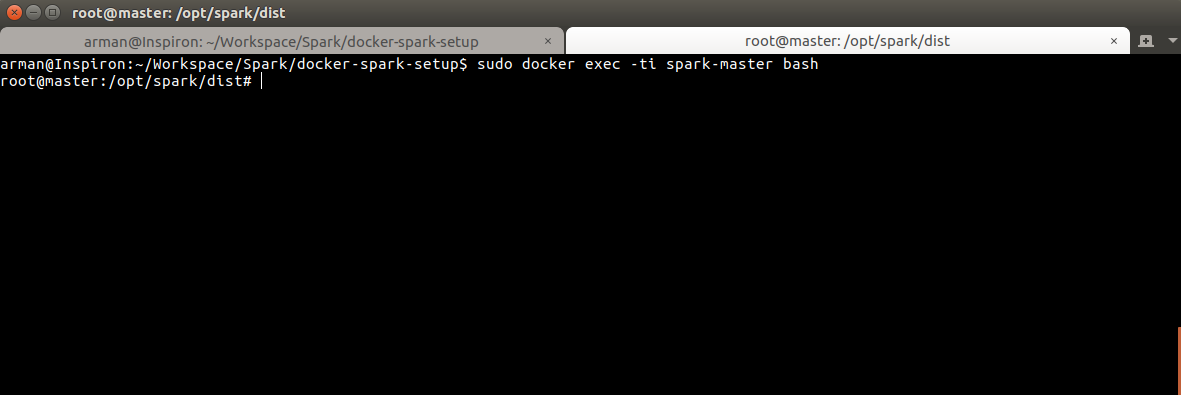
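Since `docker-compose up` needs ports 8080, 4040, 8881, and 8882 to be free, it can help to check them first. The following is a minimal sketch, not part of the repository: `busy_ports` is a hypothetical helper that reads a listener listing in the column layout printed by `ss -ltn` (assumed to be available, as on most Linux systems) and prints whichever of the four ports already has a listener.

```shell
#!/bin/sh
# Ports the compose setup needs, as listed in the step above.
REQUIRED_PORTS="8080 4040 8881 8882"

# busy_ports (hypothetical helper): reads `ss -ltn`-style output on stdin
# and prints every required port that already has a listener, one per line.
busy_ports() {
  awk -v wanted="$REQUIRED_PORTS" '
    BEGIN { n = split(wanted, w, " "); for (i = 1; i <= n; i++) need[w[i]] = 1 }
    {
      # Column 4 is "Local Address:Port"; the port follows the last colon.
      m = split($4, parts, ":")
      if (m > 1 && (parts[m] in need)) busy[parts[m]] = 1
    }
    END { for (i = 1; i <= n; i++) if (w[i] in busy) print w[i] }
  '
}
```

Running `ss -ltn | busy_ports` should print nothing when all four ports are free; any port it prints is already taken by another service.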

- Open Container spark-master Bash: From any terminal, run `sudo docker exec -ti spark-master bash` to open a bash shell inside the spark-master container.

- Deploy Driver App: To ensure the driver application is deployed on the worker nodes (cluster), we must supply the URL of the Spark master node. From that terminal, run `./bin/spark-submit --class com.dsi.spark.SortingTask --master spark://master:7077 /usr/spark-2.2.1/jars/sorting-1.0-SNAPSHOT.jar /usr/spark-2.2.1/test/resources/random-numbers.txt`. Your screen should look like the screenshot below.

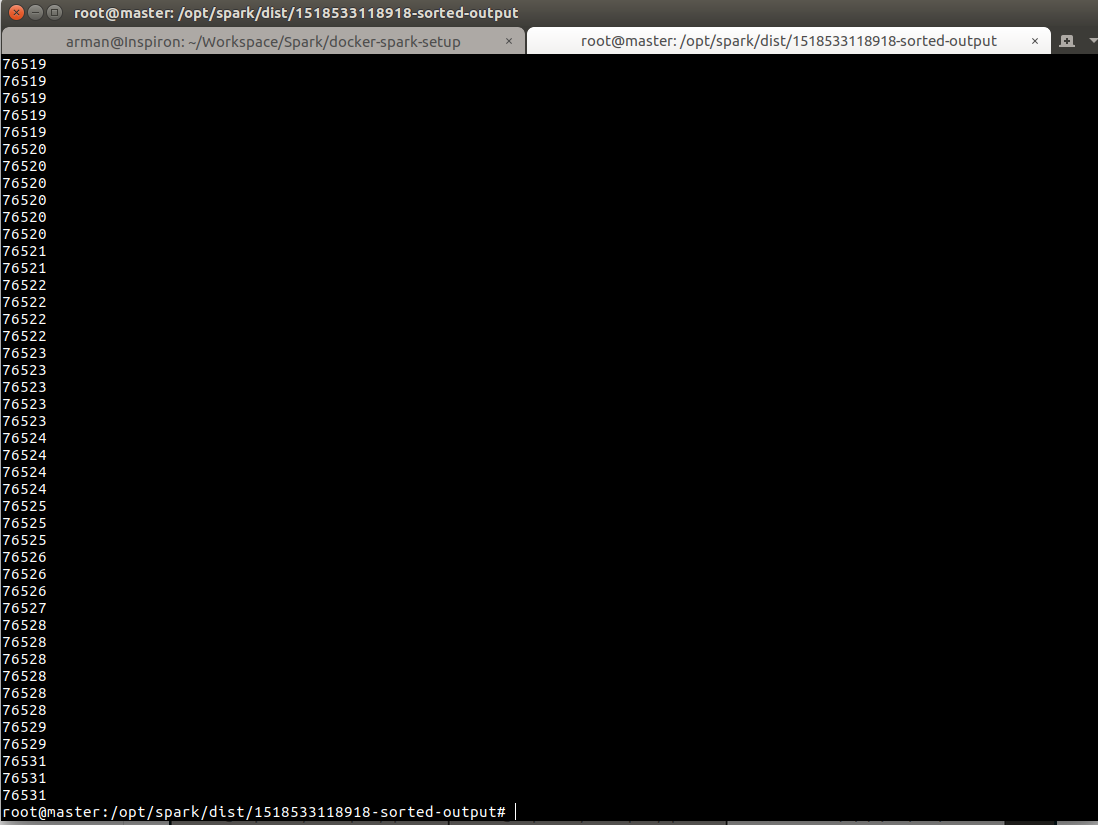

Here we have sorted 100,000 random integers ranging from 13 to 76,531 and saved the sorted numbers to a directory named [some-random-numbers]-sorted-output in the dist directory.
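To make the role of each argument in the submit command easier to see, here is a small sketch that splits it into named variables. The variable names, the `submit_sorting_task` function, and the `DRY_RUN` switch are illustrative assumptions, not part of the repository; the values are the ones used in the command above, in the usual `spark-submit --class <main-class> --master <master-url> <application-jar> [arguments]` order.

```shell
#!/bin/sh
# Values copied from the deploy step; the variable names are illustrative.
MAIN_CLASS="com.dsi.spark.SortingTask"                          # driver entry point
MASTER_URL="spark://master:7077"                                # Spark master node
APP_JAR="/usr/spark-2.2.1/jars/sorting-1.0-SNAPSHOT.jar"        # packaged driver app
INPUT_FILE="/usr/spark-2.2.1/test/resources/random-numbers.txt" # argument passed to the driver

# submit_sorting_task (hypothetical helper): prints the command when
# DRY_RUN=1, otherwise runs it from the current directory.
submit_sorting_task() {
  set -- ./bin/spark-submit --class "$MAIN_CLASS" --master "$MASTER_URL" "$APP_JAR" "$INPUT_FILE"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

Setting `DRY_RUN=1` before calling `submit_sorting_task` only prints the assembled command, which is a cheap way to confirm the master URL and paths before submitting from inside the spark-master container.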

- Test Sorting Result: From the same terminal, run `ls`. You'll see a directory named [some-random-numbers]-sorted-output. Change to this directory (for example, `cd 1518533118918-sorted-output/`). Inside it you'll see a text file named part-00000. Now run `cat part-00000` to print the sorted numbers.
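Reading 100,000 numbers by eye is not a reliable check, so the result can also be verified mechanically. The sketch below assumes that part-00000 holds one integer per line in ascending order, which this guide does not state explicitly; both function names are hypothetical. It relies only on the standard `sort -n -c`, which checks numeric order without rewriting the file.

```shell
#!/bin/sh
# is_sorted_numeric FILE: succeeds when FILE is in ascending numeric order.
# Assumes one integer per line.
is_sorted_numeric() {
  sort -n -c "$1" 2>/dev/null
}

# summarize_output FILE: prints the line count plus the first and last
# values, which are the minimum and maximum if the file is sorted.
summarize_output() {
  echo "$(wc -l < "$1" | tr -d ' ') lines, min $(head -n 1 "$1"), max $(tail -n 1 "$1")"
}
```

From inside the output directory, `is_sorted_numeric part-00000 && summarize_output part-00000` prints a summary only when the file is in order. If the output is split across several part files, each one would need to be checked separately.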

Congratulations!!! You have successfully deployed a Spark driver application. (Y) :)