Retrieval augmented generation (RAG) demos with Llama-2-7b, Mistral-7b, Zephyr-7b, Gemma-2b, Llama-3-8b, Phi-3-mini

The demos use quantized models and run on CPU with acceptable inference time. They can run offline without Internet access, thus allowing deployment in an air-gapped environment.

The demos also allow the user to:

- apply propositionizer to document chunks

- perform reranking upon retrieval

- perform hypothetical document embedding (HyDE)
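To make two of the options above concrete, here is a minimal, self-contained sketch of HyDE followed by reranking. It is an illustration only, not the code used in the demos: `embed` is a toy bag-of-words embedding, `fake_llm` is a hypothetical stand-in for the LLM that drafts the hypothetical answer, and the reranker is approximated by rescoring against the question (a cross-encoder would normally do this). The propositionizer step is not covered here.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding; a real setup would use a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_llm(question: str) -> str:
    """Hypothetical stand-in for the LLM call that drafts a hypothetical answer."""
    return "Quantized models use low precision weights so inference runs on a CPU."


def hyde_retrieve(question: str, chunks: list, k: int = 2) -> list:
    # HyDE: embed a generated hypothetical answer instead of the raw question.
    query_vec = embed(fake_llm(question))
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def rerank(question: str, candidates: list) -> list:
    # Reranking: rescore the retrieved candidates directly against the question.
    q_vec = embed(question)
    return sorted(candidates, key=lambda c: cosine(q_vec, embed(c)), reverse=True)


chunks = [
    "Quantized weights let the model run on a CPU with low memory use.",
    "Streamlit provides the web interface.",
    "Low precision inference trades a little accuracy for speed.",
]
question = "Why can the model run on a CPU?"
top = rerank(question, hyde_retrieve(question, chunks))
print(top[0])
```

The two stages are kept as separate functions so that either one can be switched off independently, mirroring how the demos expose them as options.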

You will need to set up your development environment using conda, which you can install directly.

conda create --name rag python=3.11

conda activate rag

pip install -r requirements.txt

Activate the environment.

conda activate rag

Download and save the models in ./models and update config.yaml. The models used in this demo are:

- Embeddings

- Rerankers:

  - BAAI/bge-reranker-base: save in models/bge-reranker-base/

  - facebook/tart-full-flan-t5-xl: save in models/tart-full-flan-t5-xl/

- Propositionizer

  - chentong00/propositionizer-wiki-flan-t5-large: save in models/propositionizer-wiki-flan-t5-large/

- LLMs

The LLMs can be loaded directly in the app, or deployed first with an Ollama server.

You can also choose to use models from Groq. Set GROQ_API_KEY in .env.
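The reranker and propositionizer downloads listed above could be scripted as follows. This is a sketch under assumptions: it assumes the models are fetched from the Hugging Face Hub and that the `huggingface_hub` package is installed (neither is stated in this README), and `download_all` is a hypothetical helper name. Run it once on a machine with Internet access before moving to the air-gapped environment.

```python
from pathlib import Path

# Repo IDs and target folders as listed in this README.
MODEL_DIRS = {
    "BAAI/bge-reranker-base": "models/bge-reranker-base/",
    "facebook/tart-full-flan-t5-xl": "models/tart-full-flan-t5-xl/",
    "chentong00/propositionizer-wiki-flan-t5-large": "models/propositionizer-wiki-flan-t5-large/",
}


def download_all(model_dirs=MODEL_DIRS):
    """Download each repo into its local folder (hypothetical helper)."""
    # Assumption: huggingface_hub is installed; imported lazily so the
    # mapping above can be reused without it.
    from huggingface_hub import snapshot_download

    for repo_id, local_dir in model_dirs.items():
        Path(local_dir).mkdir(parents=True, exist_ok=True)
        snapshot_download(repo_id=repo_id, local_dir=local_dir)


# Uncomment to start the downloads (requires Internet access):
# download_all()
```

Remember to update config.yaml afterwards so that it points to these folders.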

Since each model type has its own prompt format, include the format in ./src/prompt_templates.py. For example, the format used in openbuddy models is

"""{system}

User: {user}

Assistant:"""

We use Phoenix for LLM tracing. Phoenix is an open-source observability library designed for experimentation, evaluation, and troubleshooting. Before running the app, start a Phoenix server:

python3 -m phoenix.server.main serve

The traces can be viewed at http://localhost:6006.

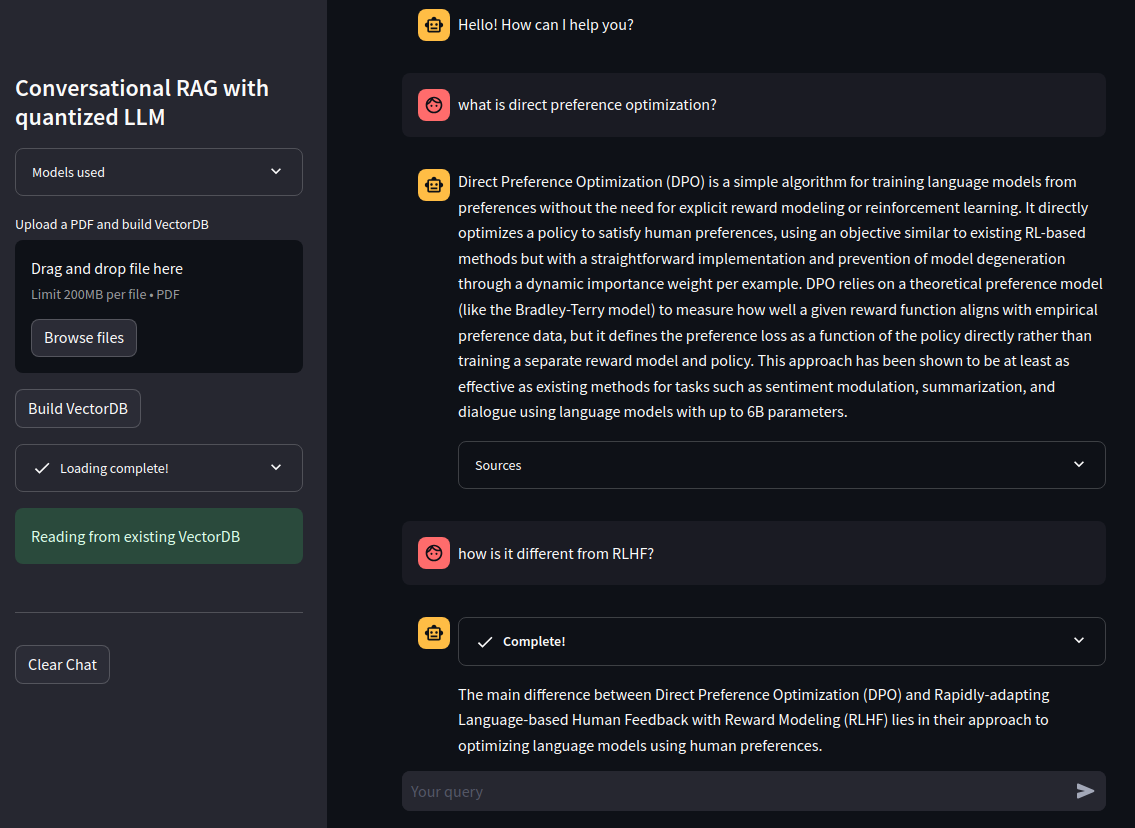

We use Streamlit as the interface for the demos. There are three demos:

- Conversational Retrieval using ReAct

Create the vectorstore first and update config.yaml:

python -m vectorize --filepaths <your-filepath>

Run the app:

streamlit run app_react.py

- Conversational Retrieval

streamlit run app_conv.py

- Retrieval QA

streamlit run app_qa.py

To get started, upload a PDF and click on Build VectorDB. Creating the vector DB will take a while.
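Building a vector DB generally involves splitting the document text into chunks before embedding them, which is part of why it takes a while. The sketch below illustrates only the chunking step, assuming fixed-size overlapping character windows. The function name `chunk_text` and the default sizes are illustrative assumptions; the splitter and parameters actually used by the demos are not shown in this README.

```python
def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows of `size` that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    if not text:
        return []
    step = size - overlap
    # Stop once the remaining tail is already covered by the previous window.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


sample = "a" * 500
pieces = chunk_text(sample, size=200, overlap=50)
print(len(pieces))
```

The overlap keeps sentences that straddle a window boundary retrievable from at least one chunk, at the cost of embedding some text twice.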