Winner of the HANDS'2023 ARCTIC Challenge @ ICCV (1st place in both p1 and p2 tasks).

- Python 3.10.4

- CUDA 11.8

pip install -U pip wheel

pip install torch torchvision -c requirements_frozen.txt --extra-index-url https://download.pytorch.org/whl/cu118

pip install -r requirements_frozen.txt

Modify the smplx package to return 21 joints instead of 16:

vim .venv/lib/python3.10/site-packages/smplx/body_models.py

Uncomment line 1681: joints = self.vertex_joint_selector(vertices, joints).
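If you would rather script this change than edit the file in vim, here is a minimal sketch that locates body_models.py in the active environment and uncomments line 1681. It only writes the file if that line is exactly the commented-out statement named above. It assumes the line number matches the smplx version pinned in requirements_frozen.txt. `find_package_file`, `uncomment_line`, and `patch_smplx` are hypothetical helpers, not part of smplx.

```python
import importlib.util
from pathlib import Path

# Statement that must be uncommented in smplx/body_models.py (taken from the instructions above).
TARGET = "joints = self.vertex_joint_selector(vertices, joints)"


def find_package_file(package, filename):
    """Return the path of `filename` inside an installed package, without importing the package."""
    spec = importlib.util.find_spec(package)
    if spec is None or not spec.submodule_search_locations:
        raise ModuleNotFoundError(f"package {package!r} is not installed")
    package_dir = Path(list(spec.submodule_search_locations)[0])
    return package_dir / filename


def uncomment_line(source, lineno, statement=TARGET):
    """Uncomment 1-based line `lineno` of `source`, keeping its indentation and line ending.

    Raises ValueError if that line is not a commented-out copy of `statement`,
    e.g. because the installed smplx version differs from the pinned one.
    """
    lines = source.splitlines(keepends=True)
    line = lines[lineno - 1]
    body = line.rstrip("\r\n")
    newline = line[len(body):]  # original line ending ("" on the last line)
    code = body.lstrip()
    indent = body[: len(body) - len(code)]
    if not code.startswith("#") or code[1:].strip() != statement:
        raise ValueError(f"line {lineno} is not a commented-out {statement!r}: {body!r}")
    lines[lineno - 1] = indent + statement + newline
    return "".join(lines)


def patch_smplx(lineno=1681):
    """Hypothetical helper: apply the edit in place to the installed smplx/body_models.py."""
    path = find_package_file("smplx", "body_models.py")
    source = path.read_text(encoding="utf-8")
    path.write_text(uncomment_line(source, lineno), encoding="utf-8")
    return path
```

Call `patch_smplx()` once after `pip install -r requirements_frozen.txt`. The helper checks the line before writing, so running it again on an already patched file raises `ValueError` and leaves the file unchanged.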

If you are unsure where body_models.py is, run the following in a terminal. body_models.py is in the same directory as the printed __init__.py file:

python

>>> import smplx

>>> print(smplx.__file__)

Refer to the original README to set up the dataset.

For the allocentric task (p1) we used this command:

python scripts_method/train.py --setup p1 --method transformer_sf --trainsplit train --valsplit smallval --optimizer adamw --lr_scheduler cosine --lr 1e-4 --backbone vit-g --freeze_backbone --decoder_depth=12

For the egocentric task (p2) we used this command (where 504802f70 is the ID of the p1 run whose checkpoint is loaded):

python scripts_method/train.py --setup p2 --method transformer_sf --trainsplit train --valsplit smallval --optimizer adamw --lr_scheduler cosine --lr 1e-4 --backbone vit-g --freeze_backbone --decoder_depth=12 --load_ckpt logs/504802f70/checkpoints/last.ckpt --precision 32
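The p1 and p2 runs share every hyperparameter. The p2 run only adds --load_ckpt, which points at the last.ckpt of the p1 run, and --precision 32. The following is a minimal sketch that builds both argument lists from one set of shared flags. It assumes you launch from the repository root. `build_train_cmd` is a hypothetical helper, and 504802f70 should be replaced by the log directory name of your own p1 run under logs/.

```python
from pathlib import Path

# Flags shared by the p1 (allocentric) and p2 (egocentric) runs, copied from the commands above.
COMMON_FLAGS = [
    "--method", "transformer_sf",
    "--trainsplit", "train",
    "--valsplit", "smallval",
    "--optimizer", "adamw",
    "--lr_scheduler", "cosine",
    "--lr", "1e-4",
    "--backbone", "vit-g",
    "--freeze_backbone",
    "--decoder_depth=12",
]


def build_train_cmd(setup, p1_run_id=None):
    """Hypothetical helper: return the argv list for scripts_method/train.py."""
    if setup not in ("p1", "p2"):
        raise ValueError(f"unknown setup {setup!r}; expected 'p1' or 'p2'")
    cmd = ["python", "scripts_method/train.py", "--setup", setup, *COMMON_FLAGS]
    if setup == "p2":
        # p2 starts from the last checkpoint of a finished p1 run.
        if p1_run_id is None:
            raise ValueError("p2 loads a p1 checkpoint; pass the p1 run ID")
        ckpt = Path("logs") / p1_run_id / "checkpoints" / "last.ckpt"
        cmd += ["--load_ckpt", ckpt.as_posix(), "--precision", "32"]
    return cmd
```

For example, `subprocess.run(build_train_cmd("p2", "504802f70"), check=True)` would launch the p2 command above, and `shlex.join(...)` prints it for inspection. Keeping the shared flags in one list makes it harder for the two runs to drift apart.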

This is a repository for preprocessing, splitting, visualizing, and rendering (RGB, depth, segmentation masks) the ARCTIC dataset. We also provide code to reproduce the baseline models in our CVPR 2023 paper (Vancouver, British Columbia 🇨🇦) and to develop custom models.

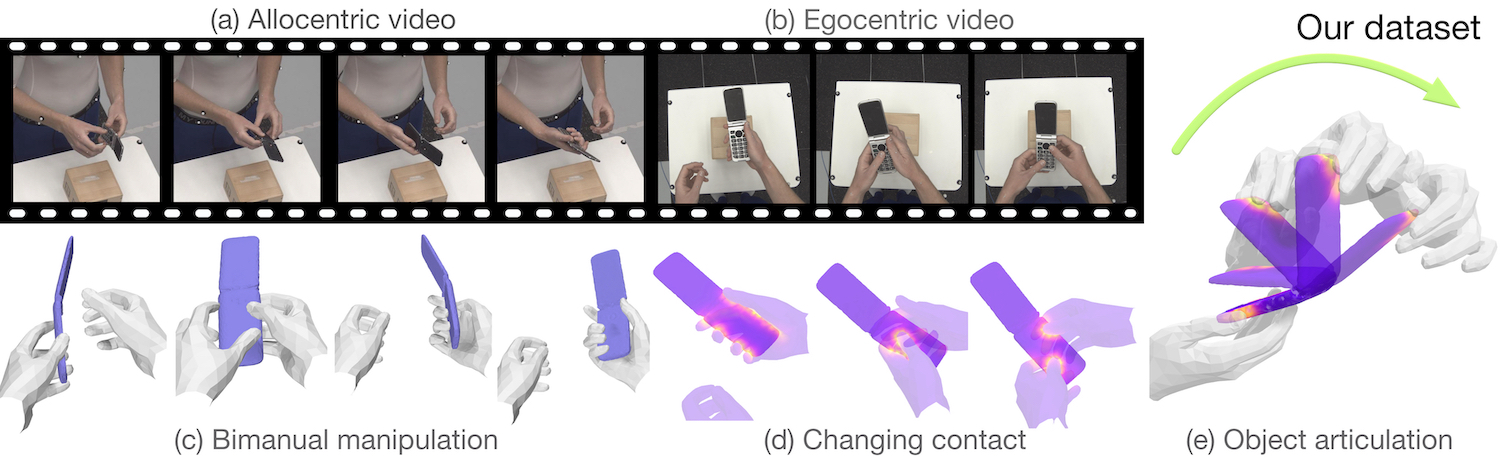

Our dataset contains highly dexterous motion.

Dataset summary:

- It contains 2.1M high-resolution images paired with annotated frames, enabling large-scale machine learning.

- Images are from 8 third-person views and 1 egocentric view (for mixed-reality settings).

- It includes 3D ground truth for SMPL-X, MANO, and articulated objects.

- It is captured in a MoCap setup using 54 high-end Vicon cameras.

- It features highly dexterous bimanual manipulation motion (beyond quasi-static grasping).

Potential tasks with ARCTIC:

- Generating hand grasp or motion with articulated objects

- Generating full-body grasp or motion with articulated objects

- Benchmarking performance of articulated object pose estimators from depth images with humans in the scene

- Studying our NEW tasks of consistent motion reconstruction and interaction field estimation

- Studying egocentric hand-object reconstruction

- Reconstructing full-body with hands and articulated objects from RGB images

Check out our project page for more details.

News:

- 2023.09.11: ARCTIC leaderboard online!

- 2023.06.16: ICCV ARCTIC challenge starts!

- 2023.05.04: ARCTIC dataset with code for dataloaders, visualizers, models is officially announced (version 1.0)!

- 2023.03.25: ARCTIC ☃️ dataset (version 0.1) is available! 🎉

Invited talks/posters at CVPR 2023:

- 4D-HOI workshop: Keynote

- Ego4D + EPIC workshop: Oral presentation

- Rhobin workshop: Poster

- 3D scene understanding: Oral presentation

Features:

- Instructions to download the ARCTIC dataset.

- Scripts to process our dataset and to build data splits.

- Rendering scripts to render our 3D data into RGB, depth, and segmentation masks.

- A viewer to interact with our dataset.

- Instructions to set up data, code, and environment to train our baselines.

- A generalized codebase to train, visualize and evaluate the results of ArcticNet and InterField for the ARCTIC benchmark.

- A viewer to interact with the prediction.

Get a copy of the code:

git clone https://github.com/zc-alexfan/arctic.git

- Set up the environment: see docs/setup.md

- Download and visualize the ARCTIC dataset: see docs/data/README.md

- Training and evaluating our ARCTIC baselines: see docs/model/README.md

- Evaluation on the test set: see docs/leaderboard.md

- FAQ: see docs/faq.md

See LICENSE.

Citation:

@inproceedings{fan2023arctic,

title = {{ARCTIC}: A Dataset for Dexterous Bimanual Hand-Object Manipulation},

author = {Fan, Zicong and Taheri, Omid and Tzionas, Dimitrios and Kocabas, Muhammed and Kaufmann, Manuel and Black, Michael J. and Hilliges, Otmar},

booktitle = {Proceedings IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023}

}

Our paper benefits a lot from aitviewer. If you find our viewer useful, please consider citing aitviewer to acknowledge their hard work:

@software{kaufmann_vechev_aitviewer_2022,

author = {Kaufmann, Manuel and Vechev, Velko and Mylonopoulos, Dario},

doi = {10.5281/zenodo.1234},

month = {7},

title = {{aitviewer}},

url = {https://github.com/eth-ait/aitviewer},

year = {2022}

}

Constructing the ARCTIC dataset is a huge effort. The authors deeply thank: Tsvetelina Alexiadis (TA) for trial coordination; Markus Höschle (MH), Senya Polikovsky, Matvey Safroshkin, Tobias Bauch (TB) for the capture setup; MH, TA and Galina Henz for data capture; Priyanka Patel for alignment; Giorgio Becherini and Nima Ghorbani for MoSh++; Leyre Sánchez Vinuela, Andres Camilo Mendoza Patino, Mustafa Alperen Ekinci for data cleaning; TB for Vicon support; MH and Jakob Reinhardt for object scanning; Taylor McConnell for Vicon support and data cleaning coordination; Benjamin Pellkofer for IT/web support; Neelay Shah, Jean-Claude Passy, Valkyrie Felso for the evaluation server. We also thank Adrian Spurr and Xu Chen for insightful discussion. OT and DT were supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.

For technical questions, please create an issue. For other questions, please contact [email protected].

For commercial licensing, please contact [email protected].