Inspired by our first APS360 lecture, in which we were shown a pigeon identifying cancerous cells [1], we designed a project to use machine learning (ML) to classify complex cancer histopathological images. AICancer is an algorithm that differentiates between 5 classes of cancer images: first between colon and lung cell images, then between malignant and benign cells within these categories, and then finally into specific types of malignant cells.

Credits:

- https://github.com/sheralskumar

- https://github.com/anna-cog

- https://github.com/justin13601

- https://github.com/TE-Harrison

Disclaimer: This is purely an educational project. The information in this repository is not intended or implied to be a substitute for professional medical diagnoses. All content, including text, graphics, images and information, contained in or available through this repository is for educational purposes only.

Dependencies:

- NumPy

- PyTorch

- Matplotlib

- Kaggle

- Split-folders

- Torchsummary

- Torchvision

- Pillow

Features:

- Uses PyTorch Library

- 4 Convolutional Neural Network Models

- Early Stopping via Checkpoint Files

- Histopathological Images Input in .jpeg or .png Format

- Classification of 5 Cancer Cell Classes

- Python 3.6.9

Install necessary packages:

```shell
pip install -r requirements.txt
```

Edit start.py to include histopathological image path:

```python
...
img = r'dataset\demo\lungn10.png'  # raw string keeps the Windows backslashes literal
...
```

Run start.py:

```shell
python start.py
```

Console output:

```
>>> Lung: Benign
```

- Overview

- Project Illustration

- Background & Related Work

- Data & Data Processing

- Model Architectures

- Baseline Model

- Quantitative Results

- Qualitative Results

- Model Evaluation

- Discussion

- Ethical Considerations

- Project Presentation

- References

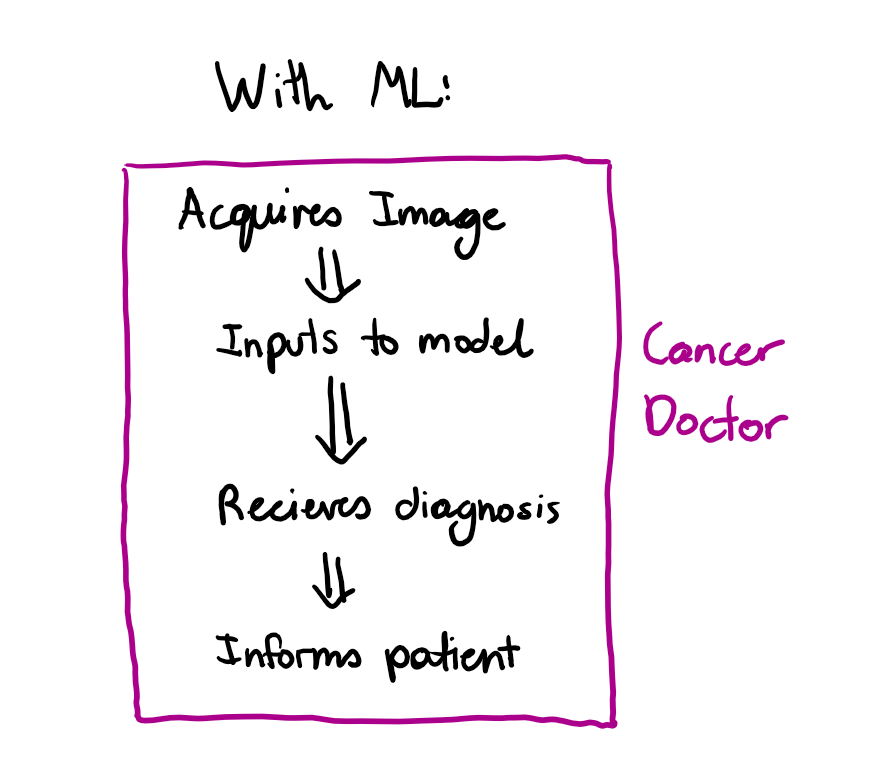

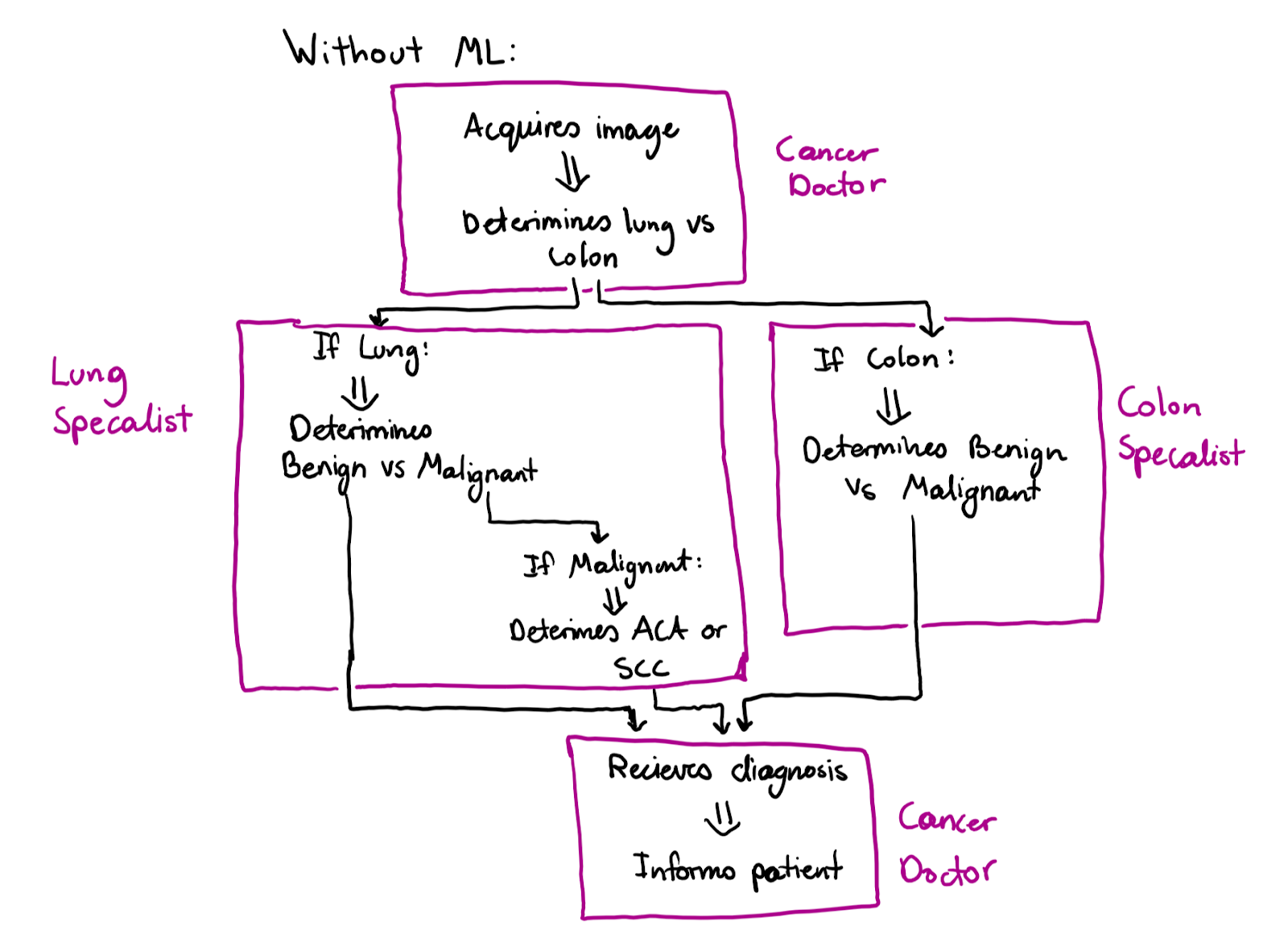

The current lung and colon cancer diagnosis process requires a doctor to take multiple steps in testing, consulting lung and colon specialists, and receiving secondary opinions before arriving at a complete diagnosis. In our project, a user would acquire and input a histopathological lung or colon image into the model and receive a full diagnosis of cell type, cancerous status, and type of malignancy if applicable.

ML streamlines the process by automating intermediate steps to give one complete output diagnosis. Users also need not be doctors: assistants can run the model and relay its output to the primary doctor for analysis. In this way, our model acts as a ‘second opinion’ for medical professionals.

Table 1: Benefits of Using ML for Cancer Cell Classification

We chose to classify lung and colon cells because there is an abundance of data on which to train and test our model. Doctors may also not be the ones who extract images from a patient, and a classifier that sorts images by organ can reduce misunderstandings in these cases. Further, users can input multiple images in a batch (perhaps each from a different patient) without having to manually sort them or remember their organ types beforehand. Both organs can also be affected by adenocarcinoma, which adds complexity to the problem; with an organ differentiation step, we hope to minimize cases where the cancer is diagnosed correctly but the organ is misclassified. With sufficient data we hope to expand classification to other organs; however, we believe the chosen classes illustrate the potential of ML in this setting.

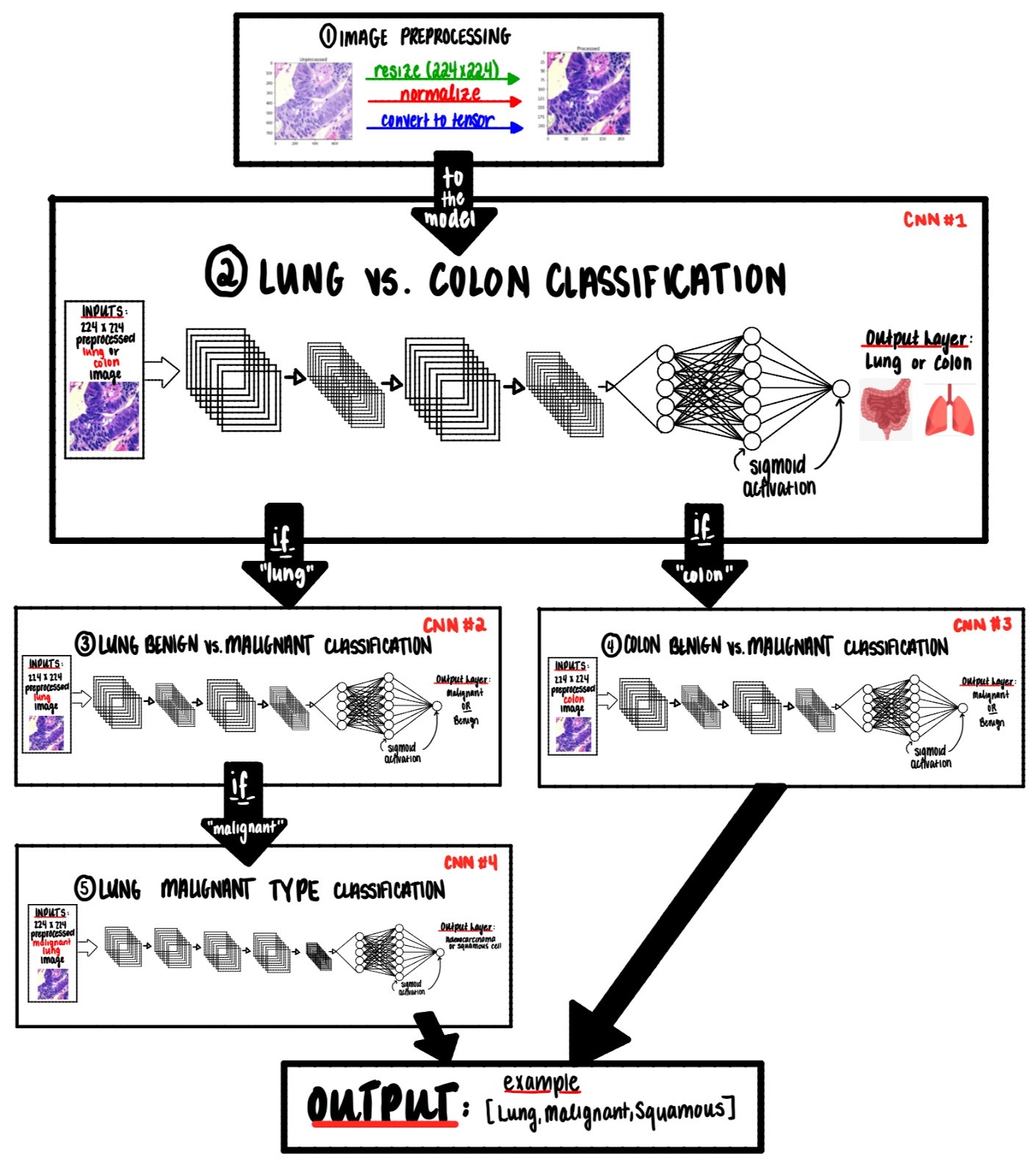

Figure 1: Project Illustration - Model Figures

ML is an evolving technology that is proving to be increasingly useful in cancer diagnosis. Algorithms are being created for some of the most common forms of the disease: brain, prostate, breast, lung, bone, skin and more [2].

Recently, a new project received praise for its ability to detect cancerous tumours highly effectively [3]. The initiative was led by Google Health and Imperial College London in an effort to use technology to enhance breast cancer screening [3]. The algorithm was created using a sample set of 29,000 mammograms and was tested against the judgement of professional radiologists [3]. It outperformed a single radiologist and performed on par with a two-person team [3].

The benefits of an algorithm like this one are highly attractive because it saves time and can assist healthcare systems that lack radiologists [3]. In theory, this algorithm could supplement the opinion of one radiologist to obtain optimal results [3].

Our project has a similar goal of using AI to make decisions about the presence of cancer in scans. The success of this breast cancer algorithm shows that ML is capable of completing this task with remarkable results.

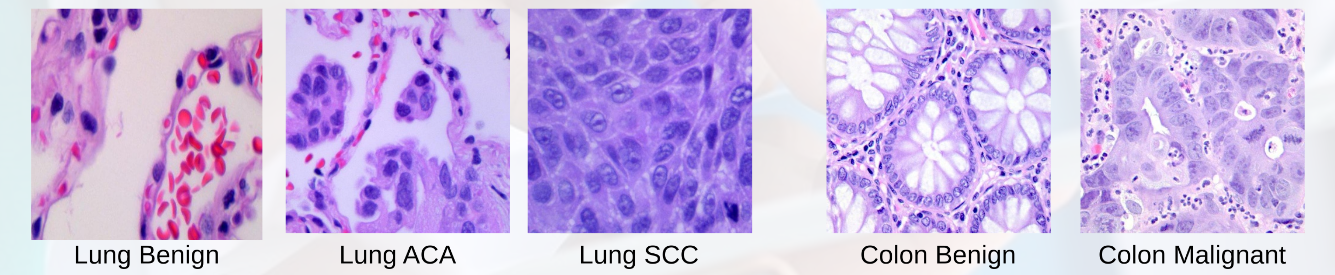

The data used is from Kaggle [4]. There are 5 classes of data: 2 colon types (benign and malignant adenocarcinoma) and 3 lung types (benign, malignant adenocarcinoma (ACA), and malignant squamous cell carcinoma (SCC)).

Figure 2: Data Visualization - Example from Each Class

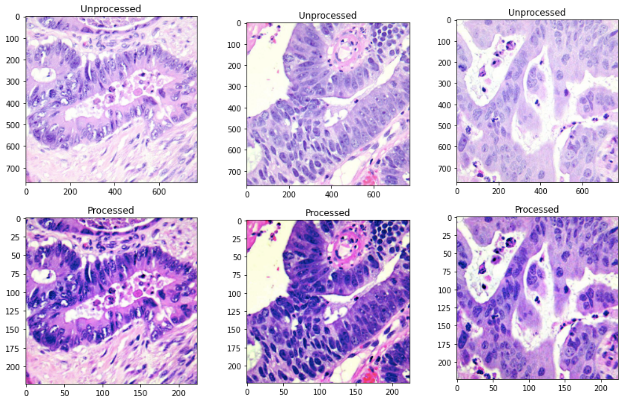

This dataset consists of 250 images of each class, which were pre-augmented to 5,000 of each class (total of 25,000 images) [4]. We normalized the pixel intensity of the images to the [0,1] range and images were resized to 224x224 pixels for consistency and to reduce load on our model. Finally, images were transformed to tensors.

Figure 3: Data Visualization - Unprocessed vs. Processed

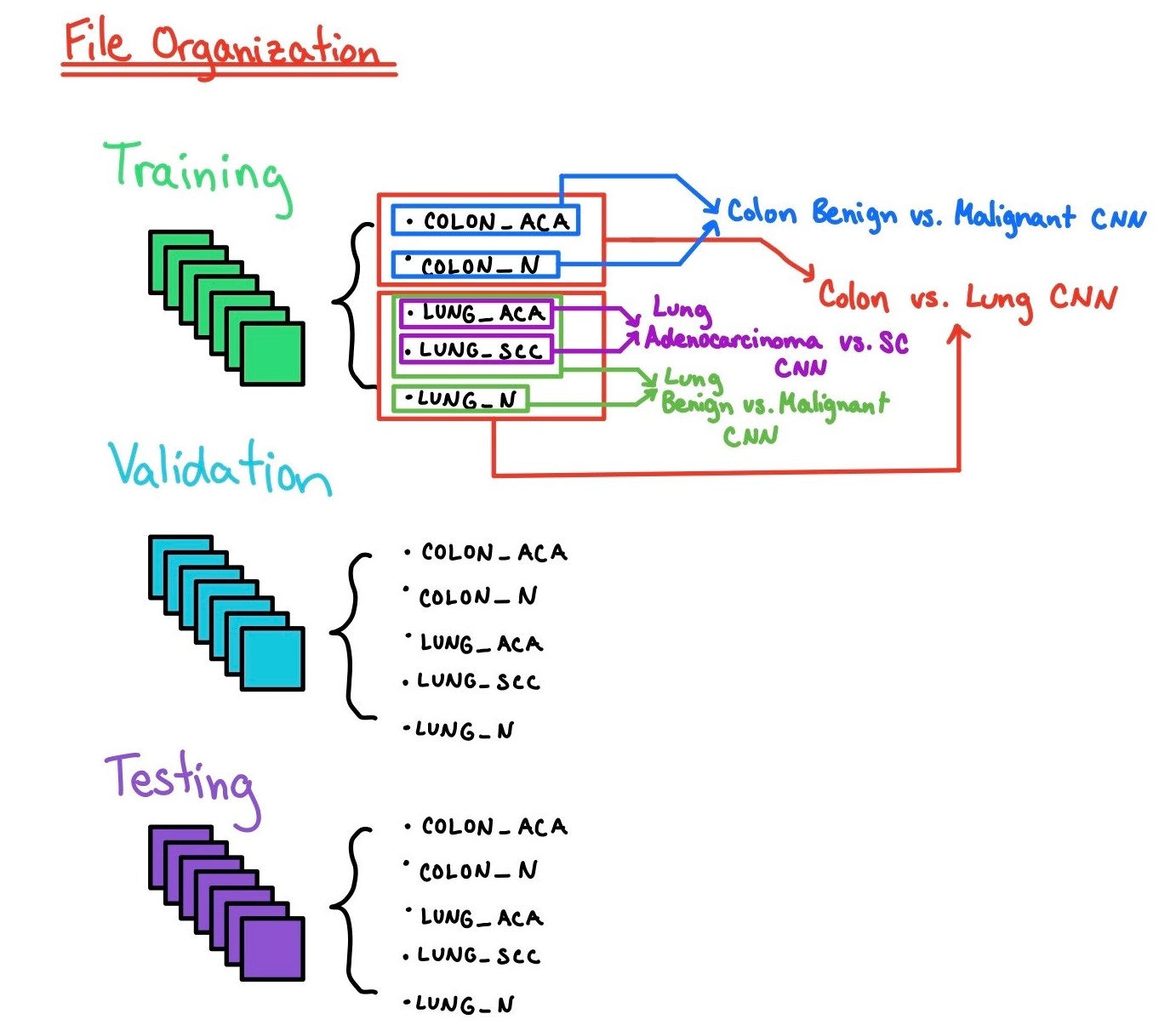

As the dataset had been heavily preprocessed beforehand, our main processing tasks were splitting and sorting the data. Our classifier is made of 4 linked CNNs, so we needed to ensure that:

- Each CNN had an appropriate dataset for its classification job

- All CNNs had a balanced dataset

- All CNNs were trained on a subset of the same training set

- All CNNs were individually tested on a subset of the same testing set, which NONE of them had seen before

- The entire model of linked CNNs was completely tested on a new set that no individual CNN had seen before in training, validation, or individual testing

To achieve this, we split the dataset into training, validation, individual testing, and overall testing sets with the ratio 70 : 15 : 7.5 : 7.5. We created individual model datasets from these, as shown below:

Figure 4: Data Split per Model

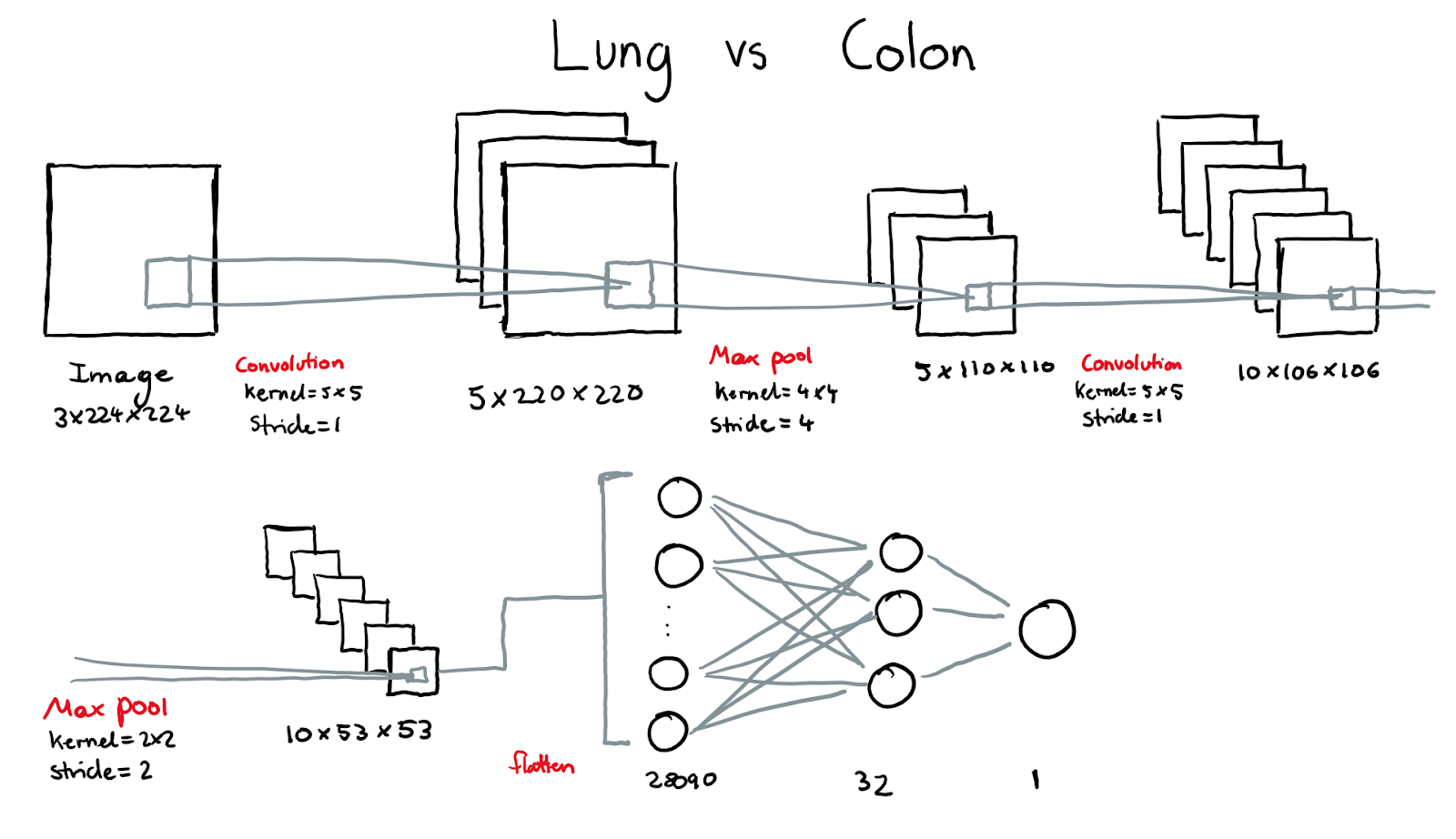

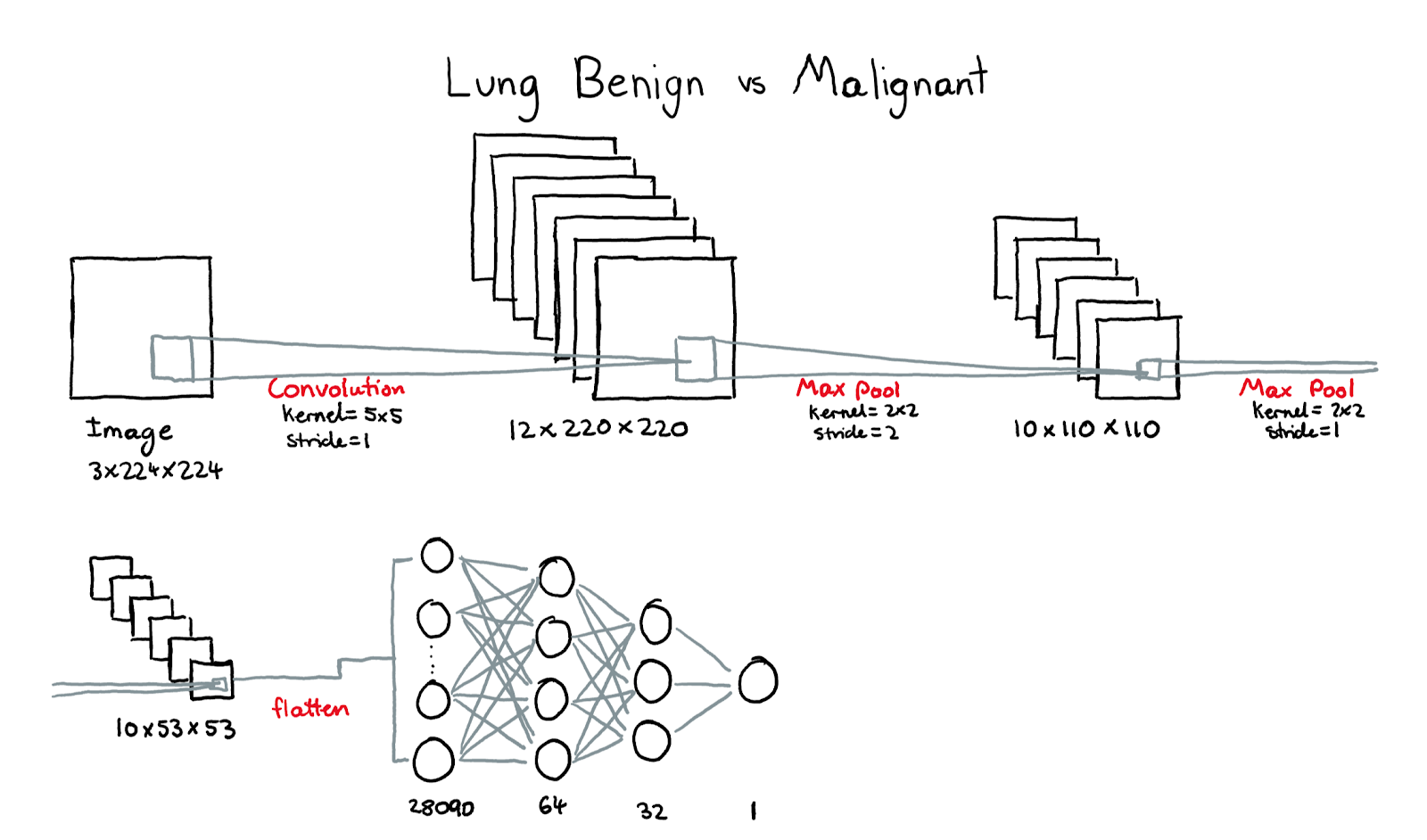

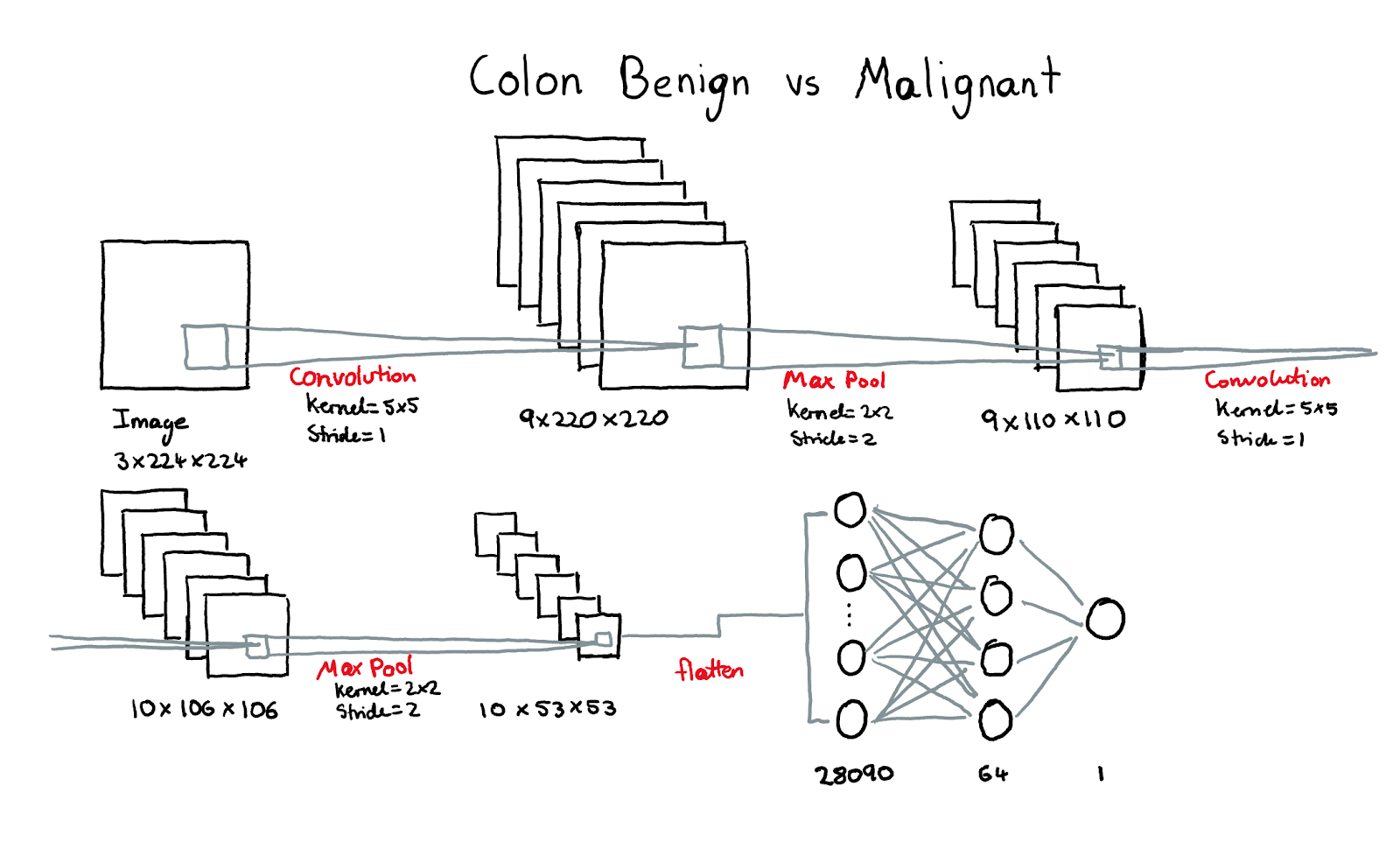

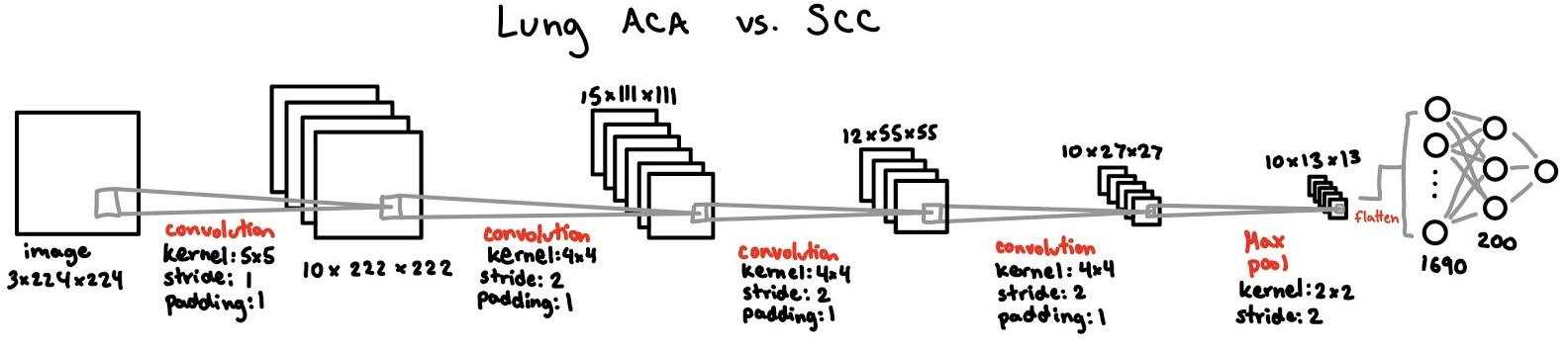
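The 70 : 15 : 7.5 : 7.5 split described above can be sketched in plain Python. This is a hypothetical helper (`split_four_ways` is not from the repository), and applying it separately to each class folder, so that every split stays balanced, is an assumption about how the sets were built.

```python
import random


def split_four_ways(items, ratios=(0.70, 0.15, 0.075, 0.075), seed=0):
    """Shuffle items and split them into train / val / individual-test / overall-test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    items = list(items)
    random.Random(seed).shuffle(items)  # deterministic shuffle for reproducibility
    n = len(items)
    splits, start = [], 0
    for i, r in enumerate(ratios):
        # the last split takes the remainder so no item is lost to rounding
        end = n if i == len(ratios) - 1 else start + round(r * n)
        splits.append(items[start:end])
        start = end
    return splits
```

For one class of 5,000 images this yields sets of 3,500, 750, 375 and 375 images; with a fixed seed the four sets are disjoint and reproducible.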

The architecture consists of four separate binary CNNs. Each CNN takes in one preprocessed 224x224 image and outputs either a zero or a one, depending on the class determined by the model. The first CNN distinguishes between lung and colon scans. If the image is found to be a lung scan, it is passed into CNN #2, which distinguishes between malignant and benign lung cells. If it is found to be a colon scan, it is passed into CNN #3, which distinguishes between malignant and benign colon cells. Finally, images classified as malignant lung cells are passed into CNN #4, which classifies the type of lung cancer: adenocarcinoma or squamous cell carcinoma.
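The routing logic of the tree can be sketched as a plain function. This is an illustration under stated assumptions: each `cnnN` argument stands in for a trained model wrapped to return 0 or 1, and the mapping of 0/1 to classes is hypothetical. Only the `Lung: Benign` label format is taken from the console output above.

```python
from typing import Any, Callable

# Each stage is a binary classifier returning 0 or 1.
# The meaning assigned to each output below is an assumption for illustration.
BinaryModel = Callable[[Any], int]


def classify(image, cnn1: BinaryModel, cnn2: BinaryModel,
             cnn3: BinaryModel, cnn4: BinaryModel) -> str:
    """Route one image through the tree of binary CNNs and return a label."""
    if cnn1(image) == 0:                 # assumed: 0 = lung, 1 = colon
        if cnn2(image) == 0:             # assumed: 0 = benign, 1 = malignant
            return "Lung: Benign"
        # only malignant lung images reach CNN #4 (assumed: 0 = ACA, 1 = SCC)
        if cnn4(image) == 0:
            return "Lung: Adenocarcinoma"
        return "Lung: Squamous Cell Carcinoma"
    # colon branch: CNN #3 decides benign vs. malignant (assumed: 0 = benign)
    return "Colon: Benign" if cnn3(image) == 0 else "Colon: Adenocarcinoma"
```

Because CNN #3 and CNN #4 are only called on their own branches, an error in CNN #1 sends an image down the wrong branch entirely, which is why the first stage's accuracy matters most.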

The CNN architecture was chosen for its invariance properties [5]. Since there is a high level of variance between images, such as differences in cell size, orientation and location, we require a model that can identify more complex patterns that may be present [5].

Each CNN was trained, validated and tested using code adapted from APS360 Lab 2 [11].
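Training with a checkpoint saved every epoch and the best one restored afterwards (the early-stopping-via-checkpoint-files feature listed above) might look like the sketch below. The loss (`BCEWithLogitsLoss` on a single output logit), the SGD optimizer with momentum 0.9, and the `epoch_N.pt` file names are assumptions rather than details confirmed by this README; per-network values such as the batch size and learning rate are listed in Table 2.

```python
import os

import torch
import torch.nn as nn


def evaluate(net, loader):
    """Fraction of correct predictions; a logit > 0 means class 1 (sigmoid > 0.5)."""
    net.eval()
    correct = total = 0
    with torch.no_grad():
        for imgs, labels in loader:
            preds = (net(imgs).squeeze(1) > 0).long()
            correct += (preds == labels.long()).sum().item()
            total += labels.numel()
    return correct / total


def train_with_checkpoints(net, train_loader, val_loader, epochs, lr, ckpt_dir):
    """Train a binary CNN, checkpoint every epoch, then reload the best epoch."""
    criterion = nn.BCEWithLogitsLoss()  # assumed loss for a single-logit output
    optimizer = torch.optim.SGD(net.parameters(), lr=lr, momentum=0.9)
    os.makedirs(ckpt_dir, exist_ok=True)
    best_epoch, best_acc = 0, -1.0
    for epoch in range(epochs):
        net.train()
        for imgs, labels in train_loader:
            optimizer.zero_grad()
            loss = criterion(net(imgs).squeeze(1), labels.float())
            loss.backward()
            optimizer.step()
        acc = evaluate(net, val_loader)
        torch.save(net.state_dict(), os.path.join(ckpt_dir, f"epoch_{epoch}.pt"))
        if acc > best_acc:
            best_epoch, best_acc = epoch, acc
    # early stopping after the fact: restore the weights with the best validation accuracy
    net.load_state_dict(torch.load(os.path.join(ckpt_dir, f"epoch_{best_epoch}.pt")))
    return best_epoch, best_acc
```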

| Model | Batch Size | Learning Rate | # of Epochs | # of Convolution Layers | # of Pooling Layers | # of Fully Connected Layers |
|---|---|---|---|---|---|---|
| CNN 1: Lung vs. Colon | 256 | 0.001 | 14 | 2 | 2 | 2 |
| CNN 2: Lung Benign vs. Malignant | 150 | 0.01 | 9 | 2 | 2 | 2 |
| CNN 3: Colon Benign vs. Malignant | 256 | 0.001 | 14 | 2 | 2 | 2 |
| CNN 4: Lung SCC vs. ACA | 64 | 0.0065 | 13 | 4 | 1 | 2 |

Table 2: Finalized Model Hyperparameters for Each Convolutional Neural Network
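For CNNs 1 to 3, Table 2 lists two convolution layers, two pooling layers and two fully connected layers. A PyTorch module with that layer count for 224x224 RGB input might look like this; the channel counts, kernel sizes and hidden width are hypothetical, since the exact values appear only in the architecture figures.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BinaryCNN(nn.Module):
    """2 conv + 2 max-pool + 2 fully connected layers, one output logit.

    Channel counts, kernel sizes and the hidden width are illustrative assumptions.
    """

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 5, kernel_size=5)   # 3x224x224 -> 5x220x220
        self.conv2 = nn.Conv2d(5, 10, kernel_size=5)  # 5x110x110 -> 10x106x106
        self.pool = nn.MaxPool2d(2, 2)                # halves height and width
        self.fc1 = nn.Linear(10 * 53 * 53, 32)
        self.fc2 = nn.Linear(32, 1)                   # single logit for the binary decision

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.pool(F.relu(self.conv1(x)))  # -> 5x110x110
        x = self.pool(F.relu(self.conv2(x)))  # -> 10x53x53
        x = x.flatten(1)                      # keep the batch dimension
        x = F.relu(self.fc1(x))
        return self.fc2(x)
```

Since the module outputs one logit per image, the predicted class is `(net(x) > 0).long()`, which pairs naturally with a sigmoid-based binary loss.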

Figure 5: CNN #1 - Lung vs. Colon Architecture

Figure 6: CNN #2 - Lung Benign vs. Malignant Architecture

Figure 7: CNN #3 - Colon Benign vs. Malignant Architecture

Figure 8: CNN #4 - Lung Malignant SCC vs. ACA Architecture

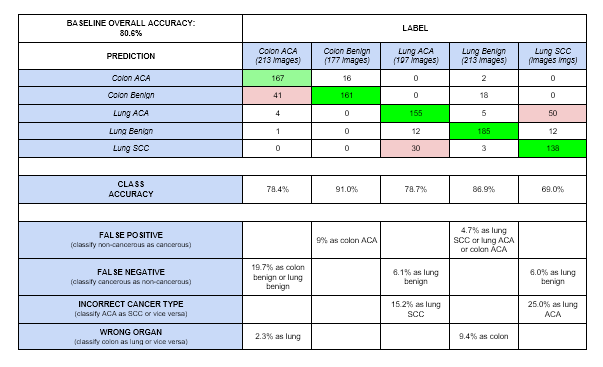

A random forest classifier was selected as the baseline [12]. It used 1,000 estimators and was trained on 7,000 images from the training set. Next, 1,000 images from the validation set were run through the classifier, and the model achieved 80.6% accuracy. This model showed several weaknesses, including classifying 19.7% of colon ACA images as benign and 25.0% of lung SCC images as lung ACA. Overall, the baseline often confused the two cancerous lung subtypes, and its high false negative rate made it unsuitable for use.
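The baseline can be sketched with scikit-learn's `RandomForestClassifier`. This assumes each image is flattened into a vector of raw pixel values (in practice likely downsampled first, to keep 1,000 trees tractable); the feature representation and helper names are hypothetical, not taken from the project.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def to_features(images):
    """Flatten each image array (H x W x C) into one feature vector per image."""
    return np.stack([np.asarray(img, dtype=np.float32).ravel() for img in images])


def fit_baseline(train_images, train_labels, n_estimators=1000, seed=0):
    """Fit a random forest on raw pixel features (hypothetical baseline sketch)."""
    clf = RandomForestClassifier(n_estimators=n_estimators, random_state=seed, n_jobs=-1)
    clf.fit(to_features(train_images), train_labels)
    return clf


def accuracy(clf, images, labels):
    """Fraction of images whose predicted class matches the label."""
    return float((clf.predict(to_features(images)) == np.asarray(labels)).mean())
```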

Table 3: Baseline Model Confusion Matrix Analysis

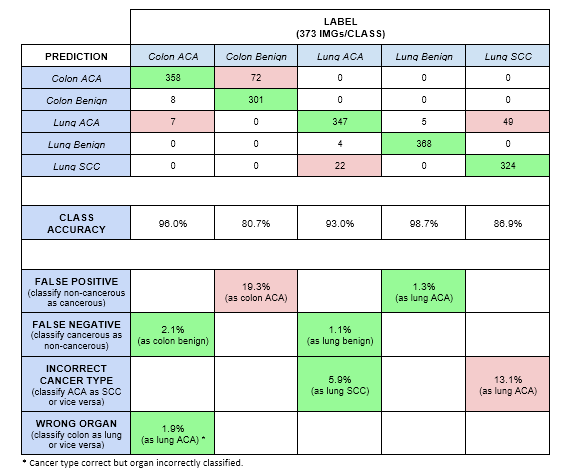

Table 4: Overall Model Confusion Matrix Analysis

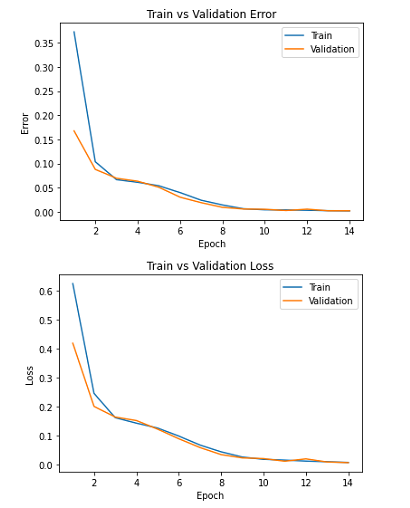

Performance of the model was reasonably good overall, with the best results in the lung benign, lung adenocarcinoma and colon adenocarcinoma classes. The overall model accuracy was 91.05%. The model had low error in differentiating lung and colon images, and accuracy in distinguishing malignant from benign lung cells was also high. Since a separate CNN was used for each step of the classification process, each one could be given the hyperparameters and architecture best suited to its classification job.

The accuracies of the upstream networks (CNN #1 and CNN #2) are very high, and most error occurred in the downstream networks (CNN #3 and CNN #4), whose outputs are final rather than passed on to another CNN. Because few images are misrouted by the early stages, little error propagates through the tree.

| Model | Training Accuracy | Validation Accuracy | Testing Accuracy |
|---|---|---|---|
| CNN 1: Lung vs. Colon | 99.99% | 99.99% | 99.99% |
| CNN 2: Lung Benign vs. Malignant | 99.99% | 99.5% | 99.3% |
| CNN 3: Colon Benign vs. Malignant | 100% | 94.8% | 96.1% |
| CNN 4: Lung SCC vs. ACA | 96.0% | 90.1% | 89.5% |

Table 5: Training, Validation, & Testing Accuracies for Each Convolutional Neural Network
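A rough way to see why concentrating errors in the downstream networks limits propagation: if each stage's errors are assumed independent, the chance that an image is handled correctly along its whole path is roughly the product of the stage accuracies. This is a back-of-the-envelope sketch using the testing accuracies in Table 5, not how the overall 91.05% figure was computed; the dictionary names are hypothetical.

```python
from functools import reduce
from operator import mul

# Testing accuracies from Table 5
TEST_ACC = {"cnn1": 0.9999, "cnn2": 0.993, "cnn3": 0.961, "cnn4": 0.895}

# Stages each class passes through in the tree of binary CNNs
PATHS = {
    "lung benign": ["cnn1", "cnn2"],
    "lung ACA": ["cnn1", "cnn2", "cnn4"],
    "lung SCC": ["cnn1", "cnn2", "cnn4"],
    "colon benign": ["cnn1", "cnn3"],
    "colon ACA": ["cnn1", "cnn3"],
}


def path_accuracy(stages):
    """Rough estimate: product of stage accuracies, assuming independent errors."""
    return reduce(mul, (TEST_ACC[s] for s in stages), 1.0)
```

For a malignant lung image this gives 0.9999 × 0.993 × 0.895 ≈ 0.889, so nearly all of that path's expected error comes from CNN #4 rather than from the stages before it.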

False negative results for both colon and lung were also low, indicating that the model would rarely miss a cancerous scan. This is important, as a false negative could cause the patient to seek treatment much later, when the cancer is more serious. Our model rarely makes this critical error.
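False negative and false positive rates can be computed per binary decision instead of relying on aggregate accuracy. A minimal sketch, assuming labels are encoded as 1 = malignant and 0 = benign (an illustrative convention, not necessarily the repository's); `binary_rates` is a hypothetical helper.

```python
import numpy as np


def binary_rates(y_true, y_pred, positive=1):
    """Return (false negative rate, false positive rate) for one binary task.

    With positive = malignant, a false negative is a malignant scan predicted benign.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    pos = y_true == positive
    neg = ~pos
    fn = np.sum(pos & (y_pred != positive))  # malignant scans the model missed
    fp = np.sum(neg & (y_pred == positive))  # benign scans flagged as malignant
    fnr = fn / pos.sum() if pos.any() else 0.0
    fpr = fp / neg.sum() if neg.any() else 0.0
    return float(fnr), float(fpr)
```

For example, with toy labels (not project results) `binary_rates([1, 1, 0, 0], [1, 0, 1, 0])` returns `(0.5, 0.5)`.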

Drawbacks include a tendency to give a false positive result on colon images. The model frequently misdiagnosed healthy colon scans as having cancer. This could result in a healthy patient getting an additional scan or biopsy, which could be expensive or uncomfortable. Although this is less serious than false negative classification, it could have detrimental effects.

Additionally, the model occasionally confused the cancerous lung subtypes, although significantly less frequently than the baseline model. This could potentially lead the patient to pursue a treatment which is not appropriate. This is why this model is only intended to be used with a doctor at this stage; although the results obtained are very favourable, it is not a replacement for a medical professional.

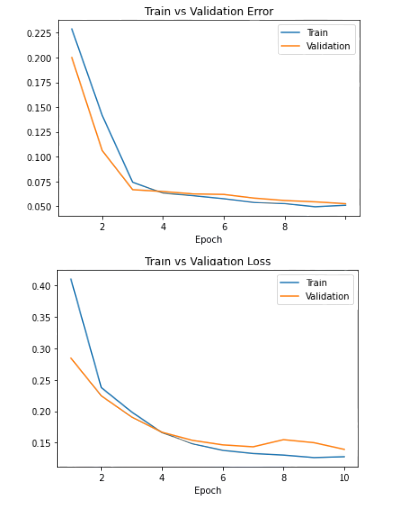

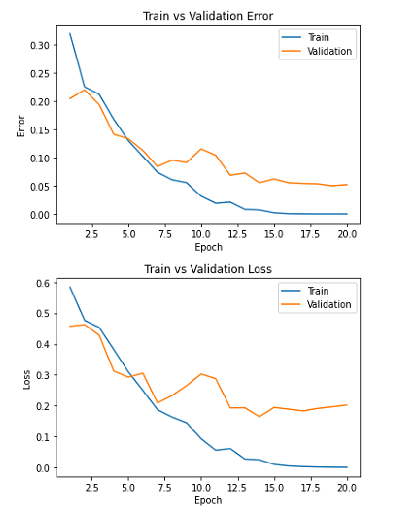

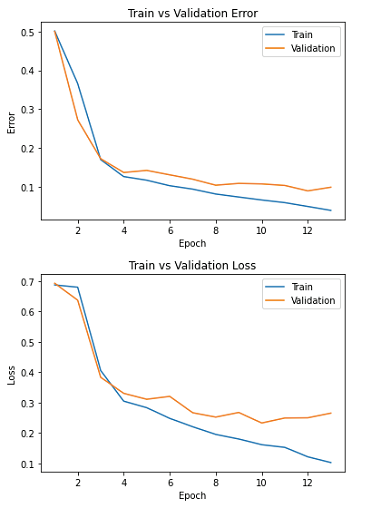

Panels: CNN #1, CNN #2, CNN #3, and CNN #4 error/loss training curves.

Table 6: Error/Loss Training Curves for Each Convolutional Neural Network

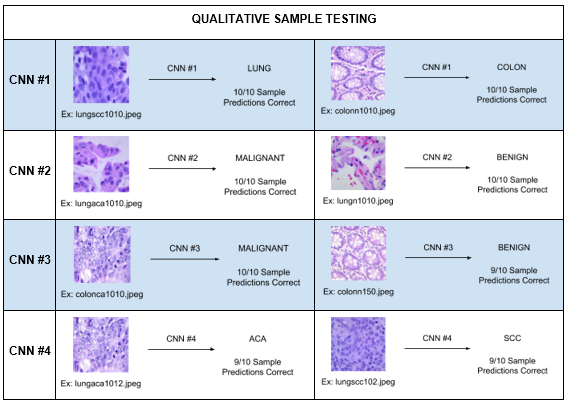

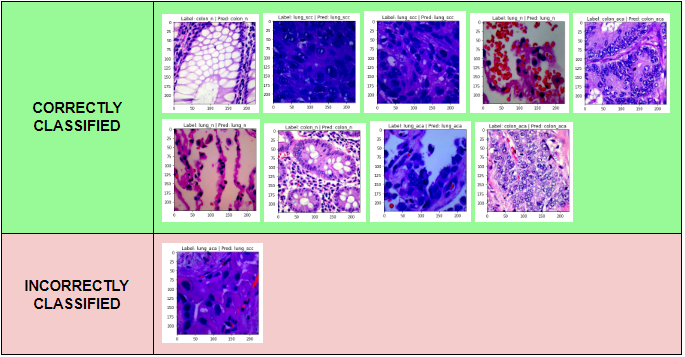

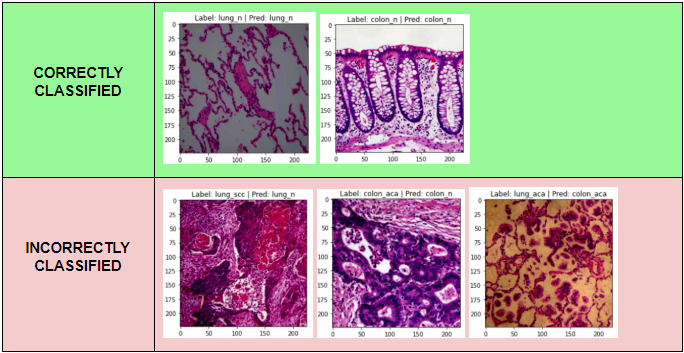

Table 7: Qualitative Sample Testing

In Table 7, we see that the model performs worst on benign colon scans. We expected this, as CNN #3 performed worse on benign images in sample testing. Further, the model classifies benign lung images best. This makes sense, as these images only pass through CNN #1 and CNN #2, which had nearly perfect testing and sample accuracy.

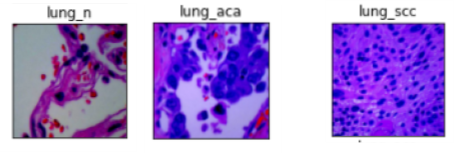

Benign colon scans are often classified as malignant, giving a high false positive rate for colon inputs. By comparison, benign lung scans are rarely classified as malignant, and if they are, they are only classified as ACA. Conversely, only lung ACA cells are mistakenly classified as benign (false negative). Looking at samples of these images shows why: some lung ACA samples have red organelles, similar pink colours, and negative space like benign images, whereas SCC images are overcrowded with dark blue cells.

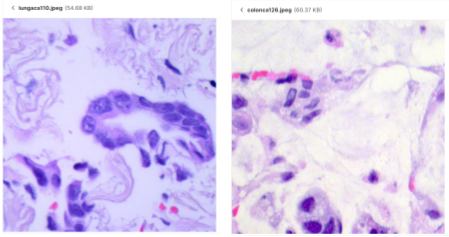

Figure 9: Data Visualization - Lung Samples

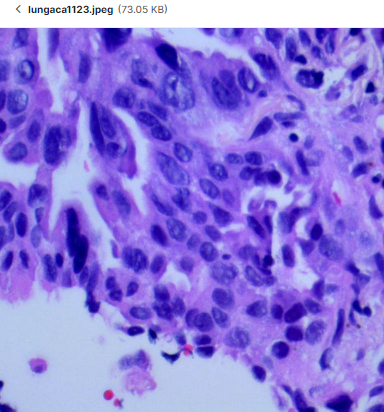

The model often confuses the malignant lung subtypes, and it is more likely to classify SCC images as ACA than the reverse. The confusion is understandable when looking at the following sample, which shows overcrowding and little negative space:

Figure 10: Data Visualization - lungaca1123.png

Although lung vs. colon classification is nearly perfect, our model failed on several occasions by classifying colon ACA as lung ACA. Colon and lung cells generally look very different, but samples that lack defined cell edges can look similar. Since the converse error does not occur, some features of colon ACA cells likely resemble those of lung cells.

Figure 11: Data Visualization - Lung ACA Sample vs. Colon ACA Sample

To show the model's performance on new data, a demo set containing 10 images was used. Due to the nature of this problem, it was difficult to acquire completely new data, so we were restricted to a subset of the original images (a holdout set). As our model performed well on these images, it appears capable of generalizing to unseen images, provided they are of the same style.

Table 8: Classification of Dataset Images

A concern with using images from the same dataset is that the dataset was augmented from 1,250 original images. As we cannot identify which images were augmented, it is likely that the testing images were not completely unique. This limits our ability to determine the model's performance on completely new data: although augmentation is likely sufficient to keep the model from recognising a specific image, the style and features of the images remain very similar.

To further validate our model, we input 1 image of each class found on Google Images [6][7][8][9]. It correctly classified 2 of the 5 samples: the lung and colon benign images. The model correctly differentiated between lung and colon images 80% of the time, but struggled with the individual classes. As it was difficult to find credible images, we were unable to determine the method through which the scans were taken and prepared. However, it is likely that the process differed from the one used to prepare the images in our dataset, which can be visually confirmed by the significant differences in these images from our original ones.

Table 9: Classification of Google-Sourced Images

Performance on correctly prepared images showed that the model can classify new data, provided the data is prepared the same way as the images in the training dataset. Because our model was trained on one dataset, it has learned to identify only one method of scan acquisition and processing, which limits its performance. However, the architecture and concepts discussed could be generalized if more credible data becomes available.

Using ML to complement medical diagnoses is a complicated and risky process. While false positive results can lead to further error, false negative predictions can prove fatal to a patient’s health and wellbeing. For example, if CNN #2 or CNN #3 incorrectly classified a malignant image as benign in practice, the consequences for the patient's survival could be catastrophic.

As with most ML models, accuracy is the focal point. On a test dataset of 1,865 images, the algorithm achieved 91.05% accuracy, a successful result compared with the baseline. However, the remaining 8.95% of the test set, amounting to 167 images, were misclassified, which is extremely significant in medicine.

Because even the smallest error is significant, it is important to note that at this stage any ML model should only be used in addition to a medical professional’s expertise. Used in conjunction with the knowledge of doctors, our algorithm could perhaps help bring misdiagnoses closer to 0%.

Figure 12: AI in Medicine [10]

Given our unique approach to the problem, it is important to address the benefits of an algorithm with 4 binary neural networks rather than one traditional multi-class CNN. As part of our project investigation, we designed a multi-class classifier to justify our decision.

We noticed several shortcomings of the multi-class classifier: notably, its memory- and time-intensive training (since it works with significantly more images divided into 5 classes), as well as lower accuracy (it initially achieved only 65%). Having 4 individual binary networks allows for precise tuning at each stage of the algorithm. In addition, having a high-accuracy model that differentiates between organs as the first step significantly reduces misclassification between cancers of similar types (lung ACA vs. colon ACA).

A tree of models also permits flexibility for future development. Perhaps CNN #1 could be modified to include the classification of other key organs of the body, or CNN #4 could include small cell lung cancer (SCLC) in addition to non-small cell lung cancer (NSCLC) images (ACA and SCC). Finally, multiple models allow users to selectively “enable” classification functions to best cater to their specific needs.

Through the development of this repository, we’ve realized the impact of having preprocessed data. While prepared data may be perfect for training or validating a model, that same model would not perform as well on data that is processed differently (prepared with different dyes etc.). In addition, aggregate accuracy values are often misleading, and false negative/positive values are a more precise way to identify weaknesses in a model. Lastly, we’ve acknowledged that a traditional approach to a problem is not always the best one, as in our case separate binary models performed better than a multi-class classifier.

Ethical consequences of this algorithm are far-reaching, as the data is highly sensitive and the output can directly impact patients. The scans used to train, validate and test this model must be anonymous and consent must be obtained from all patients before the scans are used.

This algorithm should not be blindly trusted to make serious health diagnoses without the consultation of an experienced doctor. Trusting an algorithm to make health decisions is unethical, as it has limited context in which to make decisions (i.e. one scan only, limited to only those types of cancer) and will have limited accuracy.

Once the algorithm is ready to use in a clinical or research setting, doctors should be provided with information on how it works and made aware of the risks. This will prevent professional judgement from becoming overly dependent on the program.

Ultimately, we are still a long way from depending solely on ML in the medical world, but each project brings us closer to revolutionizing health care.

[1] A. Szöllössi, “Pigeons classify breast cancer images,” BBC News, 20-Nov-2015. [Online]. Available: https://www.bbc.com/news/science-environment-34878151. [Accessed: 10-Aug-2020].

[2] N. Savage, “How AI is improving cancer diagnostics,” Nature News, 25-Mar-2020. [Online]. Available: https://www.nature.com/articles/d41586-020-00847-2. [Accessed: 13-Jun-2020].

[3] F. Walsh, “AI 'outperforms' doctors diagnosing breast cancer,” BBC News, 02-Jan-2020. [Online]. Available: https://www.bbc.com/news/health-50857759. [Accessed: 13-Jun-2020].

[4] A. A. Borkowski, M. M. Bui, L. B. Thomas, C. P. Wilson, L. A. DeLand, and S. M. Mastorides, “Lung and Colon Cancer Histopathological Image Dataset (LC25000),” [Dataset]. Available: https://www.kaggle.com/andrewmvd/lung-and-colon-cancer-histopathological-images. [Accessed: 18-May-2020].

[5] S. Colic, Class Lecture, Topic: “CNN Architectures and Transfer Learning.” APS360H1, Faculty of Applied Science and Engineering, University of Toronto, Toronto, Jun. 1, 2020.

[6] “Histology of the Lung,” YouTube, 2016.

[7] J. Stojšić, “Precise Diagnosis of Histological Type of Lung Carcinoma: The First Step in Personalized Therapy,” Lung Cancer - Strategies for Diagnosis and Treatment, 2018.

[8] V. S. Chandan, “Normal Histology of Gastrointestinal Tract,” Surgical Pathology of Non-neoplastic Gastrointestinal Diseases, pp. 3–18, 2019.

[9] Memorang, “Colon Cancer (MCC Exam #3) Flashcards,” Memorang. [Online]. Available: https://www.memorangapp.com/flashcards/92659/Colon_Cancer/. [Accessed: 10-Aug-2020].

[10] J. Voigt, “The Future of Artificial Intelligence in Medicine,” Wharton Magazine. [Online]. Available: https://magazine.wharton.upenn.edu/digital/the-future-of-artificial-intelligence-in-medicine/. [Accessed: 10-Aug-2020].

[11] "Lab_2_Cats_vs_Dogs," APS360, Faculty of Applied Science and Engineering, University of Toronto, Toronto, summer 2020. [.ipynb file]. Available: https://q.utoronto.ca/courses/155423/files/7477635/download?download_frd=1.

[12] F. Boyles, "Using Random Forests in Python with Scikit-Learn," Oxford Protein Informatics Group, 26-Jul-2017. [Online]. Available: https://www.blopig.com/blog/2017/07/using-random-forests-in-python-with-scikit-learn/. [Accessed: 10-Jul-2020].