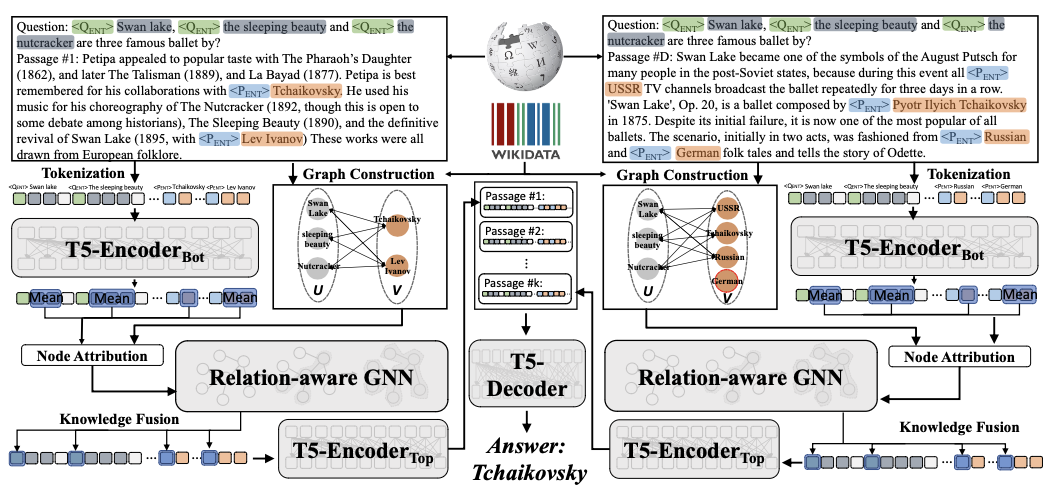

Hi all, this is the official repository for the EMNLP 2022 paper GRAPE 🍇: Knowledge Graph Enhanced Passage Reader for Open-domain Question Answering. Our paper can be found at [arXiv link]. We sincerely appreciate your interest in our project!

To run our experiments, first place the data folder in the current directory so that the repository is laid out as follows:

- GRAPE
  - README.md
  - train_reader.py
  - src
    - data.py
    - evaluation.py
    - model.py
    - ...
  - bash
    - script_multi_gpu.sh (multi-GPU training and evaluation)
  - data (download from the data source)
    - graphs
      - bin_files
      - ...
    - json_input
      - jsonl_input_data
      - relation_json
      - test_relation_json (to test the EM on the subset where examples are fact-enhanced)
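Before launching, it can help to confirm that the layout is in place. The sketch below is a hypothetical helper (missing_paths is not part of this repository) meant to be run from the GRAPE root; it assumes the relative paths in the tree above and checks only the graphs and json_input directories under data, since the individual file names inside them are not listed here.

```python
from pathlib import Path
from typing import List

# Relative paths copied from the layout above (assumption: run from the GRAPE root).
# Under data/ only the two directories are checked, because the individual graph
# and JSON file names are not spelled out in this README.
EXPECTED: List[str] = [
    "README.md",
    "train_reader.py",
    "src/data.py",
    "src/evaluation.py",
    "src/model.py",
    "bash/script_multi_gpu.sh",
    "data/graphs",
    "data/json_input",
]


def missing_paths(root: str = ".") -> List[str]:
    """Return every expected path that does not exist under `root` (hypothetical helper)."""
    base = Path(root)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("Layout looks complete.")
```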

With the directory structured as above, you can simply run:

cd bash

bash script_multi_gpu.sh # Multi-GPU

- For the multi-GPU version, set CUDA_VISIBLE_DEVICES and --nproc_per_node to match the GPUs you intend to use.

- Changing --n_context requires different graph files; this demo only supports --n_context 100.

- Ablation experiments can be run by setting --gnn_mode to "NO_RELATION" or "NO_ATTENTION".
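The options above have to agree with each other. As a rough illustration, here is a minimal sketch assuming that script_multi_gpu.sh starts train_reader.py via python -m torch.distributed.launch, as upstream FiD does; the real script may differ, and any other arguments train_reader.py requires (such as data paths) are omitted. build_launch_command is a hypothetical helper, not part of this repository.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Constraints stated in this README.
SUPPORTED_N_CONTEXT = 100                       # the provided graph files only cover 100 passages
ABLATION_MODES = ("NO_RELATION", "NO_ATTENTION")


def build_launch_command(
    gpu_ids: Sequence[int],
    per_gpu_batch_size: int,
    n_context: int = SUPPORTED_N_CONTEXT,
    gnn_mode: Optional[str] = None,
) -> Tuple[Dict[str, str], List[str]]:
    """Return (env, argv) for a hypothetical multi-GPU launch of train_reader.py."""
    if n_context != SUPPORTED_N_CONTEXT:
        raise ValueError("other --n_context values need different graph files")
    if gnn_mode is not None and gnn_mode not in ABLATION_MODES:
        raise ValueError(f"unknown ablation mode: {gnn_mode}")
    # CUDA_VISIBLE_DEVICES and --nproc_per_node must describe the same set of GPUs.
    env = {"CUDA_VISIBLE_DEVICES": ",".join(str(i) for i in gpu_ids)}
    argv = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpu_ids)}",
        "train_reader.py",
        "--n_context", str(n_context),
        "--per_gpu_batch_size", str(per_gpu_batch_size),
    ]
    # Only passed for ablations; otherwise train_reader.py's own default is used (assumption).
    if gnn_mode is not None:
        argv += ["--gnn_mode", gnn_mode]
    return env, argv


if __name__ == "__main__":
    env, argv = build_launch_command([0, 1, 2, 3], per_gpu_batch_size=1, gnn_mode="NO_RELATION")
    print("CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"], " ".join(argv))
```

Deriving both CUDA_VISIBLE_DEVICES and --nproc_per_node from a single list of GPU ids keeps the two settings from drifting apart when the number of GPUs changes.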

Detailed GPU memory consumption is reported in our paper: roughly 30GB for the large configuration with --per_gpu_batch_size set to 1, and 29GB for the base configuration with --per_gpu_batch_size set to 3.

The main libraries we utilize are:

- torch==1.11.0

- transformers==4.18.0

- dgl==0.8.2

- sentencepiece
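To compare an environment against these pins, the following sketch uses only the standard library (Python 3.8+). version_mismatches is a hypothetical helper; note that CUDA builds of DGL 0.8.x were published under distribution names such as dgl-cu113, so the "dgl" key may need adjusting for your install.

```python
from importlib import metadata
from typing import Dict, Optional

# Pins from the list above; sentencepiece has no pinned version.
PINNED: Dict[str, Optional[str]] = {
    "torch": "1.11.0",
    "transformers": "4.18.0",
    "dgl": "0.8.2",
    "sentencepiece": None,
}


def version_mismatches(pins: Dict[str, Optional[str]] = PINNED) -> Dict[str, str]:
    """Map each missing or mismatched distribution to a short description."""
    problems: Dict[str, str] = {}
    for name, wanted in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        # Wheels may carry a local suffix such as "+cu113"; compare the public part only.
        if wanted is not None and found.split("+")[0] != wanted:
            problems[name] = f"found {found}, expected {wanted}"
    return problems


if __name__ == "__main__":
    for name, problem in version_mismatches().items():
        print(f"{name}: {problem}")
```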

To reproduce our results, please download the data folder from this Google Drive: [drive link].

The data folder contains the JSON inputs as well as the DGL graphs. We are still cleaning the data pre-processing code, so it is not yet included in this repository; please contact the authors if you need it.
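Since the pre-processing code is not released, a quick way to see what the downloaded inputs look like is to peek at them directly. This is a minimal sketch under two assumptions: each line of the JSON input files is a single JSON object (JSON Lines), and the .bin files under data/graphs were written with dgl.save_graphs. peek_jsonl and peek_graphs are hypothetical helpers, and the record fields are not documented here, so the script only prints keys.

```python
import json
from itertools import islice
from typing import Any, List


def peek_jsonl(path: str, n: int = 3) -> List[Any]:
    """Parse the first `n` non-blank lines of a JSON Lines file."""
    with open(path, encoding="utf-8") as f:
        lines = (line for line in f if line.strip())  # skip blank lines
        return [json.loads(line) for line in islice(lines, n)]


def peek_graphs(path: str):
    """Load a DGL binary file; dgl.load_graphs returns (list_of_graphs, label_dict)."""
    import dgl  # imported lazily so peek_jsonl works without DGL installed

    graphs, labels = dgl.load_graphs(path)
    return graphs, labels


if __name__ == "__main__":
    import sys

    # Usage (placeholder path): python peek_data.py data/json_input/<file>
    for record in peek_jsonl(sys.argv[1]):
        print(sorted(record) if isinstance(record, dict) else type(record).__name__)
```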

We have also uploaded the model checkpoints (both large and base configurations) for NQ and TQA, as well as the intermediate outputs, to Google Drive: [drive link].

If you find this repository useful in your research, please cite our paper:

@inproceedings{ju2022grape,

title={GRAPE: Knowledge Graph Enhanced Passage Reader for Open-domain Question Answering},

author={Ju, Mingxuan and Yu, Wenhao and Zhao, Tong and Zhang, Chuxu and Ye, Yanfang},

booktitle={Findings of Empirical Methods in Natural Language Processing},

year={2022}

}

Our code is adapted from the Fusion-in-Decoder (FiD) repository [repo].

Mingxuan Ju ([email protected]), Wenhao Yu ([email protected])