GPyM_TM is a Python package for topic modelling with either the Dirichlet multinomial mixture model (GSDMM) [1] or the Gamma Poisson mixture model (GPM) [2]. Each model is available in its own class, named GSDMM and GPM respectively. The package is also available on PyPI.

The aim of topic modelling is to extract latent topics from large corpora. GSDMM [1] and GPM [2] assume that each document belongs to a single topic, which is a suitable assumption for some short texts. Given an initial number of topics, K, these algorithms cluster the documents and extract the topical structure present within the corpus. If K is set to a high value, the models also learn the number of clusters automatically.
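As a rough illustration of what "learning the number of clusters" means in practice: when K is larger than needed, many topics typically end up with no documents assigned to them, and the effective number of topics is the count of non-empty ones. The sketch below uses a made-up list of topic assignments (the values and variable names are assumptions for illustration, not output from the package) to show the idea.

```python
from collections import Counter

# Hypothetical final topic assignments for 8 documents, starting from K = 10 topics.
# These values are invented for illustration; they are not produced by GPyM_TM.
K = 10
assignments = [2, 2, 7, 2, 7, 4, 4, 2]

# Count how many documents ended up in each topic.
topic_sizes = Counter(assignments)

# Topics that received at least one document are the ones that survive.
selected_topics = sorted(topic_sizes)
final_k = len(selected_topics)

print(selected_topics)  # [2, 4, 7]
print(final_k)          # 3
```

Here only 3 of the 10 initial topics are still in use, so the number of clusters found is 3.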

Further details about the GPM can be found in my thesis here.

The package is available online for use within Python 3 environments.

The package can be installed with a standard pip command:

pip install GPyM-TM

The package has several dependencies. Of these, random, math and re are part of the Python standard library and need no separate installation:

- numpy

- random

- math

- pandas

- re

- nltk

- gensim

- scipy

For the Dirichlet multinomial mixture model, the class is named GSDMM and the function itself is named DMM.

The function takes up to six arguments: two are required and the remaining four are optional.

- corpus - a text file that has been cleaned and loaded into Python. That is, all text should be lowercase, and all punctuation and numbers should have been removed.

- nTopics - the number of topics.

- alpha, beta - the distribution-specific parameters. (The default for both is 0.1.)

- nTopWords - the number of top words per topic. (The default is 10.)

- iters - the number of Gibbs sampler iterations. (The default is 15.)
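The cleaning expected for the corpus argument (lowercase text with punctuation and numbers removed) can be sketched as follows. This is a minimal example, not the package's own preprocessing: the helper name `clean_document` is hypothetical, and representing the corpus as a list of strings is an assumption, since the exact container the package expects is not stated here.

```python
import re

def clean_document(text):
    """Lowercase the text and strip punctuation and digits (hypothetical helper)."""
    text = text.lower()
    # Keep only the letters a-z and whitespace; everything else becomes a space.
    text = re.sub(r"[^a-z\s]", " ", text)
    # Collapse the runs of whitespace left behind by the removals.
    return " ".join(text.split())

raw_docs = ["Topic models, in 2020, are FUN!", "Short texts: 1 topic each."]

# Assumption: the corpus is a list with one cleaned string per document.
corpus = [clean_document(doc) for doc in raw_docs]
print(corpus)
# ['topic models in are fun', 'short texts topic each']
```

Note that this pattern also discards accented and other non-ASCII letters, so it may need adjusting for corpora that are not in plain English.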

The function provides several components of output, namely:

- psi - topic x word matrix.

- theta - document x topic matrix.

- topics - the top words per topic.

- assignments - the topic numbers of selected topics only, as well as the final topic assignments.

- Final k - the final number of selected topics.

- coherence - the coherence score, which is a performance measure.

- selected_theta

- selected_psi

For the Gamma Poisson mixture model, the class is named GPM and the function itself is also named GPM.

The function takes up to eight arguments: two are required and the remaining six are optional.

- corpus - a text file that has been cleaned and loaded into Python. That is, all text should be lowercase, and all punctuation and numbers should have been removed.

- nTopics - the number of topics.

- alpha, beta and gam - the distribution-specific parameters. (The defaults are alpha = 0.001, beta = 0.001 and gam = 0.1.)

- nTopWords - the number of top words per topic. (The default is 10.)

- iters - the number of Gibbs sampler iterations. (The default is 15.)

- N - a parameter used to normalize the document lengths, which is required for the Poisson model.
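To give an intuition for what normalizing document lengths to N could look like, the sketch below rescales a document's word counts so that they sum to N. This is only one plausible reading of the parameter, written for illustration: the function name `normalise_length` is hypothetical, and the package may implement the normalization differently.

```python
def normalise_length(word_counts, N):
    """Rescale a document's word counts so that they sum to N (illustrative only)."""
    total = sum(word_counts.values())
    if total == 0:
        # An empty document has nothing to rescale.
        return dict(word_counts)
    return {word: count * N / total for word, count in word_counts.items()}

doc = {"topic": 3, "model": 1}        # a document of length 4
scaled = normalise_length(doc, N=8)   # rescaled to length 8
print(scaled)  # {'topic': 6.0, 'model': 2.0}
```

After rescaling, short and long documents contribute counts on the same scale, and the relative word frequencies within each document are unchanged.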

The function provides several components of output, namely:

- psi - topic x word matrix.

- theta - document x topic matrix.

- topics - the top words per topic.

- assignments - the topic numbers of selected topics only, as well as the final topic assignments.

- Final k - the final number of selected topics.

- coherence - the coherence score, which is a performance measure.

- selected_theta

- selected_psi

A more comprehensive tutorial is also available.

Run the following command in a terminal or command prompt:

pip install GPyM-TM

Import the classes into the relevant Python script with the following:

from GPyM_TM import GSDMM

from GPyM_TM import GPM

Call the functions, either with only the required arguments or with the optional arguments set explicitly:

data_DMM = GSDMM.DMM(corpus, nTopics)

data_DMM = GSDMM.DMM(corpus, nTopics, alpha = 0.25, beta = 0.15, nTopWords = 12, iters = 5)

data_GPM = GPM.GPM(corpus, nTopics)

data_GPM = GPM.GPM(corpus, nTopics, alpha = 0.002, beta = 0.03, gam = 0.06, nTopWords = 12, iters = 7, N = 8)

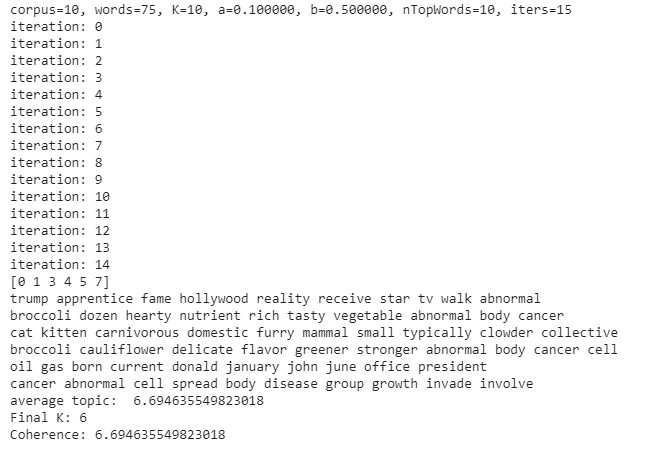

The output obtained for the Dirichlet multinomial mixture model appears as follows:

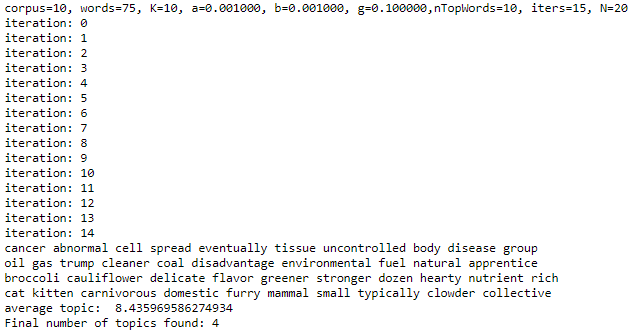

While, the output obtained for the Poisson model appears as follows:

Google Colab - Web framework

Python - Programming language of choice

PyPI - Distribution

I would like to extend a special thank you to my colleagues Alta de Waal and Ricardo Marques. None of this would have been possible without either of you.

Thank you!

This project is licensed under the MIT License - see the LICENSE file for details.