Python development AI assistant built on CodeLlama

- Install and run Ollama on your system.

- In your terminal, create the model from the Modelfile:

$ ollama create python-assistant -f Modelfile

- Run the Gradio UI via Docker:

$ docker compose up --build

OR install and run with Python 3.11 or higher:

$ pip install -r requirements.txt
$ python main.py

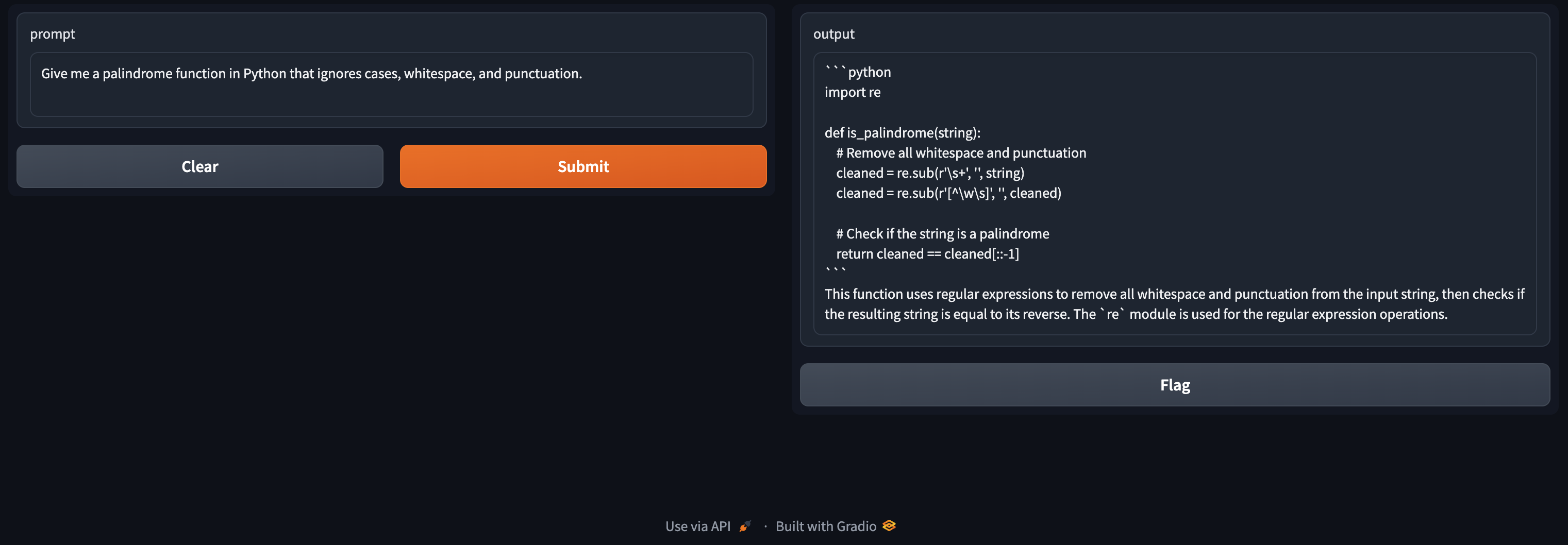

- Open the UI in your browser at http://localhost:7860/
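Once the model exists, the UI is essentially a front end to Ollama's local HTTP API, so the model can also be queried without the UI. The following is a minimal sketch, not the project's `main.py`, that sends one chat turn to the `python-assistant` model using only the Python standard library. It assumes Ollama is listening on its default address (`http://localhost:11434`) and uses the `/api/chat` endpoint with streaming turned off; the helper names `build_chat_payload`, `extract_reply`, and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if your install differs.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
# Name given to the model by `ollama create python-assistant -f Modelfile`.
MODEL_NAME = "python-assistant"


def build_chat_payload(history, user_message, model=MODEL_NAME):
    """Turn earlier (user, assistant) pairs plus a new message into an /api/chat body."""
    messages = []
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_message})
    # stream=False asks Ollama for one JSON object instead of a stream of chunks.
    return {"model": model, "messages": messages, "stream": False}


def extract_reply(response_body):
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    data = json.loads(response_body)
    return data["message"]["content"]


def ask(history, user_message, url=OLLAMA_CHAT_URL):
    """Send one chat turn to Ollama (HTTP POST) and return the assistant's reply."""
    payload = json.dumps(build_chat_payload(history, user_message)).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With Ollama running, `ask([], "Explain list comprehensions in one sentence.")` returns the model's reply as a string. Passing earlier `(user, assistant)` pairs as `history` resends the conversation on every turn, which is how the model keeps context across messages in a chat front end.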

These people inspired the system instructions in the Modelfile.

Special thanks go to Matthew Berman for this video on wrapping the Ollama API in a Gradio UI.