- [06/11]🔥SliME is coming! We release the paper, code, models, and data for SliME!

- [06/11]🔥SliME-70B will be released soon.

Please follow the instructions below to install the required packages.

- Clone this repository

git clone https://github.com/yfzhang114/SliME.git

- Install Package

conda create -n slime python=3.10 -y

conda activate slime

cd SliME

pip install --upgrade pip # enable PEP 660 support

pip install -e .

- Install additional packages for training cases

pip install -e ".[train]"

pip install ninja

pip install datasets

pip install flash-attn --no-build-isolation
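
After the install steps above, a quick import check can confirm that the environment is usable. The snippet below is a minimal sketch and not part of the repository: the helper `missing_modules` is hypothetical, and the import names `torch`, `datasets`, and `flash_attn` are assumptions about what the packages above expose.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed import names; `flash_attn` is the module name used by the flash-attn package.
    required = ["torch", "datasets", "flash_attn"]
    missing = missing_modules(required)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Run it inside the activated `slime` environment; any module it reports as missing points to an install step that did not complete.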

We provide all our fully finetuned models on Stage 1/2 and 3 data for SliME:

| Model | Base LLM | Vision Encoder | Finetuning Data | Finetuning schedule | Download |
|---|---|---|---|---|---|
| SliME-7B | Vicuna-7B-v1.5 | CLIP-L | SharedGPT+SMR | full_ft | ckpt |
| SliME-8B | Llama-3-8B-Instruct | CLIP-L | SharedGPT+SMR | full_ft | ckpt |
| SliME-13B | Vicuna-13B-v1.5 | CLIP-L | SharedGPT+SMR | full_ft | ckpt |
| SliME-70B | Llama-3-70B-Instruct | CLIP-L | SharedGPT+SMR | LoRA | ckpt |

Here are the pretrained weights on Stage 1/2 data only:

| Model | Base LLM | Vision Encoder | Pretrain Data | Finetuning schedule | Download |
|---|---|---|---|---|---|
| SliME-7B | Vicuna-7B-v1.5 | CLIP-L | LLaVA-Pretrain | 1e | ckpt |
| SliME-8B | Llama-3-8B-Instruct | CLIP-L | LLaVA-Pretrain | 1e | ckpt |
| SliME-13B | Vicuna-13B-v1.5 | CLIP-L | LLaVA-Pretrain | 1e | ckpt |
| SliME-70B | Llama-3-70B-Instruct | CLIP-L | LLaVA-Pretrain | 1e | ckpt |

Please follow LLaVA and ShareGPT4V to prepare the corresponding images and data. The additional datasets should be organized as follows:

data

├── arxivqa

│ └── images

├── DVQA

│ └── images

├── Geometry3K

│ └── 0-2400 dirs

├── ChartQA

│ └── train_images

├── GeoQA3

│ ├── image

│ └── json

├── mathvision

├── scienceqa

├── tabmwp

├── GeoQA3

│ ├── train

│ ├── test

│ └── val

├── ai2d

│ ├── abc_images

│ └── images

└── geoqa+

  └── images
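
Before training, it can help to verify that the layout above is in place. The following is a minimal sketch, not a script shipped with the repository: `EXPECTED_DIRS` and `missing_dirs` are hypothetical names, the root folder `data` is taken from the tree, and the `train`/`test`/`val` entries are left out because the tree lists them under a repeated `GeoQA3` name.

```python
from pathlib import Path

# Sub-directories copied from the tree above; adjust if your copy differs.
EXPECTED_DIRS = [
    "arxivqa/images",
    "DVQA/images",
    "Geometry3K",
    "ChartQA/train_images",
    "GeoQA3/image",
    "GeoQA3/json",
    "mathvision",
    "scienceqa",
    "tabmwp",
    "ai2d/abc_images",
    "ai2d/images",
    "geoqa+/images",
]


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # Assumes the script is run from the directory that contains `data`.
    for rel in missing_dirs("data"):
        print(f"missing: data/{rel}")
```

An empty output means every listed directory was found; it does not check that the images inside are complete.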

- Arxiv QA

Download images using this download url.

python playground/data/process_arxivqa.py

- DVQA

Download images using this url.

- ChartQA

Clone this repo and extract all the training images in ChartQA_Dataset/train/png into ChartQA.

- Geometry3K

Download images using this url.

The image path in our json file will be os.path.join(f'Geometry3K/{i}', 'img_diagram.png'), where i is the name of the sample directory (0-2400).

- GeoQA3

Download images using this url.

Extract all the training images into GeoQA3/image.

- MathVision

Download images using this url

Our data will not include the images from test-mini split automatically

- ScienceQA

wget https://scienceqa.s3.us-west-1.amazonaws.com/images/train.zip

wget https://scienceqa.s3.us-west-1.amazonaws.com/images/val.zip

wget https://scienceqa.s3.us-west-1.amazonaws.com/images/test.zip

unzip -q train.zip

unzip -q val.zip

unzip -q test.zip

rm train.zip

rm val.zip

rm test.zip

- Tabmwp

Download images using this url

- TextbookQA

Download images using this url

- AI2D

Download images using this url

- GeoQA+

Download images using this url
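
For Geometry3K, the image path pattern mentioned above can be sketched as follows. This is only an illustration: it assumes that `i` in the pattern stands for the name of a sample directory (the tree lists directories 0-2400), and the helper `geometry3k_image_path` is hypothetical, not a function from the repository.

```python
import os


def geometry3k_image_path(i):
    """Relative image path for sample directory `i`, following the pattern in the text."""
    return os.path.join(f"Geometry3K/{i}", "img_diagram.png")


if __name__ == "__main__":
    # Print the relative paths for the first three sample directories.
    for i in range(3):
        print(geometry3k_image_path(i))
```

The returned path is relative to the data root, so it should resolve once the Geometry3K folders are extracted there.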

SliME training consists of three stages: (1) training the global projector and attention adapter specifically; (2) training the local compression layer; and (3) training the full model.

SliME is trained on 8 A100 GPUs with 80GB memory. To train on fewer GPUs, reduce per_device_train_batch_size and increase gradient_accumulation_steps accordingly, so that the global batch size stays the same: per_device_train_batch_size x gradient_accumulation_steps x num_gpus.
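
The batch-size rule above can be worked through with a small calculation. The sketch below is illustrative only: the helper name is hypothetical and the numbers are made up, so read the actual per_device_train_batch_size and gradient_accumulation_steps from the training script you use.

```python
def gradient_accumulation_steps(global_batch_size, per_device_batch_size, num_gpus):
    """Accumulation steps needed so that
    per_device_batch_size * steps * num_gpus == global_batch_size."""
    samples_per_step = per_device_batch_size * num_gpus
    if global_batch_size % samples_per_step != 0:
        raise ValueError(
            "global batch size must be divisible by per_device_batch_size * num_gpus"
        )
    return global_batch_size // samples_per_step


if __name__ == "__main__":
    # Hypothetical reference setup: 16 per device x 1 accumulation step x 8 GPUs = 128.
    reference_global = 16 * 1 * 8
    # Moving to 4 GPUs with 8 samples per device needs 4 accumulation steps.
    print(gradient_accumulation_steps(reference_global, per_device_batch_size=8, num_gpus=4))
```

With these example numbers the script prints 4, since 8 x 4 x 4 = 128 matches the reference global batch size.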

Please make sure you download and organize the data following Preparation before training.

If you want to train and finetune SliME, please run the following command for SliME-7B with image size 336:

bash scripts/vicuna/vicuna_7b_pt.sh

bash scripts/vicuna/vicuna_7b_sft.sh

or for SliME-8B with image size 336:

bash scripts/llama/llama3_8b_pt.sh

bash scripts/llama/llama3_8b_sft.sh

Because we reuse the pre-trained projector weights from SliME-7B, you can directly use the sft command for stage-3 instruction tuning by changing the PROJECTOR_DIR:

bash scripts/llama/llama3_8b_sft.sh

Please find more training scripts in scripts/.

We perform evaluation on several image-based benchmarks. Please see Evaluation for the details.

If you want to evaluate the model on image-based benchmarks, please use the scripts in scripts/MODEL_PATH/eval.

For example, run the following command for TextVQA evaluation with SliME-7B:

bash scripts/llama/eval/textvqa.sh

Please find more evaluation scripts in scripts/MODEL_PATH.

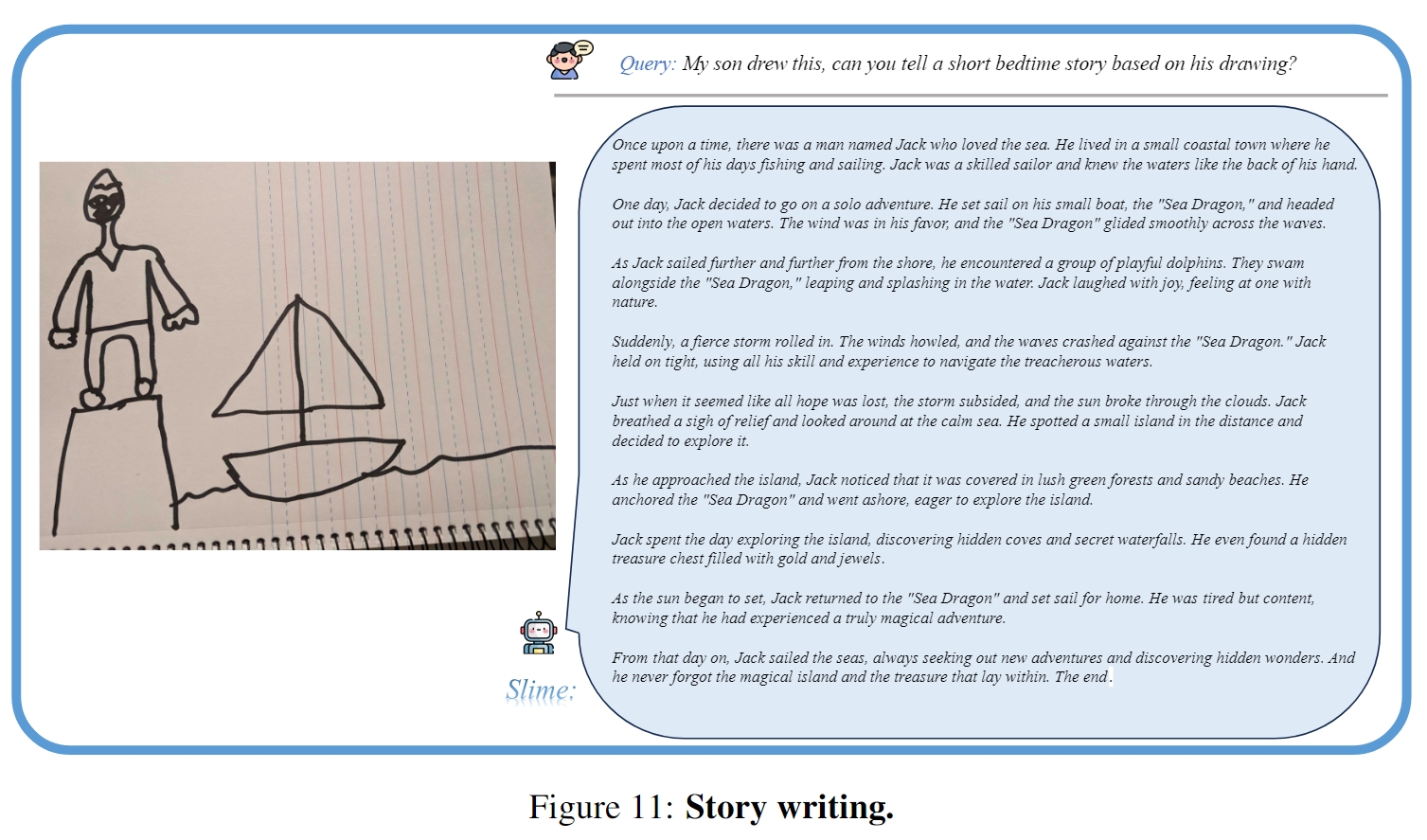

We provide some examples in this section. More examples can be found on our project page.

If you find this repo useful for your research, please consider citing the paper:

@misc{zhang2024llavahd,

title={Beyond LLaVA-HD: Diving into High-Resolution Large Multimodal Models},

author={Yi-Fan Zhang and Qingsong Wen and Chaoyou Fu and Xue Wang and Zhang Zhang and Liang Wang and Rong Jin},

year={2024},

eprint={2406.08487},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

We would like to thank the following repos for their great work:

The data and checkpoints are intended and licensed for research use only. They are also restricted to uses that follow the license agreements of LLaVA, LLaMA, Vicuna and GPT-4. The dataset is CC BY NC 4.0 (allowing only non-commercial use), and models trained using the dataset should not be used outside of research purposes.